Object-Oriented Virtual Sample Library: a Container of Multi-Dimensional Data for Acquisition, Visualization and Sharing

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

“Computer Programming IV” As Capstone Design and Laboratory Attachment Shoichi Yokoyama† Yamagata University, Yonezawa, Japan

Journal of Engineering Education Research Vol. 15, No. 5, pp. 31~35, September, 2012 “Computer Programming IV” as Capstone Design and Laboratory Attachment Shoichi Yokoyama† Yamagata University, Yonezawa, Japan ABSTRACT A new obligatory subject, Computer Programming IV, is organized in the Department of Informatics, Faculty of Engineering, Yamagata University. The purposes of the subject are as follows: (1) Attachment to each laboratory for bachelor thesis was usually at the initial stage of the student’s fourth academic year. This subject actually moves up the attachment because students are tentatively attached to a laboratory for this subject. The interval to complete their bachelor thesis is extended by half a year. (2) In each laboratory, students cooperate with each other to complete their project. The project becomes capstone design which JABEE (Japan Accreditation Board for Engineering Education) is recently emphasizing. We not only explain the introduction of this subject, but also report some case studies. Keywords: Engineering education, Capstone design, Laboratory attachment, Project, JABEE I. Introduction 1) third academic year, so that students first took the subject in 2009. The detailed plan created before this first use is The education program of the Department of Informatics, described in [2]. Faculty of Engineering, Yamagata University (YUDI) was The present paper describes the syllabus and proceeds accredited in 2003 by Japan Accreditation Board for to describe case studies of some laboratories. We explain Engineering Education (JABEE) [1], the second to be three years of results. accredited for information engineering. Through the in- Computer Programming IV has the following two purposes: termediate examination in 2005, the program was re- accredited in 2008 for the 2009 to 2014 period. -

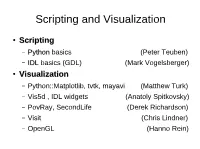

Scripting and Visualization

Scripting and Visualization ● ScriptingScripting – PythonPython basics (Peter Teuben) – IDLIDL basics (GDL) (Mark Vogelsberger) ● VisualizationVisualization – Python::Matplotlib, tvtk, mayavi (Matthew Turk) – Vis5d , IDL widgets (Anatoly Spitkovsky) – PovRay, SecondLife (Derek Richardson) – Visit (Chris Lindner) – OpenGL (Hanno Rein) S&V questions ● S: Is speed important? ● V: Specific for one type of data (point vs. grid, 1-2-3-dim?) ● V: Can it be driven by external programs (ds9, paraview) ● V: Can it be scripted? (partiview) ● V: Can it make animations? (glnemo2) ● V: Installation dependancies? Hard to install? Scripting (leaving out bash/tcsh) ● Scheme ((((((= a 1)))))) ● Perl $^_a! ● Tcl/Tk [set x [foo [bar]]] ● Python a.trim().split(':')[5] ● Ruby @a = (1,2,3) ● Cint struct B { float x[3], v[3];} ● IDL wh = where(r(delm) EQ 0, ct) ● Matlab t=r(:,1)+r(:,2)/60+r(:,3)/3600; PYTHONPYTHON http://www.python.org What's all the hype about? ● 1990 at UvA by Guido van Rossum ● Open Source, in C, portable Linux/Mac/Win/... ● Interpreted and dynamic scripting language ● Extensible and Object Oriented – Modules in python itself – Modules to any language (C, Fortran, ...) ● Many libraries have interfaces: gsl, fitsio, hdf5, pgplot,.... ● SciPy environment (numerical, plotting) The Language http://docs.python.org/tutorial ● Types: – Scalars: X=1 X=”1 2 3” – Lists: X=[1,2,3] X=range(1,4) – Tuples: X=(1,2,3,'-1','-2','-3') vx=float(X[3]) – Dictionary: X={“nbody”:10, “mode”:”euler”, “eps”:0.05} X[“eps”] ● Lots of builtin functions (and modules) -

The Graphics Cookbook SC/15.2 —Abstract Ii

SC/15.2 Starlink Project STARLINK Cookbook 15.2 A. Allan, D. Terrett 22nd August 2000 The Graphics Cookbook SC/15.2 —Abstract ii Abstract This cookbook is a collection of introductory material covering a wide range of topics dealing with data display, format conversion and presentation. Along with this material are pointers to more advanced documents dealing with the various packages, and hints and tips about how to deal with commonly occurring graphics problems. iii SC/15.2—Contents Contents 1 Introduction 3 2 Call for contributions 3 3 Subroutine Libraries 3 3.1 The PGPLOT library . 3 3.1.1 Encapsulated Postscript and PGPLOT . 5 3.1.2 PGPLOT Environment Variables . 5 3.1.3 PGPLOT Postscript Environment Variables . 5 3.1.4 Special characters inside PGPLOT text strings . 7 3.2 The BUTTON library . 7 3.3 The pgperl package . 11 3.3.1 Argument mapping – simple numbers and arrays . 12 3.3.2 Argument mapping – images and 2D arrays . 13 3.3.3 Argument mapping – function names . 14 3.3.4 Argument mapping – general handling of binary data . 14 3.4 Python PGPLOT . 14 3.5 GLISH PGPLOT . 15 3.6 ptcl Tk/Tcl and PGPLOT . 15 3.7 Starlink/Native PGPLOT . 15 3.8 Graphical Kernel System (GKS) . 16 3.8.1 Enquiring about the display . 16 3.8.2 Compiling and Linking GKS programs . 17 3.9 Simple Graphics System (SGS) . 17 3.10 PLplot Library . 17 3.10.1 PLplot and 3D Surface Plots . 18 3.11 The libjpeg Library . 19 3.12 The giflib Library . -

Documentation of the Plplot Plotting Software I

Documentation of the PLplot plotting software i Documentation of the PLplot plotting software Documentation of the PLplot plotting software ii Copyright © 1994 Maurice J. LeBrun, Geoffrey Furnish Copyright © 2000-2005 Rafael Laboissière Copyright © 2000-2016 Alan W. Irwin Copyright © 2001-2003 Joao Cardoso Copyright © 2004 Andrew Roach Copyright © 2004-2013 Andrew Ross Copyright © 2004-2016 Arjen Markus Copyright © 2005 Thomas J. Duck Copyright © 2005-2010 Hazen Babcock Copyright © 2008 Werner Smekal Copyright © 2008-2016 Jerry Bauck Copyright © 2009-2014 Hezekiah M. Carty Copyright © 2014-2015 Phil Rosenberg Copyright © 2015 Jim Dishaw Redistribution and use in source (XML DocBook) and “compiled” forms (HTML, PDF, PostScript, DVI, TeXinfo and so forth) with or without modification, are permitted provided that the following conditions are met: 1. Redistributions of source code (XML DocBook) must retain the above copyright notice, this list of conditions and the following disclaimer as the first lines of this file unmodified. 2. Redistributions in compiled form (transformed to other DTDs, converted to HTML, PDF, PostScript, and other formats) must reproduce the above copyright notice, this list of conditions and the following disclaimer in the documentation and/or other materials provided with the distribution. Important: THIS DOCUMENTATION IS PROVIDED BY THE PLPLOT PROJECT “AS IS” AND ANY EXPRESS OR IM- PLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE DISCLAIMED. IN NO EVENT SHALL THE PLPLOT PROJECT BE LIABLE FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY, OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE OF THIS DOCUMENTATION, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE. -

Integrating Existing Software Toolkits Into VO System.Chenzhou Cui

Please verify that (1) all pages are present, (2) all figures are acceptable, (3) all fonts and special characters are correct, and (4) all text and figures fit within the margin lines shown on this review document. Return to your MySPIE ToDo list and approve or disapprove this submission. Integrating Existing Software Toolkits into VO System Chenzhou Cui a, Yongheng Zhao a, Xiaoqian Wang a, Jian Sang a, Ze Luo b aNational Astronomical Observatories, CAS,20A Datun Road, Chaoyang District, Beijing 100012, China bComputer Network Information Center, CAS, 4 Nansijie, Zhongguancun, Haidian District, Beijing 100080, China ABSTRACT Virtual Observatory (VO) is a collection of interoperating data archives and software tools. Taking advantages of the latest information technologies, it aims to provide a data-intensively online research environment for astronomers all around the world. A large number of high-qualified astronomical software packages and libraries are powerful and easy of use, and have been widely used by astronomers for many years. Integrating those toolkits into the VO system is a necessary and important task for the VO developers. VO architecture greatly depends on Grid and Web services, consequently the general VO integration route is "Java Ready – Grid Ready – VO Ready". In the paper, we discuss the importance of VO integration for existing toolkits and discuss the possible solutions. We introduce two efforts in the field from China-VO project, "gImageMagick" and " Galactic abundance gradients statistical research under grid environment". We also discuss what additional work should be done to convert Grid service to VO service. Keywords: Existing Software, Integration, Virtual Observatory, Grid 1. -

Documentation of the Plplot Plotting Software I

Documentation of the PLplot plotting software i Documentation of the PLplot plotting software Documentation of the PLplot plotting software ii Copyright © 1994 Maurice J. LeBrun, Geoffrey Furnish Copyright © 2000-2005 Rafael Laboissière Copyright © 2000-2016 Alan W. Irwin Copyright © 2001-2003 Joao Cardoso Copyright © 2004 Andrew Roach Copyright © 2004-2013 Andrew Ross Copyright © 2004-2016 Arjen Markus Copyright © 2005 Thomas J. Duck Copyright © 2005-2010 Hazen Babcock Copyright © 2008 Werner Smekal Copyright © 2008-2016 Jerry Bauck Copyright © 2009-2014 Hezekiah M. Carty Copyright © 2014-2015 Phil Rosenberg Copyright © 2015 Jim Dishaw Redistribution and use in source (XML DocBook) and “compiled” forms (HTML, PDF, PostScript, DVI, TeXinfo and so forth) with or without modification, are permitted provided that the following conditions are met: 1. Redistributions of source code (XML DocBook) must retain the above copyright notice, this list of conditions and the following disclaimer as the first lines of this file unmodified. 2. Redistributions in compiled form (transformed to other DTDs, converted to HTML, PDF, PostScript, and other formats) must reproduce the above copyright notice, this list of conditions and the following disclaimer in the documentation and/or other materials provided with the distribution. Important: THIS DOCUMENTATION IS PROVIDED BY THE PLPLOT PROJECT “AS IS” AND ANY EXPRESS OR IM- PLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE DISCLAIMED. IN NO EVENT SHALL THE PLPLOT PROJECT BE LIABLE FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY, OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE OF THIS DOCUMENTATION, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE. -

User and Programmers Guide to the Neutron Ray-Tracing Package Mcstas, Version 1.12

Risø–R–1416(rev.ed.)(EN) User and Programmers Guide to the Neutron Ray-Tracing Package McStas, Version 1.12 Peter Willendrup, Erik Knudsen, Emmanuel Farhi and Kim Lefmann Risø DTU, Roskilde, Denmark May 2009 Abstract The software package McStas is a tool for carrying out Monte Carlo ray-tracing simulations of neutron scattering instruments with high complexity and precision. The simulations can compute all aspects of the performance of instruments and can thus be used to optimize the use of existing equipment, design new instrumentation, and carry out virtual experiments for e.g. training, experimental planning or data analysis. McStas is based on a unique design where an automatic compilation process translates high-level textual instrument descriptions into efficient ANSI-C code. This design makes it simple to set up typical simulations and also gives essentially unlimited freedom to handle more unusual cases. This report constitutes the reference manual for McStas, and, together with the manual for the McStas components, it contains full documentation of all aspects of the program. It covers the various ways to compile and run simulations, a descrip- tion of the meta-language used to define simulations, and some example simulations performed with the program. This report documents McStas version 1.12, released May, 2008 The authors are: Peter Kjær Willendrup <[email protected]> Materials Research Department, Risø DTU, Roskilde, Denmark Emmanuel Farhi <[email protected]> Institut Laue-Langevin, Grenoble, France Erik Knudsen <[email protected]> -

Summary of Image and Plotting Software (IPS) Packages Collected

Summary of Image and Plotting Software (IPS) packages collected for possible use with GLAST Science Analysis Tools For the Science Analysis Tools, we will need the capability to make plots, and display images on the screen. It would not be an efficient use of our manpower to write a custom set of plotting tools if we can find a package available that satisfies our needs. To this end the User Interface committee has settled on a list of basic requirements for science analysis graphics and have begun to look at some packages. The packages and their characteristics are contained in table 1 below. It seems quite clear that the choice of package is intimately related to the scope of what we want to do. For example if our tools only have to put up plots and images with minimal interactive analysis, then the plotting packages (the first group in table 1) are desirable. If we want to have more complicated interactions with the user and more extensive image manipulation (rotatable images, e.g.), then something more like a graphic toolkit would be desirable. If we decide to go the latter route and use a tool like Qt, then we need programmers to start working immediately to create the basic plotting classes and methods to ensure this does not hold up development of the science tools. The basic requirements have already reduced the number of packages to < 20, but we need to better define the requirements in greater detail to narrow it down to two or three packages. Basic requirements (IPS= Image and Plotting Software): 1) The IPS must be freely available for use, modification, and re-distribution. -

2 Game Programming with Python, Lua, and Ruby by Tom Gutschmidt

Game Programming with Python, Lua, and Ruby By Tom Gutschmidt Publisher: Premier Press Pub Date: 2003 ISBN: 1-59200-079-7 Pages: 472 LRN Dedication Acknowledgments About the Author Letter from the Series Editor Introduction What's in This Book? Why Learn Another Language? What's on the CD-ROM? Part ONE: Introducing High-Level Languages Chapter 1. High-Level Language Overview High-Level Language Roots How Programming Languages Work Low-Level Languages Today's High-Level Languages The Pros of High-Level Languages Cons of High-Level Languages A Brief History of Structured Programming Introducing Python Introducing Lua Introducing Ruby Summary Questions and Answers Exercises Chapter 2. Python, Lua, and Ruby Language Features Syntactical Similarities of Python, Lua, and Ruby Hello World Samples Summary Questions and Answers Exercises Part TWO: Programming with Python Chapter 3. Getting Started with Python Python Executables Python Debuggers Python Language Structure Creating a Simple User Interface in Python A Simple GUI with Tkinter Memory, Performance, and Speed Summary Questions and Answers 2 Exercises Chapter 4. Getting Specific with Python Games The Pygame Library Python Graphics Sound in Python Networking in Python Putting It All Together Summary Questions and Answers Exercises Chapter 5. The Python Game Community Engines Graphics Commercial Games Beyond Python Summary Question and Answer Exercises Part THREE: Programming with Lua Chapter 6. Programming with Lua Lua Executables and Debuggers Language Structure Memory, Performance, and Speed Summary Questions and Answers Exercises Chapter 7. Getting Specific with Games in Lua LuaSDL Gravity: A Lua SDL Game The Lua C API Summary Questions and Answers Exercises Chapter 8. -

The Plplot Plotting Library

The PLplot Plotting Library Programmer’s Reference Manual Maurice J. LeBrun Geoff Furnish University of Texas at Austin Institute for Fusion Studies The PLplot Plotting Library: Programmer’s Reference Manual by Maurice J. LeBrun and Geoff Furnish Copyright ľ 1994 Geoffrey Furnish, Maurice LeBrun Copyright ľ 1999, 2000, 2001, 2002, 2003, 2004 Alan W. Irwin, Rafael Laboissière Copyright ľ 2003 Joao Cardoso Redistribution and use in source (XML DocBook) and “compiled” forms (HTML, PDF, PostScript, DVI, TeXinfo and so forth) with or without modification, are permitted provided that the following conditions are met: 1. Redistributions of source code (XML DocBook) must retain the above copyright notice, this list of conditions and the following disclaimer as the first lines of this file unmodified. 2. Redistributions in compiled form (transformed to other DTDs, converted to HTML, PDF, PostScript, and other formats) must reproduce the above copyright notice, this list of conditions and the following disclaimer in the documentation and/or other materials provided with the distribution. Important: THIS DOCUMENTATION IS PROVIDED BY THE PLPLOT PROJECT “AS IS” AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE DISCLAIMED. IN NO EVENT SHALL THE PLPLOT PROJECT BE LIABLE FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY, OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE OF THIS DOCUMENTATION, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE. -

PGPLOT Graphics Subroutine Library

PGPLOT Graphics Subroutine Library T. J. Pearson Copyright © 1988-1997 by California Institute of Technology Contents ● Acknowledgments ● Chapter 1 Introduction ❍ 1.1 PGPLOT ❍ 1.2 This Manual ❍ 1.3 Using PGPLOT ❍ 1.4 Graphics Devices ❍ 1.5 Environment Variables ● Chapter 2 Simple Use of PGPLOT ❍ 2.1 Introduction ❍ 2.2 An Example ❍ 2.3 Data Initialization ❍ 2.4 Starting PGPLOT ❍ 2.5 Defining Plot Scales and Drawing Axes ❍ 2.6 Labeling the Axes ❍ 2.7 Drawing Graph Markers ❍ 2.8 Drawing Lines ❍ 2.9 Ending the Plot ❍ 2.10 Compiling and Running the Program ❍ Figure 2.1: Output of Example Program ● Chapter 3 Windows and Viewports ❍ 3.1 Introduction ❍ 3.2 Selecting a View Surface ❍ 3.3 Defining the Viewport ❍ 3.4 Defining the Window ❍ 3.5 Annotating the Viewport ❍ 3.6 Routine PGENV ● Chapter 4 Primitives ❍ 4.1 Introduction ❍ 4.2 Clipping ❍ 4.3 Lines ❍ 4.4 Graph Markers ❍ 4.5 Text ❍ 4.6 Area Fill: Polygons, Rectangles, and Circles ❍ Figure 4.1: PGPLOT standard graph markers ❍ Figure 4.2: Text Examples ❍ Figure 4.3: Escape Sequences for Greek Letters ● Chapter 5 Attributes ❍ 5.1 Introduction ❍ 5.2 Color Index ❍ 5.3 Color Representation ❍ 5.4 Line Style ❍ 5.5 Line Width ❍ 5.6 Character Height ❍ 5.6 Character Font ❍ 5.7 Text Background ❍ 5.8 Fill-Area Style ❍ 5.9 The Inquiry Routines ❍ 5.10 Saving and Restoring Attributes ❍ Figure 5.1: Default color representations of color indices 0-15 ❍ Figure 5.2: Fill-area styles ● Chapter 6 Higher-Level Routines ❍ Introduction ❍ XY-plots ❍ Histograms ❍ Functions of two variables ● Chapter 7 Interactive Graphics -

PDL — Scientific Programming in Perl

PDL | Scientific Programming in Perl Karl Glazebrook Christian S¨oller Tuomas J. Lukka Marc Lehmann Jarle Brinchmann Doug Hunt John Cerney Robin Williams Tim Pickering August 12, 2001 Contents 0 PDL | Year Zero 5 0.1 Who this book is for. 6 0.2 The case for a high-level approach. 7 0.3 The case for a free Data Language. 8 0.4 So why Perl? . 9 0.5 What this book is about. 11 0.6 What this book is NOT about. 11 0.7 Conventions used in this book . 12 1 A Whirlwind tour through PDL 13 1.1 Alright let's do something. 13 1.2 Whirling through the Whirlpool . 16 1.2.1 Measuring the brightness of M51 . 20 1.2.2 Twinkle, twinkle, little star. 21 1.2.3 Getting Complex with M51 . 28 1.3 Roundoff . 29 2 The Basics of PDL 31 2.1 Basic manipulations of piddles . 31 2.1.1 What is a piddle? . 31 2.1.2 Creating piddles . 32 2.1.3 Elementary arithmetic. 33 2.1.4 In-place arithmetic . 34 2.1.5 Using = and .= . 34 2.2 Piddles are NOT Perl `arrays' . 35 1 CONTENTS 2 2.2.1 The benefits of piddles. 37 2.2.2 Lists and Hashes of piddles . 37 2.3 PDL Datatypes . 38 2.3.1 Type conversion and creation . 39 2.3.2 Complex types . 39 2.4 PDL projection operators . 40 2.4.1 What about projecting along other dimensions? . 41 2.5 `Threading' over extra dimensions. 41 2.6 Example Problem .