3 Atomic Image Examples 11 3.1 Setting up Fedora Atomic Cluster with Kubernetes and Vagrant

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Abstract Introduction Methodology

Kajetan Hinner (2000): Statistics of major IRC networks: methods and summary of user count. M/C: A Journal of Media and Culture 3(4). <originally: http://www.api-network.com/mc/0008/count.html> now: http://www.media-culture.org.au/0008/count.html - Actual figures and updates: www.hinner.com/ircstat/ Abstract The article explains a successful approach to monitoring the major worldwide Internet Relay Chat (IRC) networks. It introduces a new research tool capable of producing detailed and accurate statistics of IRC networks' user counts. Several obsolete methods are discussed before the still-running Socip.perl program is explained. Finally, some IRC statistics are described, such as the development of user figures, their maximum count, and IRC channel figures. Introduction Internet Relay Chat (IRC) is a text-based service where people can meet online and chat. All chat is organized in channels with a specific topic, like #usa or #linux. A user can take part in several channels while connected to an IRC network. For a long time the only IRC network was EFnet (Eris-Free Network, named after its server eris.berkeley.edu), founded in 1990. The other three major IRC networks are Undernet (1993), DALnet (1994) and IRCnet, which split off from EFnet in June 1996. All persons connected to an IRC network at one time make up that IRC network's user space. People are constantly signing on and off; the total number of users who have ever been on a specific IRC network could be called the social space of that IRC network. Obviously, an IRC network's social space by far outnumbers its user space.

IRC Channel Data Analysis Using Apache Solr Nikhil Reddy Boreddy Purdue University

Purdue University Purdue e-Pubs Open Access Theses Theses and Dissertations Spring 2015 IRC channel data analysis using Apache Solr Nikhil Reddy Boreddy Purdue University Follow this and additional works at: https://docs.lib.purdue.edu/open_access_theses Part of the Communication Technology and New Media Commons Recommended Citation Boreddy, Nikhil Reddy, "IRC channel data analysis using Apache Solr" (2015). Open Access Theses. 551. https://docs.lib.purdue.edu/open_access_theses/551 This document has been made available through Purdue e-Pubs, a service of the Purdue University Libraries. Please contact [email protected] for additional information. Graduate School Form 30 Updated 1/15/2015 PURDUE UNIVERSITY GRADUATE SCHOOL Thesis/Dissertation Acceptance This is to certify that the thesis/dissertation prepared By Nikhil Reddy Boreddy Entitled IRC CHANNEL DATA ANALYSIS USING APACHE SOLR For the degree of Master of Science Is approved by the final examining committee: Dr. Marcus Rogers Chair Dr. John Springer Dr. Eric Matson To the best of my knowledge and as understood by the student in the Thesis/Dissertation Agreement, Publication Delay, and Certification Disclaimer (Graduate School Form 32), this thesis/dissertation adheres to the provisions of Purdue University’s “Policy of Integrity in Research” and the use of copyright material. Approved by Major Professor(s): Dr. Marcus Rogers Approved by: Dr. Jeffery L Whitten 3/13/2015 Head of the Departmental Graduate Program Date IRC CHANNEL DATA ANALYSIS USING APACHE SOLR A Thesis Submitted to the Faculty of Purdue University by Nikhil Reddy Boreddy In Partial Fulfillment of the Requirements for the Degree of Master of Science May 2015 Purdue University West Lafayette, Indiana ii To my parents Bhaskar and Fatima: for pushing me to get my Masters before I join the corporate world! To my committee chair Dr. -

Funsociety-Ircclient Documentation Release 2.1

funsociety-ircclient Documentation Release 2.1 Dave Richer Jun 30, 2019 Contents: 1 More detailed docs: 1.1 API; Index. CHAPTER 1 More detailed docs: 1.1 API This library provides IRC client functionality. 1.1.1 Client irc.Client(server, nick[, options]) This object is the base of everything; it represents a single nick connected to a single IRC server. The first two arguments are the server to connect to and the nickname to attempt to use. The third, optional argument is an options object with default values: { userName: 'MrNodeBot', realName: 'MrNodeBot IRC Bot', socket: false, port: 6667, localAddress: null, localPort: null, debug: false, showErrors: false, autoRejoin: false, autoConnect: true, channels: [], secure: false, selfSigned: false, certExpired: false, floodProtection: false, floodProtectionDelay: 1000, sasl: false, retryCount: 0, retryDelay: 2000, stripColors: false, channelPrefixes: "&#", messageSplit: 512, encoding: '' } secure (SSL connection) can be a true value or an object (the kind of object returned from crypto.createCredentials()) specifying the cert etc. for validation. If you set selfSigned to true, SSL accepts certificates from a non-trusted CA. If you set certExpired to true, the bot connects even if the SSL cert has expired. Set socket to true to interpret server as a path to a UNIX domain socket. localAddress is the local address to bind to when connecting, such as 127.0.0.1. localPort is the local port to bind to when connecting, such as 50555. floodProtection queues all your messages and slowly unpacks them to make sure that we won't get kicked for Excess Flood.

Table of Contents

Table of Contents: Foreword; Part I Welcome to mIRC; Part II Connect to a Server (1 Connection Issues); Part III Join a Channel (1 Channels List); Part IV Chat Privately; Part V Send and Receive Files (1 Accepting Files); Part VI Change Colors (1 Control Codes); Part VII Options (1 Connect: Servers, Options, Identd, Proxy)

IRC: the Internet Relay Chat

IRC: The Internet Relay Chat Tehran Linux Users Group Overview ● What is IRC? ● A little bit of “How it all began?” ● Yet another Client/Server model ● RFC-1459 ● The culture itself ● IRC Implementations ● IRC HOW-TO ● FreeNode ● Resources What is IRC? ● Internet Relay Chat (IRC) is a form of real-time Internet chat or synchronous conferencing. It is mainly designed for group (many-to-many) communication in discussion forums called channels, but also allows one-to-one communication and data transfers via private message. ● IRC was created by Jarkko "WiZ" Oikarinen in late August 1988 to replace a program called MUT (MultiUser Talk) on a BBS called OuluBox in Finland. Oikarinen found inspiration in a chat system known as Bitnet Relay, which operated on the BITNET. ● IRC gained prominence when it was used to report on the Soviet coup attempt of 1991 throughout a media blackout. It was previously used in a similar fashion by Kuwaitis during the Iraqi invasion. Relevant logs are available from the ibiblio archive. ● IRC client software is available for virtually every computer operating system. A little bit of “How it all began?” ● While working at Finland's University of Oulu in August 1988, Oikarinen wrote the first IRC server and client programs, which he produced to replace the MUT (MultiUser Talk) program on the Finnish BBS OuluBox. Using the Bitnet Relay chat system as inspiration, Oikarinen continued to develop IRC over the next four years, receiving assistance from Darren Reed in co-authoring the IRC Protocol. In 1997, his development of IRC earned Oikarinen a Dvorak Award for Personal Achievement (Outstanding Global Interactive Personal Communications System); in 2005, the Millennium Technology Prize Foundation, a Finnish public-private partnership, honored him with one of three Special Recognition Awards.

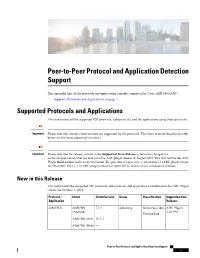

Peer-To-Peer Protocol and Application Detection Support

Peer-to-Peer Protocol and Application Detection Support This appendix lists all the protocols and applications currently supported by Cisco ASR 5500 ADC. • Supported Protocols and Applications, on page 1 Supported Protocols and Applications This section lists all the supported P2P protocols, sub-protocols, and the applications using these protocols. Important Please note that various client versions are supported for the protocols. The client versions listed in the table below are the latest supported version(s). Important Please note that the release version in the Supported from Release column has changed for protocols/applications that are new since the ADC plugin release in August 2015. This will now be the ADC Plugin Build number in the x.xxx.xxx format. The previous releases were versioned as 1.1 (ADC plugin release for December 2012 ), 1.2 (ADC plugin release for April 2013), and so on for consecutive releases. New in this Release This section lists the supported P2P protocols, sub-protocols and applications introduced in the ADC Plugin release for October 1, 2018. Protocol / Client Client Version Group Classification Supported from Application Release ABSCBN ABSCBN 7.1.2 Streaming Streaming-video ADC Plugin (Android) 2.28.990 Unclassified ABSCBN (iOS) 10.3.3 ABSCBN (Web) — Peer-to-Peer Protocol and Application Detection Support 1 Peer-to-Peer Protocol and Application Detection Support New in this Release Protocol / Client Client Version Group Classification Supported from Application Release Smashcast Smashcast 1.0.13 Streaming -

Linux IRC Mini-HOWTO

Linux IRC mini-HOWTO Frédéric L. W. Meunier v0.4 7 January, 2005 Revision History Revision 0.4 2005-01-07 Revised by: fredlwm Fifth revision. This document aims to describe the basics of IRC and respective applications for Linux. Table of Contents 1. Introduction 1.1. Objectives 1.2. Miscellaneous 1.3. Translations 2. About IRC 3. Brief History of IRC 4. Beginner's guide on using IRC 4.1. Running the ircII program 4.2. Commands

Руководство по IRC (IRC Guide)

IRC Guide, Release 1. Dmitry, 11 November 2015. Table of Contents: 1 A Brief Introduction to IRC and KVIrc; 1.1 IRC (Internet Relay Chat); 1.2 The KVIrc IRC Client; 1.3 Working with KVIrc; 1.4 The KVIrc Interface; 2 List of Sources Used. CHAPTER 1 A Brief Introduction to IRC and KVIrc Contents • A Brief Introduction to IRC and KVIrc – IRC (Internet Relay Chat) – The KVIrc IRC Client ∗ Main features of KVIrc – Working with KVIrc ∗ Obtaining ∗ Installation ∗ First launch and initial setup of KVIrc ∗ Selecting, configuring, and connecting to servers (networks) – The KVIrc Interface ∗ Messages ∗ Commands ∗ Working with the input line ∗ User information ∗ Joining channels ∗ Logs of channels and private conversations ∗ Tooltips 1.1 IRC (Internet Relay Chat) IRC (Internet Relay Chat) is an application-layer protocol for real-time messaging. It is designed mainly for group communication, and also allows communication through private messages and the exchange of data, including files. According to the protocol specifications, an IRC network is a group of servers connected to one another. The simplest network is a single server. The network must have the form of a connected tree, in which each server acts as a central node for the rest of the network. A client is anything connected to a server other than another server. Note: More about IRC can be found in the following articles: • Wikipedia: IRC • IrcNet.ru: About IRC • Dreamterra: What IRC is, an introduction to the concept of IRC chats 1.2 The KVIrc IRC Client KVIrc is a free, open-source IRC client. The client is available for the GNU/Linux, Windows, and Mac OS X operating systems.

While IRC Is Easy to Get Into and Many People Are Happy to Use It Without

IRC Hacks By Paul Mutton Publisher: O'Reilly Pub Date: July 2004 ISBN: 0-596-00687-X Pages: 432 While IRC is easy to get into and many people are happy to use it without being aware of what's happening under the hood, there are those who hunger for more knowledge, and this book is for them. IRC Hacks is a collection of tips and tools that cover just about everything needed to become a true IRC master, featuring contributions from some of the most renowned IRC hackers, many of whom collaborated on IRC, grouping together to form the channel #irchacks on the freenode IRC network (irc.freenode.net). Copyright Foreword Credits About the Author Contributors Acknowledgments Preface Why IRC Hacks? How to Use This Book How This Book Is Organized Conventions Used in this Book Using Code Examples How to Contact Us Got a Hack? Chapter 1. Connecting to IRC Introduction: Hacks #1-4 Hack 1. IRC from Windows Hack 2. IRC from Linux Hack 3. IRC from Mac OS X Hack 4. IRC with ChatZilla Chapter 2. Using IRC Introduction: Hacks #5-11 Hack 5. The IRC Model Hack 6. Common Terms, Abbreviations, and Phrases Hack 7.

Way of the Ferret: Finding and Using Educational Resources on The

DOCUMENT RESUME ED 417 711 IR 018 778 AUTHOR: Harris, Judi. TITLE: Way of the Ferret: Finding and Using Educational Resources on the Internet. Second Edition. INSTITUTION: International Society for Technology in Education, Eugene, OR. ISBN: ISBN-1-56484-085-9. PUB DATE: 1995-00-00. NOTE: 291p. AVAILABLE FROM: International Society for Technology in Education, Customer Service Office, 480 Charnelton Street, Eugene, OR 97401-2626; phone: 800-336-5191; World Wide Web: http://isteonline.uoregon.edu (members: $29.95, nonmembers: $26.95). PUB TYPE: Books (010) -- Guides - Non-Classroom (055). EDRS PRICE: MF01/PC12 Plus Postage. DESCRIPTORS: *Computer Assisted Instruction; Computer Mediated Communication; *Educational Resources; Educational Technology; Electronic Mail; Information Sources; Instructional Materials; *Internet; Learning Activities; Telecommunications; Teleconferencing. IDENTIFIERS: Electronic Resources; Listservs. ABSTRACT: This book is designed to assist educators' exploration of the Internet and educational resources available online. An overview lists the five basic types of information exchange possible on the Internet, and outlines five corresponding telecomputing options. The book contains an overview and four sections. The chapters in Section One, "Information Resources," focus on: (1) Gopher Tools; (2) The World Wide Web; (3) Interactive Telnet Sessions; (4) File Transfers on the Internet; and (5) Receiving and Uncompressing Files. Section Two, "Interpersonal Resources," contains chapters: (6) Electronic Mail Services; (7) Internet-Based Discussion Groups; and

CIS 76 Ethical Hacking

CIS 76 - Lesson 8 Last updated 10/24/2017 Rich's lesson module checklist Slides and lab posted WB converted from PowerPoint Print out agenda slide and annotate page numbers Flash cards Properties Page numbers 1st minute quiz Web Calendar summary Web book pages Commands Bot and other samples programs added to depot directory Lab 7 posted and tested Backup slides, whiteboard slides, CCC info, handouts on flash drive Spare 9v battery for mic Key card for classroom door Update CCC Confer and 3C Media portals 1 CIS 76 - Lesson 8 Evading Network TCP/IP Devices Cryptography Network and Computer Attacks Footprinting and Hacking Wireless CIS 76 Networks Social Engineering Ethical Hacking Hacking Web Servers Port Scanning Embedded Operating Enumeration Systems Desktop and Server Scripting and Vulnerabilities Programming Student Learner Outcomes 1.Defend a computer and a LAN against a variety of different types of security attacks using a number of hands-on techniques. 2.Defend a computer and a LAN against a variety of different types of security attacks using a number of hands-on techniques. 2 CIS 76 - Lesson 8 Introductions and Credits Rich Simms • HP Alumnus. • Started teaching in 2008 when Jim Griffin went on sabbatical. • Rich’s site: http://simms-teach.com And thanks to: • Steven Bolt at for his WASTC EH training. • Kevin Vaccaro for his CSSIA EH training and Netlab+ pods. • EC-Council for their online self-paced CEH v9 course. • Sam Bowne for his WASTC seminars, textbook recommendation and fantastic EH website (https://samsclass.info/). • Lisa Bock for her great lynda.com EH course. -

Fedora 10 Release Notes

Fedora 10 Release Notes Fedora Documentation Project Copyright © 2007, 2008 Red Hat, Inc. and others. The text of and illustrations in this document are licensed by Red Hat under a Creative Commons Attribution–Share Alike 3.0 Unported license ("CC-BY-SA"). An explanation of CC-BY-SA is available at http://creativecommons.org/licenses/by-sa/3.0/. The original authors of this document, and Red Hat, designate the Fedora Project as the "Attribution Party" for purposes of CC-BY-SA. In accordance with CC-BY-SA, if you distribute this document or an adaptation of it, you must provide the URL for the original version. Red Hat, as the licensor of this document, waives the right to enforce, and agrees not to assert, Section 4d of CC-BY-SA to the fullest extent permitted by applicable law. Red Hat, Red Hat Enterprise Linux, the Shadowman logo, JBoss, MetaMatrix, Fedora, the Infinity Logo, and RHCE are trademarks of Red Hat, Inc., registered in the United States and other countries. For guidelines on the permitted uses of the Fedora trademarks, refer to https://fedoraproject.org/wiki/Legal:Trademark_guidelines. Linux® is the registered trademark of Linus Torvalds in the United States and other countries. Java® is a registered trademark of Oracle and/or its affiliates. XFS® is a trademark of Silicon Graphics International Corp. or its subsidiaries in the United States and/or other countries. All other trademarks are the property of their respective owners. Abstract Important information about this release of Fedora 1. Welcome to Fedora 10 1.1.