32 Principles and Practices to Successfully Transition To

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Freebsd-And-Git.Pdf

FreeBSD and Git Ed Maste - FreeBSD Vendor Summit 2018 Purpose ● History and Context - ensure we’re starting from the same reference ● Identify next steps for more effective use / integration with Git / GitHub ● Understand what needs to be resolved for any future decision on Git as the primary repository Version control history ● CVS ○ 1993-2012 ● Subversion ○ src/ May 31 2008, r179447 ○ doc/www May 19, 2012 r38821 ○ ports July 14, 2012 r300894 ● Perforce ○ 2001-2018 ● Hg Mirror ● Git Mirror ○ 2011- Subversion Repositories svnsync repo svn Subversion & Git Repositories today svn2git git push svnsync git svn repo svn git github Repositories Today fork repo / Freebsd Downstream svn github github Repositories Today fork repo / Freebsd Downstream svn github github “Git is not a Version Control System” phk@ missive, reproduced at https://blog.feld.me/posts/2018/01/git-is-not-revision-control/ Subversion vs. Git: Myths and Facts https://svnvsgit.com/ “Git has a number of advantages in the popularity race, none of which are really to do with the technology” https://chapmanworld.com/2018/08/25/why-im-now-using-both-git-and-subversion-for-one-project/ 10 things I hate about Git https://stevebennett.me/2012/02/24/10-things-i-hate-about-git Git popularity Nobody uses Subversion anymore False. A myth. Despite all the marketing buzz related to Git, such notable open source projects as FreeBSD and LLVM continue to use Subversion as the main version control system. About 47% of other open source projects use Subversion too (while only 38% are on Git). (2016) https://svnvsgit.com/ Git popularity (2018) Git UI/UX Yes, it’s a mess. -

Common Tools for Team Collaboration Problem: Working with a Team (Especially Remotely) Can Be Difficult

Common Tools for Team Collaboration Problem: Working with a team (especially remotely) can be difficult. ▹ Team members might have a different idea for the project ▹ Two or more team members could end up doing the same work ▹ Or a few team members have nothing to do Solutions: A combination of a few tools. ▹ Communication channels ▹ Wikis ▹ Task manager ▹ Version Control ■ We’ll be going in depth with this one! Important! The tools are only as good as your team uses them. Make sure all of your team members agree on what tools to use, and train them thoroughly! Communication Channels Purpose: Communication channels provide a way to have team members remotely communicate with one another. Ideally, the channel will attempt to emulate, as closely as possible, what communication would be like if all of your team members were in the same office. Wait, why not email? ▹ No voice support ■ Text alone is not a sufficient form of communication ▹ Too slow, no obvious support for notifications ▹ Lack of flexibility in grouping people Tools: ▹ Discord ■ discordapp.com ▹ Slack ■ slack.com ▹ Riot.im ■ about.riot.im Discord: Originally used for voice-chat for gaming, Discord provides: ▹ Voice & video conferencing ▹ Text communication, separated by channels ▹ File-sharing ▹ Private communications ▹ A mobile, web, and desktop app Slack: A business-oriented text communication that also supports: ▹ Everything Discord does, plus... ▹ Threaded conversations Riot.im: A self-hosted, open-source alternative to Slack Wikis Purpose: Professionally used as a collaborative game design document, a wiki is a synchronized documentation tool that retains a thorough history of changes that occurred on each page. -

Protect Yourself and Your Personal Information*

CYBER SAFETY Protect yourself and your personal information * Cybercrime is a growing and serious threat, making it essential that fraud prevention is part of our daily activities. Put these safeguards in place as soon as possible—if you haven’t already. Email Public Wi-Fi/hotspots Key Use separate email accounts: one each Minimize the use of unsecured, public networks CYBER SAFETY for work, personal use, user IDs, alerts Turn off auto connect to non-preferred networks 10 notifications, other interests Tips Turn off file sharing Choose a reputable email provider that offers spam filtering and multi-factor authentication When public Wi-Fi cannot be avoided, use a 1 Create separate email accounts virtual private network (VPN) to help secure your for work, personal use, alert Use secure messaging tools when replying session to verified requests for financial or personal notifications and other interests information Disable ad hoc networking, which allows direct computer-to-computer transmissions Encrypt important files before emailing them 2 Be cautious of clicking on links or Never use public Wi-Fi to enter personal attachments sent to you in emails Do not open emails from unknown senders credentials on a website; hackers can capture Passwords your keystrokes 3 Use secure messaging tools when Create complex passwords that are at least 10 Home networks transmitting sensitive information characters; use a mix of numbers, upper- and Create one network for you, another for guests via email or text message lowercase letters and special characters and children -

CSE Student Newsletter

CSE Student Newsletter August 26, 2021 – Volume 11, Issue 18 Computer Science and Engineering University of South Florida Tampa, Florida http://www.cse.usf.edu Dear CSE Students: Welcome to the first newsletter of the Fall 2021 semester. Message from the UG Advisor: Add/drop week ends on 8/27. Schedules need to be final by 5:00PM on August 27. Students are responsible for the tuition and fees for any classes they are registered for after add/drop, even if they drop them later. August 27- TUITION PAYMENTS DUE to avoid late fees and cancellation of registration for non-payment. Tuition waiver forms are due to me for students appointed as TA/RA. September 20 - Deadline to apply for graduation in OASIS and send me your paperwork. October 8 - Tuition payments due for graduate assistants with tuition waivers, students with billed Florida prepaid tuition plans or with financial aid deferments to avoid $100 late payment fee. Please see the attached emails for more information. Message from the Grad Program Assistant: Add/drop week ends on 8/27. Schedules need to be final by 5:00PM on August 27. Students are responsible for the tuition and fees for any classes they are registered for after add/drop, even if they drop them later. August 27- TUITION PAYMENTS DUE to avoid late fees and cancellation of registration for non-payment. Tuition waiver forms are due to me for students appointed as TA/RA. September 20 - Deadline to apply for graduation in OASIS and send me your paperwork. October 8 - Tuition payments due for graduate assistants with tuition waivers, students with billed Florida prepaid tuition plans or with financial aid deferments to avoid $100 late payment fee. -

Letter, If Not the Spirit, of One Or the Other Definition

Producing Open Source Software: How to Run a Successful Free Software Project by Karl Fogel Copyright © 2005-2021 Karl Fogel, under the Creative Commons Attribution-ShareAlike (4.0) license. Version: 2.3214 Home site: https://producingoss.com/ Dedication This book is dedicated to two dear friends without whom it would not have been possible: Karen Underhill and Jim Blandy. Table of Contents: Preface; Why Write This Book?; Who Should Read This Book?; Sources; Acknowledgements; For the first edition (2005); For the second edition (2021); Disclaimer; 1. Introduction -

Protecting the Crown: a Century of Resource Management in Glacier National Park

Protecting the Crown A Century of Resource Management in Glacier National Park Rocky Mountains Cooperative Ecosystem Studies Unit (RM-CESU) RM-CESU Cooperative Agreement H2380040001 (WASO) RM-CESU Task Agreement J1434080053 Theodore Catton, Principal Investigator University of Montana Department of History Missoula, Montana 59812 Diane Krahe, Researcher University of Montana Department of History Missoula, Montana 59812 Deirdre K. Shaw NPS Key Official and Curator Glacier National Park West Glacier, Montana 59936 June 2011 Table of Contents List of Maps and Photographs v Introduction: Protecting the Crown 1 Chapter 1: A Homeland and a Frontier 5 Chapter 2: A Reservoir of Nature 23 Chapter 3: A Complete Sanctuary 57 Chapter 4: A Vignette of Primitive America 103 Chapter 5: A Sustainable Ecosystem 179 Conclusion: Preserving Different Natures 245 Bibliography 249 Index 261 List of Maps and Photographs MAPS Glacier National Park 22 Threats to Glacier National Park 168 PHOTOGRAPHS Cover - hikers going to Grinnell Glacier, 1930s, HPC 001581 Introduction – Three buses on Going-to-the-Sun Road, 1937, GNPA 11829 1 1.1 Two Cultural Legacies – McDonald family, GNPA 64 5 1.2 Indian Use and Occupancy – unidentified couple by lake, GNPA 24 7 1.3 Scientific Exploration – George B. Grinnell, Web 12 1.4 New Forms of Resource Use – group with stringer of fish, GNPA 551 14 2.1 A Foundation in Law – ranger at check station, GNPA 2874 23 2.2 An Emphasis on Law Enforcement – two park employees on hotel porch, 1915 HPC 001037 25 2.3 Stocking the Park – men with dead mountain lions, GNPA 9199 31 2.4 Balancing Preservation and Use – road-building contractors, 1924, GNPA 304 40 2.5 Forest Protection – Half Moon Fire, 1929, GNPA 11818 45 2.6 Properties on Lake McDonald – cabin in Apgar, Web 54 3.1 A Background of Construction – gas shovel, GTSR, 1937, GNPA 11647 57 3.2 Wildlife Studies in the 1930s – George M. -

How to Stay Safe in Today's World

REFERENCES Internet Safety http://www.aarp.org/money/scams-fraud/info-08-2011/internet-security.html How to Stay Safe in Identity Theft Federal Trade Commission (FTC) File Complaint: https://www.ftccomplaintassistant.gov/ ID Theft Hotline: 1-877-438-4338 Today’s World Credit Bureaus: Equifax: 1-888-766-0008 Experian: 1-888-397-3742 TransUnion: 1-800-680-7289 Internet Terminology Medical Fraud: Hotline: 1-800-403-0864 Internet Safety Social Security: Hotline: 1-800-269-0271 Identity/Medical Theft http://oig.ssa.gov/report-fraud-waste-or-abuse/fraud-waste-and-abuse Card Skimming http://www.ftc.gov/bcp/edu/microsites/idtheft/ Remote Controls Booklet Sponsored by: Indiana Extension Homemakers Association Education Focus Group 2015-2016 www.ieha.families.com For more information contact your County Extension Office 16 INTERNET TERMINOLOGY NOTES APPS (applications): a shortcut to information categorized by an icon Attachment: a file attached to an e-mail message. Blog: diary or personal journal posted on a web site, updated frequently. Browser: a program with a graphical user interface for displaying HTML files, used to navigate the World Wide Web (a web browser) Click: pressing and releasing a button on a mouse to select or activate the area on the screen where the cursor is pointing. Cloud: a loosely defined term for any system providing access via processing powers. Cookies: a small piece of code that is downloaded to computers to keep track of user’s activities. Cyberstalking: a crime in which the attacker harasses a victim using electronic communication, such as e-mail, instant messaging or posted messages. -

Why the Human Factor Is Still the Central Issue

April/May 2017 datacenterdynamics.com Why the human factor is still the central issue 10 beautiful data centers Finding new frontiers Re-shaping servers Here are some facilities that manage to Building a data center is hard They looked solid and unchanging, but get their work done, and still impress us enough. Building one in Angola now servers are being remolded by the with their looks is even tougher creative minds of the industry Contents April/May 2017 27 ON THE COVER 18 Powered by people NEWS 07 Top Story Vantage bought by investment consortium 07 In Brief 14 NTT to develop data centers for connected cars FEATURES 24 Tying down the cloud 27 New data center frontiers 37 Re-shaping servers OPINION 40 23 Data centers are fired by a human heart 46 Max Smolaks is a ghost in a shell REGIONAL FEATURES 14 LATAM Open source IoT protects Mexican bank 18 16 APAC Indonesia ups its game 37 DCD COMMUNITY 40 Dreaming of Net Zero energy The best discussion from DCD Zettastructure, for your delectation 42 Preview: DCD>Webscale and EnergySmart Find out what we have up 24 our sleeves EDITOR’S PICK 30 Top 10 beautiful data centers Data centers don’t have to be boring sheds. These ten facilities paid attention to their looks, and we think it paid off. Nominate your favorite for a new 16 30 DCD Award! Issue 21 • April/May 2017 3 HEAD OFFICE From the Editor 102–108 Clifton Street London EC2A 4HW % +44 (0) 207 377 1907 Let's hear it for MEET THE TEAM Peter Judge Global Editor the humans! @Judgecorp Max Smolaks News Editor mazon's S3 storage We aren't looking just for @MaxSmolax service went down architectural excellence. -

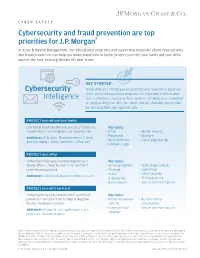

Cybersecurity Intelligence

CYBER SAFETY Cybersecurity and fraud prevention are top priorities for J.P. Morgan* In Asset & Wealth Management, our educational programs and supporting materials about cybersecurity and fraud prevention can help you understand how to better protect yourself, your family and your office against the ever-evolving threats of cyber crime. GET STARTED Cybersecurity Speak with your J.P. Morgan representative to learn more about our cyber and fraud prevention programs, for educational information Intelligence and to schedule a session with our experts. J.P. Morgan is committed to safeguarding your data, but clients remain ultimately responsible for ensuring their own cybersecurity. PROTECT yourself and your family Learn best practices that you and your family can Key topics implement to help mitigate cybersecurity risk • Email • Mobile security • Passwords • Malware Audiences: Principals, Board members, C-suite, • Wi-Fi networks • Social engineering decision makers, family members, office staff • Internet usage PROTECT your office Understand how you can help improve your Key topics family office’s, small business’s or law firm’s • Working together • Technology controls cybersecurity posture • Phishing • Operations • Social • Office security Audiences: Office staff, Board members, C-suite engineering • Third-party risk • Ransomware • Secure communications PROTECT yourself from fraud Understand the latest fraud trends and fraud Key topics prevention best practices to help strengthen • Money movement • Business email money movement controls controls compromise • Common fraud • Secure communications Audiences: Financial staff, authorized users, schemes principals, decision makers * This document is provided for educational and informational purposes only and is not intended, nor should it be relied upon, to address every aspect of the subject discussed herein. -

Trend Micro™ Scanmail™ for IBM Domino™ Administrator's Guide

Trend Micro Incorporated reserves the right to make changes to this document and to the products described herein without notice. Before installing and using the software, please review the readme files, release notes, and the latest version of the Administrator’s Guide, which are available from the Trend Micro Web site at: www.trendmicro.com/download/documentation/ NOTE: A license to the Trend Micro Software usually includes the right to product updates, pattern file updates, and basic technical support for one (1) year from the date of purchase only. Maintenance must be renewed on an annual basis at the Trend Micro then-current Maintenance fees. Trend Micro, the Trend Micro t-ball logo, and ScanMail are trademarks or registered trademarks of Trend Micro, Incorporated. All other product or company names may be trademarks or registered trademarks of their owners. This product includes software developed by the University of California, Berkeley and its contributors. All other brand and product names are trademarks or registered trademarks of their respective companies or organizations. Copyright© 2013 Trend Micro Incorporated. All rights reserved. No part of this publication may be reproduced, photocopied, stored in a retrieval system, or transmitted without the express prior written consent of Trend Micro Incorporated. Document Part No. SNEM55971_130516 Release Date: September 2013 Protected by U.S. Patent Nos. 5,951,698 and 5,889,943 i Trend Micro™ ScanMail™ for IBM Domino™ Administrator’s Guide The Administrator’s Guide for ScanMail for IBM Domino introduces the main features of the software and provides installation instructions for your production environment. Read through it before installing or using the software. -

ITC 2015 DVD Files Itcconfplanner

A High Assurance Firewall in a Cloud Environment Using Hardware and Software Item Type text; Proceedings Authors Golriz, Arya; Jaber, Nur Publisher International Foundation for Telemetering Journal International Telemetering Conference Proceedings Rights Copyright © held by the author; distribution rights International Foundation for Telemetering Download date 27/09/2021 04:32:59 Link to Item http://hdl.handle.net/10150/596381 A HIGH ASSURANCE FIREWALL IN A CLOUD ENVIRONMENT USING HARDWARE AND SOFTWARE Dr. Arya Golriz, Nur Jaber Faculty Advisors: Dr. Richard Dean, Dr. Yacob Astatke and Dr. Farzad Moazzami Department of Electrical and Computer Engineering Morgan State University ABSTRACT This paper will focus on analyzing the characteristics of firewalls and implementing them in a virtual environment as both software- and hardware-based solutions that retain the security features of a traditional firewall. INTRODUCTION With the evolution of telemetry into network environments, telemetry experts must consider the impact of cloud computing solutions in future telemetry systems. Cloud computing is becoming increasingly popular and offers a wide variety of advantages over conventional networking, including the ability to centralize resources both physically and financially. While implementing a cloud infrastructure does raise security concerns, a secure cloud infrastructure similar to that of a conventional network can be achieved using tools and tactics deployed to protect the network from adversaries and various malicious attacks. One primary component in any secure network, cloud or otherwise, is a firewall that examines inbound and outbound traffic on the network to ensure that it is authentic and based on a set of rules, as well as enables the network administrator to permit safe content. -

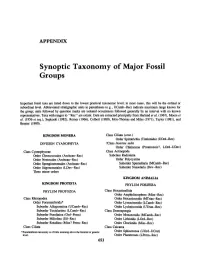

Synoptic Taxonomy of Major Fossil Groups

APPENDIX Synoptic Taxonomy of Major Fossil Groups Important fossil taxa are listed down to the lowest practical taxonomic level; in most cases, this will be the ordinal or subordinal level. Abbreviated stratigraphic units in parentheses (e.g., UCamb-Rec) indicate maximum range known for the group; units followed by question marks are isolated occurrences followed generally by an interval with no known representatives. Taxa with ranges to "Rec" are extant. Data are extracted principally from Harland et al. (1967), Moore et al. (1956 et seq.), Sepkoski (1982), Romer (1966), Colbert (1980), Moy-Thomas and Miles (1971), Taylor (1981), and Brasier (1980). KINGDOM MONERA Class Ciliata (cont.) Order Spirotrichia (Tintinnida) (UOrd-Rec) DIVISION CYANOPHYTA ?Class Incertae sedis Order Chitinozoa (Proterozoic?, LOrd-UDev) Class Cyanophyceae Class Actinopoda Order Chroococcales (Archean-Rec) Subclass Radiolaria Order Nostocales (Archean-Rec) Order Polycystina Order Spongiostromales (Archean-Rec) Suborder Spumellaria (MCamb-Rec) Order Stigonematales (LDev-Rec) Suborder Nasselaria (Dev-Rec) Three minor orders KINGDOM ANIMALIA KINGDOM PROTISTA PHYLUM PORIFERA PHYLUM PROTOZOA Class Hexactinellida Order Amphidiscophora (Miss-Rec) Class Rhizopodea Order Hexactinosida (MTrias-Rec) Order Foraminiferida* Order Lyssacinosida (LCamb-Rec) Suborder Allogromiina (UCamb-Rec) Order Lychniscosida (UTrias-Rec) Suborder Textulariina (LCamb-Rec) Class Demospongia Suborder Fusulinina (Ord-Perm) Order Monaxonida (MCamb-Rec) Suborder Miliolina (Sil-Rec) Order Lithistida