6.034 Recitation 2 Search Methods: Addendum

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


National Aeronautics and Space Administration, Langley Research Center, Hampton, Virginia 23665

https://ntrs.nasa.gov/search.jsp?R=19830020606 2020-03-21T01:58:58+00:00Z

NASA Technical Memorandum 83216 (NASA-TM-83216, 19830020606)

Decision-Making and Problem Solving Methods in Automation Technology

W. W. Hankins, J. E. Pennington, and L. K. Barker

May 1983

National Aeronautics and Space Administration, Langley Research Center, Hampton, Virginia 23665

TABLE OF CONTENTS

SUMMARY

INTRODUCTION

DETECTION AND RECOGNITION: Types of Sensors; Computer Vision; CONSIGHT-1 System; SRI Vision Module; Touch and Contact Sensors

PLANNING AND SCHEDULING: Operations Research Methods; Linear Systems; Nonlinear Systems; Network Models; Queueing Theory; Dynamic Programming; Simulation; Artificial Intelligence (AI) Methods; Nets of Action Hierarchies (NOAH) System; Monitoring; Resource Allocation; Robotics

LEARNING: Expert Systems; Learning Concept and Linguistic Constructions Through English Dialogue; Natural Language Understanding; Robot Learning; The Computer as an Intelligent Partner; Learning Control

THEOREM PROVING: Logical Inference; Disproof by Counterexample; Proof by Contradiction; Resolution Principle; Heuristic Methods; Nonstandard Techniques; Mathematical Induction; Analogies; Question-Answering Systems; Man-Machine Systems

AI-KI-B Heuristic Search

AI-KI-B Heuristic Search

Ute Schmid & Diedrich Wolter. Practice: Johannes Rabold. Cognitive Systems and Smart Environments, Applied Computer Science, University of Bamberg. Last change: 3 June 2019, 10:05

Outline

Introduction of heuristic search algorithms, based on foundations of problem spaces and search

• Uninformed Systematic Search
• Depth-First Search (DFS)
• Breadth-First Search (BFS)
• Complexity of Blocks-World
• Cost-based Optimal Search
• Uniform Cost Search
• Cost Estimation (Heuristic Function)
• Heuristic Search Algorithms
• Hill Climbing (Depth-First, Greedy)
• Branch and Bound Algorithms (BFS-based)
• Best First Search
• A*
• Designing Heuristic Functions
• Problem Types

Cost and Cost Estimation

• The "real" cost is known for each operator.
• The accumulated cost g(n) for a leaf node n on a partially expanded path can be calculated.
• For problems where each operator has the same cost or where no information about costs is available, all operator applications have equal cost values. For cost values of 1, the accumulated cost g(n) equals the path length d.
• Sometimes available: heuristics for estimating the remaining cost to reach the final state.
• h^(n): estimated cost to reach a goal state from node n.
• "Bad" heuristics can misguide search!

Cost and Cost Estimation cont.

Evaluation function: f^(n) = g(n) + h^(n)

Cost and Cost Estimation cont.

• The "true cost" of an optimal path from an initial state s to a final state is f(s).
• For a node n on this path, f can be decomposed into the steps already performed, with cost g(n), and the steps still to be performed, with true cost h(n).
• h^(n) is an estimate that can be greater or smaller than the true cost.
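The evaluation function f^(n) = g(n) + h^(n) can be illustrated with a small A* sketch. This is not taken from the slides: the graph, the heuristic table `h`, and the function name `a_star` are hypothetical, and the heuristic values were chosen so that they never overestimate the true remaining cost.

```python
import heapq

def a_star(graph, h, start, goal):
    """Return (cost, path) for a cheapest path found, or None if unreachable."""
    # Each frontier entry is (f, g, node, path) with f = g + h[node].
    frontier = [(h[start], 0, start, [start])]
    best_g = {start: 0}  # cheapest accumulated cost g(n) seen so far per node
    while frontier:
        f, g, node, path = heapq.heappop(frontier)
        if node == goal:
            return g, path
        if g > best_g.get(node, float("inf")):
            continue  # a cheaper route to this node was already found
        for succ, step_cost in graph.get(node, []):
            g2 = g + step_cost
            if g2 < best_g.get(succ, float("inf")):
                best_g[succ] = g2
                heapq.heappush(frontier, (g2 + h[succ], g2, succ, path + [succ]))
    return None

# Hypothetical example graph: node -> list of (successor, operator cost).
graph = {"S": [("A", 1), ("B", 4)], "A": [("B", 2), ("G", 6)], "B": [("G", 3)]}
# Hypothetical heuristic estimates h^(n) of the remaining cost to G.
h = {"S": 5, "A": 4, "B": 3, "G": 0}

result = a_star(graph, h, "S", "G")
print(result)  # (6, ['S', 'A', 'B', 'G'])
```

Setting every entry of `h` to 0 turns the same loop into uniform cost search, since the ordering then depends on g(n) alone.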

2011-2012 ABET Self-Study Questionnaire for Engineering

ABET Self-Study Report for Computer Engineering

July 1, 2012

CONFIDENTIAL

The information supplied in this Self-Study Report is for the confidential use of ABET and its authorized agents, and will not be disclosed without authorization of the institution concerned, except for summary data not identifiable to a specific institution.

Table of Contents

BACKGROUND INFORMATION
A. Contact Information
B. Program History
C. Options
D. Organizational Structure
E. Program Delivery Modes
F. Program Locations
G. Deficiencies, Weaknesses or Concerns from Previous Evaluation(s)
H. Joint Accreditation

CRITERION 1. STUDENTS
A. Student Admissions

4 Beyond Classical Search

4 BEYOND CLASSICAL SEARCH

In which we relax the simplifying assumptions of the previous chapter, thereby getting closer to the real world.

Chapter 3 addressed a single category of problems: observable, deterministic, known environments where the solution is a sequence of actions. In this chapter, we look at what happens when these assumptions are relaxed. We begin with a fairly simple case: Sections 4.1 and 4.2 cover algorithms that perform purely local search in the state space, evaluating and modifying one or more current states rather than systematically exploring paths from an initial state. These algorithms are suitable for problems in which all that matters is the solution state, not the path cost to reach it. The family of local search algorithms includes methods inspired by statistical physics (simulated annealing) and evolutionary biology (genetic algorithms).

Then, in Sections 4.3–4.4, we examine what happens when we relax the assumptions of determinism and observability. The key idea is that if an agent cannot predict exactly what percept it will receive, then it will need to consider what to do under each contingency that its percepts may reveal. With partial observability, the agent will also need to keep track of the states it might be in.

Finally, Section 4.5 investigates online search, in which the agent is faced with a state space that is initially unknown and must be explored.

4.1 LOCAL SEARCH ALGORITHMS AND OPTIMIZATION PROBLEMS

The search algorithms that we have seen so far are designed to explore search spaces systematically. This systematicity is achieved by keeping one or more paths in memory and by recording which alternatives have been explored at each point along the path.

Search Algorithms ◦ Breadth-First Search (BFS) ◦ Uniform-Cost Search ◦ Depth-First Search (DFS) ◦ Depth-Limited Search ◦ Iterative Deepening Best-First Search

9-04-2013

Uninformed (blind) search algorithms
◦ Breadth-First Search (BFS)
◦ Uniform-Cost Search
◦ Depth-First Search (DFS)
◦ Depth-Limited Search
◦ Iterative Deepening

Best-First Search

HW#1 due today. HW#2 due Monday, 9/09/13, in class. Continue reading Chapter 3.

Formulate, Search, Execute
1. Goal formulation
2. Problem formulation
3. Search algorithm
4. Execution

A problem is defined by four items:
1. initial state
2. actions or successor function
3. goal test (explicit or implicit)
4. path cost (∑ c(x,a,y), the sum of step costs)

A solution is a sequence of actions leading from the initial state to a goal state.

Search algorithms have the following basic form:

do until terminating condition is met
  if no more nodes to consider then return fail;
  select node; {choose a node (leaf) on the tree}
  if chosen node is a goal then return success;
  expand node; {generate successors & add to tree}

Analysis
◦ b = branching factor
◦ d = depth
◦ m = maximum depth

g(n) = the total cost of the path on the search tree from the root node to node n

h(n) = the straight line distance from n to G

| n | S | A | B | C | G |
|---|---|---|---|---|---|
| h(n) | 5.8 | 3 | 2.2 | 2 | 0 |

Uninformed search strategies use only the information available in the problem definition:
◦ Breadth-first search
◦ Uniform-cost search
◦ Depth-first search
◦ Depth-limited search
◦ Iterative deepening search

Breadth-first search
• Expand the shallowest unexpanded node.
• Implementation: the fringe is a FIFO queue, i.e., new successors go at the end.

Best-first search idea: order the branches under each node so that the "most promising" ones are explored first. g(n) is the total cost
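The basic search loop and the FIFO fringe described in these slides can be sketched as follows. The graph `edges` and the function name are illustrative assumptions, not part of the lecture; the node names merely reuse the letters S, A, B, C, G from the heuristic table.

```python
from collections import deque

def breadth_first_search(successors, start, is_goal):
    """Generic search loop with a FIFO fringe; returns a path or None."""
    fringe = deque([[start]])          # fringe holds whole paths, oldest first
    while fringe:                      # "if no more nodes to consider, fail"
        path = fringe.popleft()        # select the shallowest unexpanded node
        node = path[-1]
        if is_goal(node):              # goal test on the selected node
            return path
        for nxt in successors(node):   # expand: new successors go at the end
            if nxt not in path:        # skip cycles along the current path
                fringe.append(path + [nxt])
    return None

# Hypothetical graph for illustration only.
edges = {"S": ["A", "B"], "A": ["C"], "B": ["C", "G"], "C": ["G"], "G": []}
path = breadth_first_search(lambda n: edges[n], "S", lambda n: n == "G")
print(path)  # ['S', 'B', 'G']
```

Replacing `popleft()` with `pop()` makes the fringe a LIFO stack, which gives depth-first behaviour with the same loop.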

Local Beam Search with k = 1 Is Hill-Climbing Search

4. Give the name that results from each of the following special cases:

a. Local beam search with k = 1.
Answer: Local beam search with k = 1 is hill-climbing search.

b. Local beam search with one initial state and no limit on the number of states retained.
Answer: Local beam search with k = ∞: strictly speaking, this doesn't make sense. The idea is that if every successor is retained (because k is unbounded), then the search resembles breadth-first search in that it adds one complete layer of nodes before adding the next layer. Starting from one state, the algorithm would be essentially identical to breadth-first search except that each layer is generated all at once.

c. Simulated annealing with T = 0 at all times (and omitting the termination test).
Answer: Ignoring the fact that the termination step would be triggered immediately, the search would be identical to first-choice hill climbing because every downward successor would be rejected with probability 1.

d. Simulated annealing with T = infinity at all times.
Answer: Ignoring the fact that the termination step would never be triggered, the search would be identical to a random walk because every successor would be accepted with probability 1. Note that, in this case, a random walk is approximately equivalent to depth-first search.

e. Genetic algorithm with population size N = 1.
Answer: If the population size is 1, then the two selected parents will be the same individual; crossover yields an exact copy of the individual; then there is a small chance of mutation.
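Cases c and d follow from the usual simulated-annealing acceptance rule, in which an improving move is always accepted and a worsening move with value change ΔE < 0 is accepted with probability e^(ΔE/T). The sketch below assumes that standard rule and a maximisation setting; the function name is hypothetical, and treating T = 0 as "reject every non-improving move" is a convention adopted here to avoid dividing by zero.

```python
import math

def acceptance_probability(delta, temperature):
    """Probability of accepting a move that changes the value by `delta`."""
    if delta > 0:
        return 1.0                 # improving moves are always accepted
    if temperature == 0:
        return 0.0                 # assumed limit as T -> 0: never go downhill
    # For temperature == inf, delta / temperature is -0.0 and exp gives 1.0.
    return math.exp(delta / temperature)

p_cold = acceptance_probability(-1.0, 0)             # case c: T = 0
p_hot = acceptance_probability(-1.0, float("inf"))   # case d: T = infinity
print(p_cold, p_hot)  # 0.0 1.0
```

With T = 0 only uphill successors survive, which matches first-choice hill climbing; with T = infinity every successor is accepted, which matches a random walk.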

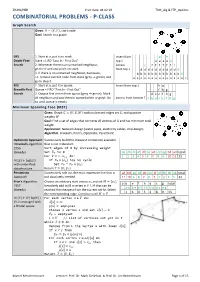

COMBINATORIAL PROBLEMS - P-CLASS Graph Search Given: G = (V, E), Start Node Goal: Search in a Graph

ZHAW/HSR Print date: 04.02.19 TSM_Alg & FTP_Optimiz

COMBINATORIAL PROBLEMS - P-CLASS

Graph Search

Given: G = (V, E), start node
Goal: Search in a graph

DFS (Depth-First Search). Stack = LIFO, "Last In - First Out": insert from the top, access from the top.
1. Start at a and put it on the stack.
2. Whenever there is an unmarked neighbour, go there and put it on the stack.
3. If there is no unmarked neighbour, backtrack, i.e. remove the current node from the stack (grey ⇒ green) and go to step 2.

BFS (Breadth-First Search). Queue = FIFO, "First In - First Out": insert from the top, access from the bottom.
1. Start at a and put it in the queue.
2. Output the first vertex from the queue (grey ⇒ green). Mark all its neighbours and put them in the queue (white ⇒ grey). Do so until the queue is empty.

[Figure: stack and queue contents at each step of the DFS and BFS traversals starting at node a.]

Minimum Spanning Tree (MST)

Given: Graph G = (V, E, W) with undirected edge set E and positive weights W
Goal: Find a set of edges that connects all vertices of G and has minimum total weight.
Application: Network design (water pipes, electricity cables, chip design)
Algorithm: Kruskal's, Prim's, Optimistic, Pessimistic

Optimistic approach (= Kruskal's algorithm): successively build the cheapest connection available that is not redundant.
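The optimistic approach can be sketched in a few lines: sort the edges by weight and accept each edge unless it would connect two vertices that are already connected. The union-find helper, the vertex numbering, and the example edge list are assumptions made for illustration; they do not come from the summary sheet.

```python
def kruskal(num_vertices, edges):
    """Return the list of (weight, u, v) edges chosen for a spanning tree."""
    parent = list(range(num_vertices))   # union-find forest, one root each

    def find(v):
        # Follow parent links to the root, halving the path on the way.
        while parent[v] != v:
            parent[v] = parent[parent[v]]
            v = parent[v]
        return v

    tree = []
    for weight, u, v in sorted(edges):   # cheapest connection first
        root_u, root_v = find(u), find(v)
        if root_u != root_v:             # edge is not redundant
            parent[root_u] = root_v      # merge the two components
            tree.append((weight, u, v))
    return tree

# Hypothetical weighted graph on vertices 0..3, edges as (weight, u, v).
edges = [(4, 0, 1), (1, 0, 2), (2, 1, 2), (5, 1, 3), (3, 2, 3)]
mst = kruskal(4, edges)
total = sum(weight for weight, _, _ in mst)
print(mst, total)  # [(1, 0, 2), (2, 1, 2), (3, 2, 3)] 6
```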

Lecture Notes

Lecture Notes

Ganesh Ramakrishnan

August 15, 2007

Contents

1 Search and Optimization
1.1 Search
1.1.1 Depth First Search
1.1.2 Breadth First Search (BFS)
1.1.3 Hill Climbing
1.1.4 Beam Search
1.2 Optimal Search
1.2.1 Branch and Bound
1.2.2 A∗ Search
1.3 Constraint Satisfaction
1.3.1 Algorithms for map coloring
1.3.2 Resource Scheduling Problem

Chapter 1 Search and Optimization

Consider the following problem:

Cab Driver Problem: A cab driver needs to find his way in a city from one point (A) to another (B). Some routes are blocked in gray (probably because they are under construction). The task is to find the path(s) from (A) to (B). Figure 1.1 shows an instance of the problem.

Figure 1.2 shows the map of the United States. Suppose you are set the following task:

Map coloring problem: Color the map such that states that share a boundary are colored differently.

A simple-minded approach to solving this problem is to keep trying color combinations, facing dead ends, backtracking, etc. The program could run for a very long time (estimated to be a lower bound of 10^10 years) before it finds a suitable coloring. On the other hand, we could try another approach which will perform the task much faster and which we will discuss subsequently.

The second problem is actually isomorphic to the first problem and to problems of resource allocation in general.
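The simple-minded approach of trying colors, hitting dead ends, and backtracking can be sketched as follows. The four-region map, the color names, and the function name are hypothetical; the notes discuss the map of the United States, which is far larger, and a faster method that this sketch does not attempt to reproduce.

```python
def color_map(neighbors, colors):
    """Return a dict region -> color with no equal-colored neighbors, or None."""
    regions = list(neighbors)
    assignment = {}

    def backtrack(i):
        if i == len(regions):
            return True                       # every region has a color
        region = regions[i]
        for color in colors:
            # A color is allowed if no already-colored neighbor uses it.
            if all(assignment.get(n) != color for n in neighbors[region]):
                assignment[region] = color
                if backtrack(i + 1):
                    return True
                del assignment[region]        # dead end: undo and try next
        return False

    return assignment if backtrack(0) else None

# Hypothetical map: regions A, B, C border each other; D borders B and C.
neighbors = {"A": ["B", "C"], "B": ["A", "C", "D"],
             "C": ["A", "B", "D"], "D": ["B", "C"]}
coloring = color_map(neighbors, ["red", "green", "blue"])
print(coloring)  # {'A': 'red', 'B': 'green', 'C': 'blue', 'D': 'red'}
```

The worst case of this procedure grows exponentially with the number of regions, which is why plain backtracking can become impractical on large maps.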

Beam Search for Integer Multi-Objective Optimization

Beam Search for integer multi-objective optimization

Thibaut Barthelemy, Sophie N. Parragh, Fabien Tricoire, Richard F. Hartl

University of Vienna, Department of Business Administration, Austria

{thibaut.barthelemy,sophie.parragh,fabien.tricoire,richard.hartl}@univie.ac.at

Abstract

Beam search is a tree search procedure where, at each level of the tree, at most w nodes are kept. This results in a metaheuristic whose solving time is polynomial in w. Popular for single-objective problems, beam search has only received little attention in the context of multi-objective optimization. By introducing the concepts of oracle and filter, we define a paradigm to understand multi-objective beam search algorithms. Its theoretical analysis engenders practical guidelines for the design of these algorithms. The guidelines, suitable for any problem whose variables are integers, are applied to address a bi-objective 0-1 knapsack problem. The solver obtained outperforms the existing non-exact methods from the literature.

1 Introduction

Everyday life decisions are often a matter of multi-objective optimization. Indeed, life is full of tradeoffs, as reflected for instance by discussions in parliaments or boards of directors. As a result, almost all cost-oriented problems of the classical literature give also rise to quality, durability, social or ecological concerns. Such goals are often conflicting. For instance, lower costs may lead to lower quality and vice versa. A generic approach to multi-objective optimization consists in computing a set of several good compromise solutions that decision makers discuss in order to choose one. Numerous methods have been developed to find the set of compromise solutions to multi-objective combinatorial problems.
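The rule "at each level of the tree, at most w nodes are kept" can be illustrated with a deliberately simplified sketch: a single-objective 0-1 knapsack in which each tree level decides one item and children are ranked by accumulated value. This is not the paper's oracle-and-filter method for the bi-objective case; the function name, ranking rule, and item data are assumptions for illustration.

```python
def beam_search_knapsack(items, capacity, width):
    """items: list of (value, weight); returns best (value, weight, chosen)."""
    beam = [(0, 0, ())]                        # partial solutions at this level
    for i, (value, weight) in enumerate(items):
        children = []
        for v, w, chosen in beam:
            children.append((v, w, chosen))    # branch 1: leave item i out
            if w + weight <= capacity:         # branch 2: take item i if it fits
                children.append((v + value, w + weight, chosen + (i,)))
        # Keep only the `width` children with the highest accumulated value.
        children.sort(key=lambda child: child[0], reverse=True)
        beam = children[:width]
    return beam[0]

# Hypothetical instance: (value, weight) pairs and a capacity of 50.
items = [(60, 10), (100, 20), (120, 30)]
wide = beam_search_knapsack(items, 50, width=2)
narrow = beam_search_knapsack(items, 50, width=1)
print(wide)    # (220, 50, (1, 2))
print(narrow)  # (160, 30, (0, 1))
```

On this instance a beam of width 2 reaches the best value of 220, while width 1 commits greedily to the first item and ends at 160, which shows how the beam width trades solution quality against the number of nodes kept.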

An English-Persian Dictionary of Algorithms and Data Structures

An English-Persian Dictionary of Algorithms and Data Structures

zarrabibasuacir ghodsisharifedu

Entries (A, B): algorithm; all pairs shortest path; absolute performance guarantee; alphabet; abstract data type; alternating path; accepting state; alternating Turing machine; Ackerman's function; alternation; Ackermann's function; amortized cost; acyclic digraph; amortized worst case; acyclic directed graph; ancestor; acyclic graph; and; adaptive heap sort; ANSI; adaptive k-d tree; antichain; adaptive sort; antisymmetric; address-calculation sort; approximation algorithm; adjacency-list representation; arc; adjacency-matrix representation; array; array index; adjacency list; array merging; adjacency matrix; articulation point; adjacent; articulation vertex; admissible vertex; assignment problem; adversary; association list; algorithm; associative; binary function; associative array; binary GCD algorithm; asymptotic bound; binary insertion sort; asymptotic lower bound; binary priority queue; asymptotic space complexity; binary relation; binary search; asymptotic time complexity; binary trie; asymptotic upper bound; bingo sort; asymptotically equal to; bipartite graph; asymptotically tight bound; bipartite matching

IJIR Paper Template

International Journal of Research, e-ISSN: 2348-6848, p-ISSN: 2348-795X. Available at https://journals.pen2print.org/index.php/ijr/ Volume 06 Issue 10 September 2019

Study of Informed Searching Algorithm for Finding the Shortest Path

Wint Aye Khaing1, Kyi Zar Nyunt2, Thida Win2, Ei Ei Moe3

1 Faculty of Information Science, University of Computer Studies (Taungoo), Myanmar
2 Faculty of Information Science, University of Computer Studies (Taungoo), Myanmar
3 Faculty of Computer Science, University of Computer Studies (Taungoo), Myanmar

Abstract: While technological revolution has active role to the increase of computer information, growing computational capabilities of devices, and raise the level of knowledge abilities, and skills. Increase developments in science and technology. In city area traffic the shortest path finding is very difficult in a road network. Shortest path searching is very important in some special case as medical emergency, spying, theft catching, fire brigade etc. In this paper used the shortest path algorithms for solving the shortest path

frequently deal with this question when planning trips with their cars. There are also many applications like logistic planning or traffic simulation that need to solve a huge number of such route queries. Shortest path can be either inconvenient for the client if he has to wait for the response or experience for the service provider if he has to make a lot of computing power available. While algorithm is a procedure or formula for solve problem. Algorithm usually means a small procedure that solves a recurrent problem.

2.

Job Shop Scheduling with Beam Search

European Journal of Operational Research 118 (1999) 390–412, www.elsevier.com/locate/orms

Theory and Methodology

Job shop scheduling with beam search

I. Sabuncuoglu *, M. Bayiz

Department of Industrial Engineering, Bilkent University, 06533 Ankara, Turkey

Received 1 July 1997; accepted 1 August 1998

Abstract

Beam Search is a heuristic method for solving optimization problems. It is an adaptation of the branch and bound method in which only some nodes are evaluated in the search tree. At any level, only the promising nodes are kept for further branching and remaining nodes are pruned off permanently. In this paper, we develop a beam search based scheduling algorithm for the job shop problem. Both the makespan and mean tardiness are used as the performance measures. The proposed algorithm is also compared with other well known search methods and dispatching rules for a wide variety of problems. The results indicate that the beam search technique is a very competitive and promising tool which deserves further research in the scheduling literature. © 1999 Elsevier Science B.V. All rights reserved.

Keywords: Scheduling; Beam search; Job shop

1. Introduction

Beam search is a heuristic method for solving optimization problems. It is an adaptation of the branch and bound method in which only some nodes are evaluated.

(Lowerre, 1976). There have been a number of applications reported in the literature since then. Fox (1983) used beam search for solving complex scheduling problems by a system called ISIS. Later, Ow and Morton (1988) studied the effects of