STC-Web-Indexing.Pdf

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Web Site Metadata

Web Site Metadata. Erik Wilde and Anuradha Roy, School of Information, UC Berkeley. Abstract. Understanding the availability of site metadata on the Web is a foundation for any system or application that wants to work with the pages published by Web sites, and also wants to understand a Web site’s structure. There is little information available about how much information Web sites make available about themselves, and this paper presents data addressing this question. Based on this analysis of available Web site metadata, it is easier for Web-oriented applications to be based on statistical analysis rather than assumptions when relying on Web site metadata. Our study of robots.txt files and sitemaps can be used as a starting point for Web-oriented applications wishing to work with Web site metadata. 1 Introduction. This paper presents first results from a project which ultimately aims at providing accessible Web site navigation for Web sites [1]. One of the important intermediary steps is to understand how much metadata about Web sites is made available on the Web today, and how much navigational information can be extracted from that metadata. Our long-term goal is to establish a standard way for Web sites to expose their navigational structure, but since this is a long-term goal with a long adoption process, our mid-term goal is to establish a third-party service that provides navigational metadata about a site as a service to users interested in that information. A typical scenario would be blind users; they typically have difficulty navigating Web sites, because most usability and accessibility methods focus on Web pages rather than Web sites.
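The abstract above names robots.txt files and sitemaps as the site metadata under study. As a rough illustration of what reading that metadata can look like, here is a minimal sketch using Python's standard `urllib.robotparser`. The sample robots.txt content, the `example.org` URLs, and the helper name `summarize_robots` are assumptions made for this example; they do not come from the paper, and the paper's own measurement tooling is not described in this excerpt.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, used only for illustration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Sitemap: https://example.org/sitemap.xml
"""


def summarize_robots(text: str, probe_url: str) -> dict:
    """Parse robots.txt text and report the sitemaps it declares,
    plus whether a generic crawler ("*") may fetch probe_url."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return {
        # site_maps() returns None when no Sitemap line is present
        # (available in Python 3.8 and later).
        "sitemaps": parser.site_maps() or [],
        "probe_allowed": parser.can_fetch("*", probe_url),
    }


summary = summarize_robots(ROBOTS_TXT, "https://example.org/private/page.html")
public = summarize_robots(ROBOTS_TXT, "https://example.org/index.html")
```

Parsing from a string keeps the sketch offline; against a live site, `RobotFileParser.set_url()` followed by `read()` would fetch the file instead.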

Why We Need an Independent Index of the Web

Politics of Search Engines. René König and Miriam Rasch (eds), Society of the Query Reader: Reflections on Web Search, Amsterdam: Institute of Network Cultures, 2014. ISBN: 978-90-818575-8-1. Why We Need an Independent Index of the Web, by Dirk Lewandowski. Search engine indexes function as a ‘local copy of the web’ [1], forming the foundation of every search engine. Search engines need to look for new documents constantly, detect changes made to existing documents, and remove documents from the index when they are no longer available on the web. When one considers that the web comprises many billions of documents that are constantly changing, the challenge search engines face becomes clear. It is impossible to maintain a perfectly complete and current index [2]. The pool of data changes thousands of times each second. No search engine can keep up with this rapid pace of change [3]. The ‘local copy of the web’ can thus be viewed as the Holy Grail of web indexing at best – in practice, different search engines will always attain a varied degree of success in pursuing this goal [4]. Search engines do not merely capture the text of the documents they find (as is often falsely assumed). They also generate complex replicas of the documents. These representations include, for instance, information on the popularity of the document (measured by the number of times it is accessed or how many links to the document exist on the web), information extracted from the documents (for example the name of the author or the date the document was created), and an alternative text-based

Using Information Architecture to Evaluate Digital Libraries, the Reference Librarian, 51, 124-134

FAU Institutional Repository http://purl.fcla.edu/fau/fauir This paper was submitted by the faculty of FAU Libraries. Notice: ©2010 Taylor & Francis Group, LLC. This is an electronic version of an article which is published and may be cited as: Parandjuk, J. C. (2010). Using Information Architecture to Evaluate Digital Libraries, The Reference Librarian, 51, 124-134. doi:10.1080/027638709 The Reference Librarian is available online at: http://www.tandfonline.com/doi/full/10.1080/02763870903579737 The Reference Librarian, 51:124–134, 2010. Copyright © Taylor & Francis Group, LLC. ISSN: 0276-3877 print/1541-1117 online. DOI: 10.1080/02763870903579737. Using Information Architecture to Evaluate Digital Libraries. Joanne C. Parandjuk, Florida Atlantic University Libraries, Boca Raton, FL. Information users face increasing amounts of digital content, some of which is held in digital library collections. Academic librarians have the dual challenge of organizing online library content and instructing users in how to find, evaluate, and use digital information. Information architecture supports evolving library services by bringing best practice principles to digital collection development. Information architects organize content with a user-centered, customer-oriented approach that benefits library users in resource discovery. The Publication of Archival, Library & Museum Materials (PALMM),

Distributed Indexing/Searching Workshop Agenda, Attendee List, and Position Papers

Distributed Indexing/Searching Workshop: Agenda, Attendee List, and Position Papers. Held May 28-29, 1996 in Cambridge, Massachusetts. Sponsored by the World Wide Web Consortium. Workshop co-chairs: Michael Schwartz, @Home Network; Mic Bowman, Transarc Corp. This workshop brings together a cross-section of people concerned with distributed indexing and searching, to explore areas of common concern where standards might be defined. The Call For Participation (http://www.w3.org/pub/WWW/Search/960528/cfp.html) suggested particular focus on repository interfaces that support efficient and powerful distributed indexing and searching. There was a great deal of interest in this workshop. Because of our desire to limit attendance to a workable size group while maximizing breadth of attendee backgrounds, we limited attendance to one person per position paper, and furthermore we limited attendance to one person per institution. In some cases, attendees submitted multiple position papers, with the intention of discussing each of their projects or ideas that were relevant to the workshop. We had not anticipated this interpretation of the Call For Participation; our intention was to use the position papers to select participants, not to provide a forum for enumerating ideas and projects. As a compromise, we decided to choose among the submitted papers and allow multiple position papers per person and per institution, but to restrict attendance as noted above. Hence, in the paper list below there are some cases where one author or institution has multiple position papers. 1 Agenda. The Distributed Indexing/Searching Workshop will span two days. The first day's goal is to identify areas for potential standardization through several directed discussion sessions.

SEO Fundamentals: Key Tips & Best Practices For

SEO Fundamentals: Key Tips & Best Practices for Search Engine Optimization. The process of Search Engine Optimization (SEO) has gained popularity in recent years as a means to reach target audiences through improved web site positioning in search engines. However, few have an understanding of the SEO methods employed in order to produce such results. The following tips are some basic best practices to consider in optimizing your site for improved search engine performance. Identifying Target Search Terms. The first step to any SEO campaign is to identify the search terms (also referred to as key terms, or key words) for which you want your site pages to be found in search engines – these are the words that a prospective visitor might type into a search engine to find relevant web sites. For example, if your organization’s mission has to do with environmental protection, are your target visitors most likely to search for “acid rain”, “save the forests”, “toxic waste”, “greenhouse effect”, or all of the above? Do you want to reach visitors who are local, regional or national in scope? These are considerations that need careful attention as you begin the SEO process. After all, it’s no use having a good search engine ranking for terms no one’s looking for.

Web-Page Indexing Based on the Prioritize Ontology Terms

Web-page Indexing based on the Prioritize Ontology Terms. Sukanta Sinha (1, 4), Rana Dattagupta (2), Debajyoti Mukhopadhyay (3, 4). (1) Tata Consultancy Services Ltd., Victoria Park Building, Salt Lake, Kolkata 700091, India; (2) Computer Science Dept., Jadavpur University, Kolkata 700032, India; (3) Information Technology Dept., Maharashtra Institute of Technology, Pune 411038, India; (4) WIDiCoReL Research Lab, Green Tower, C-9/1, Golf Green, Kolkata 700095, India. Abstract. Globalization has become a basic and highly popular human trend. To globalize information, people publish documents on the Internet. As a result, the volume of information on the Internet has become huge. To handle that volume, Web searchers use search engines. The Web-page indexing mechanism of a search engine plays a big role in retrieving Web search results quickly from the huge volume of Web resources. Web researchers have introduced various types of Web-page indexing mechanisms to retrieve Web-pages from a Web-page repository. In this paper, we illustrate a new approach to the design and development of Web-page indexing. The proposed Web-page indexing mechanism has been applied to domain-specific Web-pages, and we have identified the Web-page domain based on an Ontology. In our approach, we first prioritize the Ontology terms that exist in the Web-page content and then apply our own indexing mechanism to index that Web-page. The main advantage of storing an index is to optimize speed and performance while finding relevant documents in the domain-specific search engine storage area for a user-given search query.

Digital Library Federation

SPRING FORUM 2005, WESTIN HORTON PLAZA, SAN DIEGO, CA, APRIL 13–15, 2005. Washington, D.C. Printed by Balmar, Inc. Copyright © 2005 by the Digital Library Federation. Some rights reserved. The Digital Library Federation, Council on Library and Information Resources, 1755 Massachusetts Avenue, NW, Suite 500, Washington, DC 20036. http://www.diglib.org It has been ten years since the founders of the Digital Library Federation signed a charter formalizing their commitment to leverage their collective strengths against the challenges faced by one and all libraries in the digital age. The forums, begun in summer 1999, are an expression of this commitment to work collaboratively and congenially for the betterment of the entire membership. CONTENTS: Acknowledgments; Site Map; Schedule; Program and Abstracts; Biographies; Appendix A: What is the DLF?; Appendix B: Recent Publications; Appendix C: DLF-Announce Listserv. ACKNOWLEDGMENTS. DLF Forum Fellowships for Librarians New To the Profession. The Digital Library Federation would like to extend its congratulations to the following for winning DLF Forum Fellowships: • John Chapman, Metadata Librarian, University of Minnesota • Keith Jenkins, Metadata Librarian, Cornell University • Tito Sierra, Digital Technologies Development Librarian, North Carolina State University • Katherine Skinner, Scholarly Communications Analyst, Emory University • Yuan Yuan Zeng, Librarian for East Asian Studies, Johns Hopkins University. DLF Fellowship Selection and Program Committees. The DLF would also like to extend our heartfelt thanks to the DLF Spring Forum 2005 Program Committee and Fellowship Selection Committee for all their hard work.

Google Bing Facebook Findopen Foursquare

The Network. Google. Active Monthly Users: 1B+. Today's world of smartphones, mobile moments, and self-driving cars demands accurate location data more than ever. And no single search, maps, and apps provider is more important to your location marketing strategy than Google. With the Yext Location Manager, you can manage your location data on Google My Business, the tool through which businesses can supply data to Google Search, Google Maps, and Google+. Bing. Active Monthly Users: 150M+. With more than 20% of search market share in the US and rapidly increasing traction worldwide, Bing is an essential piece of the local ecosystem. More than 150 million users search for local businesses and services on Bing every month. Beginning in 2016, Bing will also power search results across the AOL portfolio of sites, including Huffington Post, Engadget, and TechCrunch. Facebook. Active Monthly Users: 1.35B. Facebook is the world’s biggest social network with more than 2 billion users. Yext Sync for Facebook makes it easy to manage accurate and up-to-date contact information, photos, messages and more. FindOpen. FindOpen is one of the world’s leading online business directories and helps consumers find the critical information they need to know about the businesses they’d like to visit, like hours of operation, holiday hours, special hours, menus, product and service lists, and more. FindOpen (also known as FindeOffen, TrovaAperto, TrouverOuvert, VindOpen, nyitva.hu, EncuentreAbierto, AussieHours, FindAaben, TeraZOtwarte, HittaÖppna, and deschis.ro in the many countries it operates) provides a fully responsive experience, no matter where its global users are searching from.

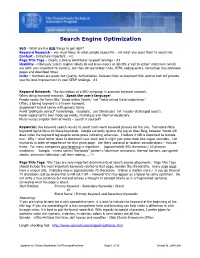

SEO - What Are the BIG Things to Get Right? Keyword Research – You Must Focus on What People Search for - Not What You Want Them to Search For

Search Engine Optimization. SEO - What are the BIG things to get right? Keyword Research – you must focus on what people search for, not what you want them to search for. Content – Extremely important - #2. Page Title Tags – Clearly a strong contributor to good rankings - #3. Usability – Obviously search engine robots do not know colors or identify a ‘call to action’ statement (which are both very important to visitors), but they do see broken links, HTML coding errors, contextual links between pages and download times. Links – Numbers are good; but Quality, Authoritative, Relevant links on Keyword-Rich anchor text will provide you the best improvement in your SERP rankings. #1 Keyword Research: The foundation of an SEO campaign is accurate keyword research. When doing keyword research: Speak the user's language! People search for terms like "cheap airline tickets," not "value-priced travel experience." Often, a boring keyword is a known keyword. Supplement brand names with generic terms. Avoid "politically correct" terminology (example: use ‘blind users’ not ‘visually challenged users’). Favor legacy terms over made-up words, marketese and internal vocabulary. Plural versus singular form of words – search it yourself! Keywords: Use keyword search results to select multi-word keyword phrases for the site. Formulate the Meta Keyword tag to focus on these keywords. Google currently ignores the tag, as does Bing; however, Yahoo still does index the keyword tag despite some press indicating otherwise. I believe it still is important to include one. Why? What better place to document your work, and it might just come back into vogue someday. List keywords in order of importance for that given page.

Awareness Watch™ Newsletter by Marcus P

Awareness Watch™ Newsletter By Marcus P. Zillman, M.S., A.M.H.A. http://www.AwarenessWatch.com/ V9N4 April 2011. eVoice: 800-858-1462. © 2011 Marcus P. Zillman, M.S., A.M.H.A. Welcome to the V9N4 April 2011 issue of the Awareness Watch™ Newsletter. This newsletter is available as a complimentary subscription and will be issued monthly. Each newsletter will feature the following: Awareness Watch™ Featured Report; Awareness Watch™ Spotters; Awareness Watch™ Book/Paper/Article Review; Subject Tracer™ Information Blogs. I am always open to feedback from readers so please feel free to email with all suggestions, reviews and new resources that you feel would be appropriate for inclusion in an upcoming issue of Awareness Watch™. This is an ongoing work of creativity and you will be observing constant changes, constant updates knowing that “change” is the only thing that will remain constant!! Awareness Watch™ Featured Report. This month’s featured report covers Deep Web Research. This is a comprehensive miniguide of reference resources covering deep web research currently available on the Internet. The list of sources below is taken from my Subject Tracer™ Information Blog titled Deep Web Research and is constantly updated with Subject Tracer™ bots at the following URL: http://www.DeepWeb.us/ These resources and sources will help you to discover the many pathways available to you through the Internet to find the latest reference deep web resources and sites. Deep Web Research. Bots, Blogs and News Aggregators (http://www.BotsBlogs.com/) is a keynote presentation that I have been delivering over the last several years, and much of my information comes from the extensive research that I have completed over the years into the “invisible” or what I like to call the “deep” web.

The Google Search Engine

University of Business and Technology in Kosovo, UBT Knowledge Center, Theses and Dissertations, Student Work, Summer 6-2010. The Google search engine. Ganimete Perçuku. Follow this and additional works at: https://knowledgecenter.ubt-uni.net/etd Part of the Computer Sciences Commons. Faculty of Computer Sciences and Engineering. The Google search engine (Bachelor Degree). Ganimete Perçuku – Hasani. June, 2010, Prishtinë. Bachelor Degree, Academic Year 2008 – 2009. Student: Ganimete Perçuku – Hasani. Supervisor: Dr. Bekim Gashi. 09/06/2010. This thesis is submitted in partial fulfillment of the requirements for a Bachelor Degree. Abstract. In general, the Google search engine is presented as a system of computers designed for searching for information on the web. Google tries to understand people's requests in a “human” way and to return the answer to them in a clear form. But this aim is not even close to the ideal, and achieving it keeps getting harder with the exponential growth the web is experiencing today. Google is presented here through an examination of the parts that make it up, the parts on which the system relies, and the other surrounding components that allow the system to run without problems or to recover easily from an eventual failure. The process of collecting data in Google and presenting it in the search results involves recording data from various web pages and placing it in the system's repository, that is, in the database where the queries are executed that return results ranked in the manner determined by Google's algorithm.

Context Based Web Indexing for Storage of Relevant Web Pages

International Journal of Computer Applications (0975 – 8887), Volume 40, No. 3, February 2012. Context based Web Indexing for Storage of Relevant Web Pages. Nidhi Tyagi (Asst. Prof., Shobhit University, Meerut), Rahul Rishi (Prof. & Head, Technical Institute of Textile & Sciences, Bhiwani), R.P. Agarwal (Prof. & Head, Shobhit University, Meerut). ABSTRACT. A focused crawler downloads web pages that are relevant to a user specified topic. The downloaded documents are indexed with a view to optimize speed and performance in finding relevant documents for a search query at the search engine side. However, the information will be more relevant if the context of the topic is also made available to the retrieval system. This paper proposes a technique for indexing the keyword extracted from the web documents along with their contexts wherein it uses a height balanced binary search (AVL) tree, for indexing purpose to enhance the performance of the retrieval system. General Terms: Algorithm, retrieval, indexer. [...] or 1. This strategy makes the searching task faster and optimized. 2. RELATED WORK. In this section, a review of previous work on index organization is given. In this field of index organization and maintenance, many algorithms and techniques have already been proposed but they seem to be less efficient in efficiently accessing the index. F. Silvestri, R.Perego and Orlando [4] proposed the reordering algorithm which partitions the set of documents into k ordered clusters on the basis of similarity measure where the biggest document is selected as centroid of the first cluster and n/k1 most similar documents are assigned to this cluster. Then the biggest document is selected and the same process repeats.
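The abstract above describes indexing keywords together with their contexts in a height-balanced binary search (AVL) tree. The excerpt does not give the paper's node layout or algorithms, so the following is only a minimal sketch under stated assumptions: each node is keyed by a hypothetical `(keyword, context)` pair and stores the set of document ids containing that pair, and the sample postings are invented for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Node:
    key: tuple[str, str]                      # assumed key: (keyword, context)
    docs: set[str] = field(default_factory=set)
    left: Optional[Node] = None
    right: Optional[Node] = None
    height: int = 1


def height(n: Optional[Node]) -> int:
    return n.height if n else 0


def update(n: Node) -> None:
    n.height = 1 + max(height(n.left), height(n.right))


def rotate_right(y: Node) -> Node:
    x = y.left
    y.left, x.right = x.right, y              # x becomes the subtree root
    update(y)
    update(x)
    return x


def rotate_left(x: Node) -> Node:
    y = x.right
    x.right, y.left = y.left, x               # y becomes the subtree root
    update(x)
    update(y)
    return y


def insert(node: Optional[Node], key: tuple[str, str], doc: str) -> Node:
    """Insert doc under key and rebalance on the way back up."""
    if node is None:
        return Node(key, {doc})
    if key == node.key:
        node.docs.add(doc)
        return node
    if key < node.key:
        node.left = insert(node.left, key, doc)
    else:
        node.right = insert(node.right, key, doc)
    update(node)
    balance = height(node.left) - height(node.right)
    if balance > 1:                           # left-heavy
        if height(node.left.left) < height(node.left.right):
            node.left = rotate_left(node.left)
        return rotate_right(node)
    if balance < -1:                          # right-heavy
        if height(node.right.right) < height(node.right.left):
            node.right = rotate_right(node.right)
        return rotate_left(node)
    return node


def lookup(node: Optional[Node], key: tuple[str, str]) -> set[str]:
    """Return the document ids stored for key, or an empty set."""
    while node:
        if key == node.key:
            return node.docs
        node = node.left if key < node.key else node.right
    return set()


# Invented postings: (keyword, context, document id).
postings = [
    ("apple", "company", "d1"), ("apple", "fruit", "d2"),
    ("jaguar", "animal", "d3"), ("jaguar", "car", "d4"),
    ("python", "language", "d5"), ("python", "snake", "d6"),
    ("zebra", "animal", "d7"), ("jaguar", "car", "d8"),
]
root = None
for keyword, context, doc_id in postings:
    root = insert(root, (keyword, context), doc_id)
```

Keying on the pair rather than the keyword alone means a query for "jaguar" in the "car" context never touches documents about the animal, and the rebalancing keeps lookups logarithmic in the number of distinct pairs even when postings arrive in sorted order, as they do here.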