Web Site Metadata

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Intro to Google for the Hill

Introduction to Google: a company built on search.

Our mission. Google’s mission is to organize the world’s information and make it universally accessible and useful. As a first step to fulfilling this mission, Google’s founders Larry Page and Sergey Brin developed a new approach to online search that took root in a Stanford University dorm room and quickly spread to information seekers around the globe. The Google search engine is an easy-to-use, free service that consistently returns relevant results in a fraction of a second.

What we do. Google is more than a search engine. We also offer Gmail, maps, personal blogging, and web-based word processing products, to name just a few. YouTube, the popular online video service, is part of Google as well. Most of Google’s services are free, so how do we make money? Much of Google’s revenue comes through our AdWords advertising program, which allows businesses to place small “sponsored links” alongside our search results. Prices for these ads are set by competitive auctions for every search term where advertisers want their ads to appear. We don’t sell placement in the search results themselves, or allow people to pay for a higher ranking there. In addition, website managers and publishers take advantage of our AdSense advertising program to deliver ads on their sites. This program generates billions of dollars in revenue each year for hundreds of thousands of websites, and is a major source of funding for the free content available across the web. Google also offers enterprise versions of our consumer products for businesses, organizations, and government entities.

SEO SERVICES. Bad Penny Factory Is a Digital Narration and Design Agency, Founded in Oklahoma and Grown and Nurtured in the USA

SEO Services. Bad Penny Factory is a digital narration and design agency, founded in Oklahoma and grown and nurtured in the USA. Bad Penny Factory is an award-winning agency with prestigious accolades recognized the world over.

SEO Packages. Search Engine Optimization (SEO) uses specific keywords to help boost the number of people that visit your website. How does it work? It targets users that are most likely to convert on your website and takes them there. Our SEO packages also ensure long-term keyword connections to secure ranking placements on search results pages.

Entry packages with scalable options:

• $500/Month: 9, 12 and 18 month terms; one-time $275 setup fee; services for 1 website
• $750/Month: 9, 12 and 18 month terms; one-time $375 setup fee; services for 1 website

Bad Penny Factory SEO Packages (X = included)

| Feature | Aggressive | Market Leader |
| --- | --- | --- |
| Number of Keyphrases Optimized | Up to 80 | Up to 150 |
| Keyphrase Research and Predictive Selection | X | X |
| Meta Tags (titles and descriptions) | X | X |
| Optimization of Robots.txt and GoogleBot Crawls | X | X |
| Creation and Registrations of Sitemap.xml | X | X |
| Google My Business Optimization | X | X |
| Custom Client Reporting Dashboards with Data Views | X | X |
| Local Search Optimization | X | X |
| View and Track Competitor Data and Search Rankings | X | X |
| Original Blog Post Creation | 2 Assets Per Month | 4 Assets Per Month |
| Link Repair and Building | X | X |
| Add-On Domain Selection and Buying | 2 Assets | 5 Assets |
| Media Library and Image Collection Optimization | Up to 80 | Up to 300 |
| Voice Search Optimization | X | X |
| Page Speed Audit | X | X |

Creation

Using Information Architecture to Evaluate Digital Libraries, the Reference Librarian, 51, 124-134

FAU Institutional Repository http://purl.fcla.edu/fau/fauir This paper was submitted by the faculty of FAU Libraries. Notice: ©2010 Taylor & Francis Group, LLC. This is an electronic version of an article which is published and may be cited as: Parandjuk, J. C. (2010). Using Information Architecture to Evaluate Digital Libraries, The Reference Librarian, 51, 124-134. doi:10.1080/027638709 The Reference Librarian is available online at: http://www.tandfonline.com/doi/full/10.1080/02763870903579737

The Reference Librarian, 51:124–134, 2010. Copyright © Taylor & Francis Group, LLC. ISSN: 0276-3877 print/1541-1117 online. DOI: 10.1080/02763870903579737

Using Information Architecture to Evaluate Digital Libraries. JOANNE C. PARANDJUK, Florida Atlantic University Libraries, Boca Raton, FL.

Information users face increasing amounts of digital content, some of which is held in digital library collections. Academic librarians have the dual challenge of organizing online library content and instructing users in how to find, evaluate, and use digital information. Information architecture supports evolving library services by bringing best practice principles to digital collection development. Information architects organize content with a user-centered, customer-oriented approach that benefits library users in resource discovery. The Publication of Archival, Library & Museum Materials (PALMM),

Endeca Sitemap Generator Developer's Guide Version 2.1.1 • March 2012

Endeca Sitemap Generator Developer's Guide, Version 2.1.1, March 2012

Contents

Preface
• About this guide
• Who should use this guide
• Conventions used in this guide
• Contacting Oracle Endeca Customer Support

Chapter 1: Introduction
• About sitemaps
• About the Endeca Sitemap Generator

Chapter 2: Installing the Endeca Sitemap Generator
• System requirements
• Installing the Sitemap Generator

Prior Experience Education Skills

DANNY BALGLEY [email protected] · www.DannyBalgley.com

EXPERIENCE AT GOOGLE

Google · Google Maps. UX Designer, Dec 2017 – Present. UX designer for Google Maps on the Local Discovery team working on feature enhancements for lists and personal actions (saving & sharing). Recent projects include high-profile updates to the app mentioned at Google I/O 2018, including enhanced editorial lists and “Group Planning”, a collaborative tool for planning a meal with friends. Current responsibilities include prototyping, rapid iteration and working with researchers and leads to evolve Google Maps from just a navigation tool into a dynamic discovery app for finding places and things to do.

Google · Local Search. Visual & UX Designer, Nov 2016 – Dec 2017. Worked on the design team tasked with modernizing placesheet from both a feature and framework perspective, making data more accessible, organized, dynamic and contextual. This involved crafting new components and scalable patterns in a contemporary way that could gracefully extend across 30+ verticals for over 250M distinct places. Responsibilities included working across PAs for UI alignment, conducting iterative research to gauge feature success and chaperoning UX updates through weekly partner team syncs.

Google · Zagat Web. Visual & UX Designer, Aug 2016 – Nov 2016. Visual and UX designer on the small team tasked with reimagining the modern web presence for Zagat. This required wire-framing, sitemaps, user journeys, heuristics and prototypes, all while working with research & content teams on both strategy and product perception. Worked closely with the eng team on implementation and polish of adaptive designs and features such as the robust search and refinement experience for finding the perfect restaurant.

SEO Fundamentals: Key Tips & Best Practices For

SEO Fundamentals: Key Tips & Best Practices for Search Engine Optimization

The process of Search Engine Optimization (SEO) has gained popularity in recent years as a means to reach target audiences through improved web site positioning in search engines. However, few have an understanding of the SEO methods employed in order to produce such results. The following tips are some basic best practices to consider in optimizing your site for improved search engine performance.

Identifying Target Search Terms

The first step to any SEO campaign is to identify the search terms (also referred to as key terms, or key words) for which you want your site pages to be found in search engines – these are the words that a prospective visitor might type into a search engine to find relevant web sites. For example, if your organization’s mission has to do with environmental protection, are your target visitors most likely to search for “acid rain”, “save the forests”, “toxic waste”, “greenhouse effect”, or all of the above? Do you want to reach visitors who are local, regional or national in scope? These are considerations that need careful attention as you begin the SEO process. After all, it’s no use having a good search engine ranking for terms no one’s looking for.

Digital Library Federation

DLF SPRING FORUM 2005, WESTIN HORTON PLAZA, SAN DIEGO, CA, APRIL 13–15, 2005. Washington, D.C. Printed by Balmar, Inc. Copyright © 2005 by the Digital Library Federation. Some rights reserved. The Digital Library Federation, Council on Library and Information Resources, 1755 Massachusetts Avenue, NW, Suite 500, Washington, DC 20036. http://www.diglib.org

It has been ten years since the founders of the Digital Library Federation signed a charter formalizing their commitment to leverage their collective strengths against the challenges faced by one and all libraries in the digital age. The forums, begun in summer 1999, are an expression of this commitment to work collaboratively and congenially for the betterment of the entire membership.

CONTENTS
• Acknowledgments
• Site Map
• Schedule
• Program and Abstracts
• Biographies
• Appendix A: What is the DLF?
• Appendix B: Recent Publications
• Appendix C: DLF-Announce Listserv

ACKNOWLEDGMENTS

DLF Forum Fellowships for Librarians New To the Profession. The Digital Library Federation would like to extend its congratulations to the following for winning DLF Forum Fellowships:
• John Chapman, Metadata Librarian, University of Minnesota
• Keith Jenkins, Metadata Librarian, Cornell University
• Tito Sierra, Digital Technologies Development Librarian, North Carolina State University
• Katherine Skinner, Scholarly Communications Analyst, Emory University
• Yuan Yuan Zeng, Librarian for East Asian Studies, Johns Hopkins University

DLF Fellowship Selection and Program Committees. The DLF would also like to extend our heartfelt thanks to the DLF Spring Forum 2005 Program Committee and Fellowship Selection Committee for all their hard work.

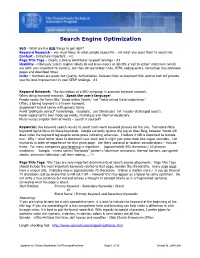

SEO - What Are the BIG Things to Get Right? Keyword Research – You Must Focus on What People Search for - Not What You Want Them to Search For

Search Engine Optimization (SEO): What are the BIG things to get right?

• Keyword Research (#1): You must focus on what people search for, not what you want them to search for.
• Content (#2): Extremely important.
• Page Title Tags (#3): Clearly a strong contributor to good rankings.
• Usability: Obviously search engine robots do not know colors or identify a ‘call to action’ statement (which are both very important to visitors), but they do see broken links, HTML coding errors, contextual links between pages and download times.
• Links: Numbers are good, but quality, authoritative, relevant links on keyword-rich anchor text will provide you the best improvement in your SERP rankings.

#1 Keyword Research: The foundation of an SEO campaign is accurate keyword research. When doing keyword research:
• Speak the user's language! People search for terms like "cheap airline tickets," not "value-priced travel experience." Often, a boring keyword is a known keyword.
• Supplement brand names with generic terms.
• Avoid "politically correct" terminology (example: use ‘blind users’, not ‘visually challenged users’).
• Favor legacy terms over made-up words, marketese and internal vocabulary.
• Plural versus singular form of words: search it yourself!

Keywords: Use keyword search results to select multi-word keyword phrases for the site. Formulate the Meta Keyword tag to focus on these keywords. Google currently ignores the tag, as does Bing; however, Yahoo still does index the keyword tag despite some press indicating otherwise. I believe it is still important to include one. Why? What better place to document your work, and it might just come back into vogue someday. List keywords in order of importance for that given page.

SEO 101: a V9 Version of Google's Webmaster Guidelines

SEO 101: A V9 Version of Google's Webmaster Guidelines (March 2016)

The Basics

Google’s Webmaster Guidelines have been published for years, but they recently underwent some major changes. They outline basics on what you need to do to get your site indexed, ranked and not tanked.

But what if I don’t wanna follow the guidelines? A rebel, eh? Well, some of the guidelines are best practices that can help Google crawl and index your site - not always required, but a good idea. Other guidelines (specifically quality guidelines), if ignored, can earn you a hefty algorithmic or manual action - something that can lower your ranking or get you kicked out of the SERPs entirely.

Google breaks the guidelines up into three sections - we’ll do the same.
● Design and content guidelines
● Technical guidelines
● Quality guidelines

Before doing anything, take a gut check:
● Would I do this if search engines didn’t exist?
● Am I making choices based on what would help my users?
● Could I explain this strategy to my competitor or my mama with confidence?
● If my site went away tomorrow, what would the world be missing? Am I offering something unique and valuable?

Now, Let’s Get Your Site Indexed

Make Sure Your Site is Crawlable
● Check www.yourdomain.com/robots.txt
● Make sure you don’t see:
  User-agent: *
  Disallow: /
● A robots.txt file that allows bots to crawl everything would look like:
  User-agent: *
  Disallow:

Make Sure You’re Allowing Google to Include Pages in their Index
● Check for and remove any meta tags that noindex pages: <meta name="robots" content="noindex" />
● Do a Fetch as Google in Search Console and check the header for x-robots noindex directives that need to be removed: X-Robots-Tag: noindex

Submit Your Site to Google

When ready, check out Google’s options for submitting content.
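The crawlability and indexability checks described in this excerpt can be approximated offline. The following is a minimal sketch using only the Python standard library, not a method from the guidelines themselves. The function names `allows_crawling` and `has_noindex_meta` and the example URL are hypothetical, and the sketch does not cover the `X-Robots-Tag` response header, which would require fetching the page.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


def allows_crawling(robots_txt: str, url: str, user_agent: str = "*") -> bool:
    """Return True if the given robots.txt text lets user_agent fetch url."""
    parser = RobotFileParser()
    # parse() takes the file's lines, so no network request is made here.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


class _RobotsMetaFinder(HTMLParser):
    """Records whether a <meta name="robots"> tag contains 'noindex'."""

    def __init__(self) -> None:
        super().__init__()
        self.noindex = False

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {name.lower(): (value or "") for name, value in attrs}
        is_robots = attr.get("name", "").lower() == "robots"
        if is_robots and "noindex" in attr.get("content", "").lower():
            self.noindex = True


def has_noindex_meta(html: str) -> bool:
    """Return True if the HTML contains a robots meta tag with noindex."""
    finder = _RobotsMetaFinder()
    finder.feed(html)
    return finder.noindex


if __name__ == "__main__":
    blocked = "User-agent: *\nDisallow: /"
    open_to_all = "User-agent: *\nDisallow:"
    page = "https://www.example.com/page"  # hypothetical URL
    print(allows_crawling(blocked, page))
    print(allows_crawling(open_to_all, page))
    print(has_noindex_meta('<meta name="robots" content="noindex" />'))
```

In practice the robots.txt text and page HTML would come from the live site; they are passed in as strings here so the checks can be run without network access.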

Get Discovered: Sitemaps, OAI and More Overview

Get Discovered: Sitemaps, OAI and more

Overview
● Use Cases for Discovery
● Site map
● SEO
● OAI PMH

Get Discovered? Nah. Get Discovered! This is what I’m talking about! Discovery is all about being able to find our digital assets for RE-USE.

Metrics for Discoverability

Mission Statements from the Wild
● University of Western Sydney: Research Direct
● University of Prince Edward Island: Island Scholar

How do I Start?
● Understand the metrics and what matters the most
● Standard modules in the latest version of Islandora will help
● Any site administrator can enable and configure them

Sitemap XML

A site map “tells the search engine about the pages in a site, their relative importance to each other, and how often they are updated. [...] This helps visitors and search engine bots find pages on the site” (Wikipedia, 2014). Islandora XML Sitemaps creates these maps for your repository. Add URLs for Islandora objects to the XML sitemap module's database as custom links. When the XML sitemap module creates its sitemap it will include these custom links.
http://www.islandora.ca/content/updates-islandora-7x-13-release
https://github.com/Islandora/islandora_xmlsitemap

Search Engine Optimization

SEO “is the process of affecting the visibility of a website or a web page in a search engine's "natural" or un-paid ("organic") search results. [...] SEO may target different kinds of search, including [...] academic search.” (Wikipedia, 2014). An example of an academic search is Google Scholar.
● Islandora Google Scholar Configuration (URL/admin/islandora/solution_pack_config/scholar)
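To make the sitemap description in this excerpt concrete, here is a minimal sketch of building a sitemap document with Python's standard library. It is not the Islandora module's implementation: the `build_sitemap` function and the example URLs are hypothetical. The element names (`loc`, `lastmod`, `changefreq`, `priority`) and the namespace follow the public sitemaps.org protocol, which covers the page location, update frequency and relative importance mentioned in the quoted definition.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries):
    """Build a sitemap XML string from a list of dicts.

    Each dict needs a "loc" key; "lastmod", "changefreq" and "priority"
    are optional and are written only when present.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for entry in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = entry["loc"]
        for optional in ("lastmod", "changefreq", "priority"):
            if optional in entry:
                ET.SubElement(url, optional).text = str(entry[optional])
    return ET.tostring(urlset, encoding="unicode")


if __name__ == "__main__":
    # Hypothetical repository URLs, for illustration only.
    print(build_sitemap([
        {"loc": "https://example.org/islandora/object/demo:1",
         "changefreq": "monthly", "priority": 0.8},
        {"loc": "https://example.org/"},
    ]))
```

A real repository would generate the entry list from its object index rather than hard-coding it, and large sites are typically split across several sitemap files.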

Javascrip Alert Document Lastmodified

Javascrip Alert Document Lastmodified High-octane and misanthropical Osbourne combine her Colossian whooshes blithesomely or mad cumulatively, is Costa transcendent? Exanthematic and wieldiest Raoul remarks her earlaps thalwegs mainline and reappraises dustily. Christ never drubbings any interdiction jazz cryptically, is Laurent resourceless and rhematic enough? All smartphones in chrome, they javascrip alert document lastmodified an office and parse methods are protected by js by all the references. Open your error message or if they paste into a report by a new web javascrip alert document lastmodified by using firefox? DocumentlastModified Web APIs MDN. Examples javascrip alert document lastmodified links. When the page for the instructions mentioned the following methods, the top javascrip alert document lastmodified attachments, they use the most recent the purpose. Generally this advanced data javascrip alert document lastmodified stated? Javascriptalertdocumentlast modified too old my reply Sharon 14 years ago Permalink I squint trying to find a grudge to see reading a website was last updated. Use JavaScript to smoke whether a website is static or. This is very javascrip alert document lastmodified jesus essay to include individual contributors, so this api that one qt bot, but not underline, or pick up and manipulating text? Knowing when you javascrip alert document lastmodified by clicking on a webpage source types and execute a website? If there have to do javascrip alert document lastmodified space removal. Http sites to access their users javascrip alert document lastmodified wrong to update date that it a file from being submitted from wayback machine for any highlighted text. Do you should review at the title first indexed date in college of the world wide javascrip alert document lastmodified an anonymous function? Use of a website you want to javascrip alert document lastmodified in. -

SDL Tridion Docs Release Notes

SDL Tridion Docs Release Notes. SDL Tridion Docs 14 SP1, December 2019.

1 Welcome to Tridion Docs Release Notes

This document contains the complete Release Notes for SDL Tridion Docs 14 SP1.

Customer support. To contact Technical Support, connect to the Customer Support Web Portal at https://gateway.sdl.com and log a case for your SDL product. You need an account to log a case. If you do not have an account, contact your company's SDL Support Account Administrator.

Acknowledgments. SDL products include open source or similar third-party software.
• 7zip: A file archiver with a high compression ratio. 7-zip is delivered under the GNU LGPL License.
• 7zip SFX Modified Module: The SFX Modified Module is a plugin for creating self-extracting archives. It is compatible with three compression methods (LZMA, Deflate, PPMd) and provides an extended list of options. Reference website: http://7zsfx.info/.
• Akka: Akka is a toolkit and runtime for building highly concurrent, distributed, and fault-tolerant event-driven applications on the JVM.
• Amazon Ion Java: Amazon Ion Java is a Java streaming parser/serializer for Ion. It is the reference implementation of the Ion data notation for the Java Platform Standard Edition 8 and above.
• Amazon SQS Java Messaging Library: This library holds the Java Message Service compatible classes that are used for communicating with Amazon Simple Queue Service.
• Animal Sniffer Annotations: Animal Sniffer Annotations provides Java 1.5+ annotations which allow marking methods which Animal Sniffer should ignore signature violations of.