A Quantum Survey

Recommended publications

Simulating Quantum Field Theory with a Quantum Computer

John Preskill, Lattice 2018, 28 July 2018.

This talk has two parts: (1) near-term prospects for quantum computing; (2) opportunities in quantum simulation of quantum field theory.

Exascale digital computers will advance our knowledge of QCD, but some challenges will remain, especially concerning real-time evolution and properties of nuclear matter and quark-gluon plasma at nonzero temperature and chemical potential. Digital computers may never be able to address these (and other) problems; quantum computers will solve them eventually, though I’m not sure when. The physics payoff may still be far away, but today’s research can hasten the arrival of a new era in which quantum simulation fuels progress in fundamental physics.

Frontiers of Physics

- Short distance: Higgs boson, neutrino masses, supersymmetry, quantum gravity, string theory.
- Long distance: large scale structure, cosmic microwave background, dark matter, dark energy, gravitational waves.
- Complexity: “More is different”, many-body entanglement, phases of quantum matter, quantum computing, quantum spacetime.

A quantum computer can simulate efficiently any physical process that occurs in Nature. (Maybe. We don’t actually know for sure.) Pictured examples: particle collision, molecular chemistry, entangled electrons, superconductor, black hole, early universe.

Two fundamental ideas

(1) Quantum complexity: why we think quantum computing is powerful.
(2) Quantum error correction: why we think quantum computing is scalable.

A complete description of a typical quantum state of just 300 qubits requires more bits than the number of atoms in the visible universe.

Why we think quantum computing is powerful

We know examples of problems that can be solved efficiently by a quantum computer, where we believe the problems are hard for classical computers.
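The 300-qubit statement in the excerpt above can be checked with a few lines of integer arithmetic. The sketch below assumes that a general n-qubit pure state needs one amplitude per basis state (2^n of them, each taking at least one bit to store) and uses the common order-of-magnitude estimate of 10^80 atoms in the visible universe; both the function name and that estimate are illustrative assumptions, not figures from the talk.

```python
# Rough check: amplitudes in an n-qubit state vs. atoms in the visible universe.

ATOMS_IN_VISIBLE_UNIVERSE = 10 ** 80  # assumed order-of-magnitude estimate


def amplitude_count(n_qubits: int) -> int:
    """Number of complex amplitudes in a general n-qubit pure state (2**n)."""
    return 2 ** n_qubits


n = 300
amplitudes = amplitude_count(n)

# Each amplitude needs at least one bit, so this is a lower bound on the bits.
print(f"2^{n} has {len(str(amplitudes))} decimal digits")
print("exceeds assumed atom count:", amplitudes > ATOMS_IN_VISIBLE_UNIVERSE)
```

Python integers have arbitrary precision, so the comparison is exact and does not rely on floating-point rounding.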

From Fantasy to Reality: Quantum Computing Is Coming to the Marketplace

Signals for Strategists. By David Schatsky and Ramya Kunnath Puliyakodil.

Introduction: A new way to solve computationally intensive problems

Granted, quantum computing is hard to explain. But that hasn’t stopped the fantastical technology from attracting billions of dollars of R&D investment, catching the eye of venture capital firms, and spurring research programs at big tech companies and enterprises. Some companies are getting a head start on applying quantum technology to computationally intensive problems in finance, risk management, cybersecurity, materials science, energy, and logistics.

Signals

- In the last three years, venture capital investors have placed $147 million with quantum computing start-ups; governments globally have provided $2.2 billion in support to researchers [1]
- Some of the world’s leading tech companies have active quantum computing programs
- Financial services, aerospace and defense, and public sector organizations are researching quantum computing applications
- Quantum computer maker D-Wave Systems announced the general availability of its next-generation computer, along with a first customer for the new system [2]
- VC firm Andreessen Horowitz has signaled its intention to fund quantum computing start-ups [3]
- Standards-setting bodies are seeking a transition

[…] particles (that is, objects smaller than atoms). For instance, electrons can exist in multiple distinct states at the same time, a phenomenon known as superposition. And it’s impossible to know for sure at any given instant what state an electron may be in, because the very act of observing the state changes it.

Quantum Machine Learning: Benefits and Practical Examples

Frank Phillipson (ORCID 0000-0003-4580-7521), TNO, Anna van Buerenplein 1, 2595 DA Den Haag, The Netherlands, [email protected]

Abstract. A quantum computer that is useful in practice is expected to be developed in the next few years. An important application is expected to be machine learning, where benefits are expected in run time, capacity and learning efficiency. In this paper, these benefits are presented, and for each benefit an example application is given. A quantum hybrid Helmholtz machine uses quantum sampling to improve run time, a quantum Hopfield neural network shows an improved capacity, and a variational quantum circuit based neural network is expected to deliver a higher learning efficiency.

Keywords: Quantum Machine Learning, Quantum Computing, Near Future Quantum Applications.

1 Introduction

Quantum computers make use of quantum-mechanical phenomena, such as superposition and entanglement, to perform operations on data [1]. Where classical computers require the data to be encoded into binary digits (bits), each of which is always in one of two definite states (0 or 1), quantum computation uses quantum bits, which can be in superpositions of states. These computers would theoretically be able to solve certain problems much more quickly than any classical computer that uses even the best currently known algorithms. Examples are integer factorization using Shor's algorithm or the simulation of quantum many-body systems. This benefit is also called ‘quantum supremacy’ [2], which only recently has been claimed for the first time [3]. There are two different quantum computing paradigms.

COVID-19 Detection on IBM Quantum Computer with Classical-Quantum Transfer Learning

medRxiv preprint doi: https://doi.org/10.1101/2020.11.07.20227306; this version posted November 10, 2020. The copyright holder for this preprint (which was not certified by peer review) is the author/funder, who has granted medRxiv a license to display the preprint in perpetuity. It is made available under a CC-BY-NC-ND 4.0 International license.

Turk J Elec Eng & Comp Sci, © TÜBİTAK, doi:10.3906/elk-

Erdi ACAR1*, İhsan YILMAZ2
1 Department of Computer Engineering, Institute of Science, Çanakkale Onsekiz Mart University, Çanakkale, Turkey
2 Department of Computer Engineering, Faculty of Engineering, Çanakkale Onsekiz Mart University, Çanakkale, Turkey

Abstract: Diagnosing infected patients as soon as possible during the coronavirus 2019 (COVID-19) outbreak, which the World Health Organization (WHO) has declared a pandemic, is extremely important. Experts recommend CT imaging as a diagnostic tool because of the weak points of the nucleic acid amplification test (NAAT). In this study, the detection of COVID-19 from CT images, which give the most accurate response in a short time, was investigated on classical computers and, for the first time, on quantum computers. Using the quantum transfer learning method, we experimentally perform COVID-19 detection on different real quantum processors of IBM (IBMQx2, IBMQ-London and IBMQ-Rome), as well as on different simulators (Pennylane, Qiskit-Aer and Cirq). Using a small data set of 126 COVID-19 and 100 Normal CT images, we obtained a positive or negative classification of COVID-19 with 90% success on classical computers, while we achieved a high success rate of 94-100% on quantum computers.

Quantum Inductive Learning and Quantum Logic Synthesis

Portland State University, PDXScholar, Dissertations and Theses, 2009.

Quantum Inductive Learning and Quantum Logic Synthesis. Martin Lukac, Portland State University. Part of the Electrical and Computer Engineering Commons.

Recommended Citation: Lukac, Martin, "Quantum Inductive Learning and Quantum Logic Synthesis" (2009). Dissertations and Theses. Paper 2319. https://doi.org/10.15760/etd.2316

QUANTUM INDUCTIVE LEARNING AND QUANTUM LOGIC SYNTHESIS, by MARTIN LUKAC. A dissertation submitted in partial fulfillment of the requirements for the degree of DOCTOR OF PHILOSOPHY in ELECTRICAL AND COMPUTER ENGINEERING. Portland State University, 2009.

DISSERTATION APPROVAL. The abstract and dissertation of Martin Lukac for the Doctor of Philosophy in Electrical and Computer Engineering were presented January 9, 2009, and accepted by the dissertation committee and the doctoral program.

COMMITTEE APPROVALS: Irek Perkowski, Chair; Garrison Greenwood; George Lendaris; [illegible]; Steven Bleiler, Representative of the Office of Graduate Studies.

DOCTORAL PROGRAM APPROVAL: Malgorza[…]ska-Jeske, Director, Electrical and Computer Engineering Ph.D. Program.

ABSTRACT. An abstract of the dissertation of Martin Lukac for the Doctor of Philosophy in Electrical and Computer Engineering presented January 9, 2009. Title: Quantum Inductive Learning and Quantum Logic Synthesis.

Since Quantum Computer is almost realizable on large scale and Quantum Technology is one of the main solutions to the Moore Limit, Quantum Logic Synthesis (QLS) has become a required theory and tool for designing Quantum Logic Circuits.

Boston Consulting Group (BCG) Is a Global Management Consulting Firm and the World’s Leading Advisor on Business Strategy

The Next Decade in Quantum Computing—and How to Play

Boston Consulting Group (BCG) is a global management consulting firm and the world’s leading advisor on business strategy. We partner with clients from the private, public, and not-for-profit sectors in all regions to identify their highest-value opportunities, address their most critical challenges, and transform their enterprises. Our customized approach combines deep insight into the dynamics of companies and markets with close collaboration at all levels of the client organization. This ensures that our clients achieve sustainable competitive advantage, build more capable organizations, and secure lasting results. Founded in 1963, BCG is a private company with offices in more than 90 cities in 50 countries. For more information, please visit bcg.com.

Philipp Gerbert, Frank Ruess. November 2018, Boston Consulting Group.

CONTENTS

- Introduction
- How Quantum Computers Are Different, and Why It Matters
- The Emerging Quantum Computing Ecosystem: Tech Companies; Applications and Users
- Investments, Publications, and Intellectual Property
- A Brief Tour of Quantum Computing Technologies: Criteria for Assessment; Current Technologies; Other Promising Technologies; Odd Man Out
- Simplifying the Quantum Algorithm Zoo
- How to Play the Next Five Years and Beyond: Determining Timing and Engagement; The Current State of Play
- A Potential Quantum Winter, and the Opportunity Therein
- For Further Reading
- Note to the Reader

INTRODUCTION

The experts are convinced that in time they can build a high-performance quantum computer.

Nearest Centroid Classification on a Trapped Ion Quantum Computer

www.nature.com/npjqi. Article, open access.

Sonika Johri1, Shantanu Debnath1, Avinash Mocherla2,3,4, Alexandros SINGK2,3,5, Anupam Prakash2,3, Jungsang Kim1 and Iordanis Kerenidis2,3,6

Quantum machine learning has seen considerable theoretical and practical developments in recent years and has become a promising area for finding real world applications of quantum computers. In pursuit of this goal, here we combine state-of-the-art algorithms and quantum hardware to provide an experimental demonstration of a quantum machine learning application with provable guarantees for its performance and efficiency. In particular, we design a quantum Nearest Centroid classifier, using techniques for efficiently loading classical data into quantum states and performing distance estimations, and experimentally demonstrate it on an 11-qubit trapped-ion quantum machine, matching the accuracy of classical nearest centroid classifiers for the MNIST handwritten digits dataset and achieving up to 100% accuracy for 8-dimensional synthetic data.

npj Quantum Information (2021) 7:122; https://doi.org/10.1038/s41534-021-00456-5

INTRODUCTION

Quantum technologies promise to revolutionize the future of information and communication, in the form of quantum computing devices able to communicate and process massive amounts of data both efficiently and securely using quantum resources. Tremendous progress is continuously being made both […]

[…] Thus, one might hope that noisy quantum computers are inherently better suited for machine learning computations than for other types of problems that need precise computations like factoring or search problems. However, there are significant challenges to be overcome to make QML practical.
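For readers unfamiliar with the classical baseline named in the abstract above, a nearest centroid classifier can be sketched in a few lines. This is a plain classical illustration under simple assumptions (Euclidean distance, toy 2-dimensional data invented for the example); it is not the quantum data-loading or distance-estimation procedure of the paper, and the function names are hypothetical.

```python
from math import dist  # Euclidean distance, available in Python 3.8+


def fit_centroids(points, labels):
    """Return {label: centroid}, each centroid being the mean of that label's points."""
    sums, counts = {}, {}
    for point, label in zip(points, labels):
        if label not in sums:
            sums[label] = [0.0] * len(point)
            counts[label] = 0
        sums[label] = [s + x for s, x in zip(sums[label], point)]
        counts[label] += 1
    return {label: [s / counts[label] for s in sums[label]] for label in sums}


def predict(centroids, point):
    """Assign the label whose centroid is closest to the given point."""
    return min(centroids, key=lambda label: dist(centroids[label], point))


# Toy training data (made up for illustration only).
train_points = [(0.0, 0.0), (0.0, 1.0), (4.0, 4.0), (5.0, 4.0)]
train_labels = ["a", "a", "b", "b"]

centroids = fit_centroids(train_points, train_labels)
print(predict(centroids, (1.0, 1.0)))
print(predict(centroids, (4.0, 3.0)))
```

The only expensive step at prediction time is the distance from the query point to each centroid, which is the step the abstract describes estimating on quantum hardware.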

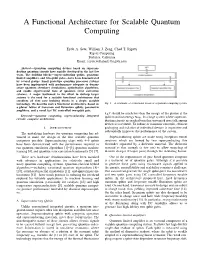

A Functional Architecture for Scalable Quantum Computing

Eyob A. Sete, William J. Zeng, Chad T. Rigetti. Rigetti Computing, Berkeley, California. Email: feyob,will,[email protected]

Abstract: Quantum computing devices based on superconducting quantum circuits have rapidly developed in the last few years. The building blocks (superconducting qubits, quantum-limited amplifiers, and two-qubit gates) have been demonstrated by several groups. Small prototype quantum processor systems have been implemented with performance adequate to demonstrate quantum chemistry simulations, optimization algorithms, and enable experimental tests of quantum error correction schemes. A major bottleneck in the effort to develop larger systems is the need for a scalable functional architecture that combines all the core building blocks in a single, scalable technology. We describe such a functional architecture, based on a planar lattice of transmon and fluxonium qubits, parametric amplifiers, and a novel fast DC controlled two-qubit gate.

Keywords: quantum computing, superconducting integrated circuits, computer architecture.

I. INTRODUCTION

The underlying hardware for quantum computing has advanced to make the design of the first scalable quantum computers possible. Superconducting chips with 4–9 qubits have been demonstrated with the performance required to […]

Fig. 1. A schematic of a functional layout of a quantum computing system.

[…] $k_B T$ should be much less than the energy of the photon at the qubit transition energy $\hbar\omega_{01}$. In a large system where superconducting circuits are packed together, unwanted crosstalk among devices is inevitable. To reduce or minimize crosstalk, efficient packaging and isolation of individual devices is imperative and substantially improves the performance of the system.

Superconducting qubits are made using Josephson tunnel junctions which are formed by two superconducting thin electrodes separated by a dielectric material.
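The thermal condition quoted in the excerpt above (thermal energy much smaller than the qubit transition energy) can be made concrete with a short calculation. The sketch below is only an illustration: the 5 GHz transition frequency and the 20 mK and 300 K temperatures are assumed example values, not numbers from the paper, and the qubit is idealized as a two-level system in thermal equilibrium.

```python
from math import exp

PLANCK_H = 6.62607015e-34    # Planck constant in J*s (exact SI value)
BOLTZMANN_K = 1.380649e-23   # Boltzmann constant in J/K (exact SI value)


def thermal_ratio(freq_hz: float, temp_k: float) -> float:
    """Return (h * f) / (k_B * T); large values mean thermal energy is negligible."""
    return (PLANCK_H * freq_hz) / (BOLTZMANN_K * temp_k)


def excited_population(freq_hz: float, temp_k: float) -> float:
    """Equilibrium excited-state population of an ideal two-level system."""
    return 1.0 / (1.0 + exp(thermal_ratio(freq_hz, temp_k)))


qubit_freq_hz = 5e9  # assumed example transition frequency

for temp_k in (0.02, 300.0):  # assumed example temperatures in kelvin
    ratio = thermal_ratio(qubit_freq_hz, temp_k)
    population = excited_population(qubit_freq_hz, temp_k)
    print(f"T = {temp_k} K: hf/kT = {ratio:.3g}, excited population = {population:.3g}")
```

Under these assumptions the ratio is about 12 at 20 mK and far below 1 at room temperature, which is why such devices are operated at millikelvin temperatures.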

Explorations in Quantum Neural Networks with Intermediate Measurements

ESANN 2020 proceedings, European Symposium on Artificial Neural Networks, Computational Intelligence and Machine Learning. Online event, 2-4 October 2020, i6doc.com publ., ISBN 978-2-87587-074-2. Available from http://www.i6doc.com/en/.

Lukas Franken and Bogdan Georgiev. Fraunhofer IAIS - Research Center for ML and ML2R, Schloss Birlinghoven - 53757 Sankt Augustin.

Abstract. In this short note we explore a few quantum circuits with the particular goal of basic image recognition. The models we study are inspired by recent progress in Quantum Convolution Neural Networks (QCNN) [12]. We present a few experimental results, where we attempt to learn basic image patterns motivated by scaling down the MNIST dataset.

1 Introduction

The recent demonstration of Quantum Supremacy [1] heralds the advent of the Noisy Intermediate-Scale Quantum (NISQ) [2] technology, where signs of superiority of quantum over classical machines in particular tasks may be expected. However, one should keep in mind the limitations of NISQ-devices when studying and developing quantum-algorithmic solutions - among other things, these include limits on the number of gates and qubits.

At the same time the interaction of quantum computing and machine learning is growing, with a vast amount of literature and new results. To name a few applications, the well-known HHL algorithm [3], quantum phase estimation [5] and inner products speed-up techniques lead to further advances in Support Vector Machines [4] and Principal Component Analysis [6, 7]. Intensive progress and ongoing research has also been made towards quantum analogues of Neural Networks (QNN) [8, 9, 10].

Quantum Magnonics with a Macroscopic Ferromagnetic Sphere

Yasunobu Nakamura. Superconducting Quantum Electronics Team, Center for Emergent Matter Science (CEMS), RIKEN; Research Center for Advanced Science and Technology (RCAST), The University of Tokyo. Quantum Information Physics & Engineering Lab @ UTokyo, http://www.qc.rcast.u-tokyo.ac.jp/

The magnonists: Ryosuke Mori, Seiichiro Ishino, Dany Lachance-Quirion (Sherbrooke), Yutaka Tabuchi, Alto Osada, Koji Usami, Ryusuke Hisatomi.

Quantum mechanics in macroscopic scale: quantum state control of collective excitation modes in solids.

[Diagram labels: microscopic, macroscopic; ordered phase, broken symmetry; superconductivity, charge, surface plasmon polariton, photon, spin, atom, magnon, phonon, ferromagnet, lattice order.]

- Spatially-extended rigid mode
- Large transition moment

Superconducting qubit – nonlinear resonator: LC resonator; Josephson junction resonator. Josephson junction = nonlinear inductor; anharmonicity ⇒ effective two-level system. Energy: inductive energy = confinement potential; charging energy = kinetic energy ⇒ quantized states.

Quantum interface between microwave and light

- Quantum repeater
- Quantum computing network

Hybrid quantum systems

[Diagram labels: superconducting quantum electronics, superconducting circuits, nonlinearity, surface plasmon polariton, quantum magnonics, quantum nanomechanics, microwave, ferromagnet, nanomechanics, magnon, phonon, light, quantum surface acoustics.]

Quantum magnonics: hybrid with paramagnetic spin ensembles. Spin ensemble of NV-centers in diamond: Kubo et al. PRL 107, 220501 (2011), CEA Saclay; Zhu et

Experimental Kernel-Based Quantum Machine Learning in Finite Feature Space

www.nature.com/scientificreports. Open access.

Karol Bartkiewicz1,2*, Clemens Gneiting3, Antonín Černoch2*, Kateřina Jiráková2, Karel Lemr2* & Franco Nori3,4

We implement an all-optical setup demonstrating kernel-based quantum machine learning for two-dimensional classification problems. In this hybrid approach, kernel evaluations are outsourced to projective measurements on suitably designed quantum states encoding the training data, while the model training is processed on a classical computer. Our two-photon proposal encodes data points in a discrete, eight-dimensional feature Hilbert space. In order to maximize the application range of the deployable kernels, we optimize feature maps towards the resulting kernels’ ability to separate points, i.e., their “resolution,” under the constraint of finite, fixed Hilbert space dimension. Implementing these kernels, our setup delivers viable decision boundaries for standard nonlinear supervised classification tasks in feature space. We demonstrate such kernel-based quantum machine learning using specialized multiphoton quantum optical circuits. The deployed kernel exhibits exponentially better scaling in the required number of qubits than a direct generalization of kernels described in the literature.

Many contemporary computational problems (like drug design, traffic control, logistics, automatic driving, stock market analysis, automatic medical examination, material engineering, and others) routinely require optimization over huge amounts of data [1]. While these highly demanding problems can often be approached by suitable machine learning (ML) algorithms, in many relevant cases the underlying calculations would last prohibitively long. Quantum ML (QML) comes with the promise to run these computations more efficiently (in some cases exponentially faster) by complementing ML algorithms with quantum resources.

Power of Data in Quantum Machine Learning (arXiv:2011.01938v2 [quant-ph], 10 Feb 2021)

Power of data in quantum machine learning

Hsin-Yuan Huang,1,2,3 Michael Broughton,1 Masoud Mohseni,1 Ryan Babbush,1 Sergio Boixo,1 Hartmut Neven,1 and Jarrod R. McClean1,*
1 Google Research, 340 Main Street, Venice, CA 90291, USA
2 Institute for Quantum Information and Matter, Caltech, Pasadena, CA, USA
3 Department of Computing and Mathematical Sciences, Caltech, Pasadena, CA, USA
(Dated: February 12, 2021)

The use of quantum computing for machine learning is among the most exciting prospective applications of quantum technologies. However, machine learning tasks where data is provided can be considerably different than commonly studied computational tasks. In this work, we show that some problems that are classically hard to compute can be easily predicted by classical machines learning from data. Using rigorous prediction error bounds as a foundation, we develop a methodology for assessing potential quantum advantage in learning tasks. The bounds are tight asymptotically and empirically predictive for a wide range of learning models. These constructions explain numerical results showing that with the help of data, classical machine learning models can be competitive with quantum models even if they are tailored to quantum problems. We then propose a projected quantum model that provides a simple and rigorous quantum speed-up for a learning problem in the fault-tolerant regime. For near-term implementations, we demonstrate a significant prediction advantage over some classical models on engineered data sets designed to demonstrate a maximal quantum advantage in one of the largest numerical tests for gate-based quantum machine learning to date, up to 30 qubits.