Collective Multi-Type Entity Alignment Between Knowledge Graphs

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

The New Yorker, March 9, 2015

PRICE $7.99 MAR. 9, 2015 MARCH 9, 2015 7 GOINGS ON ABOUT TOWN 27 THE TALK OF THE TOWN Jeffrey Toobin on the cynical health-care case; ISIS in Brooklyn; Imagine Dragons; Knicks knocks; James Surowiecki on Greece. Peter Hessler 34 TRAVELS WITH MY CENSOR In Beijing for a book tour. paul Rudnick 41 TEST YOUR KNOWLEDGE OF SEXUAL DIFFERENCE JOHN MCPHEE 42 FRAME OF REFERENCE What if someone hasn’t heard of Scarsdale? ERIC SCHLOSSER 46 BREAK-IN AT Y-12 How pacifists exposed a nuclear vulnerability. Saul Leiter 70 HIDDEN DEPTHS Found photographs. FICTION stephen king 76 “A DEATH” THE CRITICS A CRITIC AT LARGE KELEFA SANNEH 82 The New York hardcore scene. BOOKS KATHRYN SCHULZ 90 “H Is for Hawk.” 95 Briefly Noted ON TELEVISION emily nussbaum 96 “Fresh Off the Boat,” “Black-ish.” THE THEATRE HILTON ALS 98 “Hamilton.” THE CURRENT CINEMA ANTHONY LANE 100 “Maps to the Stars,” “ ’71.” POEMS WILL EAVES 38 “A Ship’s Whistle” Philip Levine 62 “More Than You Gave” Birgit Schössow COVER “Flatiron Icebreaker” DRAWINGS Charlie Hankin, Zachary Kanin, Liana Finck, David Sipress, J. C. Duffy, Drew Dernavich, Matthew Stiles Davis, Michael Crawford, Edward Steed, Benjamin Schwartz, Alex Gregory, Roz Chast, Bruce Eric Kaplan, Jack Ziegler, David Borchart, Barbara Smaller, Kaamran Hafeez, Paul Noth, Jason Adam Katzenstein SPOTS Guido Scarabottolo 2 THE NEW YORKER, MARCH 9, 2015 CONTRIBUTORS eric schlosser (“BREAK-IN AT Y-12,” P. 46) is the author of “Fast Food Nation” and “Command and Control: Nuclear Weapons, the Damascus Accident, and the Illusion of Safety.” jeFFrey toobin (COMMENT, P. -

Rockschool Male Vocals Grade 3

Introductions & Information Title Page Male Vocals Grade 3 Performance pieces, technical exercises and in-depth guidance for Rockschool examinations www.rslawards.com Acknowledgements Acknowledgements Published by Rockschool Ltd. © 2014 under license from Music Sales Ltd. Catalogue Number RSK091411R ISBN: 978-1-912352-33-3 This edition published July 2017 | Errata details can be found at www.rslawards.com AUDIO Backing tracks produced by Music Sales Limited Supporting test backing tracks recorded by Jon Musgrave, Jon Bishop and Duncan Jordan Supporting test vocals recorded by Duncan Jordan Supporting tests mixed at Langlei Studios by Duncan Jordan Mastered by Duncan Jordan MUSICIANS Neal Andrews, Lucie Burns (Lazy Hammock), Jodie Davies,Tenisha Edwards, Noam Lederman, Beth Loates-Taylor, Dave Marks, Salena Mastroianni, Paul Miro, Ryan Moore, Jon Musgrave, Chris Smart, Ross Stanley, T-Jay, Stacy Taylor, Daniel Walker PUBLISHING Compiled and edited by James Uings, Simon Troup, Stephen Lawson and Stuart Slater Internal design and layout by Simon and Jennie Troup, Digital Music Art Cover designed by Philip Millard, Philip Millard Design Fact Files written by Stephen Lawson, Owen Bailey and Michael Leonard Additional proofing by Chris Bird, Ronan Macdonald, Jonathan Preiss and Becky Baldwin Cover photography © JSN Photography / WireImage / Getty Images Full transcriptions by Music Sales Ltd. SYLLABUS Vocal specialists: Martin Hibbert and Eva Brandt Additional consultation: Emily Nash, Stuart Slater and Sarah Page Supporting tests composition: -

Imagine Dragons Announces 13 Summer Tour Dates Due to Overwhelming Demand for Sold out Spring Tour

IMAGINE DRAGONS ANNOUNCES 13 SUMMER TOUR DATES DUE TO OVERWHELMING DEMAND FOR SOLD OUT SPRING TOUR LOS ANGELES, CA (February 22, 2013) - Imagine Dragons, one of the biggest break-out rock bands of 2012, has announced 13 additional summer tour dates due to overwhelming demand. The summer tour announcement comes on the heels of the band's first ever headlining club & theater tour, which sold out quickly with many dates selling out the same day tickets went on sale. Tickets to their summer tour go on sale to the public beginning Friday, March 1st at LiveNation.com. Beginning today fans can click here or visit http://on.fb.me/XDgEng to RSVP for early access to presale tickets available on Thursday, February 28th. Citi® cardmembers will have access to presale tickets beginning Tuesday, February 26th at 10 a.m. local time through Citi's Private Pass® Program. For complete presale details visit www.citiprivatepass.com. Live Nation mobile app users will have access to presale tickets beginning Thursday, February 28th. Mobile users can text “LNAPP” to 404040 to download the Live Nation mobile app. (Available for iOS and Android.) The summer leg of the Imagine Dragons tour will begin on Tuesday, May 7th at the Time Warner Cable Arena in Charlotte, North Carolina with dates through June 3rd at the Comerica Theater in Phoenix, Arizona. The current spring tour kicked-off on February 8th at The Marquee in Tempe, Arizona and will visit 29 Cities, wrapping March 23rd at the Fillmore Auditorium in Denver, Colorado. Imagine Dragons are touring in support of their full-length debut gold album Night Visions (KIDinaKORNER/Interscope Records) which debuted at No. -

Dan Reynolds

14 Friday, July 14, 2017 Kim Kardashian West @KimKardashian OMG you guys!!! Check my snap chats or insta stories I’m crying!!! That was not candy on my table! The table was marble this whole time! Los Angeles ctress Amiah Miller, who can Manchester be seen in the upcoming “War inger Ariana Grande has for the Planet of the Apes”, been made an honorary Ahas joined the cast of fantasy-comedy citizen of Manchester for “Anastasia” opposite Emily Carey and Sorganising a concert to raise Aliyah Moulden. money for the victims of the Miller, who plays the Nova role as Manchester Arena terror a mute orphan in the latest “Apes” attack. movie, will play a young American who The 23-year-old was the befriends Anastasia in the re-telling of subject of an unanimous the classic story, reports variety.com. vote at a meeting to decide Set in 1917, “Anastasia” sees Anastasia the award and was praised for Romanov escape through a time portal returning to the city for the event when her family is threatened by just two weeks after the attack took Vladimir Lenin, and finds herself in the place, reports independent.co.uk. year 1988. “I don’t know what to say. Words Blake Harris is directing the film, don’t suffice. I’m moved and honoured. which begins production next month in My heart is very much still there. I love Portugal. (IANS) you. Thank you,” Grande wrote on Instagram. In total, 22 people were killed and dozens more were injured after a lone suicide bomber detonated a device inside the foyer at the arena, shortly after Grande’s May 22 concert had finished. -

IMAGINE DRAGONS LYRICS - on Top of the World

8/24/2014 IMAGINE DRAGONS LYRICS - On Top Of The World "On Top Of The World" lyrics IMAGINE DRAGONS LYRICS "On Top Of The World" If you love somebody Better tell them while they’re here ’cause They just may run away from you You’ll never know quite when, well Then again it just depends on How long of time is left for you I’ve had the highest mountains I’ve had the deepest rivers You can have it all but life keeps moving I take it in but don’t look down ‘Cause I’m on top of the world, ‘ay I’m on top of the world, ‘ay Waiting on this for a while now Paying my dues to the dirt I’ve been waiting to smile, ‘ay Been holding it in for a while, ‘ay Take you with me if I can Been dreaming of this since a child I’m on top of the world. I’ve tried to cut these corners Try to take the easy way out I kept on falling short of something I coulda gave up then but Then again I couldn’t have ’cause I’ve traveled all this way for something I take it in but don’t look down ‘Cause I’m on top of the world, ‘ay I’m on top of the world, ‘ay Waiting on this for a while now Paying my dues to the dirt I’ve been waiting to smile, ‘ay Been holding it in for a while, ‘ay Take you with me if I can Been dreaming of this since a child I’m on top of the world. -

Imagine Dragons Allsaints LA Sessions Press

A L L S A I N T S PRESENTS LA SESSIONS 2017- IMAGINE DRAGONS Expanding beyond Fashion, Film and Image, Music has always been a part of AllSaints' heritage. This year AllSaints continues LA Sessions. With the AllSaints Flagship store in the heart of Los Angeles (Beverly Hills) serving as the backdrop to spotlight some of the most notable up-and-coming bands internationally, LA Sessions provides an exclusive venue for groundbreaking musicians to perform live. These performances will be released on the last Friday of every month, exclusively through our website. AllSaints has always had a keen eye for talent in global creative communities. Through curated casting of our Biker Portraits series, and with exclusive live performances from some of the most notable touring bands of today, LA Sessions in 2017 will be one to watch. The December launch features our closing act for 2017, Grammy Award-winning band Imagine Dragons coming back to LA Sessions to perform their latest single. For the LA Sessions exclusive, they perform an acoustic version of “Believer” off their album Evolve, named the biggest selling rock album of 2017. MORE ON IMAGINE DRAGONS Formed in 2009 and featuring lead vocalist Dan Reynolds, guitarist Wayne Sermon, bassist Ben McKee, and drummer Daniel Platzman, Imagine Dragons earned a grassroots following by independently releasing a series of EPs. After Alex Da Kid signed them to his KIDinaKORNER/Interscope label, the band made its major-label debut with the release of Continued Silence, a 2012 EP featuring the 2x platinum breakthrough single “It’s Time.” Night Visions arrived later that year and Imagine Dragons found themselves on a skyward trajectory that saw the album debut at No. -

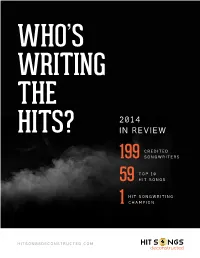

2014 in REVIEW 2 © 2015 Hit Songs Deconstructedtm

WHO’S WRITING THE 2014 HITS? IN REVIEW CREDITED 199 SONGWRITERS TOP 10 59 HIT SONGS HIT SONGWRITING 1 CHAMPION HITSONGSDECONSTRUCTED.COM deconstructed CONTENTS Pg. 3: Introduction Pg. 4: The Hits Pg. 5: The Hit Songwriting Champion of 2014 Pg. 7: Key Players Pg. 11: #1 Hit Club Pg. 13: Collaboration Pg. 17: Hit Songwriter Connections Pg. 19: Who’s Influencing The Hits? Pg. 20: Genre Boundary Pushers Pg. 23: Staying Power Pg. 25: Gender Pg. 27: Age Pg. 30: Where Are They From? Pg. 33: Performing Artists Who Write For Themselves & Others Pg. 35: Performing Arists Who Didn’t Receive a Writing Credit Pg. 36: Genre Pg. 47: The Names Behind The Names Pg. 51: The 2014 Top 10 Hit Makers WHO’S WRITING THE HITS? 2014 IN REVIEW 2 © 2015 Hit Songs DeconstructedTM. All Rights Reserved. INTRODUCTION Unless you’re in the music industry or a hardcore music fan, names such as Max Martin, Benny Blanco, Henry Walter, and Savan Kotecha probably don’t ring a bell. Most casual music fans are completely unaware that today’s mainstream hits are crafted by teams of behind-the- scenes writers and performing artists, all of whom work together toward one core goal – getting their music to as many ears as possible. They come from all around the world – male and female, young and “old” (by mainstream standards) and a range of ethnicities. Despite their differences, they all share a few core attributes - they’re masters at their craft, persistent, in tune with the artist’s core target audience, and blessed with a stroke of luck. -

La Intervención Enfermera Musicoterapia En Personas Con Estado De Ánimo Depresivo

Dña. MIRIAN ALONSO MAZA Unidad docente multiprofesional del Hospital Príncipe de Asturias de Alcalá de henares. MADRID Tutor: Dr. Enfermero D. Crispín Gigante Pérez Departamento de Enfermería de la Universidad de Alcalá de Henares. MADRID PREMIO ANESM AL MEJOR PROYECTO DE INVESTIGACION EIR 2015 La Intervención enfermera Musicoterapia en personas con estado de ánimo depresivo. ÍNDICE 1. INTRODUCCIÓN ................................................................................................................... 4 1.1 MARCO TEÓRICO .......................................................................................................... 6 2. JUSTIFICACIÓN .................................................................................................................. 10 3. HIPÓTESIS Y OBJETIVOS ................................................................................................ 11 4. MATERIAL Y MÉTODOS ................................................................................................... 11 4.1 ÁMBITO DE ESTUDIO ................................................................................................. 11 4.2 VARIABLES A ESTUDIO ............................................................................................. 13 4.3 CUESTIONARIOS ......................................................................................................... 14 4.4 INTERVENCIÓN ............................................................................................................ 17 4.5 DISEÑO .......................................................................................................................... -

Imagine Dragons Release New Album — Evolve — Via Kidinakorner / Interscope Records

For Immediate Release IMAGINE DRAGONS RELEASE NEW ALBUM — EVOLVE — VIA KIDINAKORNER / INTERSCOPE RECORDS BAND BRINGS DYNAMIC LIVE SHOW TO ARENAS ACROSS NORTH AMERICA WITH THE EVOLVE TOUR THIS FALL May 9th, 2017 — (Santa Monica, CA) — Multi-platinum, Grammy Award-winning band Imagine Dragons will bring its electrifying live show to arenas across North America this fall with the Evolve Tour, produced by Live Nation. Watch the video for “Believer” HERE and “Thunder” HERE. “Believer” spent six consecutive weeks at No. 1 at Alternative Radio and broke a 13+ year record for number of spins at the format, besting the record previously held by Linkin Park’s “Faint” in 2003. TIME called “Believer” “another showstopper of an anthem,” while Entertainment Weekly noted its “thudding tribal drums, a massive shout along chorus, and a wicked bassline that’s the sonic equivalent of a slug to the gut.” Billboard called “Believer” “a whimsical-yet-authoritative anthem sure to turn even the most hesitant of optimists into a believer.” Imagine Dragons will kick off the Evolve Tour on September 26th in Phoenix, AZ, with Grouplove and K.Flay providing support. Formed in 2009 and featuring lead vocalist Dan Reynolds, guitarist Wayne Sermon, bassist Ben McKee, and drummer Daniel Platzman, Imagine Dragons earned a grassroots following by independently releasing a series of EPs. After Alex Da Kid signed them to his KIDinaKORNER/Interscope label, the band made its major-label debut with the release of Continued Silence, a 2012 EP featuring the 2x platinum breakthrough single “It’s Time.” Night Visions arrived later that year and Imagine Dragons found themselves on a skyward trajectory that saw the album debut at No. -

William Wayne Dehoney Collection Ar

1 WILLIAM WAYNE DEHONEY COLLECTION AR 880 Prepared by: Dorothy A. Davis Southern Baptist Historical Library and Archives December, 2007 Updated February, 2012 2 William Wayne Dehoney Collection AR 880 Summary Main Entry: William Wayne Dehoney Collection Date Span: 1933 – 2005 Abstract: The Dehoney Collection contains materials from Dr. Dehoney’s tenure as president of the Southern Baptist Convention and as pastor of churches in Tennessee, Kentucky, and Alabama. The collection includes official papers, which are primarily of sermon notes, bound bulletins, correspondence, and clippings. Size: 60 linear ft. (102 boxes) Collection #: AR 880 Biographical Sketch Dr. William Wayne Dehoney was President of the Southern Baptist Convention from 1964 – 1966. Before his presidency, he participated in the Southern Baptist Pastors’ Conference as Vice–President and President. In addition, Dr. Dehoney served on the Alabama State Executive Board, Executive Board of the Tennessee Baptist Convention, Executive Board of the Kentucky Baptist Convention, and the Christian Life Commission, now the Ethics and Religious Liberty Commission. He was also a Trustee of Union University, Southern Baptist Theological Seminary, Campbellsville College, and Howard College. Dr. Dehoney was active in the Baptist World Alliance and served on its Executive Committee. He led missions to many countries in Africa, Europe, South America, and Asia, and served as North American Coordinator for the Crusade of the Americas. As part of his mission efforts, Dr. Dehoney co–founded BibleLand Travel, later Heritage Travel, which offered seminars and tours throughout the Holy Land. Today, Heritage Travel has transitioned into Dehoney Travel, which continues to offer Holy Land tours and tours in other countries. -

Michael Bay's "TRANSFORMERS: AGE of EXTINCTION" to Feature an Original Song by the Grammy Award-Winning Band IMAGINE DRAGONS

Michael Bay's "TRANSFORMERS: AGE OF EXTINCTION" to Feature an Original Song by the Grammy Award-Winning Band IMAGINE DRAGONS The Band Contributed Creatively in the Scoring Process with the Film's Composer Steve Jablonsky Will Perform Live at the Film's World Premiere in Hong Kong on June 19th HOLLYWOOD--(BUSINESS WIRE)-- Director Michael Bay, film composer Steve Jablonsky and Paramount Pictures are collaborating with the Grammy Award-winning band IMAGINE DRAGONS to feature the band's original music in the upcoming film "TRANSFORMERS: AGE OF EXTINCTION," one of the most anticipated movies of the summer. Following an early footage screening of the film, IMAGINE DRAGONS wrote the original song "BATTLE CRY," which Bay used in critical points in the film. Additionally, the band contributed original music during the scoring process by recording cues with Jablonsky, the film's composer, and Hans Zimmer, who assisted in the process. The collaboration resulted in added depth to the sound of the film. "We're incredibly lucky that Dan, Wayne, Ben and Daniel were available to work with us," says director Bay. "I remember being drawn to the emotion of 'Demons' and 'Radioactive' the first time I heard those songs, and I knew I wanted that same energy and heart for this movie. They've created a really epic, otherworldly sound for 'Battle Cry.'" "The TRANSFORMER franchise has set the bar for cinematic innovation over the years," says Alex Da Kid, label head at KIDinaKORNER records. "We are excited to collaborate with Michael Bay and Paramount Pictures and view this as an incredible global platform." IMAGINE DRAGONS will perform its new single live at the film's worldwide premiere in Hong Kong, one of the locations in the film, on June 19th. -

May 18, 2014 Mgm Grand Garden Arena Las Vegas Take Your Favorite Bands on the Road

MAY 18, 2014 MGM GRAND GARDEN ARENA LAS VEGAS TAKE YOUR FAVORITE BANDS ON THE ROAD. THE 2014 CHEVROLET CRUzE With available Chevrolet MyLink1 you can stream personalized Internet stations on your compatible smartphone 2 or let your playlists rule by pairing your device to an available USB port 3 or via Bluetooth. Now you never have to leave your music behind. 1 MyLink functionality varies by model. Full functionality requires compatible Bluetooth and smartphone, and USB connectivity for some devices. 2 Data plan rates apply. 3 Not compatible with all devices. RANCHO MONTE ALEGRE SANTA BARBARA • CALIFORNIA ust ten minutes from Montecito and Santa Barbara, Rancho Monte Alegre has been Jplanned as a small rural community set within the spectacular coastal California foothills of the Santa Barbara region. Over 2800 acres have been preserved on a historical 19th century ranch by limiting development to 25 carefully selected Home sites. Rancho Monte Alegre is one of the last pristine, expansive pieces of land remaining on the California central coast. With spectacular vistas and natural beauty as well as miles of private riding and hiking trails, the Ranch provides a rare opportunity to experience nature in an undisturbed state, while still being within minutes of the city and beach. Villa Sevillano elegant 22 acre equestrian estate in santa barbara 3215FOOTHILLRD.COM Artful Architecture By The Sea oasis off the beach in montecito 1159HILLRD.COM exclusive representation Sotheby’s International Realty and the Sotheby’s International Realty logo are registered service marks used with permission. Operated by Sotheby’s International Realty, Inc.