Numbers That Count: Measuring the BBC World Service Global Audience

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Managing Cancer-Related Fatigue

Managing cancer-related fatigue Cancer-related fatigue is the term used to describe extreme tiredness and is one of the most common, distressing and under-addressed symptoms in people living with or beyond a blood cancer. Cancer-related fatigue is not improved by rest and affects people physically, psychologically and socially, making it hard to complete normal everyday activities. It sometimes improves when treatment has finished but for some people it may last for months or years. Cancer-related fatigue can have many different causes including symptoms of the disease or side-effects of treatment. Some of these symptoms or side-effects may be linked together which can cause a cycle of fatigue. People with fatigue are often incorrectly advised to rest and limit activity. However, physical inactivity causes muscle weakness, increased stiffness and pain, and this may make fatigue worse. Fatigue is often linked to several physiological and psychological symptoms such as depression, anxiety, anaemia, weight loss and pain. These symptoms are linked to one another and can make each other worse which makes fatigue difficult to manage. It is important to speak with your doctor to find out which symptoms might be making your fatigue worse. Inside you will find some practical tips for managing your fatigue. leukaemia.org.nz 10 tips for managing fatigue 1) Keep a fatigue diary a. Write down the times of day when you feel your best and when you feel most tired. 4) Know your numbers a. Anaemia is a common cause of fatigue so it is important to keep an eye on your -

Reuters Institute Digital News Report 2020

Reuters Institute Digital News Report 2020 Nic Newman with Richard Fletcher, Anne Schulz, Simge Andı, and Rasmus Kleis Nielsen © Reuters Institute for the Study of Journalism Contents: Foreword by Rasmus Kleis Nielsen; Methodology; Authorship and Research Acknowledgements. SECTION 1: Executive Summary and Key Findings by Nic Newman. SECTION 2: Further Analysis and International Comparison: 2.1 How and Why People are Paying for Online News; 2.2 The Resurgence and Importance of Email Newsletters; 2.3 How Do People Want the Media to Cover Politics?; 2.4 Global Turmoil in the Neighbourhood: Problems Mount for Regional and Local News; 2.5 How People Access News about Climate Change. SECTION 3: Country and Market Data. EUROPE: 3.01 United Kingdom; 3.02 Austria; 3.03 Belgium; 3.04 Bulgaria; 3.05 Croatia; 3.06 Czech Republic; 3.07 Denmark; 3.08 Finland; 3.09 France; 3.10 Germany; 3.11 Greece; 3.12 Hungary; 3.13 Ireland; 3.14 Italy; 3.15 Netherlands; 3.16 Norway; 3.17 Poland; 3.18 Portugal; 3.19 Romania; 3.20 Slovakia; 3.21 Spain; 3.22 Sweden; 3.23 Switzerland; 3.24 Turkey. AMERICAS: 3.25 United States; 3.26 Argentina; 3.27 Brazil; 3.28 Canada; 3.29 Chile; 3.30 Mexico. ASIA PACIFIC: 3.31 Australia; 3.32 Hong Kong; 3.33 Japan; 3.34 Malaysia; 3.35 Philippines; 3.36 Singapore; 3.37 South Korea; 3.38 Taiwan. AFRICA: 3.39 Kenya; 3.40 South Africa. SECTION 4: References and Selected Publications. Foreword Professor Rasmus Kleis Nielsen Director, Reuters Institute for the Study of Journalism (RISJ) The coronavirus crisis is having a profound impact not just on our health and our communities, but also on the news media. Our main survey this year covered respondents in 40 markets, -

House of Commons Welsh Affairs Committee

House of Commons Welsh Affairs Committee S4C Written evidence - web List of written evidence: 1 URDD; 2 Hugh Evans; 3 Ron Jones; 4 Dr Simon Brooks; 5 The Writers Guild of Great Britain; 6 Mabon ap Gwynfor; 7 Welsh Language Board; 8 Ofcom; 9 Professor Thomas P O’Malley, Aberystwyth University; 10 Tinopolis; 11 Institute of Welsh Affairs; 12 NUJ Parliamentary Group; 13 Plaid Cymru; 14 Welsh Language Society; 15 NUJ and Bectu; 16 DCMS; 17 PACT; 18 TAC; 19 BBC; 20 Mercator Institute for Media, Languages and Culture; 21 Mr S.G. Jones; 22 Alun Ffred Jones AM, Welsh Assembly Government; 23 Celebrating Our Language; 24 Peter Edwards and Huw Walters. Written evidence submitted by Urdd Gobaith Cymru In the opinion of Urdd Gobaith Cymru, Wales’ largest children and young people’s organisation with 50,000 members under the age of 25: • The provision of good-quality Welsh language programmes is fundamental to establishing a linguistic context for those who speak Welsh and who wish to learn it. • It is vital that this is funded to the necessary level. • A good partnership already exists between S4C and the Urdd, but the Urdd would be happy to co-operate and work with S4C to identify further opportunities for collaboration to offer opportunities for children and young people, thus developing new audiences. • We believe that decisions about the development of S4C should be made in Wales. -

ECHO 2 Contents SPRING 2019, Volume 16 | No

ECHO Contents SPRING 2019, Volume 16 | No. 8 This magazine is produced three times a year to provide students, alumni, parents, friends and donors with news from the institution, articles connecting our readers with our community and features on relevant Christian thought. Contents: The President Speaks (For a Final Time); Gratitude Beyond Measure; A Faith-Filled Partner; Hallmarks of a Servant Leader; The Impact of a Servant Leader; The Compassion of a ...; Leading Beyond Language; Leading the Pack: A Lifelong Effort; Finding Spiritual Healing; Leading the Church as a Family; The Emotionally Healthy Leader. MISSION STATEMENT Lancaster Bible College exists to educate Christian students to think and live a biblical worldview and to proclaim Christ by serving Him in the Church and society. VISION Lancaster Bible College will be a premier learning community that intentionally develops the head, heart and hands of servant ministry leaders for global impact. ACCREDITATION Accredited through the Middle States Commission on Higher Education and the Association for Biblical Higher Education Commission on Accreditation, LBC is also approved by the Pennsylvania Department of Education and the Association of Christian Schools International. LBC is approved to operate in Pennsylvania, Florida, Maryland and Tennessee. LBC is authorized to offer online programs in all states where our online students reside. EDITORIAL COMMITTEE Peter W. Teague, Ed.D., PRESIDENT; John Zeswitz, D.D., EXECUTIVE VICE PRESIDENT; Philip Dearborn, Ed.D. (‘90), PROVOST; Josh Beers, M.A.B.S., SENIOR VICE PRESIDENT OF STUDENT EXPERIENCE; Rev. Rodney Carter, Jr., M.A. (‘18), DIRECTOR OF ALUMNI; Judy Heckaman, B.S. -

Exploring Colwyn Bay – Once the Las Vegas of Wales

22.04.2021 Contact: Heledd Williams Phone: 01286 674622 Press Release Exploring Colwyn Bay – once the Las Vegas of Wales Colwyn Bay is one of the treasures of the Welsh seaside, and one of the most populous areas of the north. It is a town that has drawn visitors from far and near over the decades, both tourists and residents of the neighbouring communities. We will learn more about this special area as the team from the programme Cynefin – Heledd Cynwal, Dr Iestyn Jones and Siôn Tomos Owen – explore the district in the next episode on Sunday night, 2 May. The name Colwyn Bay was first used in the 1870s as thousands of people flocked to the coast for a seaside holiday. “Colwyn Bay continues to attract visitors and it is no surprise, as the Victorian town has moved with the times, and people still flock here. Tourists and residents of the neighbouring communities alike, they are all looking for one thing – entertainment. And buildings like Theatr Colwyn were pioneers in the field,” says Heledd. And Theatr Colwyn, the oldest working theatre in the United Kingdom, built in 1888, will be Heledd’s first location as she learns more about some of the town’s entertainment venues in the company of Dilwyn Price. “We are in an area that, at the start of the twentieth century, was buzzing with entertainment. During the Second World War, the Ministry of Food was moved here to Colwyn Bay,” says Dilwyn. The population swelled; 5,000 people moved here. -

Register of Journalists' Interests

REGISTER OF JOURNALISTS’ INTERESTS (As at 14 June 2019) INTRODUCTION Purpose and Form of the Register Pursuant to a Resolution made by the House of Commons on 17 December 1985, holders of photo-identity passes as lobby journalists accredited to the Parliamentary Press Gallery or for parliamentary broadcasting are required to register: ‘Any occupation or employment for which you receive over £795 from the same source in the course of a calendar year, if that occupation or employment is in any way advantaged by the privileged access to Parliament afforded by your pass.’ Administration and Inspection of the Register The Register is compiled and maintained by the Office of the Parliamentary Commissioner for Standards. Anyone whose details are entered on the Register is required to notify that office of any change in their registrable interests within 28 days of such a change arising. An updated edition of the Register is published approximately every 6 weeks when the House is sitting. Changes to the rules governing the Register are determined by the Committee on Standards in the House of Commons, although where such changes are substantial they are put by the Committee to the House for approval before being implemented. Complaints Complaints, whether from Members, the public or anyone else alleging that a journalist is in breach of the rules governing the Register, should in the first instance be sent to the Registrar of Members’ Financial Interests in the Office of the Parliamentary Commissioner for Standards. Where possible the Registrar will seek to resolve the complaint informally. In more serious cases the Parliamentary Commissioner for Standards may undertake a formal investigation and either rectify the matter or refer it to the Committee on Standards. -

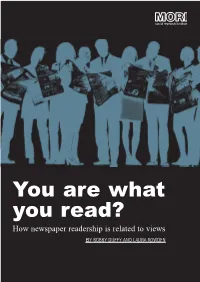

You Are What You Read

You are what you read? How newspaper readership is related to views BY BOBBY DUFFY AND LAURA ROWDEN MORI's Social Research Institute works closely with national government, local public services and the not-for-profit sector to understand what works in terms of service delivery, to provide robust evidence for policy makers, and to help politicians understand public priorities. Bobby Duffy is a Research Director and Laura Rowden is a Research Executive in MORI’s Social Research Institute. Contents Summary and conclusions 1 National priorities 5 Who reads what 18 Explaining why attitudes vary 22 Trust and influence 28 Summary and conclusions There is disagreement about the extent to which the media reflect or form opinions. Some believe that they set the agenda but do not tell people what to think about any particular issue, some (often the media themselves) suggest that their power has been overplayed and they mostly just reflect the concerns of the public or other interests, while others suggest they have enormous influence. It is this last view that has gained most support recently. It is argued that as we have become more isolated from each other the media plays a more important role in informing us. At the same time the distinction between reporting and comment has been blurred, and the scope for shaping opinions is therefore greater than ever. Some believe that newspapers have also become more proactive, picking up or even instigating campaigns on single issues of public concern, such as fuel duty or Clause 28. This study aims to shed some more light on newspaper influence, by examining how responses to a key question – what people see as the most important issues facing Britain – vary between readers of different newspapers. -

APPENDIX 1 S4C Standing Orders (October 2018)

APPENDIX 1 S4C Standing Orders (October 2018) This English translation of the Standing Orders of the S4C Board is provided for information. In the event of a discrepancy between the Welsh version and English version of S4C documents, including these Standing Orders, the Welsh version shall take precedence. Introduction S4C is an independent public body responsible for the provision of high quality public service television and digital services through the medium of Welsh, mainly for the audience in Wales, but also for the wider audience that wishes to use S4C’s Welsh-language service. S4C is a statutory corporation and the relevant legal provisions relating to the structure, governance and responsibilities of S4C are set out in legislation. These standing Orders put in place detailed corporate governance arrangements and do not supersede or replace the relevant statutory provisions. These standing Orders have been developed and ratified by the S4C Board in accordance with Schedule 6 of the Broadcasting Act 1990 (as amended). The Review of S4C published in March 2018 recommended that S4C’s corporate governance structure should be based on a unitary board comprising executive and non-executive directors, with clearly defined roles and responsibilities, where the executive directors manage and non-executive directors scrutinise and advise. These standing Orders are intended to implement the corporate governance structure recommended by the review. S4C’s current corporate governance structure is based on a non-executive board and an executive management team. The Unitary Board (and shadow Unitary Board model) will replace the current non-executive-only structure. The legislation that established S4C refers to one statutory body and television service. -

MF3261 Keys to Embracing Aging: Tuning Into the Times

Keys to Embracing Aging Tuning Into the Times Can’t teach an old dog a new trick? Centenarians (people 100 years old and older) beg to differ. When a group of 100-year-olds were asked why they have lived so long, they shared that they spent a lifetime staying in tune with the times. While the days of Charlie Chaplin, Louis Armstrong, Ted Williams, talk radio programs, Model Ts, and party lines have been replaced with reality television, hip-hop music, multimillion-dollar professional athletes, SUVs, and smartphones, it is clear that technology and society are not slowing down. But neither are the tech-savvy seniors who drive successful aging through their zest for life and learning. Staying in tune with the times throughout life promotes lifelong learning, which increases knowledge, problem-solving, and decision-making. Staying on top of the latest gadgets, playing video games, and keeping up with the news also enhances brain stimulation and helps ... that incorporates computing abilities. Smartphones have an “app” or application for almost everything. An application is a computer program that can be downloaded to a smartphone, allowing it to take photos, play music and videos, and access Internet sites. A smartphone even has the ability to act as a navigation system, start your vehicle, and activate your lights or home security system when you are not there. Whether it’s a basic cellular phone or a smartphone, one of the best advantages of a mobile device is that it is portable and accessible. Charged on batteries, a mobile phone fits in your pocket and can be ready in hand to call a friend or call for help. -

BMJ in the News Is a Weekly Digest of BMJ Stories, Plus Any Other News

BMJ in the News is a weekly digest of BMJ stories, plus any other news about the company that has appeared in the national and a selection of English-speaking international media. Latest highlights (23 - 29 Apr): Two research papers in BMJ Open made global headlines this week: Coffee in pregnancy linked to excess childhood weight gain made the front page of The Times, plus the New York Times, CNN and Malaysia Today. Alcohol intake may be linked to premenstrual syndrome also made headlines including Sky News, Newsweek, New Delhi TV and Channel News Asia A study in The BMJ suggesting use of some antidepressants and bladder medications may be linked to dementia was covered by BBC Radio 4 Today Programme plus extensive national, regional and international pick up. A JNNP study suggesting that vigorous physical activity may be linked to heightened risk of motor neurone disease generated headlines including BBC News, STV News (Scotland), India TV and New Telegraph (Nigeria) BMJ BMJ Best Practice partners with NHS - InPublishing 23/04/2018 The BMJ plays its part in tackling the medical crisis - Ri5 25/04/2018 The BMJ Research: Anticholinergic drugs and risk of dementia: case-control study Long-term antidepressant use linked to higher dementia risk - The Telegraph 25/04/2018 Certain common medications tied to 30% higher dementia risk, study finds - CNN 25/04/2018 BBC Radio 4 Today Programme (06.05am) - 26/04/2018 Other broadcasts: BBC 1 Breakfast, The Chris Evans Breakfast Show, BBC Radio 2, Victoria -

News Consumption in the UK: 2018

News Consumption in the UK: 2018 Produced by: Jigsaw Research Fieldwork dates: November/December 2017 and March/April 2018 PROMOTING CHOICE • SECURING STANDARDS • PREVENTING HARM Key findings from the report TV is the most-used platform for news nowadays by UK adults (79%), followed by the internet (64%), radio (44%) and newspapers (40%). However, the internet is the most popular platform among 16-24s (82%) and ethnic minority groups (EMGs) (73%). BBC One is the most-used news source, used by 62% of UK adults, followed by ITV (41%) and Facebook (33%). BBC One also had the highest proportion of respondents claiming it was their most important news source (27% of users). Social media is the most popular type of online news, used by 44% of UK adults. However, while lots of people are able to recall the social media site they consumed the news on, some struggle to remember the original source of the news story. When scored by their users on measures of quality, accuracy, trustworthiness and impartiality (among other things) magazines perform better than any other news platform. Scores were lower among users of social media. TV is the most popular platform for accessing international and local news. In the Nations, BBC One is the most-used source for news in Wales, Scotland and England, but UTV is the most popular in Northern Ireland. Six in ten (63%) UK adults thought that it was important for ‘society overall’ that broadcasters provide current affairs programming. This was more than those who felt it was important to them personally (51%). -

News Consumption in the UK: 2019

News Consumption in the UK: 2019 Produced by: Jigsaw Research Fieldwork dates: November 2018 and March 2019 Published: 24 July 2019 Key findings from the report While TV remains the most-used platform for news nowadays by UK adults, usage has decreased since last year (75% vs. 79% in 2018). At the same time, use of social media for news has gone up (49% vs. 44%). Use of TV for news is much more likely among the 65+ age group (94%), while the internet is the most-used platform for news consumption among 16-24s and those from a minority ethnic background. Fewer UK adults use BBC TV channels for news compared to last year, while more are using social media platforms. As was the case in 2018, BBC One is the most-used news source among all adults (58%), followed by ITV (40%) and Facebook (35%). However, several BBC TV news sources (BBC One, BBC News Channel and BBC Two) have all seen a decrease in use for news compared to 2018. Use of several social media platforms for news has increased since last year (Twitter, WhatsApp, Instagram and Snapchat). There is evidence that UK adults are consuming news more actively via social media. For example, those who access news shared by news organisations, trending news or news stories from friends and family or other people they follow via Facebook or Twitter are more likely to make comments on the news posts they see compared to the previous year. When rated by their users on measures such as quality, accuracy, trustworthiness and impartiality, magazines continue to perform better than other news platforms, followed by TV.