Scaling the Cloud Network

Total Pages: 16

File Type: pdf, Size: 1020Kb

Recommended publications

On TTEthernet for Integrated Fault-Tolerant Spacecraft Networks

Andrew Loveless, NASA Johnson Space Center, Houston, TX, 77058

There has recently been a push for adopting integrated modular avionics (IMA) principles in designing spacecraft architectures. This consolidation of multiple vehicle functions to shared computing platforms can significantly reduce spacecraft cost, weight, and design complexity. Ethernet technology is attractive for inclusion in more integrated avionic systems due to its high speed, flexibility, and the availability of inexpensive commercial off-the-shelf (COTS) components. Furthermore, Ethernet can be augmented with a variety of quality of service (QoS) enhancements that enable its use for transmitting critical data. TTEthernet introduces a decentralized clock synchronization paradigm enabling the use of time-triggered Ethernet messaging appropriate for hard real-time applications. TTEthernet can also provide two forms of event-driven communication, therefore accommodating the full spectrum of traffic criticality levels required in IMA architectures. This paper explores the application of TTEthernet technology to future IMA spacecraft architectures as part of the Avionics and Software (A&S) project chartered by NASA's Advanced Exploration Systems (AES) program.

Nomenclature
• A&S = Avionics and Software Project
• AA2 = Ascent Abort 2
• AES = Advanced Exploration Systems Program
• ANTARES = Advanced NASA Technology Architecture for Exploration Studies
• API = Application Program Interface
• ARM = Asteroid Redirect Mission

Program of a Microcontroller-Based Industrial Serial Protocol Analyzer System (Mikrodenetleyicili Endüstriyel Seri Protokol Çözümleyici Sisteminin Programı)

Yıldız Technical University, Graduate School of Natural and Applied Sciences. Program of a Microcontroller-Based Industrial Serial Protocol Analyzer System. Electronics and Communications Engineer Kemal GÜNSAY. Master's thesis prepared in the Electronics Program of the Department of Electronics and Communications Engineering. Thesis Advisor: Asst. Prof. Dr. Tuncay UZUN (YTÜ). İstanbul, 2009.

Contents
• List of Abbreviations
• List of Figures
• List of Tables
• Preface
• Özet (Turkish summary)
• Abstract
• 1. Introduction

Ethernet Alliance Hosts Third IEEE 802.1 Data Center Bridging

MEDIA ALERT: Ethernet Alliance® Hosts Third IEEE 802.1 Data Center Bridging Interoperability Test Event. Participation is Open to Both Ethernet Alliance Members and Non-Members.

WHAT: The Ethernet Alliance Ethernet in the Data Center Subcommittee has announced it will host an IEEE 802.1 Data Center Bridging (DCB) interoperability test event the week of May 23 in Durham, NH at the University of New Hampshire Interoperability Lab. The Ethernet Alliance invites both members and non-members to participate in this third DCB test event that will include both protocol and applications testing. The event targets interoperability testing of Ethernet standards being developed by IEEE 802.1 DCB task force to address network convergence issues. Testing of protocols will include projects such as IEEE P802.1Qbb Priority Flow Control (PFC), IEEE P802.1Qaz Enhanced Transmission Selection (ETS) and DCB Capability Exchange Protocol (DCBX). The test event will include testing across a broad vendor community and will exercise DCB features across multiple platforms as well as exercise higher layer protocols such as Fibre Channel over Ethernet (FCoE), iSCSI over DCB, RDMA over Converged Ethernet (RoCE) and other latency sensitive applications.

WHY: These test events help vendors create interoperable, market-ready products that interoperate to the IEEE 802.1 DCB standards.

WHEN: Conference Call for Interested Participants: Friday, March 4 at 10 AM PST. Event Registration Open Until: March 4, 2011. Event Dates (tentative): May 23-27, 2011.

WHERE: University of New Hampshire Interoperability Lab (UNH-IOL), Durham, NH.

WHO: The DCB Interoperability Event is hosted by the Ethernet Alliance.

REGISTER: To get more information, learn about participation fees and/or register for the DCB plugfest, please visit Ethernet Alliance DCB Interoperability Test Event page or contact [email protected].

Gigabit Ethernet - CH 3 - Ethernet, Fast Ethernet, and Gigabit Ethern

Switched, Fast, and Gigabit Ethernet - Chapter 3 - Ethernet, Fast Ethernet, and Gigabit Ethernet Standards

[Figures are not included in this sample chapter]

This chapter discusses the theory and standards of the three versions of Ethernet around today: regular 10Mbps Ethernet, 100Mbps Fast Ethernet, and 1000Mbps Gigabit Ethernet. The goal of this chapter is to educate you as a LAN manager or IT professional about essential differences between shared 10Mbps Ethernet and these newer technologies. This chapter focuses on aspects of Fast Ethernet and Gigabit Ethernet that are relevant to you and doesn't get into too much technical detail.

Read this chapter and the following two (Chapter 4, "Layer 2 Ethernet Switching," and Chapter 5, "VLANs and Layer 3 Switching") together. This chapter focuses on the different Ethernet MAC and PHY standards, as well as repeaters, also known as hubs. Chapter 4 examines Ethernet bridging, also known as Layer 2 switching. Chapter 5 discusses VLANs, some basics of routing, and Layer 3 switching. These three chapters serve as a precursor to the second half of this book, namely the hands-on implementation in Chapters 8 through 12. After you understand the key differences between yesterday's shared Ethernet and today's Switched, Fast, and Gigabit Ethernet, evaluating products and building a network with these products should be relatively straightforward.

The chapter is split into seven sections:
• "Ethernet and the OSI Reference Model" discusses the OSI Reference Model and how Ethernet relates to the physical (PHY) and Media Access Control (MAC) layers of the OSI model.

Data Center Ethernet 2

Data Center Ethernet. Raj Jain, Washington University in Saint Louis, Saint Louis, MO 63130, [email protected]. These slides and audio/video recordings of this class lecture are at: http://www.cse.wustl.edu/~jain/cse570-15/ (Washington University in St. Louis, ©2015 Raj Jain)

Overview
1. Residential vs. Data Center Ethernet
2. Review of Ethernet Addresses, devices, speeds, algorithms
3. Enhancements to Spanning Tree Protocol
4. Virtual LANs
5. Data Center Bridging Extensions

Quiz: True or False? Which of the following statements are generally true?
• Ethernet is a local area network (Local < 2km)
• Token ring, Token Bus, and CSMA/CD are the three most common LAN access methods.
• Ethernet uses CSMA/CD.
• Ethernet bridges use spanning tree for packet forwarding.
• Ethernet frames are 1518 bytes.
• Ethernet does not provide any delay guarantees.
• Ethernet has no congestion control.
• Ethernet has strict priorities.

Residential vs. Data Center Ethernet
• Distance. Residential: up to 200m. Data center: No limit.
• Scale. Residential: Few MAC addresses, 4096 VLANs. Data center: Millions of MAC addresses, millions of VLANs (Q-in-Q).
• Protection. Residential: Spanning tree. Data center: Rapid spanning tree, … (Gives 1s, need 50ms).
• Path. Residential: Path determined by spanning tree. Data center: Traffic engineered path.
• Service. Residential: Simple service. Data center: Service Level Agreement.
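The jump from 4096 VLANs to "millions of VLANs" with Q-in-Q in the comparison above follows from the tag format: an IEEE 802.1Q tag carries a 12-bit VLAN ID, and Q-in-Q stacks an outer tag on top of an inner one. A minimal sketch of that arithmetic follows; the function name `vlan_id_space` is illustrative and does not come from the slides.

```python
VID_BITS = 12  # width of the VLAN ID field in an IEEE 802.1Q tag


def vlan_id_space(tags: int = 1) -> int:
    """Number of distinct VLAN ID combinations with `tags` stacked 802.1Q tags."""
    if tags < 1:
        raise ValueError("at least one tag is required")
    return (2 ** VID_BITS) ** tags


single_tag = vlan_id_space(1)  # one tag
q_in_q = vlan_id_space(2)      # outer tag combined with inner tag

print(single_tag)  # 4096
print(q_in_q)      # 16777216
```

These are raw ID-space sizes. VLAN IDs 0 and 4095 are reserved, so the usable counts are slightly smaller.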

Gigabit Ethernet

Ethernet Technologies and Gigabit Ethernet. Professor John Gorgone. (Ethernet8, Copyright 1998, John T. Gorgone, All Rights Reserved)

Topics
• Origins of Ethernet
• Ethernet 10 MBS
• Fast Ethernet 100 MBS
• Gigabit Ethernet 1000 MBS
• Comparison Tables
• ATM vs. Gigabit Ethernet
• Summary

Origins
• Original idea sprang from Abramson's Aloha Network at the University of Hawaii
• CSMA/CD thesis developed by Robert Metcalfe (1972)
• Experimental Ethernet developed at Xerox Palo Alto Research Center (1973)
• Xerox's Alto computers: first Ethernet systems

DIX Standard
• Digital, Intel, and Xerox combined to develop the DIX Ethernet Standard
• 1980: DIX Standard presented to the IEEE
• 1980: IEEE creates the 802 committee to create an acceptable Ethernet standard

Ethernet Grows
• Open standard allows hardware and software developers to create numerous products based on Ethernet
• Large number of vendors keeps prices low and quality high
• Compatibility problems rare

What is Ethernet?
• A standard for LANs
• The standard covers two layers of the OSI model: the physical layer and the data link layer
• Transmission speed of 10 Mbps
• Originally, only baseband
• In 1986, broadband was introduced
• Half duplex and full duplex technology
• Bus topology

Components of Ethernet
• Physical Medium
• Medium Access Control
• Ethernet Frame

Cable Designations
[Figure: the designation "10 BASE T" annotated with its parts: speed, transmission type, max length]

VSC8489-10 and VSC8489-13

VSC8489-10 and VSC8489-13: Dual Channel WAN/LAN/Backplane RXAUI/XAUI to SFP+/KR 10 GbE SerDes PHY

Vitesse's dual channel SerDes PHY provides fully IEEE 1588v2-compliant devices and hardware-based KR support for timing-critical applications, including all industry-standard protocol encapsulations.

VeriTime™ is Vitesse's patent-pending distributed timing technology that delivers the industry's most accurate IEEE 1588v2 timing implementation. IEEE 1588v2 timing integrated in the PHY is the quickest, lowest cost method of implementing the timing accuracy that is critical to maintaining existing timing-critical capabilities during the migration from TDM to packet-based architectures.

The VSC8489-10 and VSC8489-13 devices support 1-step and 2-step PTP frames for ordinary clock, boundary clock, and transparent clock applications, along with complete Y.1731 OAM performance monitoring capabilities.

The devices meet the SFP+ SR/LR/ER/220MMF host requirements in accordance with the SFF-8431 specifications. They also compensate for optical impairments in SFP+ applications, along with degradations of the PCB. The devices provide full KR support, including KR state machine, for autonegotiation and link optimization. The transmit path incorporates a multitap output driver to provide flexibility to meet the demanding 10GBASE-KR (IEEE 802.3ap) Tx output launch requirements.

Highlights
• IEEE 1588v2 compliant with VeriTime™
• Failover switching and lane ordering
• Simultaneous LAN and WAN support
• RXAUI/XAUI support
• SFP+ I/O with KR support
• 1 GbE support

Applications
• Multiple-port RXAUI/XAUI to SFI/SFP+ line cards or NICs
• 10GBASE-KR compliant backplane transceivers
• Carrier Ethernet networks requiring IEEE 1588v2 timing
• Secure data center to data center interconnects
• 10 GbE switch cards and router cards

Power Over Ethernet

How To | Power over Ethernet

Introduction
Power over Ethernet (PoE) is a technology allowing devices such as IP telephones to receive power over existing LAN cabling. This technical note is in four parts as follows:
• PoE Technology
• How PoE works
• Allied Telesyn PoE implementation
• Command Reference

What information will you find in this document?
The first two parts of this document describe the PoE technology and the installation and management advantages that PoE can provide. This is followed by an overview of how PoE works, Powered Device (PD) discovery, PD classification, and the delivery of power to PD data cables. The third part of this document focuses on Allied Telesyn's implementation of PoE on the AT-8624PoE switch. The document concludes with a list of configuration and monitoring commands.

Which product and software version does this information apply to?
The information provided here applies to:
• Products: AT-8624PoE switch
• Software version: 2.6.5

C613-16048-00 REV C www.alliedtelesyn.com

PoE Technology
Power over Ethernet is a mechanism for supplying power to network devices over the same cabling used to carry network traffic. PoE allows devices that require power, called Powered Devices (PDs), such as IP telephones, wireless LAN Access Points, and network cameras, to receive power in addition to data over existing infrastructure without needing to upgrade it. This feature can simplify network installation and maintenance by using the switch as a central power source for other network devices. A device that can source power, such as an Ethernet switch, is termed Power Sourcing Equipment (PSE). Power Sourcing Equipment can provide power, along with data, over existing LAN cabling to Powered Devices.

DECLARATION of CONFORMITY Manufacturer: Fiberworks AS

DECLARATION OF CONFORMITY

Manufacturer: Fiberworks AS
Manufacturer's Address: Eikenga 11, 0579 Oslo, Norway

declares, under its sole responsibility, that the products listed in Appendix I conform to RoHS Directive 2011/65/EU and EMC Directive 2014/30/EU, tested according to the following standards:

• RoHS: IEC 62321-1:2013, IEC 62321-3-1:2013, IEC 62321-4:2013, IEC 62321-5:2013, IEC 62321-6:2015, IEC 62321-7-1:2015, IEC 62321-8:2017
• EMC: EN 55032:2015, EN 55035:2017, EN 61000-3-2:2014, EN 61000-3-3:2013

and thereby can carry the CE and RoHS marking.

Ole Evju, Product Manager Transceivers, Fiberworks AS
Fiberworks AS, Eikenga 11, 0579 Oslo, Norway - www.fiberworks.no

APPENDIX I

SFP
• STM-1 (155 Mbps): SFP-155M-L15D, SFP-155M-L40D, SFP-155M-L80D
• STM-1 (155 Mbps) xWDM: SFP-155M-L80D-Cxx, SFP-155M-L160D-Cxx
• Fast Ethernet: SFP-100Base-FX, SFP-100Base-LX, SFP-100Base-EX, SFP-100Base-ZX, SFP-100Base-L160D, SFP-GE-FE-FX, SFP-GE-FE-LX
• Fast Ethernet xWDM: SFP-100B-L40D-Cxx, SFP-100B-L80D-Cxx, SFP-100B-L160D-Cxx

SFP
• 4x/2x/1x Fibre Channel: SFP-4GFC-SD, SFP-4GFC-L5D, SFP-4GFC-L10D, SFP-4GFC-ER, SFP-4GFC-ZR
• 4x/2x/1x Fibre Channel xWDM: SFP-4GFC-30D-Cxx, SFP-4GFC-50D-Cxx, SFP-4GFC-80D-Cxx, SFP-4GFC-80D-Dxxxx
• SFP Copper: SFP-1000Base-TX, SFP-GE-T, SFP-100Base-T

SFP BiDi
• Fast Ethernet Bi-Di w/DDM: SFP-FE-BX20D-34, SFP-FE-BX20D-43, SFP-MR155-BX20D-35, SFP-MR155-BX20D-53, SFP-MR155-BX40D-35, SFP-MR155-BX40D-53
• Fast Ethernet Bi-Di w/o DDM: SFP-FE-BX2D-35, SFP-FE-BX2D-53, SFP-FE-100BX-U-10, SFP-FE-100BX-D-10, SFP-MR155-BX40-35, SFP-MR155-BX40-53
• Dual Rate 100/1000Mb 1310/1490: SFP-DR-BX20D-34, SFP-DR-BX20D-43, SFP-DR-BX40D-34

IEEE Std 802.3™-2012 (Revision of IEEE Std 802.3-2008)

IEEE Standard for Ethernet. IEEE Computer Society. Sponsored by the LAN/MAN Standards Committee. IEEE, 3 Park Avenue, New York, NY 10016-5997, USA. 28 December 2012.

IEEE Std 802.3™-2012 (Revision of IEEE Std 802.3-2008). Sponsor: LAN/MAN Standards Committee of the IEEE Computer Society. Approved 30 August 2012, IEEE-SA Standards Board.

Abstract: Ethernet local area network operation is specified for selected speeds of operation from 1 Mb/s to 100 Gb/s using a common media access control (MAC) specification and management information base (MIB). The Carrier Sense Multiple Access with Collision Detection (CSMA/CD) MAC protocol specifies shared medium (half duplex) operation, as well as full duplex operation. Speed specific Media Independent Interfaces (MIIs) allow use of selected Physical Layer devices (PHY) for operation over coaxial, twisted-pair or fiber optic cables. System considerations for multisegment shared access networks describe the use of Repeaters that are defined for operational speeds up to 1000 Mb/s. Local Area Network (LAN) operation is supported at all speeds. Other specified capabilities include various PHY types for access networks, PHYs suitable for metropolitan area network applications, and the provision of power over selected twisted-pair PHY types.

Keywords: 10BASE; 100BASE; 1000BASE; 10GBASE; 40GBASE; 100GBASE; 10 Gigabit Ethernet; 40 Gigabit Ethernet; 100 Gigabit Ethernet; attachment unit interface; AUI; Auto Negotiation; Backplane Ethernet; data processing; DTE Power via the MDI; EPON; Ethernet; Ethernet in the First Mile; Ethernet passive optical network; Fast Ethernet; Gigabit Ethernet; GMII; information exchange; IEEE 802.3; local area network; management; medium dependent interface; media independent interface; MDI; MIB; MII; PHY; physical coding sublayer; Physical Layer; physical medium attachment; PMA; Power over Ethernet; repeater; type field; VLAN TAG; XGMII

The Institute of Electrical and Electronics Engineers, Inc.

IEEE 802.3 Working Group November 2006 Plenary Week

IEEE 802.3 Working Group, November 2006 Plenary Week. Robert M. Grow, Chair, IEEE 802.3 Working Group, [email protected]. Web site: www.ieee802.org/3. (13 November 2006, IEEE 802 November Plenary)

Current IEEE 802.3 activities
• P802.3ap, Backplane Ethernet
• P802.3aq, 10GBASE-LRM (Published)
• P802.3ar, Congestion Management
• P802.3as, Frame Format Extensions (Approved)
• P802.3at, DTE Power Enhancements
• P802.3av, 10 Gb/s EPON
• Higher Speed Study Group (New)

P802.3ap Backplane Ethernet
• Define Ethernet operation over electrical backplanes
  – 1Gb/s serial
  – 10Gb/s serial
  – 10Gb/s XAUI-based 4-lane
  – Autonegotiation
• In Sponsor ballot
• Meeting plan
  – Complete resolution of comments on P802.3ap/D3.1, 1st recirculation Sponsor ballot
  – Possibly request conditional approval for submittal to RevCom

P802.3aq 10GBASE-LRM
• Extends Ethernet capabilities at 10 Gb/s
  – New physical layer to run under 802.3ae specified XGMII
  – Operation over FDDI-grade multi-mode fiber
• Approved by Standards Board at September meeting
• Published 16 October 2006
• No meeting
  – Final report to 802.3

P802.3ar Congestion Management
• Proposed modified project documents failed to gain consensus support in July
• Motion to withdraw the project was postponed to this meeting
• Current draft advancement to WG ballot was not considered in July
• Meeting plan
  – Determine future of the project
  – Reevaluate

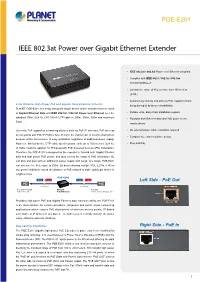

IEEE 802.3at Power over Gigabit Ethernet Extender

POE-E201: IEEE 802.3at Power over Gigabit Ethernet Extender

• IEEE 802.3at / 802.3af Power over Ethernet compliant
• Complies with IEEE 802.3 / 802.3u / 802.3ab 10/100/1000Base-T
• Extends the range of PoE to more than 100 meters (328 ft.)
• Automatically detects and protects PoE equipment from being damaged by incorrect installation
• Multiple units, daisy-chain installation support
• Forwards both Ethernet data and PoE power to the remote device
• No external power cable installation required
• Compact size, wall-mountable design
• Plug and Play

Long Distance High Power PoE and Gigabit Data Extension Solution

The PLANET POE-E201 is a newly designed, simple device that extends the reach of both Gigabit Ethernet data and IEEE 802.3at / 802.3af Power over Ethernet beyond the standard 100m (328 ft.) of CAT. 5/5e/6 UTP cable to 200m, 300m, 400m, and a maximum of 500m.

PoE networking devices such as PoE IP cameras, PoE wireless access points, and PoE IP phones have become mainstream in network deployments because they are easy to install and need no additional power supply. However, the UTP cable specifications limit the cable run for IP-based and PoE powered device (PD) installations to 100 meters (328 ft.). The POE-E201 is therefore designed as a repeater that forwards both Gigabit Ethernet data and high-power PoE, extending the range of a PoE installation. It is plug and play and needs no additional power supply or setup: a single POE-E201 increases the PoE range to 200m, and daisy-chaining multiple POE-E201s extends the distance of the PoE network to three or four times the standard length, or more.
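The reach figures quoted above (100m of cable alone, 200m with one extender, up to a 500m maximum) can be sketched as simple arithmetic. The model below assumes each daisy-chained extender adds one further 100m segment, which is an inference from those figures and not a statement from the datasheet. The function names are hypothetical.

```python
import math

SEGMENT_M = 100    # standard UTP segment length quoted in the text
MAX_REACH_M = 500  # maximum reach quoted in the text


def poe_reach_m(extenders: int) -> int:
    """Total reach in metres with `extenders` daisy-chained units (assumed model)."""
    if extenders < 0:
        raise ValueError("extenders must be non-negative")
    return min(SEGMENT_M * (extenders + 1), MAX_REACH_M)


def extenders_needed(distance_m: int) -> int:
    """Fewest extenders needed to cover `distance_m` under the same assumption."""
    if not 0 < distance_m <= MAX_REACH_M:
        raise ValueError(f"distance must be between 1 and {MAX_REACH_M} metres")
    return math.ceil(distance_m / SEGMENT_M) - 1


for n in range(5):
    print(n, poe_reach_m(n))  # 0 100, 1 200, 2 300, 3 400, 4 500

print(extenders_needed(250))  # 2
```

Under this model a 250m run needs two extenders, since one extender only reaches 200m.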