Balancing a Knapsack

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

-

Under the Direction of Denise S

EXPLORING MIDDLE GRADE TEACHERS’ KNOWLEDGE OF PARTITIVE AND QUOTITIVE FRACTION DIVISION by SOO JIN LEE (Under the Direction of Denise S. Mewborn) ABSTRACT The purpose of the present qualitative study was to investigate middle grades (Grade 5-7) mathematics teachers’ knowledge of partitive and quotitive fraction division. Existing research has documented extensively that preservice and inservice teachers lack adequate preparation in the mathematics they teach (e.g., Ball, 1990, 1993). Especially, research on teachers’ understanding of fraction division (e.g., Ball, 1990; Borko, 1992; Ma, 1999; Simon, 1993) has demonstrated that one or more pieces of an ideal knowledge package (Ma, 1999) for fraction division is missing. Although previous studies (e.g., Ball, 1990; Borko, 1992; Ma, 1999; Simon, 1993; Tirosh & Graeber, 1989) have stressed errors and constraints on teachers’ knowledge of fraction division, few studies have been conducted to explore teachers’ knowledge of fraction division at a fine-grained level (Izsák, 2008). Thus, I concentrated on teachers’ operations and flexibilities with conceptual units in partitive and quotitive fraction division situations. Specifically, I attempted to develop a model of teachers’ ways of knowing fraction division by observing their performance through a sequence of division problems in which the mathematical relationship between the dividend and the divisor became increasingly complex. This is my first step toward building a learning trajectory of teachers’ ways of thinking, which can be extremely useful for thinking about how to build an effective professional development program and a teacher education program. The theoretical frame that I developed for this study emerged through analyses of teachers’ participation in the professional development program where they were encouraged to reason with/attend to quantitative units using various drawings such as length and area models. -

Counting Keith Numbers

Journal of Integer Sequences, Vol. 10 (2007), Article 07.2.2 Counting Keith Numbers Martin Klazar Department of Applied Mathematics and Institute for Theoretical Computer Science (ITI) Faculty of Mathematics and Physics Charles University Malostranské nám. 25 11800 Praha Czech Republic [email protected] Florian Luca Instituto de Matemáticas Universidad Nacional Autónoma de México C.P. 58089 Morelia, Michoacán México [email protected] Abstract A Keith number is a positive integer $N$ with the decimal representation $a_1 a_2 \cdots a_n$ such that $n \ge 2$ and $N$ appears in the sequence $(K_m)_{m \ge 1}$ given by the recurrence $K_1 = a_1, \dots, K_n = a_n$ and $K_m = K_{m-1} + K_{m-2} + \cdots + K_{m-n}$ for $m > n$. We prove that there are only finitely many Keith numbers using only one decimal digit (i.e., $a_1 = a_2 = \cdots = a_n$), and that the set of Keith numbers is of asymptotic density zero. 1 Introduction With the number 197, let $(K_m)_{m \ge 1}$ be the sequence whose first three terms $K_1 = 1$, $K_2 = 9$ and $K_3 = 7$ are the digits of 197 and that satisfies the recurrence $K_m = K_{m-1} + K_{m-2} + K_{m-3}$ for all $m > 3$. Its initial terms are 1, 9, 7, 17, 33, 57, 107, 197, 361, 665, ... Note that 197 itself is a member of this sequence. This phenomenon was first noticed by Mike Keith and such numbers are now called Keith numbers. More precisely, a number $N$ with decimal representation $a_1 a_2 \cdots a_n$ is a Keith number if $n \ge 2$ and $N$ appears in the sequence $K^N = (K^N_m)_{m \ge 1}$ whose $n$ initial terms are the digits of $N$ read from left to right and satisfying $K^N_m = K^N_{m-1} + K^N_{m-2} + \cdots + K^N_{m-n}$ for all $m > n$. -
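The definition in this excerpt translates directly into a membership test. The following Python sketch is only an illustration of that definition, not code from the paper; the names `keith_sequence_prefix` and `is_keith_number` are made up for this example.

```python
def keith_sequence_prefix(n: int) -> list:
    """Return the terms of the digit-seeded sequence for n, up to the first term >= n."""
    digits = [int(ch) for ch in str(n)]
    k = len(digits)
    terms = digits[:]
    # Each new term is the sum of the previous k terms (k = number of digits).
    # Every digit is smaller than n when k >= 2, so the loop runs at least once.
    while terms[-1] < n:
        terms.append(sum(terms[-k:]))
    return terms


def is_keith_number(n: int) -> bool:
    """True if n has at least two digits and appears in its own sequence."""
    if n < 10:
        return False
    return keith_sequence_prefix(n)[-1] == n


print(keith_sequence_prefix(197))  # the terms quoted in the excerpt, ending at 197
print([n for n in range(10, 100) if is_keith_number(n)])
```

Because the terms are eventually strictly increasing, the search can stop at the first term that reaches or exceeds the candidate.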

Phd Complete Document

Idiopathy: A Novel and ‘A Failed Entertainment:’ The Process of Paying Attention in and to Infinite Jest by Sam Byers Thesis submitted for the qualification of Doctor of Philosophy at The University of East Anglia, Department of Literature, Drama, and Creative Writing, September 2013. © This copy of the thesis has been supplied on condition that anyone who consults it is understood to recognize that its copyright rests with the author and that use of any information derived there from must be in accordance with current UK Copyright Law. In addition, any quotation or extract must include full attribution. WORD COUNT: 90,000 words + Appendix 2 Abstract My PhD thesis combines a novel, Idiopathy, and a critical essay, ‘A Failed Entertainment:’ The Process of Paying Attention In and To Infinite Jest. Idiopathy examines the inner lives of three friends and their experiences of both separation and a reunion. By emphasising internality over externality it seeks to examine the notion that how we feel about our lives may be more important, indeed, more ‘real,’ than what takes place around us. Idiopathy is overtly concerned with the extent to which interaction with others is problematic, and pays particular attention to the emotional complexities of friendships, relationships and family dynamics. Through these pained and frequently unsatisfactory interactions the novel seeks to satirise broader social phenomena, such as media panics, health epidemics, ideological protest movements and the rise of what might be termed a ‘pathologised’ culture, in which all the characters are continually concerned that something is ‘wrong’ with them. Illness is a metaphor throughout, and is explored not only through the characters’ sense of personal malaise, but also through the device of a fictional cattle epidemic that forms a media backdrop to unfolding events. -

Section Handout

CS106B Handout 11 Autumn 2012 October 1st, 2012 Section Handout This first week, I’m only including two problems, because introductions might take 15 or so of the 50 minutes we have. One of the problems is a discussion problem, and the other is written up as an informal coding exercise. However, I’ll always allow your section leader and your peers to decide if you’d rather adopt the collaborative discussion-problem format for both of them. Discussion Problem 1: Publishing Stories Social networking sites like Facebook, LinkedIn, and Google+ typically record and publish stories about actions taken by you and your friends. Stories like: Jessie Duan accepted your friend request. Matt Anderson is listening to Green Day on Spotify. Patrick Costello wrote a note called "Because Faiz told me to". David Wang commented on Jeffrey Spehar’s status. Mike Vernal gave The French Laundry a 5-star review. are created from story templates like {name} accepted your friend request. {name} is listening to {band} on {application}. {name} wrote a note called "{title}". {name} commented on {target}’s status. {actor} gave {restaurant} a {rating}-star review. The specific story is generated from the skeletal one by replacing the tokens (substrings like "{name}", "{title}", and "{rating}") with event-specific values, like "Jessie Duan", "Because Faiz told me to", and "5". The token-value pairs can be packaged in a Map<string, string>, and given a story template and a data map, it’s possible to generate an actual story. Write the generateStory function, which accepts a story template (like "{actor} gave {restaurant} a {rating}-star review.") and a Map<string, string> (which might map "actor" to "Mike Vernal", "restaurant" to "The French Laundry", and "rating" to "5"), and builds a string just like the story template, except the tokens have been replaced by the text they map to. -
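One possible shape for the token substitution described in this excerpt is sketched below. The handout targets C++ with the course's Map<string, string>; this Python version uses a plain dictionary instead and is not the handout's solution. It assumes every "{" has a matching "}" and that every token appears in the data map.

```python
from typing import Mapping


def generate_story(template: str, data: Mapping[str, str]) -> str:
    """Copy the template, replacing each {token} with the value it maps to."""
    pieces = []
    i = 0
    while i < len(template):
        if template[i] == "{":
            # Assumption: the template is well formed, so a closing brace exists.
            close = template.index("}", i)
            token = template[i + 1:close]
            pieces.append(data[token])  # assumption: the token is present in data
            i = close + 1
        else:
            pieces.append(template[i])
            i += 1
    return "".join(pieces)


story = generate_story(
    "{actor} gave {restaurant} a {rating}-star review.",
    {"actor": "Mike Vernal", "restaurant": "The French Laundry", "rating": "5"},
)
print(story)
```

A production version would need to decide what to do with unknown tokens or unbalanced braces; this sketch simply raises an exception in those cases.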

Solving Knapsack and Related Problems

Solving knapsack and related problems Daniel Lichtblau Wolfram Research, Inc. 100 Trade Centre Dr. Champaign IL USA, 61820 [email protected] Abstract. Knapsack problems and variants thereof arise in several different fields from operations research to cryptography to really, really serious problems for hard-core puzzle enthusiasts. We discuss some of these and show ways in which one might formulate and solve them using Mathematica. 1. Introduction A knapsack problem is described informally as follows. One has a set of items. One must select from it a subset that fulfills specified criteria. A classical example, from cryptosystems, is what is called the "subset sum" problem. From a set S of numbers, and a given number k, find a subset of S whose sum is k. A variant is to find a subset whose sum is as close as possible to k. Another variant is to allow integer multiples of the summands, provided they are small. That is, we are to find a componentwise "small" vector v such that v.S ≈ k (where we regard S as being an ordered set, that is, a vector). More general knapsack problems may allow values other than zero and one (typically selected from a small range), inequality constraints, and other variations on the above themes. Of note is that the general integer linear programming problem (ILP) can be cast as a knapsack problem provided the search space is bounded. Each variable is decomposed into new variables, one for each "bit"; they are referred to as 0-1 variables because these are the values they may take. -
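To make the "subset sum" statement concrete, here is a small Python sketch that tracks every reachable sum together with one subset that produces it. This is a textbook-style approach chosen for illustration, not the Mathematica formulation the paper discusses, and the function name `subset_sum` is an assumption of this example. The number of distinct sums can grow exponentially, so it is only suitable for small inputs.

```python
def subset_sum(numbers, target):
    """Return one sub-list of numbers that sums to target, or None if there is none."""
    # Map each reachable sum to one list of elements that produces it.
    reachable = {0: []}
    for x in numbers:
        # Iterate over a snapshot so that x is used at most once per subset.
        for total, subset in list(reachable.items()):
            if total + x not in reachable:
                reachable[total + x] = subset + [x]
    return reachable.get(target)


S = [3, 34, 4, 12, 5, 2]
print(subset_sum(S, 9))   # some subset of S that sums to 9
print(subset_sum(S, 30))  # None: no subset of S sums to 30
```

The "as close as possible to k" variant mentioned in the excerpt could reuse the same table by picking the reachable sum nearest to the target.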

Numbers 1 to 100

Numbers 1 to 100 PDF generated using the open source mwlib toolkit. See http://code.pediapress.com/ for more information. PDF generated at: Tue, 30 Nov 2010 02:36:24 UTC Contents Articles: −1 (number), 0 (number), 1 (number), 2 (number), 3 (number), 4 (number), 5 (number), 6 (number), 7 (number), 8 (number), 9 (number), 10 (number), 11 (number), 12 (number), 13 (number), 14 (number), 15 (number), 16 (number), 17 (number), 18 (number), 19 (number), 20 (number), 21 (number), 22 (number), 23 (number), 24 (number), 25 (number), 26 (number), 27 (number), 28 (number), 29 (number), 30 (number), 31 (number), 32 (number), 33 (number), 34 (number), 35 (number), 36 (number), 37 (number), 38 (number), 39 (number), 40 (number), 41 (number), 42 (number), 43 (number), 44 (number), 45 (number), 46 (number), 47 (number), 48 (number), 49 (number), 50 (number), 51 (number), 52 (number), 53 (number), 54 (number), 55 (number), 56 (number), 57 (number), 58 (number), 59 (number), 60 (number), 61 (number), 62 (number), 63 (number), 64 (number), 66 (number), 67 (number), 68 (number), 69 (number), 70 (number), 71 (number), 72 (number), 73 (number), 74 (number), 75 (number), 77 (number), 78 (number), 79 (number), 80 (number), 81 (number), 82 (number), 83 (number), 84 (number), 85 (number), 86 (number), 87 (number), 88 (number) -

Solving Knapsack Problems

Solving knapsack problems Daniel Lichtblau Wolfram Research, Inc. 100 Trade Centre Dr. Champaign IL USA, 61820 [email protected] IMS 2004, Banff, Canada August 2004 Introduction Informal description of a knapsack problem: • One has a set of items. • One must select from it a subset that fulfills specified criteria. Classical Example: The "subset sum" problem, from cryptosystems • From a set S of numbers, and a given number k, find a subset of S whose sum is k. To be honest I am never certain of what specifically comprises a knapsack problem. I wanted to write up what I know about various types of problems that all seem to fall in or near the knapsack umbrella. I don’t know the correct definition of quadratic programs either, but that never stopped me from talking about them. Acknowledgements (probably should be apologies) I thank Christian Jacob and Peter Mitic for allowing me to send a paper relatively late in the process. I had for some time wanted to write up whatever I know, or suspect, about knapsack problems. This conference was my incentive to do so. Alas, while I found that I could do many of the things I had hoped, some problems proved elusive. I kept working at them after the paper went in, and so I now have an extended version as well. Not surprisingly, I STILL cannot solve all the problems I like to think fall into the knapsack category. So this may grow yet more. I also thank everyone whose methods and/or code I borrowed, adapted, or stole outright in the course of writing up the paper. -

BRAIN TICKLERS Brain Ticklers

BRAIN TICKLERS Brain Ticklers RESULTS FROM SUMMER 2011 Perfect: Brule, John D. MI B ’49; Couillard, J. Gregory IL A ’89; Gerken, Gary M. CA H ’11; Rasbold, J. Charles OH A ’83; Spong, Robert N. UT A ’58; *Thaller, David B. MA B ’93. Other: Aron, Gert IA B ’58; Beaudet, Paul R. Father of member; Bertrand, Richard M. WI B ’73; Conway, David B. TX I ’79; Davis, John H. OH G ’60
= 0. The generalized equation for a parabola is $ax^2 + bxy + cy^2 + dx + ey + f = 0$, with the further condition that $b^2 = 4ac$, where $a$ and $c$ aren’t both zero. (Wikipedia on the internet is one source of these requirements.) Substituting data points (3,0) and (-1,0) in the generalized equation yields $e = -2c$. And, substituting (0,1) and (0,-1) yields $d = 0$ and $a = -f$. The generalized equation then reduces to $ax^2 + bxy + cy^2 - 2cy - a = 0$. Now, substituting data point (3,0) in this equation gives
roots is $2/(3q^{0.5})$ for $q \ge 4$, and $0.5 - q/24$ for $q \le 4$, where $b$ and $c$ are both in the range $\pm q$. At $q = 4$, both equations give a 1/3 probability. A requirement for the quadratic equation to have complex roots is $c > b^2/4$. Prepare a set of Cartesian coordinates with $c$ on the vertical axis and $b$ on the horizontal axis, and plot the parabola $c = b^2/4$. Draw a square with sides $b = \pm q$ and $c = \pm q$. The region yielding complex roots is bounded below by the parabola and above and on the right by the square. -

Subject Index

Subject Index Many of these terms are defined in the glossary; others are defined in the Prime Curios! themselves. The boldfaced entries should indicate the key entries. γ 97; φ 79, 184, see golden ratio; π 8, 12, 90, 102, 106, 129, 136, 154, 164, 172, 173, 177, 181, 187, 218, 230, 232, 235; 5TP39 209; absolute prime 65, 146, 251; abundant number 103, 156; aibohphobia 19; aliquot sequence 13, 98; almost-all-even-digits prime 251; almost-equipandigital prime 251; alphabet code 50, 52, 61, 65, 73, 81, 83; alphaprime code 83, 92, 110; alternate-digit prime 251; Amdahl Six 38; American Mathematical Society 70, 102, 196, 270; Antikythera mechanism 44; apocalyptic number 72; Apollonius 101; Archimedean solid 19; Archimedes 25, 33, 101, 167; Arecibo Message 55; arithmetic progression 34, 81, 104, 112, 137, 158, 205, 210, 214, 219, 223, 226, 227, 236; Armstrong number 215; Ars Magna 20; ASCII 66, 158, 212, 230; atomic number 44, 51, 64, 65; Australopithecus afarensis 46; autism 85; autobiographical prime 192; averaging sets 186; Babbage 18, 146; Babbage (portrait) 147; balanced prime 12, 48, 113, 251; Balog 104, 159; Balog cube 104; baseball 38, 97, 101, 116, 127, 129; beast number 109, 129, 202, 204; beastly prime 142, 155, 229, 251; bemirp 113, 191, 210, 251; Bernoulli number 84, 94, 102; Bernoulli triangle 214; Bertrand prime 211; Bertrand's postulate 111, 211, 252; Bible 23, 45, 49, 50, 59, 72, 83, 85, 109, 158, 194, 216, 235, 236; composite-digit prime 59, 136, 252; computer mouse 187; congruence 252; congruent prime 29, 196, 203, 213, 222, 227, -

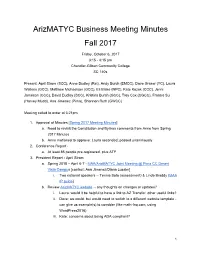

Approved Minutes Fall 2017

ArizMATYC Business Meeting Minutes Fall 2017 Friday, October 6, 2017 3:15 - 4:15 pm Chandler-Gilbert Community College SC 140s Present: April Strom (SCC), Anne Dudley (Ret), Andy Burch (EMCC), Dave Graser (YC), Laura Watkins (GCC), Matthew Michaelson (GCC), Eli Blake (NPC), Kate Kozak (CCC), Jenni Jameson (CCC), David Dudley (SCC), Kristina Burch (GCC), Trey Cox (CGCC), Francis Su (Harvey Mudd), Ana Jimenez (Pima), Shannon Ruth (GWCC) Meeting called to order at 3:21pm 1. Approval of Minutes [Spring 2017 Meeting Minutes] a. Need to revisit the Constitution and Bylaws comments from Anne from Spring 2017 Minutes b. Anne motioned to approve; Laura seconded; passed unanimously 2. Conference Report - a. At least 85 people pre-registered, plus ATF 3. President Report - April Strom a. Spring 2018 – April 6-7 - MAA/ArizMATYC Joint Meeting @ Pima CC Desert Vista Campus [contact: Ana Jimenez/Diana Lussier] i. Two national speakers -- Tensia Soto (assessment) & Linda Braddy (MAA IP guide) b. Review ArizMATYC website -- any thoughts on changes or updates? i. Laura: would it be helpful to have a link to AZ Transfer, other useful links? ii. Dave: we could, but would need to switch to a different website template - can give us example(s) to consider (like math-faq.com, using WordPress2016) iii. Kate: concerns about being ADA compliant? iv. Anne: not clear that “Google Group” is how to join communication group - change to “Join Us” or something more intuitive; also need to make sure we make Meeting information easily accessible and easy to find v. Matt: add links to jump to various parts of meeting information vi. -

Section Handout #1: Collections, File Reading, Big-O Based on Handouts by Various Current and Past CS106B/X Instructors and TAs

Nick Troccoli Section #1 CS 106X Week 2 Section Handout #1: Collections, File Reading, Big-O Based on handouts by various current and past CS106B/X instructors and TAs. 1. Mirror (Grid) Write a function mirror that accepts a reference to a grid of integers as a parameter and flips the grid along its diagonal, so that each index [i][j] contains what was previously at index [j][i] in the grid. You may assume the grid is square, that is, it has the same number of rows as columns. For example, the grid below on the left would be altered to give it the new grid state on the right: {{6, 1, 9, 4}, {-2, 5, 8, 12}, {14, 39, -6, 18}, {21, 55, 73, -3}} --> {{6, -2, 14, 21}, {1, 5, 39, 55}, {9, 8, -6, 73}, {4, 12, 18, -3}} Bonus: How would you solve this problem if the grid were not square? 2. reorder (Stack, Queue) Write a function named reorder that takes a queue of integers that are already sorted by absolute value, and modifies it so that the integers are sorted normally. Use a single Stack<int> to help you. For example, passing in the Queue below on the left would alter it to the new queue state on the right: {1, -2, 3, 4, -5, -6, 7} --> {-6, -5, -2, 1, 3, 4, 7} 3. Stack(s) as a Queue (Stack, Queue) Your job is to implement a Stack<int> using one or more Queues. That is, you will be responsible for writing the push and pop methods for a Stack<int> but your internal data representation must be a Queue: void push(Queue<int>& queue, int entry) int pop(Queue<int>& queue) Bonus: Conceptually, how would you implement a Queue using only one or more Stacks? 4. -
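The first two exercises in this excerpt can be sketched as follows. The handout expects C++ with the course's Grid, Stack and Queue types; this Python version substitutes a list of lists, a plain list used as a stack, and `collections.deque` used as a queue, so it is an illustration of one possible approach, not the handout's reference solution.

```python
from collections import deque


def mirror(grid):
    """Flip a square grid along its main diagonal, in place."""
    n = len(grid)
    for i in range(n):
        # Swap only the cells above the diagonal with their counterparts below it.
        for j in range(i + 1, n):
            grid[i][j], grid[j][i] = grid[j][i], grid[i][j]


def reorder(queue):
    """Sort a queue that is already ordered by absolute value, using one stack."""
    stack = []
    non_negative = 0
    # Pass 1: negatives go onto the stack, the rest cycle to the back of the queue.
    for _ in range(len(queue)):
        value = queue.popleft()
        if value < 0:
            stack.append(value)
        else:
            queue.append(value)
            non_negative += 1
    # The most negative value was pushed last, so popping yields ascending order.
    while stack:
        queue.append(stack.pop())
    # Pass 2: rotate the non-negative values behind the negatives.
    for _ in range(non_negative):
        queue.append(queue.popleft())


grid = [[6, 1, 9, 4], [-2, 5, 8, 12], [14, 39, -6, 18], [21, 55, 73, -3]]
mirror(grid)
print(grid)

q = deque([1, -2, 3, 4, -5, -6, 7])
reorder(q)
print(list(q))
```

The stack is what reverses the negatives: they arrive in order of increasing absolute value, which is decreasing numeric order, and leave in the opposite order.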

Dictionary of Mathematics

Dictionary of Mathematics English – Spanish | Spanish – English Diccionario de Matemáticas Inglés – Castellano | Castellano – Inglés Kenneth Allen Hornak Lexicographer © 2008 Editorial Castilla La Vieja Copyright 2012 by Kenneth Allen Hornak Editorial Castilla La Vieja, c/o P.O. Box 1356, Lansdowne, Penna. 19050 United States of America PH: (908) 399-6273 e-mail: [email protected] All dictionaries may be seen at: http://www.EditorialCastilla.com Sello: Fachada de la Universidad de Salamanca (ESPAÑA) ISBN: 978-0-9860058-0-0 All rights reserved. No part of this book may be reproduced or transmitted in any form or by any means, electronic or mechanical, including photocopying, recording or by any informational storage or retrieval system without permission in writing from the author Kenneth Allen Hornak. Reservados todos los derechos. Quedan rigurosamente prohibidos la reproducción de este libro, el tratamiento informático, la transmisión de alguna forma o por cualquier medio, ya sea electrónico, mecánico, por fotocopia, por registro u otros medios, sin el permiso previo y por escrito del autor Kenneth Allen Hornak. ACKNOWLEDGEMENTS Among those who have favoured the author with their selfless assistance throughout the extended period of compilation of this dictionary are Andrew Hornak, Norma Hornak, Edward Hornak, Daniel Pritchard and T.S. Gallione. Without their assistance the completion of this work would have been greatly delayed. AGRADECIMIENTOS Entre los que han favorecido al autor con su desinteresada colaboración a lo largo del dilatado período de acopio del material para el presente diccionario figuran Andrew Hornak, Norma Hornak, Edward Hornak, Daniel Pritchard y T.S. Gallione. Sin su ayuda la terminación de esta obra se hubiera demorado grandemente.