ZFS: Love Your Data

Recommended publications

Hybrid Drowsy SRAM and STT-RAM Buffer Designs for Dark-Silicon

This article has been accepted for inclusion in a future issue of this journal. Content is final as presented, with the exception of pagination. IEEE TRANSACTIONS ON VERY LARGE SCALE INTEGRATION (VLSI) SYSTEMS 1 Hybrid Drowsy SRAM and STT-RAM Buffer Designs for Dark-Silicon-Aware NoC Jia Zhan, Student Member, IEEE, Jin Ouyang, Member, IEEE, Fen Ge, Member, IEEE, Jishen Zhao, Member, IEEE, and Yuan Xie, Fellow, IEEE

Abstract: The breakdown of Dennard scaling prevents us from powering all transistors simultaneously, leaving a large fraction of dark silicon. This crisis has led to innovative work on power-efficient core and memory architecture designs. However, the research for addressing dark silicon challenges with network-on-chip (NoC), which is a major contributor to the total chip power consumption, is largely unexplored. In this paper, we comprehensively examine the network power consumers and the drawbacks of the conventional power-gating techniques. To overcome the dark silicon issue from the NoC's perspective, we propose DimNoC, a dim silicon scheme, which leverages recent drowsy SRAM design and spin-transfer torque RAM (STT-RAM) technology to replace pure SRAM-based NoC buffers. In particular, we propose two novel hybrid buffer architectures: 1) a hierarchical buffer architecture, which divides the input buffers into a set of levels with different power states and 2) a -

Fig. 1. 4 × 4 NoC-based multicore architecture. In each node, the local processing elements (core, L1, L2 caches, and so on) are attached to a router through an NI. Routers are interconnected through links to form an NoC. Four memory controllers are attached at the four corners, which will be used for off-chip memory access.

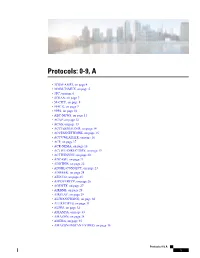

Protocols: 0-9, A

• 3COM-AMP3, on page 4 • 3COM-TSMUX, on page 5 • 3PC, on page 6 • 4CHAN, on page 7 • 58-CITY, on page 8 • 914C G, on page 9 • 9PFS, on page 10 • ABC-NEWS, on page 11 • ACAP, on page 12 • ACAS, on page 13 • ACCESSBUILDER, on page 14 • ACCESSNETWORK, on page 15 • ACCUWEATHER, on page 16 • ACP, on page 17 • ACR-NEMA, on page 18 • ACTIVE-DIRECTORY, on page 19 • ACTIVESYNC, on page 20 • ADCASH, on page 21 • ADDTHIS, on page 22 • ADOBE-CONNECT, on page 23 • ADWEEK, on page 24 • AED-512, on page 25 • AFPOVERTCP, on page 26 • AGENTX, on page 27 • AIRBNB, on page 28 • AIRPLAY, on page 29 • ALIWANGWANG, on page 30 • ALLRECIPES, on page 31 • ALPES, on page 32 • AMANDA, on page 33 • AMAZON, on page 34 • AMEBA, on page 35 • AMAZON-INSTANT-VIDEO, on page 36 • AMAZON-WEB-SERVICES, on page 37 • AMERICAN-EXPRESS, on page 38 • AMINET, on page 39 • AN, on page 40 • ANCESTRY-COM, on page 41 • ANDROID-UPDATES, on page 42 • ANET, on page 43 • ANSANOTIFY, on page 44 • ANSATRADER, on page 45 • ANY-HOST-INTERNAL, on page 46 • AODV, on page 47 • AOL-MESSENGER, on page 48 • AOL-MESSENGER-AUDIO, on page 49 • AOL-MESSENGER-FT, on page 50 • AOL-MESSENGER-VIDEO, on page 51 • AOL-PROTOCOL, on page 52 • APC-POWERCHUTE, on page 53 • APERTUS-LDP, on page 54 • APPLEJUICE, on page 55 • APPLE-APP-STORE, on page 56 • APPLE-IOS-UPDATES, on page 57 • APPLE-REMOTE-DESKTOP, on page 58 • APPLE-SERVICES, on page 59 • APPLE-TV-UPDATES, on page 60 • APPLEQTC, on page 61 • APPLEQTCSRVR, on page 62 • APPLIX, on page 63 • ARCISDMS, -

An Analysis of Data Corruption in the Storage Stack

An Analysis of Data Corruption in the Storage Stack Lakshmi N. Bairavasundaram∗, Garth R. Goodson†, Bianca Schroeder‡ Andrea C. Arpaci-Dusseau∗, Remzi H. Arpaci-Dusseau∗ ∗University of Wisconsin-Madison †Network Appliance, Inc. ‡University of Toronto {laksh, dusseau, remzi}@cs.wisc.edu, [email protected], [email protected]

Abstract An important threat to reliable storage of data is silent data corruption. In order to develop suitable protection mechanisms against data corruption, it is essential to understand its characteristics. In this paper, we present the first large-scale study of data corruption. We analyze corruption instances recorded in production storage systems containing a total of 1.53 million disk drives, over a period of 41 months. We study three classes of corruption: checksum mismatches, identity discrepancies, and parity inconsistencies. We focus on checksum mismatches since they occur the most. We find more than 400,000 instances of checksum mismatches over the 41-month period. -

latent sector errors, within disk drives [18]. Latent sector errors are detected by a drive's internal error-correcting codes (ECC) and are reported to the storage system. Less well-known, however, is that current hard drives and controllers consist of hundreds-of-thousands of lines of low-level firmware code. This firmware code, along with higher-level system software, has the potential for harboring bugs that can cause a more insidious type of disk error – silent data corruption, where the data is silently corrupted with no indication from the drive that an error has occurred. Silent data corruptions could lead to data loss more often than latent sector errors, since, unlike latent sector er-

Understanding Real World Data Corruptions in Cloud Systems

Understanding Real World Data Corruptions in Cloud Systems Peipei Wang, Daniel J. Dean, Xiaohui Gu Department of Computer Science North Carolina State University Raleigh, North Carolina {pwang7,djdean2}@ncsu.edu, [email protected]

Abstract: Big data processing is one of the killer applications for cloud systems. MapReduce systems such as Hadoop are the most popular big data processing platforms used in the cloud system. Data corruption is one of the most critical problems in cloud data processing, which not only has serious impact on the integrity of individual application results but also affects the performance and availability of the whole data processing system. In this paper, we present a comprehensive study on 138 real world data corruption incidents reported in Hadoop bug repositories. We characterize those data corruption problems in four aspects: 1) what impact can data corruption have on the application and system? 2) how is data corruption detected? 3) what are the

enough to require attention, little research has been done to understand software-induced data corruption problems. In this paper, we present a comprehensive study on the characteristics of the real world data corruption problems caused by software bugs in cloud systems. We examined 138 data corruption incidents reported in the bug repositories of four Hadoop projects (i.e., Hadoop-common, HDFS, MapReduce, YARN [17]). Although Hadoop provides fault tolerance, our study has shown that data corruptions still seriously affect the integrity, performance, and availability -

Persistent 9P Sessions for Plan 9

Persistent 9P Sessions for Plan 9 Gorka Guardiola, [email protected] Russ Cox, [email protected] Eric Van Hensbergen, [email protected] ABSTRACT Traditionally, Plan 9 [5] runs mainly on local networks, where lost connections are rare. As a result, most programs, including the kernel, do not bother to plan for their file server connections to fail. These programs must be restarted when a connection does fail. If the kernel's connection to the root file server fails, the machine must be rebooted. This approach suffices only because lost connections are rare. Across long distance networks, where connection failures are more common, it becomes woefully inadequate. To address this problem, we wrote a program called recover, which proxies a 9P session on behalf of a client and takes care of redialing the remote server and reestablishing connection state as necessary, hiding network failures from the client. This paper presents the design and implementation of recover, along with performance benchmarks on Plan 9 and on Linux. 1. Introduction Plan 9 is a distributed system developed at Bell Labs [5]. Resources in Plan 9 are presented as synthetic file systems served to clients via 9P, a simple file protocol. Unlike file protocols such as NFS, 9P is stateful: per-connection state such as which files are opened by which clients is maintained by servers. Maintaining per-connection state allows 9P to be used for resources with sophisticated access control policies, such as exclusive-use lock files and chat session multiplexers. It also makes servers easier to implement, since they can forget about file ids once a connection is lost. -

MRAM Technology Status

National Aeronautics and Space Administration MRAM Technology Status Jason Heidecker Jet Propulsion Laboratory California Institute of Technology Pasadena, California JPL Publication 13-3 2/13 NASA Electronic Parts and Packaging (NEPP) Program Office of Safety and Mission Assurance NASA WBS: 104593 JPL Project Number: 104593 Task Number: 40.49.01.09 Jet Propulsion Laboratory 4800 Oak Grove Drive Pasadena, CA 91109 http://nepp.nasa.gov

This research was carried out at the Jet Propulsion Laboratory, California Institute of Technology, and was sponsored by the National Aeronautics and Space Administration Electronic Parts and Packaging (NEPP) Program. Reference herein to any specific commercial product, process, or service by trade name, trademark, manufacturer, or otherwise, does not constitute or imply its endorsement by the United States Government or the Jet Propulsion Laboratory, California Institute of Technology. ©2013. California Institute of Technology. Government sponsorship acknowledged.

TABLE OF CONTENTS 1.0 Introduction 2.0 MRAM Technology 2.1 -

The Effects of Repeated Refresh Cycles on the Oxide Integrity of EEPROM Memories at High Temperature by Lynn Reed and Vema Reddy Tekmos, Inc

The Effects of Repeated Refresh Cycles on the Oxide Integrity of EEPROM Memories at High Temperature By Lynn Reed and Vema Reddy Tekmos, Inc. 4120 Commercial Center Drive, #400, Austin, TX 78744 [email protected], [email protected] Abstract Data retention in stored-charge based memories, such as Flash and EEPROMs, decreases with increasing temperature. Compensation for this shortening of retention time can be accomplished by refreshing the data using periodic erase-write refresh cycles, although the number of these cycles is limited by oxide integrity. An alternate approach is to use refresh cycles consisting of rewrite-only cycles, without the prior erase cycle. The viability of this approach requires that this refresh cycle induces less damage than an erase-write cycle. This paper studies the effects of repeated refresh cycles on oxide integrity in a high temperature environment and makes comparisons to the damage caused by erase-write cycles. The experiment consisted of running a large number of refresh cycles on a selected byte. The control group was other bytes which were not subjected to refresh-only cycles. The oxide integrity was checked by performing repeated erase-write cycles on each of the two groups to determine if the refresh cycles decreased the number of erase-write cycles before failure. Data was collected from multiple parts, with different numbers of refresh cycles, and at temperatures ranging from 25 °C to 190 °C. The experiment was conducted on microcontrollers containing embedded EEPROM memories. The microcontrollers were programmed to test and measure their own memories, and to report the results to an external controller. -

On the Effects of Data Corruption in Files

Just One Bit in a Million: On the Effects of Data Corruption in Files Volker Heydegger Universität zu Köln, Historisch-Kulturwissenschaftliche Informationsverarbeitung (HKI), Albertus-Magnus-Platz, 50968 Köln, Germany [email protected] Abstract. So far little attention has been paid to file format robustness, i.e., a file format's capability for keeping its information as safe as possible in spite of data corruption. The paper on hand reports on the first comprehensive research on this topic. The research work is based on a study on the status quo of file format robustness for various file formats from the image domain. A controlled test corpus was built which comprises files with different format characteristics. The files are the basis for data corruption experiments which are reported on and discussed. Keywords: digital preservation, file format, file format robustness, data integrity, data corruption, bit error, error resilience. 1 Introduction Long-term preservation of digital information is by now and will be even more so in the future one of the most important challenges for digital libraries. It is a task for which a multitude of factors have to be taken into account. These factors are hardly predictable, simply due to the fact that digital preservation is something targeting at the unknown future: Are we still able to maintain the preservation infrastructure in the future? Is there still enough money to do so? Is there still enough properly skilled manpower available? Can we rely on the current legal foundation in the future as well? How long is the technology we use at the moment sufficient for the preservation task? Are there major changes in technologies which affect the access to our digital assets? If so, do we have adequate strategies and means to cope with possible changes? and so on. -

Virtfs—A Virtualization Aware File System Pass-Through

VirtFS: A virtualization aware File System pass-through Venkateswararao Jujjuri, IBM Linux Technology Center, [email protected]; Eric Van Hensbergen, IBM Research Austin, [email protected]; Anthony Liguori, IBM Linux Technology Center, [email protected]; Badari Pulavarty, IBM Linux Technology Center, [email protected]

Abstract This paper describes the design and implementation of a paravirtualized file system interface for Linux in the KVM environment. Today's solution of sharing host files on the guest through generic network file systems like NFS and CIFS suffers from major performance and feature deficiencies as these protocols are not designed or optimized for virtualization. To address the needs of the virtualization paradigm, in this paper we are introducing a new paravirtualized file system called VirtFS. This new file system is currently under development and is being built using QEMU, KVM, VirtIO technologies and 9P2000.L protocol.

operations into block device operations and then again into host file system operations. In addition to performance improvements over a traditional virtual block device, exposing guest file system activity to the hypervisor provides greater insight to the hypervisor about the workload the guest is running. This allows the hypervisor to make more intelligent decisions with respect to I/O caching and creates new opportunities for hypervisor-based services like de-duplication. In Section 2 of this paper, we explore more details about the motivating factors for paravirtualizing the file system layer. In Section 3, we introduce the VirtFS design including an overview of the 9P protocol, which VirtFS is based on, along with a set of extensions introduced for greater Linux guest compatibility. -

Exploiting Asymmetry in Edram Errors for Redundancy-Free Error

This article has been accepted for publication in a future issue of this journal, but has not been fully edited. Content may change prior to final publication. Citation information: DOI 10.1109/TETC.2019.2960491, IEEE Transactions on Emerging Topics in Computing IEEE TRANSACTIONS ON EMERGING TOPICS IN COMPUTING 1 Exploiting Asymmetry in eDRAM Errors for Redundancy-Free Error-Tolerant Design Shanshan Liu (Member, IEEE), Pedro Reviriego (Senior Member, IEEE), Jing Guo, Jie Han (Senior Member, IEEE) and Fabrizio Lombardi (Fellow, IEEE) Abstract—For some applications, errors have a different impact on data and memory systems depending on whether they change a zero to a one or the other way around; for an unsigned integer, a one to zero (or zero to one) error reduces (or increases) the value. For some memories, errors are also asymmetric; for example, in a DRAM, retention failures discharge the storage cell. The tolerance of such asymmetric errors would result in a robust and efficient system design. Error Control Codes (ECCs) are one common technique for memory protection against these errors by introducing some redundancy in memory cells. In this paper, the asymmetry in the errors in Embedded DRAMs (eDRAMs) is exploited for error-tolerant designs without using any ECC or parity, which are redundancy-free in terms of memory cells. A model for the impact of retention errors and refresh time of eDRAMs on the False Positive rate or False Negative rate of some eDRAM applications is proposed and analyzed. Bloom Filters (BFs) and read-only or write-through caches implemented in eDRAMs are considered as the first case studies for this model. -

Looking to the Future by JOHN BALDWIN

Looking to the Future BY JOHN BALDWIN FreeBSD's 13.0 release delivers new features to users and refines the workflow for new contributions. FreeBSD contributors have been busy fixing bugs and adding new features since 12.0's release in December of 2018. In addition, FreeBSD developers have refined their vision to focus on FreeBSD's future users. An abbreviated list of some of the changes in 13.0 is given below. A more detailed list can be found in the release notes. Shifting Tools Not all of the changes in the FreeBSD Project over the last two years have taken the form of patches. Some of the largest changes have been made in the tools used to contribute to FreeBSD. The first major change is that FreeBSD has switched from Subversion to Git for storing source code, documentation, and ports. Git is widely used in the software industry and is more familiar to new contributors than Subversion. Git's distributed nature also more easily facilitates contributions from individuals who are not committers. FreeBSD had been providing Git mirrors of the Subversion repositories for several years, and many developers had used Git to manage in-progress patches. The Git mirrors have now become the official repositories and changes are now pushed directly to Git instead of Subversion. FreeBSD 13.0 is the first release whose sources are only available via Git rather than Subversion. -

Virtualization Best Practices

SUSE Linux Enterprise Server 15 SP1 Virtualization Best Practices SUSE Linux Enterprise Server 15 SP1 Publication Date: September 24, 2021

Contents 1 Virtualization Scenarios 2 Before You Apply Modifications 3 Recommendations 4 VM Host Server Configuration and Resource Allocation 5 VM Guest Images 6 VM Guest Configuration 7 VM Guest-Specific Configurations and Settings 8 Xen: Converting a Paravirtual (PV) Guest to a Fully Virtual (FV/HVM) Guest 9 External References

1 Virtualization Scenarios Virtualization offers a lot of capabilities to your environment. It can be used in multiple scenarios. To get more details about it, refer to the Book “Virtualization Guide” and in particular, to the following sections: Book “Virtualization Guide”, Chapter 1 “Virtualization Technology”, Section 1.2 “Virtualization Capabilities” Book “Virtualization Guide”, Chapter 1 “Virtualization Technology”, Section 1.3 “Virtualization Benefits” This best practice guide will provide advice for making the right choice in your environment. It will recommend or discourage the usage of options depending on your workload. Fixing configuration issues and performing tuning tasks will bring the performance of VM Guests close to bare metal.

2 Before You Apply Modifications 2.1 Back Up First Changing the configuration of the VM Guest or the VM Host Server can lead to data loss or an unstable state. It is really important that you do backups of files, data, images, etc. before making any changes. Without backups you cannot restore the original state after a data loss or a misconfiguration. Do not perform tests or experiments on production systems.