Long-Lived Digital Data Collections

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Digital Signals

Technical Information: Digital Signals. Part 1: Fundamentals. Technical Information series: Part 1: Fundamentals; Part 2: Self-operated Regulators; Part 3: Control Valves; Part 4: Communication; Part 5: Building Automation; Part 6: Process Automation. Should you have any further questions or suggestions, please do not hesitate to contact us: SAMSON AG, V74 / Schulung, Weismüllerstraße 3, D-60314 Frankfurt. Phone (+49 69) 4 00 94 67, Telefax (+49 69) 4 00 97 16, E-Mail: [email protected], Internet: http://www.samson.de. Part 1 ⋅ L150EN. Contents: Digital Signals; Range of values and discretization; Bits and bytes in hexadecimal notation; Digital encoding of information; Advantages of digital signal processing; High interference immunity; Short-time and permanent storage; Flexible processing; Various transmission options; Transmission of digital signals; Bit-parallel transmission; Bit-serial transmission; Appendix A1: Additional Literature. 99/12 ⋅ SAMSON AG. Digital Signals: In electronic signal and information processing and transmission, digital technology is increasingly being used because, in various applications, digital signal transmission has many advantages over analog signal transmission. Numerous and very successful applications of digital technology include the continuously growing number of PCs, the communication network ISDN as well as the increasing use of digital control stations (Direct Digital Control: DDC). Unlike analog technology which uses continuous signals, digital technology encodes the information into discrete signal states (Fig. 1). When only two discrete signal states are assigned per digital signal, these signals are termed binary signals. -

Measuring the Impact of Digital Repositories

Measuring the Impact of Digital Repositories, February 28-March 1, 2017. Participant Introductions. Bruce Ambacher’s career spans four decades at NARA, George Mason University, and the University of Maryland. His responsibilities included service as acting chief of NARA’s digital preservation unit and as court-appointed preservation officer for the PROFS, Iran-Contra, and Clinton email collections. He represented NARA on the Federal Geographic Data Committee and helped develop federal and international geospatial standards. He was NARA’s representative for the OAIS Reference Model. He co-chaired the development of TRAC. He helped develop the trustworthy digital repositories standards ISO 16363 and ISO 16919. He began teaching courses in archives and digital preservation at George Mason University in 1984, became an adjunct professor at the University of Maryland in 2000 and a Visiting Professor between 2007 and 2013. He has consulted on digital preservation for industry and cultural humanities institutions. He is a Research Affiliate of the Digital Curation Innovation Center at the University of Maryland. Mary Barlow is head of the Strategic Project Management Office at the European Bioinformatics Institute (EMBL-EBI), and has oversight of impact reporting and the development of infrastructure business cases. Prior to taking on this role, Mary served as a Programme Manager for the multi-million pound investment in EMBL-EBI by the UK government's Large Facilities Capital Fund. This programme included the construction of new office space and the on-going public procurement of ICT infrastructure to support EMBL-EBI's growing public databases. Mary's work prior to EMBL-EBI focused on ICT integration and intelligent buildings. -

The Institutional Challenges of Cyberinfrastructure and E-Research

E-Research and E-Scholarship: The Institutional Challenges of Cyberinfrastructure and E-Research. By Clifford Lynch. Scholarly practices across an astoundingly wide range of disciplines have become profoundly and irrevocably changed by the application of advanced information technology. This collection of new and emergent scholarly practices was first widely recognized in the science and engineering disciplines. In the late 1990s, the term e-science (or occasionally, particularly in Asia, cyber-science) began to be used as a shorthand for these new methods and approaches. The United Kingdom launched its formal e-science program in 2001.1 In the United States, a multi-year inquiry, having its roots in supercomputing support for the portfolio of science and engineering disciplines funded by the National Science Foundation, culminated in the production of the “Atkins Report” in 2003, though there was considerable delay before NSF began to act programmatically on the report.2 The quantitative social sciences, which are largely part of NSF’s funding purview and which have long traditions of data curation and sharing, as well as the use of high-end statistical computation, received more detailed examination in a 2005 NSF report.3 Key leaders in the humanities and qualitative social sciences recognized that IT-driven innovation in those disciplines was also well advanced, though less uniformly adopted (and indeed sometimes controversial). In fact, the humanities continue to showcase some of the most creative and transformative examples of the use of information technology to create new scholarship.4 Based on this recognition of the immense disciplinary scope of the impact of information technology, the more inclusive term e-research (occasionally, e-scholarship) has come into common use, at least in North America and Europe. -

The OCLC Research Survey of Special Collections and Archives

Taking Our Pulse: The OCLC Research Survey of Special Collections and Archives. Jackie M. Dooley, Program Officer; Katherine Luce, Research Intern. A publication of OCLC Research. Jackie M. Dooley and Katherine Luce, for OCLC Research. © 2010 OCLC Online Computer Library Center, Inc. Reuse of this document is permitted as long as it is consistent with the terms of the Creative Commons Attribution-Noncommercial-Share Alike 3.0 (USA) license (CC-BY-NC-SA): http://creativecommons.org/licenses/by-nc-sa/3.0/. October 2010. Updates: 15 November 2010, p. 75: corrected percentage in final sentence. 17 November 2010, p. 2: added Creative Commons license statement. 28 January 2011, p. 25, penultimate para., line 3: deleted “or more” following “300%”; p. 26, final para., 5th line: changed 89 million to 90 million; p. 30, final para.: changed 2009-10 to 2010-11; p. 75, final para.: changed 400 to 80; p. 76, 2nd para.: corrected funding figures; p. 90, final line: changed 67% to 75%. OCLC Research, Dublin, Ohio 43017 USA, www.oclc.org. ISBN: 1-55653-387-X (978-1-55653-387-7). OCLC (WorldCat): 651793026. Please direct correspondence to: Jackie Dooley, Program Officer, [email protected]. Suggested citation: Dooley, Jackie M., and Katherine Luce. 2010. Taking our pulse: The OCLC Research survey of special collections and archives. Dublin, Ohio: OCLC Research. http://www.oclc.org/research/publications/library/2010/2010-11.pdf. October 2010 Jackie M. -

Chapter 1 Computers and Digital Basics Computer Concepts 2014 1 Your Assignment…

Chapter 1: Computers and Digital Basics. Computer Concepts 2014. Your assignment: Prepare an answer for your assigned question. Use Chapter 1 in the book and this PowerPoint to procure information. Prepare a PowerPoint presentation to: 1. Show your answer and additional information/facts; make sure you understand and can explain your answer. Provide as much information and detail as possible. Add graphics to enhance. 2. Where in the book did you find your information? Include the page number. 3. What more do you need to find out to help you better understand this question? Be prepared to share your information with the class. The Digital Revolution: The digital revolution is an ongoing process of social, political, and economic change brought about by digital technology, such as computers and the Internet. The technology driving the digital revolution is based on digital electronics and the idea that electrical signals can represent data, such as numbers, words, pictures, and music. Digitization is the process of converting text, numbers, sound, photos, and video into data that can be processed by digital devices. The digital revolution has evolved through four phases, beginning with big, expensive, standalone computers, and progressing to today’s digital world in which small, inexpensive digital devices are everywhere. -

2018 Annual Report Alfred P

2018 Annual Report, Alfred P. Sloan Foundation. Contents: Preface; Mission Statement; From the President; The Year in Discovery; About the Grants Listing; 2018 Grants by Program; 2018 Financial Review; Audited Financial Statements and Schedules; Board of Trustees; Officers and Staff; Index of 2018 Grant Recipients. Cover: The Sloan Foundation Telescope at Apache Point Observatory, New Mexico as it appeared in May 1998, when it achieved first light as the primary instrument of the Sloan Digital Sky Survey. An early set of images is shown superimposed on the sky behind it. (CREDIT: DAN LONG, APACHE POINT OBSERVATORY) Preface: The ALFRED P. SLOAN FOUNDATION administers a private fund for the benefit of the public. It accordingly recognizes the responsibility of making periodic reports to the public on the management of this fund. The Foundation therefore submits this public report for the year 2018. Mission Statement: The ALFRED P. SLOAN FOUNDATION makes grants primarily to support original research and education related to science, technology, engineering, mathematics, and economics. The Foundation believes that these fields, and the scholars and practitioners who work in them, are chief drivers of the nation’s health and prosperity. The Foundation also believes that a reasoned, systematic understanding of the forces of nature and society, when applied inventively and wisely, can lead to a better world for all. From the President: ADAM F. -

ISTeC Distinguished Lectures, Colorado State

Colorado State University’s Information Science and Technology Center (ISTeC) presents two lectures by Dr. Fran Berman Director, San Diego Supercomputer Center Professor and HPC Endowed Chair, UC San Diego ISTeC Distinguished Lecture in conjunction with the Electrical and Computer Engineering Department and Computer Science Department Seminar Series “100 Years of Digital Data” Monday, March 31, 2008 Reception: 10:30 a.m. Lecture: 11:00 – 12:00 noon Location: Lory Student Center Room 214 • Joint Electrical and Computer Engineering Department and Computer Science Department Special Seminar sponsored by ISTeC “Cyberinfrastructure Challenges in Computer Science” Monday, March 31, 2008 Lecture: 4:00 – 5:00 p.m. Location: Wagar Room 231 ABSTRACTS “100 Years of Digital Data” The Information Age has brought with it a deluge of digital data. Current estimates are that in 2006, 161 exabytes (10^18 bytes) of digital data were created from cell phones, computers, iPods, DVDs, sensors, satellites, scientific instruments, and other sources, providing a foundation for our digital world. Migrating digital content through new generations of storage media, making sense of its content, and ensuring that needed information is accessible now and for the foreseeable future constitute some of the most critical challenges of the Information Age. The San Diego Supercomputer Center (SDSC) is a national Center leading the development and deployment of a comprehensive infrastructure for managing, storing, preserving, and using digital data. Leveraging ongoing collaborations with the research community (National Science Foundation, Department of Energy, etc.), data preservation and archival communities (Library of Congress, National Archives and Records Administration) and other partners, SDSC is providing innovative leadership in the emerging area of Data Cyberinfrastructure. -

Lecture Notes for Digital Electronics

Lecture Notes for Digital Electronics. Raymond E. Frey, Physics Department, University of Oregon, Eugene, OR 97403, USA. [email protected]. March, 2000. 1 Basic Digital Concepts: By converting continuous analog signals into a finite number of discrete states, a process called digitization, then to the extent that the states are sufficiently well separated so that noise does not create errors, the resulting digital signals allow the following (slightly idealized): • storage over arbitrary periods of time • flawless retrieval and reproduction of the stored information • flawless transmission of the information. Some information is intrinsically digital, so it is natural to process and manipulate it using purely digital techniques. Examples are numbers and words. The drawback to digitization is that a single analog signal (e.g. a voltage which is a function of time, like a stereo signal) needs many discrete states, or bits, in order to give a satisfactory reproduction. For example, it requires a minimum of 10 bits to determine a voltage at any given time to an accuracy of ≈ 0.1%. For transmission, one now requires 10 lines instead of the one original analog line. The explosion in digital techniques and technology has been made possible by the incredible increase in the density of digital circuitry, its robust performance, its relatively low cost, and its speed. The requirement of using many bits in reproduction is no longer an issue: The more the better. This circuitry is based upon the transistor, which can be operated as a switch with two states. Hence, the digital information is intrinsically binary. So in practice, the terms digital and binary are used interchangeably. -
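The 10-bit figure quoted in the excerpt above can be checked with a short calculation. The sketch below is an illustration only, not taken from the lecture notes: it assumes that "accuracy" means the size of one quantization step relative to full scale, and the function name `bits_for_resolution` is hypothetical.

```python
import math


def bits_for_resolution(relative_step: float) -> int:
    """Smallest bit count n whose step 2**-n is no larger than relative_step.

    Assumption (not from the source): accuracy is read as one quantization
    step expressed as a fraction of the full-scale range.
    """
    return math.ceil(math.log2(1.0 / relative_step))


n = bits_for_resolution(0.001)  # target: about 0.1 % of full scale
step = 1.0 / 2 ** n             # relative size of one step with n bits
print(n, f"{step:.4%}")
```

Under that assumption 9 bits give 512 levels, a step of roughly 0.2 %, which is too coarse, while 10 bits give 1024 levels and a step just under 0.1 %.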

Lecture 9 Analog and Digital I/Q Modulation

Lecture 9: Analog and Digital I/Q Modulation. Outline: Analog I/Q Modulation (Time Domain View, Polar View, Frequency Domain View); Digital I/Q Modulation (Phase Shift Keying, Constellations). 11/4/2006, Lecture 9, Fall 2006. Coherent Detection. [Figure: the transmitter multiplies $x(t)$ by $2\cos(2\pi f_o t)$ to produce $y(t)$; the receiver multiplies $y(t)$ by $2\cos(2\pi f_o t)$ to produce $z(t)$, which is lowpass filtered to give $r(t)$.] • Requires receiver local oscillator to be accurately aligned in phase and frequency to carrier sine wave. Impact of Phase Misalignment in Receiver Local Oscillator. [Figure: the same transmitter and receiver, but with the receiver local oscillator $2\sin(2\pi f_o t)$; the receiver output is zero.] • Worst case is when receiver LO and carrier frequency are phase shifted 90 degrees with respect to each other. Analog I/Q Modulation. [Figure: baseband inputs $i(t)$ and $q(t)$ are multiplied by cosine and sine carriers to give $i_t(t)$ and $q_t(t)$, which are combined into $y_t(t)$.] • Analog signals take on a continuous range of values (as viewed in the time domain) • I/Q signals are orthogonal and therefore can be transmitted simultaneously and fully recovered. Polar View of Analog I/Q Modulation: $i_t(t) = i(t)\cos(2\pi f_o t + 0^\circ)$, $q_t(t) = q(t)\cos(2\pi f_o t + 90^\circ) = q(t)\sin(2\pi f_o t)$, $y_t(t) = \sqrt{i^2(t) + q^2(t)}\,\cos(2\pi f_o t + \theta(t))$, where $\theta(t) = \tan^{-1}\big(q(t)/i(t)\big)$ and $-180^\circ < \theta < 180^\circ$. Polar View of Analog I/Q Modulation (Con’t) • Polar View shows amplitude and phase of $i_t(t)$, $q_t(t)$ and $y_t(t)$ combined signal for transmission at a given frequency f. -
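The coherent-detection and phase-misalignment points in the excerpt above can be illustrated numerically. This is a minimal sketch under stated assumptions, not the lecture's own code: the baseband values `i` and `q` are held constant, the transmitted signal is taken as `i*cos + q*sin`, the lowpass filter is idealized as an average over whole carrier periods, and the function names and the default carrier frequency are hypothetical.

```python
import math


def iq_modulate(i: float, q: float, fo: float, t: float) -> float:
    """Transmitted sample: i on the cosine carrier, q on the sine carrier."""
    w = 2.0 * math.pi * fo
    return i * math.cos(w * t) + q * math.sin(w * t)


def coherent_detect(i: float, q: float, fo: float = 1000.0,
                    phase: float = 0.0, samples: int = 10000) -> float:
    """Mix with 2*cos(2*pi*fo*t + phase), then average over 10 carrier periods.

    Averaging over whole periods stands in for an ideal lowpass filter;
    this is only valid here because i and q are assumed constant.
    """
    duration = 10.0 / fo
    w = 2.0 * math.pi * fo
    total = 0.0
    for k in range(samples):
        t = k * duration / samples
        total += iq_modulate(i, q, fo, t) * 2.0 * math.cos(w * t + phase)
    return total / samples


aligned = coherent_detect(0.7, -0.3)                          # cosine LO
quadrature = coherent_detect(0.7, -0.3, phase=-math.pi / 2)   # sine LO
misaligned = coherent_detect(1.0, 0.0, phase=-math.pi / 2)    # 90 degrees off
print(aligned, quadrature, misaligned)
```

With the cosine oscillator the average recovers `i`, with the sine oscillator (a phase of -90 degrees in this sketch) it recovers `q`, and an in-phase-only signal detected with a local oscillator 90 degrees off averages to approximately zero, which is the worst case the slides describe.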

Introduction (Pdf)

chapter1.fm Page 1 Thursday, August 17, 2000 4:43 PM. CHAPTER 1: INTRODUCTION. • The evolution of digital circuit design • Compelling issues in digital circuit design • How to measure the quality of digital design • Valuable references. 1.1 A Historical Perspective; 1.2 Issues in Digital Integrated Circuit Design; 1.3 Quality Metrics of A Digital Design; 1.4 Summary; 1.5 To Probe Further. 1.1 A Historical Perspective: The concept of digital data manipulation has made a dramatic impact on our society. One has long grown accustomed to the idea of digital computers. Evolving steadily from mainframe and minicomputers, personal and laptop computers have proliferated into daily life. More significant, however, is a continuous trend towards digital solutions in all other areas of electronics. Instrumentation was one of the first noncomputing domains where the potential benefits of digital data manipulation over analog processing were recognized. Other areas such as control were soon to follow. Only recently have we witnessed the conversion of telecommunications and consumer electronics towards the digital format. Increasingly, telephone data is transmitted and processed digitally over both wired and wireless networks. The compact disk has revolutionized the audio world, and digital video is following in its footsteps. The idea of implementing computational engines using an encoded data format is by no means an idea of our times. In the early nineteenth century, Babbage envisioned large-scale mechanical computing devices, called Difference Engines [Swade93]. Although these engines use the decimal number system rather than the binary representation now common in modern electronics, the underlying concepts are very similar. -

A Micro-Programmable Correlator for Real-Time Radar Processing

ALKER: Correlator for radar processing. A MICRO-PROGRAMMABLE CORRELATOR FOR REAL-TIME RADAR PROCESSING by Hans-Jørgen Alker, ELECTRONICS RESEARCH LABORATORY (ELAB), Trondheim, NORWAY. ABSTRACT: This paper presents a novel design of a multibit, digital correlator for incoherent scatter radar observations. By utilizing bit-slice microprocessor elements and internal control by microcode instructions, a high-speed arithmetical preprocessor has been developed. Arithmetical and control operations are separated to obtain increased speed performance. The arithmetical part is optimized for complex auto/cross correlation processing and has an effective rate of 24·10^6 multiplications/sec. Input data has 8-bit accuracy in integer format. The processor includes, at input, a 2-ported buffer memory for storing single/multiple channel inputs. Processing results are temporarily stored in a 4 K word high-speed memory before transferred to a general-purpose computer. Processing speed can be increased by adding up to 3 slave processors thus achieving direct parallel processing. Internal hardware is available for interface with standard CAMAC modules. INTRODUCTION: During 1976-79 a feasibility study of a digital, multibit correlator for the EISCAT (European Incoherent SCATter) radar system was conducted. The main topics of the study embraced system design and hardware construction of a digital processor which fulfilled the specifications for real-time data processing. The result of the study is a prototype construction of a high-speed multiprocessor system under interactive control of the EISCAT radar site computer. Support software for microprogram development, simulation and hardware testing are available for execution on a host computer. The main objectives for constructing a digital processor for the EISCAT system were the ultimate requirements for special purpose data handling algorithms and the real-time processing speed. -

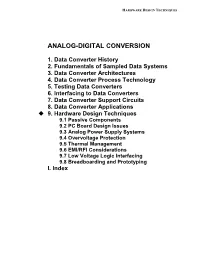

Hardware Design Techniques

HARDWARE DESIGN TECHNIQUES ANALOG-DIGITAL CONVERSION 1. Data Converter History 2. Fundamentals of Sampled Data Systems 3. Data Converter Architectures 4. Data Converter Process Technology 5. Testing Data Converters 6. Interfacing to Data Converters 7. Data Converter Support Circuits 8. Data Converter Applications 9. Hardware Design Techniques 9.1 Passive Components 9.2 PC Board Design Issues 9.3 Analog Power Supply Systems 9.4 Overvoltage Protection 9.5 Thermal Management 9.6 EMI/RFI Considerations 9.7 Low Voltage Logic Interfacing 9.8 Breadboarding and Prototyping I. Index ANALOG-DIGITAL CONVERSION HARDWARE DESIGN TECHNIQUES 9.1 PASSIVE COMPONENTS CHAPTER 9 HARDWARE DESIGN TECHNIQUES This chapter, one of the longer of those within the book, deals with topics just as important as all of those basic circuits immediately surrounding the data converter, discussed earlier. The chapter deals with various and sundry circuit/system issues which fall under the guise of system hardware design techniques. In this context, the design techniques may be all those support items surrounding a data converter, excluding the data converter itself. This includes issues of passive components, printed circuit design, power supply systems, protection of linear devices against overvoltage and thermal effects, EMI/RFI issues, high speed logic considerations, and finally, simulation, breadboarding and prototyping. Some of these topics aren't directly involved in the actual signal path of a design, but they are every bit as important as choosing the correct device and surrounding circuit values. Remote sensing and signal conditioning is such a vital part of data conversion that a considerable amount of discussion is given to topics such as overvoltage protection, cable driving, shielding, and receiving—where the remote interface is often with op amps and instrumentation amplifiers.