Downloaded from His Web Site

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Zugzwangs in Chess Studies

Zugzwangs in Chess Studies. Article in ICGA Journal, June 2011. DOI: 10.3233/ICG-2011-34205. Available at: https://www.researchgate.net/publication/290629887

Authors: Guy McCrossan Haworth (University of Reading), Harold M.J.F. van der Heijden (Gezondheidsdienst voor Dieren), Eiko Bleicher. Uploaded by Guy McCrossan Haworth on 23 January 2017.

ICGA Journal, June 2011, p. 82. NOTES

ZUGZWANGS IN CHESS STUDIES

G.McC. Haworth, H.M.J.F. van der Heijden and E. Bleicher

Reading, U.K., Deventer, the Netherlands and Berlin, Germany

ABSTRACT

Van der Heijden’s ENDGAME STUDY DATABASE IV, HHDBIV, is the definitive collection of 76,132 chess studies. The zugzwang position or zug, one in which the side to move would prefer not to, is a frequent theme in the literature of chess studies. In this third data-mining of HHDBIV, we report on the occurrence of sub-7-man zugs there as discovered by the use of CQL and Nalimov endgame tables (EGTs). We also mine those Zugzwang Studies in which a zug more significantly appears in both its White-to-move (wtm) and Black-to-move (btm) forms. We provide some illustrative and extreme examples of zugzwangs in studies.

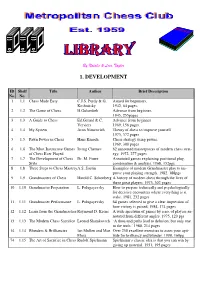

1. Development

By Natalie & Leon Taylor

1. DEVELOPMENT

| ID No. | Shelf No. | Title | Author | Brief Description |
|---|---|---|---|---|
| 1 | 1.1 | Chess Made Easy | C.J.S. Purdy & G. Koshnitsky | Aimed for beginners. 1942, 64 pages. |
| 2 | 1.2 | The Game of Chess | H. Golombek | Advance from beginner. 1945, 255 pages. |
| 3 | 1.3 | A Guide to Chess | Ed. Gerard & C. Verviers | Advance from beginner. 1969, 156 pages. |
| 4 | 1.4 | My System | Aron Nimzovich | Theory of chess to improve yourself. 1973, 372 pages. |
| 5 | 1.5 | Pawn Power in Chess | Hans Kmoch | Chess strategy using pawns. 1969, 300 pages. |
| 6 | 1.6 | The Most Instructive Games of Chess Ever Played | Irving Chernev | 62 annotated masterpieces of modern chess strategy. 1972, 277 pages. |
| 7 | 1.7 | The Development of Chess Style | Dr. M. Euwe | Annotated games explaining positional play, combination & analysis. 1968, 152 pages. |
| 8 | 1.8 | Three Steps to Chess Mastery | A.S. Suetin | Examples of modern Grandmaster play to improve your playing strength. 1982, 188 pages. |
| 9 | 1.9 | Grandmasters of Chess | Harold C. Schonberg | A history of modern chess through the lives of these great players. 1973, 302 pages. |
| 10 | 1.10 | Grandmaster Preparation | L. Polugayevsky | How to prepare technically and psychologically for decisive encounters where everything is at stake. 1981, 232 pages. |
| 11 | 1.11 | Grandmaster Performance | L. Polugayevsky | 64 games selected to give a clear impression of how victory is gained. 1984, 174 pages. |
| 12 | 1.12 | Learn from the Grandmasters | Raymond D. Keene | A wide spectrum of games by a number of players annotated from different angles. 1975, 120 pages. |
| 13 | 1.13 | The Modern Chess Sacrifice | Leonid Shamkovich | ‘A thousand paths lead to delusion, but only one to the truth.’ 1980, 214 pages. |
| 14 | 1.14 | Blunders & Brilliancies | Ian Mullen and Moe Moss | Over 250 excellent exercises to assess your aptitude for brilliancy and blunder. |

Download the Booklet of Chessbase Magazine #199

THE MAGAZINE FOR PROFESSIONAL CHESS

JANUARY / FEBRUARY 2021 | NO. 199 | 19,95 Euro

17 years old, second place at the Altibox Norway Chess: Alireza Firouzja is already among the Top 20

TOP GRANDMASTERS ANNOTATE: Duda, Edouard, Firouzja, Giri, Nielsen, et al.

ALL IN ONE: SEMI-TARRASCH. Igor Stohl condenses a trendy opening

AVRO TOURNAMENT 1938 – CLASH OF THE GENERATIONS. Retrospective + 18 newly annotated Keres games

LONDON SYSTEM – NO REST FOR THE Bf4. Alexey Kuzmin hits with the active 5...Nh5!?

THE MODERN BENONI UNDER FIRE! Patrick Zelbel presents a pointed repertoire with 6.Nf3/7.Bg5

DVD with first class training material for club players and professionals!

© ChessBase GmbH, Hamburg, 2021

EDITORIAL

The new chess stars: Alireza Firouzja and Beth Harmon

Now the world and also the world of chess has been hit by the long-feared “second wave” of the Covid-19 pandemic. Many tournaments have been cancelled.

… Moscow in order to measure herself against the world champion in a tournament. She is accompanied by a US official who warns her about the Soviets and advises her not to speak with anyone. And what happens? She is welcomed with enthusiasm by the popu-

The Check Is in the Mail June 2007

The Check Is in the Mail, June 2007

NOTICE: I will be out of the office from June 16 through June 25 to teach at Castle Chess Camp in Atlanta, Georgia. During that time I will be unable to answer any of your email, US mail, telephone calls, or any other form of communication.

NOTICE #2: The email address for USCF correspondence chess matters has changed to [email protected]

ICCF GRANDMASTER in 2006 ELECTRONIC KNIGHTS

Cesar Augusto Blanco-Gramajo, born January 14, 1959, is a Guatemalan ICCF Grandmaster now living in the US and participating in the 2006 Electronic Knights. Cesar has had an active career in ICCF (playing over 800 games there) and sports a 2562 ICCF rating along with the GM title which was awarded to him at the ICCF Congress in Ostrava in 2003. He took part in the great Rest of the World vs. Russia match, holding down Eleventh Board and making two draws against his Russian opponent.

GM Cesar Augusto Blanco-Gramajo

GAME OF THE MONTH

Cesar’s provisional USCF rating is 2463. As you watch this game unfold, you can almost see Blanco’s rating go upwards.

RUY LOPEZ (C67)
White: Cesar Blanco (2463)
Black: Benjamin Coraretti (0000)
2006 Electronic Knights

1.e4 e5 2.Nf3 Nc6 3.Bb5 Nf6 4.0–0 Nxe4 5.d4 Nd6 6.Bxc6 dxc6 7.dxe5 Ne4

An unusual sideline that seems to be gaining in popularity lately.

8.Qe2

White chooses to play the middlegame as opposed to the endgame after 8.Qxd8+. With an unstable Black Knight and quick occupancy of the d-file,

Draft – Not for Circulation

A Gross Miscarriage of Justice in Computer Chess

by Dr. Søren Riis

Introduction

In June 2011 it was widely reported in the global media that the International Computer Games Association (ICGA) had found chess programmer International Master Vasik Rajlich in breach of the ICGA’s annual World Computer Chess Championship (WCCC) tournament rule related to program originality. In the ICGA’s accompanying report it was asserted that Rajlich’s chess program Rybka contained “plagiarized” code from Fruit, a program authored by Fabien Letouzey of France. Some of the headlines reporting the charges and ruling in the media were “Computer Chess Champion Caught Injecting Performance-Enhancing Code”, “Computer Chess Reels from Biggest Sporting Scandal Since Ben Johnson” and “Czech Mate, Mr. Cheat”, accompanied by a photo of Rajlich and his wife at their wedding.

In response, Rajlich claimed complete innocence and made it clear that he found the ICGA’s investigatory process and conclusions to be biased and unprofessional, and the charges baseless and unworthy. He refused to be drawn into a protracted dispute with his accusers or mount a comprehensive defense.

This article re-examines the case. With the support of an extensive technical report by Ed Schröder, author of chess program Rebel (World Computer Chess champion in 1991 and 1992) as well as support in the form of unpublished notes from chess programmer Sven Schüle, I argue that the ICGA’s findings were misleading and its ruling lacked any sense of proportion. The purpose of this paper is to defend the reputation of Vasik Rajlich, whose innovative and influential program Rybka was in the vanguard of a mid-decade paradigm change within the computer chess community.

Chess Endgame News

Chess Endgame News. Article, published version.

Haworth, G. (2014) Chess Endgame News. ICGA Journal, 37 (3). pp. 166-168. ISSN 1389-6911. Available at http://centaur.reading.ac.uk/38987/

Publisher: The International Computer Games Association

ICGA Journal, September 2014, p. 166

CHESS ENDGAME NEWS

G.McC. Haworth, Reading, UK

This note investigates the recently revived proposal that the stalemated side should lose, and comments further on the information provided by the FRITZ14 interface to Ronald de Man’s DTZ50 endgame tables (EGTs). Tables 1 and 2 list relevant positions: data files (Haworth, 2014b) provide chess-line sources and annotation.

| Pos. | w-b | Endgame | FEN | Notes |
|---|---|---|---|---|
| g1 | 3-2 | KBPKP | 8/5KBk/8/8/p7/P7/8/8 b - - 34 124 | Korchnoi - Karpov, WCC.5 (1978) |
| g2 | 3-3 | KPPKPP | 8/6p1/5p2/5P1K/4k2P/8/8/8 b - - 2 65 | Anand - Kramnik, WCC.5 (2007) 65. … Kxf5 |
| g3 | 3-2 | KRKRB | 5r2/8/8/8/8/3kb3/3R4/3K4 b - - 94 109 | Carlsen - van Wely, Corus (2007) 109. … Bxd2 == |
| g4 | 7-7 | KQR..KQR.. | 2Q5/5Rpk/8/1p2p2p/1P2Pn1P/5Pq1/4r3/7K w | Evans - Reshevsky, USC (1963), 49. |
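The FEN strings listed for these positions can be read mechanically. The following is a minimal sketch, not taken from the note itself: it assumes only the standard FEN layout (piece placement in the first field, side to move in the second), and the helper names `count_men` and `side_to_move` are hypothetical.

```python
def count_men(fen: str) -> tuple[int, int]:
    """Return (white_men, black_men) counted from a FEN string.

    Only the first FEN field (piece placement) is inspected:
    uppercase letters are White men, lowercase letters are Black men.
    """
    placement = fen.split()[0]
    white = sum(1 for ch in placement if ch.isalpha() and ch.isupper())
    black = sum(1 for ch in placement if ch.isalpha() and ch.islower())
    return white, black


def side_to_move(fen: str) -> str:
    """Return 'w' or 'b' from the second FEN field."""
    return fen.split()[1]


if __name__ == "__main__":
    # Position g1 as listed above (Korchnoi - Karpov).
    g1 = "8/5KBk/8/8/p7/P7/8/8 b - - 34 124"
    print(count_men(g1), side_to_move(g1))
```

For position g1 this counts three White men (king, bishop, pawn) and two Black men (king, pawn), with Black to move.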

Chess Problems out of the Box

Werner Keym

Chess Problems Out of the Box

Nightrider Unlimited

Chess is an international language. (Edward Lasker)

Chess thinking is good. Chess lateral thinking is better.

Photo: Gabi Novak-Oster

In 2002 this chess problem (= no. 271) and this photo were published in the German daily newspaper Rhein-Zeitung Koblenz. That was a great success: most of the ‘solvers’ were wrong!

The content of this book differs in some ways from the German edition Eigenartige Schachprobleme (Curious Chess Problems) which was published in 2010 and meanwhile is out of print. The complete text of Eigenartige Schachprobleme (errata included) is freely available for download from the publisher’s site, see http://www.nightrider-unlimited.de/angebot/keym_1st_ed.pdf.

Copyright © Werner Keym, 2018. All rights reserved.

Kuhn † / Murkisch Series No. 46. Revised and updated edition 2018. First edition in German 2010.

Published by Nightrider Unlimited, Treuenhagen, www.nightrider-unlimited.de

Layout: Ralf J. Binnewirtz, Meerbusch

Printed / bound by KLEVER GmbH, Bergisch Gladbach

ISBN 978-3-935586-14-6

Contents

- Preface
- Chess composition is the poetry of chess
- Castling gala
- Four real castlings in directmate problems and endgame studies
- Four real castlings in helpmate two-movers
- Curious castling tasks
- From the Allumwandlung to the Babson task
- From the Valladao task to the Keym task
- The (lightened) 100 Dollar theme
- How to solve retro problems
- Economical retro records (type A, B, C, M)
- Economical retro records

Chessbase.Com - Chess News - Grandmasters and Global Growth

Grandmasters and Global Growth

07.01.2010

Professor Kenneth Rogoff is a strong chess grandmaster, who also happens to be one of the world's leading economists. In a Project Syndicate article that appeared this week Ken sees the new decade as one in which "artificial intelligence hits escape velocity," with an economic impact on par with the emergence of India and China. He uses computer chess to illustrate the point.

Grandmasters and Global Growth

By Kenneth Rogoff

As the global economy limps out of the last decade and enters a new one in 2010, what will be the next big driver of global growth? Here’s betting that the “teens” is a decade in which artificial intelligence hits escape velocity, and starts to have an economic impact on par with the emergence of India and China.

Admittedly, my perspective is heavily colored by events in the world of chess, a game I once played at a professional level and still follow from a distance. Though special, computer chess nevertheless offers both a window into silicon evolution and a barometer of how people might adapt to it.

A little bit of history might help. In 1996 and 1997, World Chess Champion Garry Kasparov played a pair of matches against an IBM computer named “Deep Blue.” At the time, Kasparov dominated world chess, in the same way that Tiger Woods – at least until recently – has dominated golf. In the 1996 match, Deep Blue stunned the champion by beating him in the first game.

2011-10 Working Version.Pmd

Northwest Chess, October 2011. $3.95

Northwest Chess

October 2011, Volume 65,10 Issue 765

ISSN Publication 0146-6941

Published monthly by the Northwest Chess Board. Office of record: 3310 25th Ave S, Seattle, WA 98144

POSTMASTER: Send address changes to: Northwest Chess, PO Box 84746, Seattle WA 98124-6046.

Periodicals Postage Paid at Seattle, WA. USPS periodicals postage permit number (0422-390)

NWC Staff

- Editor: Ralph Dubisch, [email protected]
- Publisher: Duane Polich, [email protected]
- Business Manager: Eric Holcomb, [email protected]

Board Representatives

David Yoshinaga, Josh Sinanan,

Contents

- Cover art: Robert Herrera
- Photo credit: Andrei Botez
- Page 3: Annual Post Office Statement (Eric Holcomb)
- Page 4: Portland Chess Club Centennial Open (Frank Niro)
- Page 17: Idaho Chess News (Jeffrey Roland)
- Page 21: SPNI History (Howard Hwa)
- Page 22: Two Games (Georgi Orlov, Kairav Joshi)
- Page 23: Letter to (and from) the editor (Philip McCready)
- Page 24: Publisher’s Desk and press release (Duane Polich)
- Page 25: Theoretically Speaking (Bill McGeary)
- Page 27: Book Reviews (John Donaldson)
- Page 29: NWGP 2011 (Murlin Varner)
- Page 30: USCF Delegates’ Report

Determining If You Should Use Fritz, Chessbase, Or Both Author: Mark Kaprielian Revised: 2015-04-06

Determining if you should use Fritz, ChessBase, or both

Author: Mark Kaprielian

Revised: 2015-04-06

Table of Contents

- I. Overview
- II. Not Discussed
- III. New information since previous versions of this document
- IV. Chess Engines
- V. Why most ChessBase owners will want to purchase Fritz as well
- VI. Comparing the features
- VII. Recommendations
- VIII. Additional resources

I. Overview

Fritz and ChessBase are software programs from the same company. Each has a specific focus, but they overlap in some functionality. Both programs can be considered specialized interfaces that sit on top a

Move Similarity Analysis in Chess Programs

Move similarity analysis in chess programs

D. Dailey, A. Hair, M. Watkins

Abstract

In June 2011, the International Computer Games Association (ICGA) disqualified Vasik Rajlich and his Rybka chess program for plagiarism and breaking their rules on originality in their events from 2006-10. One primary basis for this came from a painstaking code comparison, using the source code of Fruit and the object code of Rybka, which found the selection of evaluation features in the programs to be almost the same, much more than expected by chance. In his brief defense, Rajlich indicated his opinion that move similarity testing was a superior method of detecting misappropriated entries. Later commentary by both Rajlich and his defenders reiterated the same, and indeed the ICGA Rules themselves specify move similarity as an example reason for why the tournament director would have warrant to request a source code examination.

We report on data obtained from move-similarity testing. The principal dataset here consists of over 8000 positions and nearly 100 independent engines. We comment on such issues as: the robustness of the methods (upon modifying the experimental conditions), whether strong engines tend to play more similarly than weak ones, and the observed Fruit/Rybka move-similarity data.

1. History and background on derivative programs in computer chess

Computer chess has seen a number of derivative programs over the years. One of the first was the incident in the 1989 World Microcomputer Chess Championship (WMCCC), in which Quickstep was disqualified due to the program being “a copy of the program Mephisto Almeria” in all important areas.
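The idea behind move-similarity testing can be illustrated with a small sketch. This is not the authors' tool or their exact statistic: it assumes each engine has already produced one chosen move per test position, and it simply reports the fraction of positions on which two engines agree. The engine names and moves below are made-up placeholders.

```python
from itertools import combinations


def similarity(moves_a: list[str], moves_b: list[str]) -> float:
    """Fraction of positions on which two engines chose the same move."""
    if len(moves_a) != len(moves_b):
        raise ValueError("both engines must be tested on the same positions")
    if not moves_a:
        raise ValueError("at least one position is required")
    matches = sum(a == b for a, b in zip(moves_a, moves_b))
    return matches / len(moves_a)


def similarity_table(choices: dict[str, list[str]]) -> dict[tuple[str, str], float]:
    """Pairwise similarity for every unordered pair of engines."""
    names = sorted(choices)
    return {
        (x, y): similarity(choices[x], choices[y])
        for x, y in combinations(names, 2)
    }


if __name__ == "__main__":
    # Hypothetical data: one move per position, same position order for all engines.
    choices = {
        "engine_a": ["e4", "d4", "Nf3", "c4"],
        "engine_b": ["e4", "d4", "Nf3", "g3"],
        "engine_c": ["c4", "Nf3", "d4", "g3"],
    }
    for pair, score in similarity_table(choices).items():
        print(pair, f"{score:.2f}")
```

With thousands of positions and many engines, an unusually high match rate for one pair relative to the others is the kind of signal such testing looks for; how to judge "unusually high" is a statistical question this sketch does not address.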

1979 September 29

Chess

Short caught …

THIS YEAR's British Championship was one of the most sensational ever. The stage was set when Grandmaster Tony Miles flew in from an international in Argentina and decided to exercise his special last-minute entry option, apparently because he …

… an assistant at his Interzonal tournament. More quietly, at the other end of the scale, 14-year-old Nigel Short scraped in because the selected field was then extended to 48 players.

Miles began impressively, … position with International Masters Jonathan Speelman and Robert Bellin until in round eight the sensational happened. As White against Short, Miles selected an indifferent line against the French defence and was thrashed off the board.

Turmoil reigned! Short had great talent and an already formidable reputation - but no one had foreseen this. He couldn't possibly win the … flocked to see. To some of the top established players they represented vultures come to witness the greatest "humiliation" of British chess …

Next up was defending champion Speelman, amusingly described along with Miles as "having the physique of a boxer" in public information leaflets. Short defeated Speelman as well to take the sole lead on seven … of position and succumbed to John Littlewood. In round 10, as Black in a French again, Short drew with Nunn.

In the last round he met 27-year-old Robert Bellin, also on 7½ points. Bellin stood to win the championship on tie-break if they drew the game as he had faced stronger opposition earlier in the event. Against Bellin, Short rattled off his moves like a machinegun in the …