Perceptual Synthesis Engine: an Audio-Driven Timbre Generator

Total pages: 16. File type: PDF. Size: 1020 Kb.


Recommended publications


Integrating Technology into the K-12 Music Curriculum (Washington Office of the State Superintendent of Public Instruction, Olympia)

DOCUMENT RESUME. ED 343 837; SO 022 027. TITLE: Integrating Technology into the K-12 Music Curriculum. INSTITUTION: Washington Office of the State Superintendent of Public Instruction, Olympia. PUB DATE: Jun 90. NOTE: 247p. PUB TYPE: Guides - Non-Classroom Use (055). EDRS PRICE: MF01/PC10 Plus Postage. DESCRIPTORS: Computer Assisted Instruction; *Computer Software; Computer Uses in Education; Curriculum Development; Educational Resources; *Educational Technology; Elementary Secondary Education; *Music Education; *State Curriculum Guides; Student Educational Objectives. IDENTIFIERS: *Musical Instrument Digital Interface; *Washington.

ABSTRACT: This guide is intended to provide resources for integrating technology into the K-12 music curriculum. The focus of the guide is on computer software and the use of MIDI (Musical Instrument Digital Interface) in the music classroom. The guide gives two examples of commercially available curricula that integrate technology, as well as lesson plans that incorporate technology and music concerning the subjects of music fundamentals, aural literacy, composition, and history. The guide provides ERIC listings and literature concerning computer-assisted instruction. Ten appendices containing information on music-related technology are provided, including lists of software, software publishers, MIDI equipment manufacturers, books and videos, and book and periodical publishers. (DB)

Real-Time Interpretation over Pre-Recorded Sound Material

Real-Time Interpretation over Pre-Recorded Sound Material. João Pedro Martins Mealha dos Santos. Master's in Multimedia, Universidade do Porto. Dissertation supervised by Professor José Alberto Gomes, Universidade Católica Portuguesa - Escola das Artes. July 2014.

Acknowledgements: First, I want to thank my parents for all their support and help throughout. To my supervisor, José Alberto Gomes, a very special thank-you for all his patience and help with this dissertation. For their support, encouragement, and help, thanks to Sara Esteves, Inês Santos, Manuel Molarinho, Carlos Casaleiro, Luís Salgado, and all the other friends who, although physically absent, are always present. Thank you all very much!

Abstract: The main focus of this dissertation is real-time interpretation over pre-recorded sound material in a performance context. In this particular case, sound material is understood as music with a regular, well-defined pulse. The aim of this research is to understand the different models for organizing that material and, from this, to present a solution in the form of an application for live performance, titled Reap. Notably, the sound material used in the software presented here consists of entire songs, as opposed to the short samples common in many existing applications. In developing the application, statistical analysis of audio descriptors was applied to the pre-recorded material in order to extract segments that allow the sequential information originally contained in a song to be reorganized. Using matrix controllers with visual feedback, the arrangement and distribution of these segments can be altered and reorganized in a simpler way.

Technical Essays for Electronic Music, Peter Elsea, Fall 2002

Technical Essays for Electronic Music, Peter Elsea, Fall 2002. Contents:
Introduction, p. 2
The Propagation of Sound, p. 3
Acoustics for Music, p. 10
The Numbers (and Initials) of Acoustics, p. 16
Hearing and Perception, p. 23
Taking the Waveform Apart, p. 29
Some Basic Electronics, p. 34
Decibels and Dynamic Range, p. 39
Analog Sound Processors, p. 43
The Analog Synthesizer, p. 50
Sampled Sound Processors, p. 56
An Overview of Computer Music, p. 62
The Mathematics of Electronic Music, ...

Digital Piano

KORG ITALY Spa, Via Cagiata, 85, I-60027 Osimo (An), Italy. Web servers: www.korgpa.com, www.korg.co.jp, www.korg.com, www.korg.co.uk, www.korgcanada.com, www.korgfr.net, www.korg.de, www.korg.it, www.letusa.es. DIGITAL PIANO User's Manual (English), part number MAN0010006. © KORG Italy 2006. All rights reserved.

IMPORTANT SAFETY INSTRUCTIONS
• Read these instructions.
• Keep these instructions.
• Heed all warnings.
• Follow all instructions.
• Do not use this apparatus near water.
• Mains powered apparatus shall not be exposed to dripping or splashing, and no objects filled with liquids, such as vases, shall be placed on the apparatus.
• Clean only with dry cloth.
• Do not block any ventilation openings; install in accordance with the manufacturer's instructions.
• Do not install near any heat sources such as radiators, heat registers, stoves, or other apparatus (including amplifiers) that produce heat.

The lightning flash with arrowhead symbol within an equilateral triangle is intended to alert the user to the presence of uninsulated "dangerous voltage" within the product's enclosure that may be of sufficient magnitude to constitute a risk of electric shock to persons.

The exclamation point within an equilateral triangle is intended to alert the user to the presence of important operating and maintenance (servicing) instructions in the literature accompanying the product.

THE FCC REGULATION WARNING (FOR U.S.A.)
This equipment has been tested and found to comply with the limits for a Class B digital device, pursuant to Part 15 of the FCC Rules.

Generic Artistic Strategies and Ambivalent Affect

GENERIC ARTISTIC STRATEGIES AND AMBIVALENT AFFECT. Thomas Smith. A thesis in fulfillment of the requirements for the degree of Doctor of Philosophy, Faculty of Art & Design, 2019. Surname/Family Name: Smith. Given Name/s: Thomas William. Abbreviation for degree as given in the University calendar: 1292 - PhD Art, Design and Media. Faculty: Faculty of Art & Design. School: School of Art & Design. Thesis Title: Generic Artistic Strategies and Ambivalent Affect.

This practice-based thesis investigates how contemporary artists are using generic artistic strategies and ambivalent affect to inhabit post-digital conditions. It proposes that artists reproducing and re-performing generic post-digital aesthetics both engender and are enveloped by ambivalent affect, and that these strategies are immanently political rather than overtly critical. I argue that ambivalent artworks secede from the requirements for newness and innovation, and from the exploitation of creative labour, that are features of post-digital environments. Drawing on Sianne Ngai's affect theory and Paolo Virno's post-Fordist thinking, I summarise contemporary conditions for creativity as a 'general intellect of the post-digital', proposing that artists' and workers' relations with this mode of production are necessarily ambivalent. Throughout this research I produced several performance and video works that exemplify this ambivalence. These works reproduce standardised electronic music, stock imagery and PowerPoint presentations, alongside re-performances of digital labour such as online shopping, image searches, and paying rent online. Through these examples I develop concepts for discussing generic aesthetics, framing them as vital to understanding post-digital conditions.
I address works by other artists including Amalia Ulman's Excellences and Perfections (2014), which I argue is neither critical nor complicit with generic post-digital routines, but rather signals affective ambivalence and a desire to secede from social media performance.

Sound-Source Recognition: a Theory and Computational Model

Sound-Source Recognition: A Theory and Computational Model, by Keith Dana Martin. B.S. (with distinction) Electrical Engineering (1993), Cornell University. S.M. Electrical Engineering (1995), Massachusetts Institute of Technology. Submitted to the Department of Electrical Engineering and Computer Science in partial fulfillment of the requirements for the degree of Doctor of Philosophy in Electrical Engineering and Computer Science at the Massachusetts Institute of Technology, June 1999. © Massachusetts Institute of Technology, 1999. All Rights Reserved.
Author: Department of Electrical Engineering and Computer Science, May 17, 1999.
Certified by: Barry L. Vercoe, Professor of Media Arts and Sciences, Thesis Supervisor.
Accepted by: Professor Arthur C. Smith, Chair, Department Committee on Graduate Students.

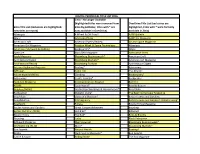

$10 DINNERS (OR LESS!); (inside) interior design review

| Publication | Genre | Language | Frequency |
| --- | --- | --- | --- |
| $10 DINNERS (OR LESS!) | Food & Cooking | English | One-Off |
| (inside) interior design review | Art & Photo | English | Bimonthly |

Title List of RBdigital Magazine Subscriptions (May 27, 2020)

Title List of RBdigital Magazine Subscriptions (May 27, 2020)

| Title | Subject | Language | Place of Publication |
| --- | --- | --- | --- |
| 7 Jours | News & General Interest | French | Canada |
| AD – France | Architecture | French | France |
| AD – Italia | Architecture | Italian | Italy |
| AD – Germany | Architecture | German | Germany |
| Advocate | Lifestyle | English | United States |
| Adweek | Business | English | United States |
| Affaires Plus (A+) | Business | French | Canada |
| All About History | History | English | United Kingdom |
| Allure | Women | English | United States |
| American Craft | Crafts & Hobbies | English | United States |
| American History | History | English | United States |
| American Poetry Review | Literature & Writing | English | United States |
| American Theatre | Theatre | English | United States |
| Aperture | Art & Photography | English | United States |
| Apple Magazine | Computers | English | United States |
| Architectural Digest | Architecture | English | United States |
| Architectural Digest - India | Architecture | English | India |
| Architectural Digest - Mexico | Architecture | Spanish | Mexico |
| Art & Décoration - France | Home & Lifestyle | French | France |
| Artist's Magazine | Art & Photography | English | United States |
| Astronomy | Science & Technology | English | United States |
| Audubon Magazine | Science & Technology | English | United States |
| Australian Knitting | Crafts & Hobbies | English | Australia |
| Avantages HS | Home & Lifestyle | French | France |
| AZURE | Home & Lifestyle | English | Canada |
| Backpacker | Sports & Recreation | English | United States |
| Bake from Scratch | Food & Beverage | English | United States |
| BBC Easycook | Food & Beverage | English | United Kingdom |
| BBC Good Food Magazine | Food & Beverage | English | United Kingdom |
| BBC History Magazine | ... | ... | ... |

The Early History of Music Programming and Digital Synthesis, Session 20

Chapter 20. Meeting 20, Languages: The Early History of Music Programming and Digital Synthesis

20.1. Announcements
• Music Technology Case Study Final Draft due Tuesday, 24 November

20.2. Quiz
• 10 Minutes

20.3. The Early Computer: History
• 1942 to 1946: Atanasoff-Berry Computer, the Colossus, the Harvard Mark I, and the Electronic Numerical Integrator and Computer (ENIAC)
• 1942: Atanasoff-Berry Computer [image courtesy of University Archives, Library, Iowa State University of Science and Technology; used with permission]
• 1946: ENIAC unveiled at University of Pennsylvania [image source: US Army]
• Diverse and incomplete computers [image © Wikimedia Foundation, license CC BY-SA; excluded from the course's Creative Commons license, see http://ocw.mit.edu/fairuse]

20.4. The Early Computer: Interface
• Punchcards
• 1960s: card printed for Bell Labs, for the GE 600 [image courtesy of Douglas W. Jones; used with permission]
• Fortran cards [image courtesy of Douglas W. Jones; used with permission]

20.5. The Jacquard Loom
• 1801: Joseph Jacquard invents a way of storing and recalling loom operations [photos courtesy of Douglas W. Jones at the University of Iowa, and by George H. Williams, from Wikipedia (public domain)]
• Multiple cards could be strung together
• Based on technologies of numerous inventors from the 1700s, including the automata of Jacques Vaucanson (Riskin 2003)

20.6. Computer Languages: Then and Now
• Low-level languages are closer to machine representation; high-level languages are closer to human abstractions
• Low level
  • Machine code: direct binary instructions
  • Assembly: mnemonics for machine codes
• High level: FORTRAN
  • 1954: John Backus at IBM designs the FORmula TRANslating System
  • 1958: Fortran II
  • 1977: ANSI Fortran
• High level: C
  • 1972: Dennis Ritchie at Bell Laboratories
  • Based on B
• Very high level: Lisp, Perl, Python, Ruby
  • 1958: Lisp by John McCarthy
  • 1987: Perl by Larry Wall
  • 1990: Python by Guido van Rossum
  • 1995: Ruby by Yukihiro "Matz" Matsumoto

Zinio Title List (Exclusives Are Highlighted, New Titles Are Noted)

DIGITAL PERIODICAL TITLE LIST 2016

Zinio Title List (exclusives are highlighted, new titles are noted): Allrecipes; Allure; American Craft; American Girl Magazine; American Patchwork & Quilting; Aperture; AppleMagazine; Architectural Digest; Architectural Record; Arizona Highways Magazine; ARTnews; Ask en español (NEW); Astronomy; Audubon Magazine; AZURE; Babybug (NEW); Backpacker; Bead Style; Bead&Button; Beadwork; Better Homes and Gardens; Better Nutrition (NEW); Bicycle Times; Bicycling; Billboard Magazine

Zinio - No Longer Available (highlighted titles were removed from Zinio by publisher; titles with * are now available in OverDrive): 4 Wheel & Off Road*; American Photo; American Poetry Review; Aviation Week & Space Technology; Backcountry*; Black Belt Magazine; Bloomberg Businessweek*; Bloomberg Markets*; Bloomberg Pursuits; Boating*; Cabin Life; Climbing; Cook's Country*; Cosmopolitan en Espanol; Cycle World*; Destination Weddings & Honeymoons*; Diabetic Living*; Electronic Musician*; Fit Pregnancy; Fitness; Great Garage Makeovers; Hot Bike*; Hot Rod*; Lucky; Men's Journal

OverDrive Title List (exclusives are highlighted; titles with * were formerly available in Zinio): AARP Bulletin; AARP the Magazine; Air and Space Magazine; Allrecipes; Allure; Alternative Press; American Craft; American Girl Magazine; Architectural Digest; Astronomy; The Atlantic; Backcountry*; Backpacker; Barron's; Bead & Button; Bead Style; The Beer Connoisseur Magazine; Better Homes and Gardens; Better Homes and Gardens' Diabetic Living*; Bicycle Times Magazine; Bicycling; Billboard; Birds & Blooms; Black Enterprise; Bloomberg Businessweek*

UVI Synthlegacy | Software User Manual

Software User Manual. Software Version 1.0 EN 160307.

End User License Agreement (EULA)
Do not use this product until the following license agreement is understood and accepted. By using this product, or allowing anyone else to do so, you are accepting this agreement.

Synth Legacy (henceforth 'the Product') is licensed to you as the end user. Please read this Agreement carefully. You cannot transfer ownership of these Sounds and Software they contain. You cannot re-sell or copy the Product.

LICENSE AND PROTECTION
1. License Grant
UVI grants to you, subject to the following terms and conditions, a non-exclusive, non-transferable right to use each authorized copy of the Product. The product is the property of UVI and is licensed to you only for use as part of a musical performance, live or recorded. This license expressly forbids resale or other distribution of the ...

3. Ownership
As between you and UVI, ownership of, and title to, the enclosed digitally recorded sounds (including any copies) are held by UVI. Copies are provided to you only to enable you to exercise your rights under the license.

4. Term
This agreement is effective from the date you open this package, and will remain in full force until termination. This agreement will terminate if you break any of the terms or conditions of this agreement. Upon termination you agree to destroy and return to UVI all copies of this product and accompanying documentation.

5. Restrictions
Except as expressly authorized in this agreement, you may not rent, sell, lease, sub-license, distribute, transfer, copy, reproduce, display, modify or time share the enclosed product or documentation.

Geoffrey Kidde Music Department, Manhattanville College Telephone: (914) 798 - 2708 Email: [email protected]

Geoffrey Kidde, Music Department, Manhattanville College. Telephone: (914) 798-2708. Email: [email protected]

Education:
1989 - 1995 Doctor of Musical Arts in Composition. Columbia University, New York, NY. Composition - Chou Wen-Chung, Mario Davidovsky, George Edwards. Theory - J. L. Monod, Jeff Nichols, Joseph Dubiel, David Epstein. Electronic and Computer Music - Mario Davidovsky, Brad Garton. Teaching Fellowships in Musicianship and Electronic Music.
1986 - 1988 Master of Music in Composition. New England Conservatory, Boston, MA. Composition - John Heiss, Malcolm Peyton. Theory - Robert Cogan, Pozzi Escot, James Hoffman. Electronic and Computer Music - Barry Vercoe, Robert Ceely.
1983 - 1985 Bachelor of Arts in Music. Columbia University, New York, NY. Theory - Severine Neff, Peter Schubert. Music History - Walter Frisch, Joel Newman, Elaine Sisman.
1981 - 1983 Princeton University, Princeton, NJ. Theory - Paul Lansky, Peter Westergaard. Computer Music - Paul Lansky. Improvisation - J. K. Randall.

Teaching Experience:
2014 - present Professor of Music. Manhattanville College.
2008 - 2014 Associate Professor of Music. Manhattanville College.
2002 - 2008 Assistant Professor of Music. Manhattanville College. Founding Director of Electronic Music Band (2004-2009).
1999 - 2002 Adjunct Assistant Professor of Music. Hofstra University, Hempstead, NY.
1998 - 2002 Adjunct Assistant Professor of Music. Queensborough Community College, CUNY, Bayside, NY.
1998 (fall semester) Adjunct Professor of Music. St. John's University, Jamaica, NY.