What Is System Hang and How to Handle It



Recommended publications


The Endokernel: Fast, Secure

The Endokernel: Fast, Secure, and Programmable Subprocess Virtualization. Bumjin Im (Rice University), Fangfei Yang (Rice University), Chia-Che Tsai (Texas A&M University), Michael LeMay (Intel Labs), Anjo Vahldiek-Oberwagner (Intel Labs), Nathan Dautenhahn (Rice University).

Abstract: Commodity applications contain more and more combinations of interacting components (user, application, library, and system) and exhibit increasingly diverse tradeoffs between isolation, performance, and programmability. We argue that the challenge of future runtime isolation is best met by embracing the multi-principle nature of applications, rethinking process architecture for fast and extensible intra-process isolation. We present the Endokernel, a new process model and security architecture that nests an extensible monitor into the standard process for building efficient least-authority abstractions. The Endokernel introduces a new virtual machine abstraction for representing subprocess authority, which is enforced by an efficient self-isolating monitor that maps the abstraction to system level objects (processes, threads, files, and signals). We show how the Endokernel Architecture can be used to develop specialized separation abstractions using an exokernel-like organization to provide virtual privilege rings, which we use to reorganize and secure NGINX.

Figure 1: Problem: intra-process is bypassable because domain is opaque to OS, sandbox limits functionality, and inter-process is slow and costly to apply. Red indicates limitations.

... enforces subprocess access control to memory and CPU state [10, 17, 24, 25, 75]. Unfortunately, these approaches only virtualize minimal parts of the CPU and neglect tying their ...

Impact Analysis of System and Network Attacks

Impact Analysis of System and Network Attacks, by Anupama Biswas. A thesis submitted in partial fulfillment of the requirements for the degree of Master of Science in Computer Science, Utah State University, Logan, Utah, 2008. All Graduate Theses and Dissertations, Graduate Studies, 12-2008. Approved: Dr. Robert F. Erbacher (Major Professor), Dr. Chad Mano (Committee Member), Dr. Stephen W. Clyde (Committee Member), Dr. Byron R. Burnham (Dean of Graduate Studies). Copyright © Anupama Biswas 2008. All Rights Reserved.

Recommended Citation: Biswas, Anupama, "Impact Analysis of System and Network Attacks" (2008). All Graduate Theses and Dissertations. 199. https://digitalcommons.usu.edu/etd/199

Abstract (Major Professor: Dr. Robert F. Erbacher; Department: Computer Science): Systems and networks have been under attack from the time the Internet first came into existence. There is always some uncertainty associated with the impact of the new attacks.

Hang Analysis: Fighting Responsiveness Bugs

Hang Analysis: Fighting Responsiveness Bugs. Xi Wang†, Zhenyu Guo‡, Xuezheng Liu‡, Zhilei Xu†, Haoxiang Lin‡, Xiaoge Wang†, Zheng Zhang‡ (†Tsinghua University, ‡Microsoft Research Asia).

Abstract: Soft hang is an action that was expected to respond instantly but instead drives an application into a coma. While the application usually responds eventually, users cannot issue other requests while waiting. Such hang problems are widespread in productivity tools such as desktop applications; similar issues arise in server programs as well. Hang problems arise because the software contains blocking or time-consuming operations in graphical user interface (GUI) and other time-critical call paths that should not. This paper proposes HANGWIZ to find hang bugs in source code, which are difficult to eliminate before release by testing, as they often depend on a user’s environment. HANGWIZ finds hang bugs by finding hang points: an invocation that is expected to complete quickly, such as a GUI action, but calls a blocking ...

... return to life. However, during the long wait, the user can neither cancel the operation nor close the application. The user may have to kill the application or even reboot the computer. The problem of “not responding” is widespread. In our experience, hang has occurred in everyday productivity tools, such as office suites, web browsers and source control clients. The actions that trigger the hang issues are often the ones that users expect to return instantly, such as opening a file or connecting to a printer. As shown in Figure 1(a), TortoiseSVN repository browser becomes unresponsive when a user clicks to expand a directory node of a remote source repository. The causes of “not responding” bugs may be complicated. An erroneous program that becomes unresponsive may contain deadlocks (Engler and Ashcraft 2003; Williams et al.

What Is an Operating System III 2.1 Components II An Operating System

What is an Operating System III 2.1 Components II

An operating system (OS) is software that manages computer hardware and software resources and provides common services for computer programs. The operating system is an essential component of the system software in a computer system. Application programs usually require an operating system to function.

Memory management: Among other things, a multiprogramming operating system kernel must be responsible for managing all system memory which is currently in use by programs. This ensures that a program does not interfere with memory already in use by another program. Since programs time share, each program must have independent access to memory. Cooperative memory management, used by many early operating systems, assumes that all programs make voluntary use of the kernel's memory manager, and do not exceed their allocated memory. This system of memory management is almost never seen any more, since programs often contain bugs which can cause them to exceed their allocated memory. If a program fails, it may cause memory used by one or more other programs to be affected or overwritten. Malicious programs or viruses may purposefully alter another program's memory, or may affect the operation of the operating system itself. With cooperative memory management, it takes only one misbehaved program to crash the system. Memory protection enables the kernel to limit a process' access to the computer's memory. Various methods of memory protection exist, including memory segmentation and paging. All methods require some level of hardware support (such as the 80286 MMU), which doesn't exist in all computers.

Exercises for Portfolio

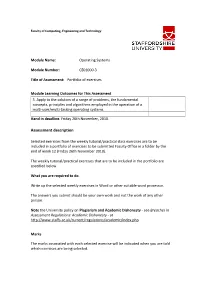

Faculty of Computing, Engineering and Technology. Module Name: Operating Systems. Module Number: CE01000-3. Title of Assessment: Portfolio of exercises. Module Learning Outcomes for This Assessment: 3. Apply to the solution of a range of problems, the fundamental concepts, principles and algorithms employed in the operation of a multi-user/multi-tasking operating systems. Hand in deadline: Friday 26th November, 2010.

Assessment description: Selected exercises from the weekly tutorial/practical class exercises are to be included in a portfolio of exercises to be submitted to the Faculty Office in a folder by the end of week 12 (Friday 26th November 2010). The weekly tutorial/practical exercises that are to be included in the portfolio are specified below.

What you are required to do: Write up the selected weekly exercises in Word or other suitable word processor. The answers you submit should be your own work and not the work of any other person. Note the University policy on Plagiarism and Academic Dishonesty - see Breaches in Assessment Regulations: Academic Dishonesty - at http://www.staffs.ac.uk/current/regulations/academic/index.php

Marks: The marks associated with each selected exercise will be indicated when you are told which exercises are being selected.

Week 1. Tutorial questions: 6. How does the distinction between supervisor mode and user mode function as a rudimentary form of protection (security) system? The operating system assures itself total control of the system by establishing a set of privileged instructions that are executable only in supervisor mode. A standard user therefore cannot execute these instructions, which is what provides the protection.

A Universal Framework for (Nearly) Arbitrary Dynamic Languages Shad Sterling Georgia State University

A Universal Framework for (Nearly) Arbitrary Dynamic Languages (A Theoretical Step Toward Unifying Dynamic Language Frameworks and Operating Systems), by Shad Sterling, under the direction of Rajshekhar Sunderraman. Undergraduate Honors Thesis, Honors College, Georgia State University, 5-2013.

Recommended Citation: Sterling, Shad, "A Universal Framework for (nearly) Arbitrary Dynamic Languages." Thesis, Georgia State University, 2013. https://scholarworks.gsu.edu/honors_theses/12

Abstract: Today's dynamic language systems have grown to include features that resemble features of operating systems. It may be possible to improve on both by unifying a language system with an operating system. Complete unification does not appear possible in the near-term, so an intermediate system is described. This intermediate system uses a common call graph to allow components in arbitrary languages to interact as easily as components in the same language. Potential benefits of such a system include significant improvements in interoperability,

Mac OS X: an Introduction for Support Providers

Mac OS X: An Introduction for Support Providers. Course Information.

Purpose of Course: Mac OS X is the next-generation Macintosh operating system, utilizing a highly robust UNIX core with a brand new simplified user experience. It is the first successful attempt to provide a fully-functional graphical user experience in such an implementation without requiring the user to know or understand UNIX. This course is designed to provide a theoretical foundation for support providers seeking to provide user support for Mac OS X. It assumes the student has performed this role for Mac OS 9, and seeks to ground the student in Mac OS X using Mac OS 9 terms and concepts.

Author: Robert Dorsett, manager, AppleCare Product Training & Readiness. Module Length: 2 hours. Audience: Phone support, Apple Solutions Experts, Service Providers. Prerequisites: Experience supporting Mac OS 9. Version 1.0. Copyright © 2001 by Apple Computer, Inc. All Rights Reserved.

Course map: Operating Systems 101 Mac OS 9 and Cooperative Multitasking Mac OS X: Pre-emptive Multitasking and Protected Memory. Mac OS X: Symmetric Multiprocessing Components of Mac OS X The Layered Approach Darwin Core Services Graphics Services Application Environments Aqua Useful Mac OS X Jargon Bundles Frameworks Umbrella Frameworks Mac OS X Installation Initialization Options Installation Options Startup Keys Mac OS X Setup Assistant Mac OS 9 and Classic Standard Directory Names Quick Answers: Where do my __________ go? More Directory Names A Word on Paths Security UNIX and security Multiple user implementation Root Old Stuff in New Terms INITs in Mac OS X Fonts FKEYs Printing from Mac OS X Disk First Aid and Drive Setup Startup Items Mac OS 9 Control Panels and Functionality mapped to Mac OS X New Stuff to Check Out Review Questions Review Answers Further Reading

Change history: 3/19/01: Removed comment about UFS volumes not being selectable by Startup Disk.

iRMX® 286/iRMX® II Troubleshooting Guide

iRMX® 286/iRMX® II Troubleshooting Guide. Q1/1990. Order Number: 273472-001. An Intel Technical Report from Technical Support Operations.

Intel Corporation, 2402 W. Beardsley Road, Phoenix, Arizona, Mailstop DV2-42. Intel Corporation (UK) Ltd., Pipers Way, Swindon, Wiltshire SN3 1RJ, United Kingdom. Intel Japan KK, 5-6 Tokodai, Toyosato-machi, Tsukuba-gun, Ibaragi-Pref. 300-26.

Copyright ©1990, Intel Corporation. The information in this document is subject to change without notice. Intel Corporation makes no warranty of any kind with regard to this material, including, but not limited to, the implied warranties of merchantability and fitness for a particular purpose. Intel Corporation assumes no responsibility for any errors that may appear in this document. Intel Corporation makes no commitment to update nor to keep current the information contained in this document. Intel Corporation assumes no responsibility for the use of any circuitry other than circuitry embodied in an Intel product. No other circuit patent licenses are implied. Intel software products are copyrighted by and shall remain the property of Intel Corporation. Use, duplication or disclosure is subject to restrictions stated in Intel's software license, or as defined in FAR 52.227-7013. No part of this document may be copied or reproduced in any form or by any means without the prior written consent of Intel Corporation. The following are trademarks of Intel Corporation

CS 151: Introduction to Computers

Information Technology: Introduction to Computers. Handout One: Computer Hardware

1. Components
   a. System board, Main board, Motherboard
   b. Central Processing Unit (CPU)
   c. RAM (Random Access Memory): SDRAM, DDR-RAM, RAMBUS
   d. Expansion cards
      i. ISA - Industry Standard Architecture
      ii. PCI - Peripheral Component Interconnect
      iii. PCMCIA - Personal Computer Memory Card International Association
      iv. AGP - Accelerated Graphics Port
   e. Sound
   f. Network Interface Card (NIC)
   g. Modem
   h. Graphics Card (AGP - Accelerated Graphics Port)
   i. Disk drives (A:\ floppy diskette; B:\ obsolete 5.25” floppy diskette; C:\ internal hard disk; D:\ CD-ROM (Compact Disk-Read Only Memory), CD-R/RW, DVD-ROM/R/RW)
2. Peripherals
   a. Monitor
   b. Printer
   c. Keyboard
   d. Mouse
   e. Joystick
   f. Scanner
   g. Web cam

Operating system: a collection of files and small programs that enables input and output. The operating system transforms the computer into a productive entity for human use.

BIOS (Basic Input Output System): date, time, language.

DOS: Disk Operating System.

Windows (dual, parallel development for home and industry): Windows 3.1, Windows 3.11 (Windows for Workgroups), Windows 95, Windows NT (New Technology), Windows 98, Windows NT 4.0, Windows Me, Windows 2000, Windows XP Home, Windows XP Professional.

The Evolution of Windows: In the early 1980s IBM introduced the Personal Computer (PC) using the Intel 8088 processor and Microsoft's Disk Operating System (DOS). This was a scaled-down computer aimed at business which allowed a single user to execute a single program. Many changes have been introduced over the last 20 years to bring us to where we are now.

Principles of Operating Systems Name (Print): Fall 2019 Seat: SEAT Final

Principles of Operating Systems, Fall 2019, Final, 12/13/2019. Name (Print): Seat: SEAT Left person: Right person: Time Limit: 8:00am - 10:00pm

- Don't forget to write your name on this exam.
- This is an open book, open notes exam. But no online or in-class chatting.
- Ask us if something is confusing.
- Organize your work, in a reasonably neat and coherent way, in the space provided. Work scattered all over the page without a clear ordering will receive very little credit.
- Mysterious or unsupported answers will not receive full credit. A correct answer, unsupported by explanation will receive no credit; an incorrect answer supported by substantially correct explanations might still receive partial credit.
- If you need more space, use the back of the pages; clearly indicate when you have done this.

| Problem | Points | Score |
|---------|--------|-------|
| 1       | 10     |       |
| 2       | 15     |       |
| 3       | 15     |       |
| 4       | 15     |       |
| 5       | 17     |       |
| 6       | 15     |       |
| 7       | 4      |       |
| Total:  | 91     |       |

1. Operating system interface (a) (10 points) Write code for a simple program that implements the following pipeline: cat main.c | grep "main" | wc. I.e., your program should start several new processes: one for the cat main.c command, one for grep main, and one for wc. These processes should be connected with pipes so that cat main.c redirects its output into the grep "main" program, which itself redirects its output to wc.

forked pid:811 forked pid:812 fork failed, pid:-1

2.
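The pipeline in question 1(a) can be sketched as follows. This is an illustrative sketch in Python using the standard `subprocess` module, not the exam's expected answer (which would presumably use `fork`, `pipe`, and `exec` in C). The function name `run_pipeline` is hypothetical, and the sketch assumes the Unix utilities `cat`, `grep`, and `wc` are on the PATH.

```python
import subprocess


def run_pipeline(path):
    """Run the equivalent of `cat PATH | grep main | wc` and return wc's output.

    Hypothetical helper for illustration: three child processes are started
    and connected with two pipes, one between each adjacent pair.
    """
    # Process 1: cat writes the file into the first pipe.
    cat = subprocess.Popen(["cat", path], stdout=subprocess.PIPE)

    # Process 2: grep reads the first pipe and writes into the second.
    grep = subprocess.Popen(
        ["grep", "main"], stdin=cat.stdout, stdout=subprocess.PIPE
    )
    # Close the parent's copy of the read end so cat can receive SIGPIPE
    # if grep exits early.
    cat.stdout.close()

    # Process 3: wc reads the second pipe; its output is captured here.
    wc = subprocess.Popen(["wc"], stdin=grep.stdout, stdout=subprocess.PIPE)
    grep.stdout.close()

    out, _ = wc.communicate()
    # Reap the upstream children so none is left as a zombie.
    cat.wait()
    grep.wait()
    return out.decode()
```

Calling `run_pipeline("main.c")` returns the line, word, and byte counts that `wc` reports for the lines of `main.c` containing "main". Closing the parent's copies of the pipe ends matters: if the parent kept them open, a downstream process might never see end-of-file.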

Computer Viruses and Malware Advances in Information Security

Computer Viruses and Malware. Advances in Information Security. Sushil Jajodia, Consulting Editor, Center for Secure Information Systems, George Mason University, Fairfax, VA 22030-4444.

The goals of the Springer International Series on ADVANCES IN INFORMATION SECURITY are, one, to establish the state of the art of, and set the course for future research in information security and, two, to serve as a central reference source for advanced and timely topics in information security research and development. The scope of this series includes all aspects of computer and network security and related areas such as fault tolerance and software assurance. ADVANCES IN INFORMATION SECURITY aims to publish thorough and cohesive overviews of specific topics in information security, as well as works that are larger in scope or that contain more detailed background information than can be accommodated in shorter survey articles. The series also serves as a forum for topics that may not have reached a level of maturity to warrant a comprehensive textbook treatment. Researchers, as well as developers, are encouraged to contact Professor Sushil Jajodia with ideas for books under this series.

Additional titles in the series: HOP INTEGRITY IN THE INTERNET by Chin-Tser Huang and Mohamed G. Gouda; ISBN-10: 0-387-22426-3. PRIVACY PRESERVING DATA MINING by Jaideep Vaidya, Chris Clifton and Michael Zhu; ISBN-10: 0-387-25886-8. BIOMETRIC USER AUTHENTICATION FOR IT SECURITY: From Fundamentals to Handwriting by Claus Vielhauer; ISBN-10: 0-387-26194-X. IMPACTS AND RISK ASSESSMENT OF TECHNOLOGY FOR INTERNET SECURITY: Enabled Information Small-Medium Enterprises (TEISMES) by Charles A.

Recovering from Operating System Crashes

Recovering from Operating System Crashes. Francis David (Department of Computer Science, University of Illinois at Urbana-Champaign, Urbana, USA), Daniel Chen (Department of Electrical and Computer Engineering, University of Illinois at Urbana-Champaign, Urbana, USA).

Abstract: When an operating system crashes and hangs, it leaves the machine in an unusable state. All currently running program state and data is lost. The usual solution is to reboot the machine and restart user programs. However, it is possible that after a crash, user program state and most operating system state is still in memory and hopefully, not corrupted. In this project, we use a watchdog timer to reset the processor on an operating system crash. We have modified the Linux kernel and added a recovery routine that is called instead of the normal boot up function when the processor is reset by a watchdog. This resumes execution of user processes after killing the process that was executing when the watchdog fired. We have implemented this on the ARM architecture and we use a periodic watchdog kick ...

... timers and processor support techniques [12] are required to detect such crashes. It is accepted behavior that when an operating system crashes, all currently running user programs and data in volatile memory is lost and unrecoverable because the processor halts and the system needs to be rebooted. This is in spite of the fact that all of this information is available in volatile memory as long as there is no power failure. This paper explores the possibility of recovering the operating system using the contents of memory in order to resume execution of user programs without any data loss.
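The watchdog idea mentioned in this excerpt (a timer that fires unless it is kicked periodically) can be illustrated with a small user-space sketch. This is not the authors' kernel implementation. The class name `Watchdog`, its methods, and the callback are assumptions made for illustration, built on Python's standard `threading.Timer`. A hardware watchdog would reset the processor, whereas this sketch simply calls a function.

```python
import threading


class Watchdog:
    """Hypothetical software watchdog: calls on_expire unless kicked in time."""

    def __init__(self, timeout, on_expire):
        self.timeout = timeout        # seconds allowed between kicks
        self.on_expire = on_expire    # stand-in for "reset the processor"
        self._lock = threading.Lock()
        self._timer = None

    def kick(self):
        """Restart the countdown; a healthy system calls this periodically."""
        with self._lock:
            if self._timer is not None:
                self._timer.cancel()
            self._timer = threading.Timer(self.timeout, self.on_expire)
            self._timer.daemon = True
            self._timer.start()

    def stop(self):
        """Disarm the watchdog."""
        with self._lock:
            if self._timer is not None:
                self._timer.cancel()
                self._timer = None
```

A monitored loop would call `kick()` on each iteration. If the loop hangs for longer than `timeout` seconds, `on_expire` runs on a separate timer thread, which is the point at which a recovery routine could take over.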