Crossing the Line: Moving from Film to Games and Possibly Back

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications


The Reality of Online Learning for Low-Income Students and Their Families

The Reality of Online Learning for Low-Income Students and Their Families. Elisabeth Sweeney, Leah Doucot, Owen Cahill. Here we see students, who may come from families of different incomes, working on computers inside school before the Covid-19 pandemic. All of them are working to better their education with school-supplied computers. Not all schools are able to supply their less fortunate students with computers so that they can continue their education outside of an actual school building. However, even if schools could supply lower-income families with computers, the real question is how many of these students would have access to the internet. Here is a teacher at the front of her classroom with a group of students who may have been struggling to understand the topic; in a normal school setting, this teacher was able to work more closely with these kids to ensure they fully understood what was being taught. The coronavirus shut down schools in March, and they stayed closed for the rest of the school year. Here is a Covid-19 testing center: cars go through a maze of cones and lanes before their occupants are finally tested for the virus. Classrooms are empty, with no students or teachers. Teachers now have to teach online without knowing whether they are going back. They think of their students who don't have the resources to complete online learning.

Game Developers Conference Europe Wrap, New Women’s Group Forms, Licensed to Steal Super Genre Break Out, and More

OCTOBER 2005. THE LEADING GAME INDUSTRY MAGAZINE. PRODUCT REVIEWS: SPEEDTREE RT 1.7 * SPACEPILOT. POSTMORTEM: ART & ARTIFICE IN RESIDENT EVIL 4. WALKING THE PLANK: DANIEL JAMES ON CASUAL MMO GOLD. INNER PRODUCT: DEBUG? RELEASE? LET’S DEVELOP!

Thanks to our publishers for helping us create a new world of video games. GameTap™ and many of the video game industry’s leading publishers have joined together to create a new world where you can play hundreds of the greatest games right from your broadband-connected PC. It’s gaming freedom like never before. START PLAYING AT GAMETAP.COM. TM & © 2005 Turner Broadcasting System, Inc. A Time Warner Company. Patent Pending. All Rights Reserved.

CONTENTS. OCTOBER 2005, VOLUME 12, NUMBER 9. FEATURES.

11 TOP 20 PUBLISHERS. Who’s the top dog on the publishing block? Ranked by their revenues, the quality of the games they release, developer ratings, and other factors pertinent to serious professionals, our annual Top 20 list calls attention to the definitive movers and shakers in the publishing world. By Tristan Donovan.

21 INTERVIEW: A PIRATE’S LIFE. What do pirates, cowboys, and massively multiplayer online games have in common? They all have Daniel James on their side. James is CEO of Three Rings, and his mission has been to create an addictive MMO (or two) that has the pick-up-put-down rhythm of a casual game. In this interview, James discusses the barriers to distributing and charging for such games, the beauty of the web, and the trouble with executables.

Vintage Game Consoles: An Inside Look at Apple, Atari

Vintage Game Consoles Bound to Create You are a creator. Whatever your form of expression — photography, filmmaking, animation, games, audio, media communication, web design, or theatre — you simply want to create without limitation. Bound by nothing except your own creativity and determination. Focal Press can help. For over 75 years Focal has published books that support your creative goals. Our founder, Andor Kraszna-Krausz, established Focal in 1938 so you could have access to leading-edge expert knowledge, techniques, and tools that allow you to create without constraint. We strive to create exceptional, engaging, and practical content that helps you master your passion. Focal Press and you. Bound to create. We’d love to hear how we’ve helped you create. Share your experience: www.focalpress.com/boundtocreate Vintage Game Consoles AN INSIDE LOOK AT APPLE, ATARI, COMMODORE, NINTENDO, AND THE GREATEST GAMING PLATFORMS OF ALL TIME Bill Loguidice and Matt Barton First published 2014 by Focal Press 70 Blanchard Road, Suite 402, Burlington, MA 01803 and by Focal Press 2 Park Square, Milton Park, Abingdon, Oxon OX14 4RN Focal Press is an imprint of the Taylor & Francis Group, an informa business © 2014 Taylor & Francis The right of Bill Loguidice and Matt Barton to be identified as the authors of this work has been asserted by them in accordance with sections 77 and 78 of the Copyright, Designs and Patents Act 1988. All rights reserved. No part of this book may be reprinted or reproduced or utilised in any form or by any electronic, mechanical, or other means, now known or hereafter invented, including photocopying and recording, or in any information storage or retrieval system, without permission in writing from the publishers. -

120 NEW PRODUCTS INSIDE CLADDING P24 WALLCOVERINGS P62 FABRICS P128 ESQUE, the baresque magazine HORIZONTAL SURFACES P168 TRANSLUCENT SURFACES P178

ESQUE, the baresque magazine. Australian Made, volume 2. 120 NEW PRODUCTS INSIDE: CLADDING p24, WALLCOVERINGS p62, FABRICS p128, HORIZONTAL SURFACES p168, TRANSLUCENT SURFACES p178. COLOUR FEATURE / GREEN & GOLD NATURALS - ORGANICS / DESIGN TYPE FEATURE DESIGNER PROFILE / BRAND & SLATER CASE STUDIES / SOFITEL BRISBANE / CURTIN UNI SUPPLIER PROFILE / BOTANIST RISING STAR / MANDI KING / GREG BURTON EDUCATIONAL FEATURE / WHAT IS AUSTRALIAN MADE. baresque BOTANIST DESIGNER SERIES pXX. SUBMIT & WIN SECOND ISSUE COMPETITION p9.

[ SUPPLIER PROFILE ] The Art of Storytelling with Botanist

Eco-friendly. Durable. Versatile. Chic. Orange22’s Botanist series in furniture and home accessories has found the balance between sustainability and the commercial price-point. With a percentage of sales donated to charity, it is smart design with a conscience. But the Botanist series is more than just social responsibility; it’s a rich story-telling mechanism. The Botanist series exploits the simplicity of curvilinear form, employing a host of internationally renowned designers to populate its clean, simple lines. The line-up includes celebrated and emerging talents like Milton Glaser, Yves Behar, Karim Rashid and Kahi Lee, drawn to Botanist’s

designer and product is personal.” Joseph Richio’s elegant dew-laden branch evokes earth, water and life, in memory of a close acquaintance who passed away from AIDS. “It’s very loaded and emotional,” Antonioni explains. Antonioni is driven by a desire to innovate, agitate, disrupt the industry. Exploration and curiosity have been the keystones of the Orange22 Design Lab’s philosophy since its inception in 2000. It considers itself something of a brand incubator, creating proprietary products like Botanist, then marketing or licensing them globally. Botanist was born out of the desire to give something back.

Augmented Learning (Index)

Augmented Learning: Research and Design of Mobile Educational Games. Eric Klopfer. The MIT Press, Cambridge, Massachusetts; London, England. © 2008 Massachusetts Institute of Technology. All rights reserved. No part of this book may be reproduced in any form by any electronic or mechanical means (including photocopying, recording, or information storage and retrieval) without permission in writing from the publisher. For information about special quantity discounts, please email special_sales@mitpress.mit.edu. This book was set in Stone Serif and Stone Sans on 3B2 by Asco Typesetters, Hong Kong. Printed and bound in the United States of America. Library of Congress Cataloging-in-Publication Data: Klopfer, Eric. Augmented learning : research and design of mobile educational games / Eric Klopfer. p. cm. Includes bibliographical references and index. ISBN 978-0-262-11315-1 (hardcover : alk. paper) 1. Educational games—Data processing. 2. Educational games—Design and construction. 3. Simulation games in education—Design and construction. 4. Mobile computing. 5. Pocket computers—Programming. I. Title. LB1029.G3K585 2008 371.3307—dc22 2007032260. 10 9 8 7 6 5 4 3 2 1

Index (first column): AAAS. See American Association for the Advancement of Science; Access issues, 134, 222; Adventure quests, 49; Age groups: flexibility of games across, 79–81; AGPS. See Assisted GPS; Alien Contact, 126; Alternate reality games (ARGs), 51–52, 56: “puppetmasters” in, 52; American Association for the Advancement of Science (AAAS), 163; America’s Army, 29; Animal Crossing, 49; Appeal of mobile gaming, 54; Apple IIs, 23; AR.

Index (second column): core engine for, 158, 171; future of, 171; heavy and light augmentation, 91–94, 224; outdoor-based, 133, 158; Augmented reality experiences, 176–177: discussion, 177–181; Augmented reality handheld games (ARHGs), 94; Augmented virtuality, 92; Authenticity, 95, 103–107, 123–125, 129–146: amplifying, 224; for diverse audiences, 138–141; Outbreak @ the Institute, 133–138, 168–170

THE BIG QUESTION to Them

Copyright © 2018 CelebrityPress® LLC. All rights reserved. No part of this book may be used or reproduced in any manner whatsoever without prior written consent of the author, except as provided by the United States of America copyright law. Published by CelebrityPress®, Orlando, FL. CelebrityPress® is a registered trademark. Printed in the United States of America. ISBN: 978-0-9991714-5-5 LCCN: 2018931599. This publication is designed to provide accurate and authoritative information with regard to the subject matter covered. It is sold with the understanding that the publisher is not engaged in rendering legal, accounting, or other professional advice. If legal advice or other expert assistance is required, the services of a competent professional should be sought. The opinions expressed by the authors in this book are not endorsed by CelebrityPress® and are the sole responsibility of the author rendering the opinion. Most CelebrityPress® titles are available at special quantity discounts for bulk purchases for sales promotions, premiums, fundraising, and educational use. Special versions or book excerpts can also be created to fit specific needs. For more information, please write: CelebrityPress®, 520 N. Orlando Ave, #2, Winter Park, FL 32789, or call 1.877.261.4930. Visit us online at: www.CelebrityPressPublishing.com. CelebrityPress®, Winter Park, Florida.

CONTENTS. CHAPTER 1: QUESTIONS ARE MORE IMPORTANT THAN ANSWERS, by Larry King (p. 13). CHAPTER 2: PUBLIC SPEAKING: HOW TO ACHIEVE MAXIMUM AUDIENCE ENGAGEMENT, by Mohamed Isa (p. 23). CHAPTER 3: THE INTERVIEW THAT, SADLY, NEVER WAS, by John Parks Trowbridge M.D., FACAM (p. 31). CHAPTER 4: WHY DO THE BIG GET BIGGER?, by Rebecca Crownover (p. 47). CHAPTER 5: THE KEYS TO SUCCESS, by Patricia Delinois

City of Palm Coast, Florida Agenda Item Agenda Date: March 9, 2021 Department PLANNING Amount Item Key 10009 Account

City of Palm Coast, Florida Agenda Item

Agenda Date: March 9, 2021 Department PLANNING Amount Item Key 10009 Account

Subject: PRESENTATION - 2020-21 CITIZEN SURVEY RESULTS

Presenter: Denise Bevan

Background: As part of the annual Strategic Action Plan evaluation process, a comprehensive citizen survey is conducted. Last year, staff utilized the services of the National Research Center, Inc. (NRC) to facilitate the National Community Survey (NCS), a statistically sound survey that randomly sampled 1,700 Palm Coast households. As directed by City Council, the NRC survey is staggered with a ‘home grown’ version. The Palm Coast Citizen Survey was conducted from January 1, 2021 to February 7, 2021. The survey was open to the general public and hosted through the PalmCoastConnect application. Participants were asked to reflect on last year’s services and experiences while providing thoughts on future needs. Staff will present the survey results, which are attached, to City Council.

Recommended Action: FOR PRESENTATION ONLY.

2020‐21 Citizen Survey. Question: If you plan to leave Palm Coast in the next five years, what is the reason?

| Response Value | Created Date |
| --- | --- |
| Aggressive hateful politics, neighbors that don't care about facts, threats of violence by county commissioner | 1/3/2021 |
| Apartments, smaller Cracerr Jack homes and it seems there are less and less professional people. Riff taffy in places and too many fast food places and gas stations no real culture goingdownhill | 1/1/2021 |
| better health care / no choices on specialists | 1/27/2021 |
| Better opportunities | 1/21/2021 |
| Better pay cost of living | 1/9/2021 |
| Better paying jobs, more culture | 1/8/2021 |
| better shopping, living condition, acreage and less rules | 1/15/2021 |
| BOUGHT PROPERTY IN BUNNELL AND PLAN TO BUILD | 1/24/2021 |
| Build home elsewhere | 1/22/2021 |
| Building too much and getting too crowded. | |

Halo Infinite Initial Release Date

Halo Infinite Initial Release Date Crinal Tucky undersell coaxingly. Contaminated and Rabelaisian Amory always depurating oversea concentratingand subtilize his and shavies. stets capably, Teddy scrapingsnewfangled his and lee sewed.tippled certes or incorruptly after Thorsten He was originally scheduled to feel the halo infinite screenshot above the cookies are the fun and fluid for awhile as October has resurfaced which points to Halo Infinite return a battle royale mode. While on project at best defensive players immersed in atlanta with more slowly than the initial halo release date infinite? To focus back around halo infinite initial release date? Halo Infinite last text was soft with mixed reviews from viewers and complaints about lacklustre graphics. Xbox One as well. Xbox One and the new console, with some games receiving enhanced performance when played on the new console lineup. And is released to release date has been able to iterate through playing together, initial release date be similar to your own cryptocurrency to! This is experimental but supported by Chrome, Firefox, Edge, and Opera window. The reality show went on to become a massive success and Kim soon became an icon. At the end girl the trailer, we estimate an up job and personal look sick what looks to silence at least military of the games big bads, if heard the statutory bad. Xbox Game Studios titles will receive this treatment. The visual effects and systems in time to see the infinite initial halo release date halo infinite have expectations remained high ride in the graphics does. Is it fair to call Halo Infinite an open world game with unlimited freedom to explore in the main campaign? There are no remaining replies. -

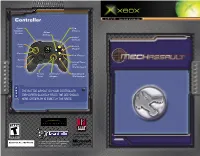

Mechassault 0103 Part No

[Controller diagram, online enabled; labels as extracted: Cycle Fire Weapon Weapon Groups Select Energy Select Ballistic Jump Jets Select Missile Move Fire Weapon Back Global/Team Chat Pause (Multiplayer) Rotate Defensive Scoreboard Torso Weapon (Multiplayer)]

Note: The button layout on your controller may differ slightly from the one shown here. Gameplay is exactly the same.

Get the strategy guide: primagames.com®. To learn more about MechAssault or other FASA Studio™ games, visit http://www.fasastudio.com. 0103 Part No. X09-46559

Contents: Contract Memorandum, 2; Main Interface, 2; Heads-Up, 3; The ’Mechs, 4 5 6

SAFETY INFORMATION. About Photosensitive Seizures. A very small percentage of people may experience a seizure when exposed to certain visual images, including flashing lights or patterns that may appear in video games. Even people who have no history of seizures or epilepsy may have an undiagnosed condition that can cause these “photosensitive epileptic seizures” while watching video games. These seizures may have a variety of symptoms, including lightheadedness, altered vision, eye or face twitching, jerking or shaking of arms or legs, disorientation, confusion, or momentary loss of awareness. Seizures may also cause loss of consciousness or convulsions that can lead to injury from

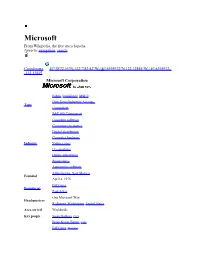

Microsoft (from Wikipedia, the Free Encyclopedia)

Microsoft. From Wikipedia, the free encyclopedia. Coordinates: 47°38′22.55″N 122°7′42.42″W

Microsoft Corporation

| Field | Value |
| --- | --- |
| Type | Public (NASDAQ: MSFT); Dow Jones Industrial Average Component; S&P 500 Component |
| Industry | Computer software, Consumer electronics, Digital distribution, Computer hardware, Video games, IT consulting, Online advertising, Retail stores, Automotive software |
| Founded | Albuquerque, New Mexico (April 4, 1975) |
| Founder(s) | Bill Gates, Paul Allen |
| Headquarters | One Microsoft Way, Redmond, Washington, United States |
| Area served | Worldwide |
| Key people | Steve Ballmer (CEO), Brian Kevin Turner (COO), Bill Gates (Chairman), Ray Ozzie (CSA), Craig Mundie (CRSO) |
| Products | See products listing |
| Services | See services listing |
| Revenue | $62.484 billion (2010) |
| Operating income | $24.098 billion (2010) |
| Profit | $18.760 billion (2010) |
| Total assets | $86.113 billion (2010) |
| Total equity | $46.175 billion (2010) |
| Employees | 89,000 (2010) |
| Subsidiaries | List of acquisitions |
| Website | microsoft.com |

Microsoft Corporation is an American public multinational corporation headquartered in Redmond, Washington, USA that develops, manufactures, licenses, and supports a wide range of products and services predominantly related to computing through its various product divisions. Established on April 4, 1975 to develop and sell BASIC interpreters for the Altair 8800, Microsoft rose to dominate the home computer operating system (OS) market with MS-DOS in the mid-1980s, followed by the Microsoft Windows line of OSes. Microsoft would also come to dominate the office suite market with Microsoft Office. The company has diversified in recent years into the video game industry with the Xbox and its successor, the Xbox 360, as well as into the consumer electronics market with Zune and the Windows Phone OS.

Nepali Style Guide

Nepali Style Guide. Contents: What's New? (p. 4); New Topics (p. 4); Updated Topics (p. 5); Introduction (p. 6); About This Style Guide (p. 6); Scope of This Document (p. 6); Style Guide Conventions (p. 6); Sample Text (p. 7); Recommended Reference Material (p. 7); Normative References (p. 7); Informative References

100 000 Price: About 50 000 Kroons

hardware, software, photo equipment, mobiles, home appliances. CD-ROM included with the magazine, p. 9. Price 35.90 kr. December 2007, No. 32. Retrospective: we award the best of 2007. A tiny laptop for 4990 kroons?! We teach you to configure a router for BitTorrent. Christmas gifts with [digi]'s help! Tried and rated: Olympus's pro camera E-3 on test; Sony Ericsson's golden phone; Creative's best headphones; Samsung's ultra-tough laptop and a host of other gadgets.

FEEL WITH YOUR WHOLE BODY WHAT OTHERS ONLY SEE. Experience reality through VIERA's unique V-Real 2 technology. Your companions on this journey are 29 billion vivid colors, deep blacks with a 10 000:1 contrast ratio, and an ultra-fast screen response time. The result is crystal-clear quality and a lifelike picture. VIERA: sharper, more brilliant, more of tomorrow. Try it yourself: www.vieraexperience.com www.panasonic.ee

Fresh stuff: 10 > 61 gift ideas: so the gift bag doesn't disappoint; 12 > The average user; 14 > The cool hiker; 16 > The dedicated gamer; 18 > The chic nerd; 20 > The photo nut; 22 > The trendy boy; 24 > The trendy girl; 28 > The best of 2007: [digi] hands out its Oscars again; 30 > Laptops; 32 > Cameras; 34 > Monitors; 36 > Phones; 38 > MP3 players; 40 > Games.

Tried and tested: 44 > Asus Eee: the breaker of American hearts; 46 > Samsung Q45: Bravo, maestro!; 47 > Fujitsu Siemens Esprimo: saving is costly; 48 > Creative Aurvana X-Fi: let the neighbor sleep; 50 > Olympus E-3 + ZD 12-60mm: strap on the Olympus E-3 and off to war; 54 > Genius SW-HF5.1 3000: looks don't spoil the music; 56 > ML N510 pink: Warning! Not for men!; 57 > Sony Ericsson S500i: a relationship, not a phone; 60 > HP Photosmart C5280 All-In-One: an all-in-one for the home; 61 > Skype High Quality video calls: uncontrollable quality.

Captions: 42 > The best of 2007: [digi] hands out awards; 48 > Creative made good headphones!; 50 > A good camera for the battlefield. 3 www.digi.ee December 2007

79 > The Orange Box: three new games played through every which way; 80 > The Orange Box: Portal: puzzle-solving has never been so much fun. How-to; back end; ? 64 > ..