Aerospace Engineering Handbook Chapter 2(V): Flight Test Engineering

Recommended publications

Validation of Wind Tunnel Test and CFD Techniques for Retro-Propulsion (RETPRO): Overview on a Project Within the Future Launchers Preparatory Programme (FLPP)

VALIDATION OF WIND TUNNEL TEST AND CFD TECHNIQUES FOR RETRO-PROPULSION (RETPRO): OVERVIEW ON A PROJECT WITHIN THE FUTURE LAUNCHERS PREPARATORY PROGRAMME (FLPP). D. Kirchheck, A. Marwege, J. Klevanski, J. Riehmer, A. Gülhan (German Aerospace Center (DLR), Supersonic and Hypersonic Technologies Department, Cologne, Germany); S. Karl (German Aerospace Center (DLR), Spacecraft Department, Göttingen, Germany); O. Gloth (enGits GmbH, Todtnau, Germany). ABSTRACT: The RETPRO project is a 2-year activity, led by the German Aerospace Center (DLR) in the frame of ESA's Future Launchers Preparatory Program (FLPP), to close the gap of knowledge on aerodynamics and aero-thermodynamics of retro-propulsion assisted landings for future concepts in Europe. The paper gives an overview of the goals, strategy, and current status of the project, aiming for the validation of innovative WTT and CFD tools for retro-propulsion applications. Index Terms: RETPRO, retro-propulsion, launcher aero-thermodynamics, wind tunnel testing, CFD validation. 1. INTRODUCTION (excerpt): ... and landing (VTVL) spacecraft, assisted by retro-propulsion. Up to now, in Europe, knowledge and expertise in that field, though constantly growing, is still limited. Systematic studies were conducted to compare concepts for possible future European launchers [2, 3], and activities on detailed investigations of system components of VTVL re-usable launch vehicles (RLV) recently started in the RETALT project [4, 5]. Nevertheless, validated knowledge on the aerodynamic and aerothermal characteristics of such vehicles is still limited to a small number of experimental and numerical investigations, mostly on lower-altitude VTVL trajectories, e.g. within the CALLISTO project [6, 7, 8]. Other studies were conducted to analyze the aerothermodynamics of a simplified generic Falcon 9 geometry during its re-entry and landing -

Reusable Launch Vehicle Technology Program

Acta Astronautica Vol. 41, No. 11, pp. 777-790, 1997. © 1998 Published by Elsevier Science Ltd. All rights reserved. Printed in Great Britain. PII: S0094-5765(97)00197-5. 0094-5765/98 $19.00 + 0.00. REUSABLE LAUNCH VEHICLE TECHNOLOGY PROGRAM. DELMA C. FREEMAN JR. and THEODORE A. TALAY, NASA Langley Research Center, Hampton, Virginia 23681-0001, USA; R. EUGENE AUSTIN, NASA Marshall Space Flight Center, Marshall Space Flight Center, Alabama 35812-1000, USA (Received 25 April 1997). Abstract: Industry/NASA reusable launch vehicle (RLV) technology program efforts are underway to design, test, and develop technologies and concepts for viable commercial launch systems that also satisfy national needs at acceptable recurring costs. Significant progress has been made in understanding the technical challenges of fully reusable launch systems and the accompanying management and operational approaches for achieving a low-cost program. This paper reviews the current status of the RLV technology program including the DC-XA, X-33 and X-34 flight systems and associated technology programs. It addresses the specific technologies being tested that address the technical and operability challenges of reusable launch systems including reusable cryogenic propellant tanks, composite structures, thermal protection systems, improved propulsion, and subsystem operability enhancements. The recently concluded DC-XA test program demonstrated some of these technologies in ground and flight tests. Contracts were awarded recently for both the X-33 and X-34 flight demonstrator systems. The Orbital Sciences Corporation X-34 flight test vehicle will demonstrate an air-launched reusable vehicle capable of flight to speeds of Mach 8. -

A Review of Current Research in Subscale Flight Testing and Analysis of Its Main Practical Challenges

Aerospace, Article. A Review of Current Research in Subscale Flight Testing and Analysis of Its Main Practical Challenges. Alejandro Sobron *, David Lundström and Petter Krus. Department of Management and Engineering, Division of Fluid and Mechatronic Systems, Linköping University, SE-58183 Linköping, Sweden; [email protected] (D.L.); [email protected] (P.K.). * Correspondence: [email protected]; Tel.: +46-1328-1893. Abstract: Testing of untethered subscale models, often referred to as subscale flight testing, has traditionally had a relatively minor, yet relevant use in aeronautical research and development. As recent advances in electronics, rapid prototyping and unmanned-vehicle technologies expand its capabilities and lower its cost, this experimental method is seeing growing interest across academia and the industry. However, subscale models cannot meet all similarity conditions required for simulating full-scale flight. This leads to a variety of approaches to scaling and to other alternative applications. Through a literature review and analysis of different scaling strategies, this study presents an overall picture of how subscale flight testing has been used in recent years and synthesises its main issues and practical limitations. Results show that, while the estimation of full-scale characteristics is still an interesting application within certain flight conditions, subscale models are progressively taking a broader role as low-cost technology-testing platforms with relaxed similarity constraints. Different approaches to tackle the identified practical challenges, implemented both by the authors and by other organisations, are discussed and evaluated through flight experiments. Keywords: subscale flight testing; similarity; scale model; remotely piloted aircraft; demonstration. Citation: Sobron, A.; Lundström, D.; Krus, P. -

Part 10: Test and Evaluation

10. Test and Evaluation. 10.1 Approach. Architecture Design, Development, Test, and Evaluation (DDT&E) schedule, costs, and risk are highly dependent on the integrated test and evaluation approach for each of the major elements. As a part of the Exploration Systems Architecture Study (ESAS), a top-level test and evaluation plan, including individual flight test objectives, was developed and is summarized in this section. The test and evaluation plan described here is derived from the Apollo Flight Test Program of the 1960s. A more detailed test and evaluation plan will be based on detailed verification requirements and objectives documented in specifications and verification plans. In order to support schedule, cost, and risk assessments for the reference ESAS architecture, an integrated test and evaluation plan was developed to identify the number and type of major test articles (flight and ground) and the timing and objectives of each major flight test, including facilities and equipment required to support those tests. This initial plan is based on the Apollo Program and the ESAS Ground Rules and Assumptions (GR&As), including the human-rating requirements from NASA Procedural Requirements (NPR) 8705.2A, Human-Rating Requirements for Space Systems. 10.2 Ground Rules and Assumptions. ESAS GR&As establish the initial set of key constraints to testing. Although all ESAS GR&As are considered, the specific ones listed below are particularly significant, as they deal with schedule and testing/qualification assumptions. • The crew launch system shall facilitate crew survival using abort and escape. There will be three all-up tests of the Launch Abort System (LAS). -

Flight Engineer Knowledge Test Guide

AC 63-1 FLIGHT ENGINEER KNOWLEDGE TEST GUIDE, 1995. U.S. Department of Transportation, Federal Aviation Administration, Flight Standards Service. PREFACE: The Flight Standards Service of the Federal Aviation Administration (FAA) has developed this guide to help applicants meet the knowledge requirements for the computer administered tests for flight engineer turbojet, turboprop, and reciprocating class certification. This guide contains information about the knowledge test eligibility requirements, test descriptions, testing and retesting procedures, and sample test questions with answers. As a convenience to the applicant, the eligibility requirements for the oral and flight tests are included. Appendix 1 provides a list of reference materials and subject matter knowledge codes, and computer testing designees. Changes to the subject matter knowledge code list will be published as a separate advisory circular. The sample questions and answers in this guide are predicated on Federal Aviation Regulations (FARs) and references that were current at the time of publication. Questions and answers in the computer administered knowledge tests are updated when changes are made to these reference materials. The flight engineer test question bank and subject matter knowledge code list for all airmen certificates and ratings, with changes, may be obtained by computer access from FedWorld at (703) 321-3339. This bulletin board service is provided by the U.S. Department of Commerce, 24 hours a day, 7 days per week. For technical assistance regarding computer requirements for this service, contact the FedWorld help desk at (703) 487-4608 from 7:30 a.m. -

UAS Flight Test for Safety and for Efficiency

UAS FLIGHT TEST FOR SAFETY AND FOR EFFICIENCY. Seamus M. McGovern, U.S. DOT National Transportation Systems Center, Cambridge, Massachusetts. Abstract: Manned aircraft that operate in the National Airspace System (NAS) typically undergo certification flight test to ensure they meet a prescribed level of safety, dependent on their category, before they are able to enter service [for example, Federal Aviation Administration (FAA) advisory circular (AC) 25-7C is the flight-test guide for certification of transport-category airplanes]. With the integration of unmanned aircraft systems (UAS) into the NAS, in the future some type of certification flight test may ultimately be required; however, even lacking such a requirement, UAS manufacturers can find value in flight testing UASs using familiar experimental and certification flight-test procedures, the results of which can enhance the safety of the design, the safety of the operation, and/or the efficiency of the operation. Body text (excerpt): ... (e.g., SAE International technical standards and recommended practices) but also takes advantage of competitive racing in order to evaluate new technologies and materials (as it turns out, this may have applicability to UASs as well with the advent of several organizations and sanctioning bodies including the European Rotor Sports Association and the Drone Racing League). Other aviation-related tests and test formats not discussed here include the typically Department of Defense-focused developmental test and evaluation; operational test and evaluation; and research, development, test, and evaluation structures. In terms of flight test, the military services have their own criteria for evaluating their various aircraft. These requirements are often bound by contractual agreements between the service and the vendor, -

General Flight Test Theory Applied to Aircraft Modifications

TUTORIAL. GENERAL FLIGHT TEST THEORY APPLIED TO AIRCRAFT MODIFICATIONS. Lt Col Lionel D. Alford, Jr., USAF and Robert C. Knarr. Any external aircraft modification has potentially far-reaching effects on the capability of the aircraft to succeed or fail in its mission. The authors take a systematic look at the effects that small changes can have upon the whole, with a series of examples that demonstrate why careful review of data or testing is often vital in the assessment of system modifications. A new design aircraft program always includes an instrumented test to validate the analyses. But a modification program may rely instead on previously collected data for model validation. Such a program must adequately address the effects of the modification on the aircraft and its mission. The user must judge these effects for their desirability, especially when they degrade mission capability. But, to be judged, they must be fully understood. Reviewing historical data or conducting a test are two ways to validate the data by which these effects on aircraft capability are judged. Body text (excerpt): ... external aircraft modification. The aircraft design problems covered here represent the fundamental characteristics by which aircraft capability is judged. These design problems, when not properly analyzed and tested (if required), have historically resulted in significant degradation of airworthiness. We define the subject area and explain the importance of each problem by discussing the rationale behind standard design practices and airworthiness and operational considerations for the fleet aircraft. Concrete examples illustrate each case. Although only effects to the C-130 -

The ParaShield Entry Vehicle Concept: Basic Theory and Flight Test

The ParaShield Entry Vehicle Concept: Basic Theory and Flight Test Development. David L. Akin. Abstract: With the emergence of microsatellite launch vehicle technology and the development of interest in space commercialization, there is a renewed need for entry vehicle technology to return mass from low earth orbit. This paper documents the ParaShield concept of the Space Systems Laboratory, which is an ultra-low ballistic coefficient (ULβ) entry vehicle. Trajectory simulations show that, as the ballistic coefficient is lowered into the range of 100-150 Pa (2-3 lb/ft²), the total heat load and peak heating flux drop markedly, due to primary deceleration in regions of extremely low dynamic pressure. In this range, any of a number of ceramic or glass-based fabrics can withstand the entry dynamic pressures and heat loads. Incorporating an offset of the center of gravity from the symmetrical axis of the shield allows L/D, and thus peak deceleration loads, to be controlled. By using a titanium support truss and deployment mechanism, a very large heat shield can be deployed from an entry capsule prior to deorbit; since the shield survives entry, the same rib-braced fabric structure results in aerodynamic deceleration to a nominal landing velocity of 10-15 m/sec. Thus, the same structure that provides heating protection for hypersonic entry is also the terminal decelerator in the subsonic regime, and either water splashdown or a mechanical decelerator is used for landing impact attenuation. Since the same structure acts as both the heat shield and the landing parachute, the term "ParaShield" has been adopted to describe this concept. Results presented show the application of the ParaShield concept to a variety of entry capsules. -
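
The ParaShield abstract quotes its ballistic-coefficient range in two unit systems, 100-150 Pa and roughly 2-3 lb/ft². The short Python sketch below checks that conversion. It also shows one common way a ballistic coefficient with pressure units can be computed, as weight divided by drag coefficient times reference area. That weight-based definition and the function names are assumptions made for illustration; the excerpt itself gives only the units.

```python
# Illustrative sketch only: names and the weight-based definition are assumptions,
# not taken from the ParaShield paper.

# Standard conversion factor: one pound-force per square foot, in pascals.
PA_PER_PSF = 47.880259


def pa_to_psf(value_pa: float) -> float:
    """Convert a pressure-like quantity from Pa to lbf/ft^2."""
    return value_pa / PA_PER_PSF


def ballistic_coefficient_pa(mass_kg: float, drag_coefficient: float,
                             area_m2: float, g: float = 9.80665) -> float:
    """Weight-based ballistic coefficient W / (C_D * A), in Pa (assumed definition)."""
    return mass_kg * g / (drag_coefficient * area_m2)


if __name__ == "__main__":
    # Convert the two ends of the range quoted in the abstract.
    for beta_pa in (100.0, 150.0):
        print(f"{beta_pa:.0f} Pa = {pa_to_psf(beta_pa):.2f} lbf/ft^2")
```

Under these assumptions the quoted 100-150 Pa range converts to about 2.1-3.1 lbf/ft², consistent with the rounded "2-3 lb/ft²" in the abstract.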

Airplane Pitch Response to Rapid Configuration Change: Flight Test and Safety Assessment

Available online at http://docs.lib.purdue.edu/jate. Journal of Aviation Technology and Engineering 9:2 (2020) 45-56. Airplane Pitch Response to Rapid Configuration Change: Flight Test and Safety Assessment. Ralph D. Kimberlin, Markus Wilde, Brian A. Kish, and Isaac Silver, Florida Institute of Technology, Melbourne, FL 32901, USA. Abstract: This paper examines airplane response to rapid flap extension on seven general aviation airplanes. The scenario involves a pilot flying in the traffic pattern becoming distracted, abruptly extending flaps while looking outside the airplane, and failing to notice airspeed and pitch-attitude changes. The airplanes tested reached pitch forces of up to 36 lbf, meeting FAA requirements but exceeding the capability of 55% of the population. Flight data showed a pitch-up to more than 30° in 5 s after flap extension, causing airspeed to drop below stall speed for four of the airplanes. At traffic pattern altitudes, stalling an airplane can be fatal. The NTSB lists over 1000 accidents caused by loss of control in the traffic pattern between 1982 and 2017. As general aviation airplanes do not carry flight data recorders, it is unknown how many of those accidents may have involved stalls caused by uncommanded response after flap extension. From the data gathered in flight, it seems possible some were. To improve safety, flight training should prepare students to anticipate rapid pitch changes during flap extension and retraction. In addition, airplane developers could interconnect flaps with the elevator, reduce horizontal tail size, or use a T-tail. The FAA should consider reducing the maximum pitch stick and wheel forces in 14 CFR 23.143 to 20 lbf or less. -

Commercial Crew

Table of Contents: What is Commercial Crew?; Biographies; Crew; NASA; SpaceX; Astronaut Training; Commercial Crew Program Timeline; National Investment; SpaceX Missions; Demo-2; Crew-1; SpaceX Operations; Crew Dragon; Falcon 9; SpaceX Spacesuit; Launch Complex 39A; Ascent; Retrieving Crew Dragon; Safety and Innovation; Media Contacts; Multimedia; STEM Engagement. Working side-by-side with our two partners: What is Commercial Crew? NASA's Commercial Crew Program is working with the American aerospace industry as companies develop and operate a new generation of spacecraft and launch systems capable of carrying crews to low-Earth orbit and the International Space Station. Commercial transportation to and from the station will provide expanded utility, additional research time and broader opportunities for discovery on the orbiting laboratory. The station is a critical testbed for NASA to understand and overcome the challenges of long-duration spaceflight. As commercial companies focus on providing human transportation services to and from low-Earth orbit, NASA is freed up to focus on building spacecraft and rockets for deep space missions. How is the Commercial Crew Program Different? The Commercial Crew Program represents a revolutionary approach to government and commercial collaborations for the advancement of space exploration. NASA's Prior Approach for Obtaining Crew Transportation Systems: Since the Mercury program in the early 1960s, NASA has used an almost identical operating model to achieve its goals of human spaceflight. This includes the Space Shuttle Program and the American portions of the International Space Station. -

Concepts of Operations for a Reusable Launch Vehicle

Concepts of Operations for a Reusable Launch Vehicle. MICHAEL A. RAMPINO, Major, USAF, School of Advanced Airpower Studies. THESIS PRESENTED TO THE FACULTY OF THE SCHOOL OF ADVANCED AIRPOWER STUDIES, MAXWELL AIR FORCE BASE, ALABAMA, FOR COMPLETION OF GRADUATION REQUIREMENTS, ACADEMIC YEAR 1995-96. Air University Press, Maxwell Air Force Base, Alabama, September 1997. Disclaimer: Opinions, conclusions, and recommendations expressed or implied within are solely those of the author(s), and do not necessarily represent the views of Air University, the United States Air Force, the Department of Defense, or any other US government agency. Cleared for public release: Distribution unlimited. Contents: Disclaimer; Abstract; About the Author; Acknowledgments; Chapter 1, Introduction; Chapter 2, RLV Concepts and Attributes; Chapter 3, Concepts of Operations; Chapter 4, Analysis; Chapter 5, Conclusions; Bibliography. Illustrations: Figure 1, Current RLV Concepts; Figure 2, Cumulative 2,000-Pound Weapons Delivery within Three Days. Tables: Table 1, Attributes of Proposed RLV Concepts and One Popular TAV Concept; Table 2, Summary of Attributes of a Notional RLV; Table 3, CONOPS A and B RLV Capabilities; Table 4, Cumulative 2,000-Pound Weapons Delivery within Three Days; Table 5, Summary of Analysis. Abstract: The United States is embarked on a journey toward maturity as a spacefaring nation. One key step along the way is development of a reusable launch vehicle (RLV). The most recent National Space Transportation Policy (August 1994) assigned improvement and evolution of current expendable launch vehicles to the Department of Defense, while the National Aeronautics and Space Administration (NASA) is responsible for working with industry on demonstrating RLV technology. -

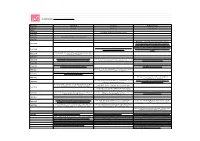

Billionaire Space Race DATA

Source: RS Components, https://uk.rs-online.com/web/
Billionaires and companies: Elon Musk (SpaceX), Jeff Bezos (Blue Origin), Richard Branson (Virgin Galactic)

Year 2000: Sept 2000: Jeff Bezos founds Blue Origin.
Year 2001: (no entry)
Year 2002: May 2002: Elon Musk founds SpaceX.
Year 2003: (no entry)
Year 2004: Early 2004: Richard Branson founds Virgin Galactic. October 2004: SpaceShipOne takes three people to space over two weeks, winning a $10m Ansari X Prize.
Year 2005: March 2005: Blue Origin's first flight test vehicle, Charon, makes its first and only test flight. August 2005: Virgin Galactic starts accepting refundable deposits from customers, raising $10m in a matter of weeks.
Year 2006: March 2006: The first Falcon 1 launch fails a minute into ascent due to a fuel leak.
Year 2007: (no entry)
Year 2008: Sept 2008: The Falcon 1 becomes the first privately developed liquid-fuel rocket to enter Earth's orbit. 2008: Blue Origin reveal plans for the 'New Shepard', stating it will fly unmanned in 2011, and manned in 2012. December 2008: Launch vehicle VMS (Virgin Mother Ship) Eve has a successful test flight.
Year 2009: (no entry)
Year 2010: March 2010: VSS (Virgin Space Ship) Enterprise makes its first free flight, lasting 6 hours. December 2010: SpaceX becomes the first private company to launch, orbit and recover a spacecraft.
Year 2011: April 2011: Blue Origin receive a commitment from NASA for $22m under the CCDev phase 2 programme.
Year 2012: 25/05/2012: SpaceX is the first private company to send and dock a spacecraft at the I.S.S.
Year 2013: April 2013: The first rocket-powered test flight of SpaceShipTwo