Baseband Demodulation/Detection



Recommended publications


3 Characterization of Communication Signals and Systems

Schober: Signal Detection and Estimation

3.1 Representation of Bandpass Signals and Systems

Narrowband communication signals are often transmitted using some type of carrier modulation. The resulting transmit signal $s(t)$ has passband character, i.e., the bandwidth $B$ of its spectrum $S(f) = \mathcal{F}\{s(t)\}$ is much smaller than the carrier frequency $f_c$.

[Figure: spectrum $S(f)$ with bands of width $B$ centered at $-f_c$ and $f_c$]

We are interested in a representation for $s(t)$ that is independent of the carrier frequency $f_c$. This will lead us to the so-called equivalent (complex) baseband representation of signals and systems.

3.1.1 Equivalent Complex Baseband Representation of Bandpass Signals

Given: Real-valued bandpass signal $s(t)$ with spectrum $S(f) = \mathcal{F}\{s(t)\}$

Analytic Signal $s_+(t)$

In our quest to find the equivalent baseband representation of $s(t)$, we first suppress all negative frequencies in $S(f)$, since $S(f) = S^*(-f)$ is valid. The spectrum $S_+(f)$ of the resulting so-called analytic signal $s_+(t)$ is defined as

$$S_+(f) = \mathcal{F}\{s_+(t)\} = 2\,u(f)\,S(f),$$

where $u(f)$ is the unit step function

$$u(f) = \begin{cases} 0, & f < 0 \\ 1/2, & f = 0 \\ 1, & f > 0. \end{cases}$$

[Figure: unit step function $u(f)$]

The analytic signal can be expressed as

$$s_+(t) = \mathcal{F}^{-1}\{S_+(f)\} = \mathcal{F}^{-1}\{2\,u(f)\,S(f)\} = \mathcal{F}^{-1}\{2\,u(f)\} * \mathcal{F}^{-1}\{S(f)\}.$$

The inverse Fourier transform of $2\,u(f)$ is given by $\mathcal{F}^{-1}\{2\,u(f)\} = \delta(t) + j\,\ldots$
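The definition $S_+(f) = 2u(f)S(f)$ can be tried out numerically. The following is a minimal discrete-time sketch, not taken from the excerpt: it assumes an even-length sampled signal, uses a plain DFT in place of the continuous Fourier transform, and treats the Nyquist bin like $f = 0$ (weight 1). The names `dft` and `analytic_signal` are illustrative.

```python
import cmath
import math

def dft(x, inverse=False):
    """Plain O(N^2) DFT; the inverse includes the 1/N factor."""
    n = len(x)
    sign = 1 if inverse else -1
    out = [sum(x[k] * cmath.exp(sign * 2j * cmath.pi * m * k / n) for k in range(n))
           for m in range(n)]
    return [v / n for v in out] if inverse else out

def analytic_signal(x):
    """Apply the weight 2*u(f) to the spectrum of x (len(x) assumed even)."""
    n = len(x)
    spectrum = dft(x)
    for m in range(n):
        if m == 0 or m == n // 2:
            weight = 1.0   # 2*u(f) with u = 1/2 at f = 0; Nyquist bin treated alike
        elif m < n // 2:
            weight = 2.0   # positive frequencies
        else:
            weight = 0.0   # negative frequencies are suppressed
        spectrum[m] *= weight
    return dft(spectrum, inverse=True)

# A real cosine with 4 cycles in 64 samples.
N, CYCLES = 64, 4
x = [math.cos(2 * math.pi * CYCLES * i / N) for i in range(N)]
s_plus = analytic_signal(x)

# Its analytic signal should be the complex exponential at the same frequency.
expected = [cmath.exp(2j * math.pi * CYCLES * i / N) for i in range(N)]
max_err = max(abs(a - b) for a, b in zip(s_plus, expected))
```

For this test tone, the real part of `s_plus` reproduces the original cosine, and only the positive-frequency component remains.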

7.3.7 Video Cassette Recorders (VCR) 7.3.8 Video Disk Recorders

7.3.5 Fiber-Optic Cables (FO)
7.3.6 Telephone Company Lines (TELCO)
7.3.7 Video Cassette Recorders (VCR)
7.3.8 Video Disk Recorders
7.4 Transmission Security
8. Consumer Equipment Issues
8.1 Complexity of Receivers
8.2 Receiver Input/Output Characteristics
8.2.1 RF Interface
8.2.2 Baseband Video Interface
8.2.3 Baseband Audio Interface
8.2.4 Interfacing with Ancillary Signals
8.2.5 Receiver Antenna Systems Requirements
8.3 Compatibility with Existing NTSC Consumer Equipment
8.3.1 RF Compatibility
8.3.2 Baseband Video Compatibility
8.3.3 Baseband Audio Compatibility
8.3.4 IDTV Receiver Compatibility
8.4 Allows Multi-Standard Display Devices
9. Other Considerations
9.1 Practicality of Near-Term Technological Implementation
9.2 Long-Term Viability/Rate of Obsolescence
9.3 Upgradability/Extendability
9.4 Studio/Plant Compatibility

Section B: EXPLANATORY NOTES OF ATTRIBUTES/SYSTEMS MATRIX

Items on the Attributes/System Matrix for which no explanatory note is provided were deemed to be self-explanatory.

I. General Description (Proponent)

Section I shall be used by a system proponent to define the features of the system being proposed. The features shall be defined and organized under the headings of the following subsections 1 through 4. Section I, General Description (Proponent), shall consist of a description of the proponent system in narrative form, which covers all of the features and characteristics of the system which the proponent wishes to be included in the public record, and which will be used by various groups to analyze and understand the system proposed, and to compare it with other proposed systems.

Baseband Harmonic Distortions in Single Sideband Transmitter and Receiver System

Kang Hsia

Abstract: The telecommunications industry has widely adopted single sideband (SSB, or complex quadrature) transmitter and receiver systems, and one popular SSB implementation achieves image rejection through quadrature component image cancellation. Typically, during SSB system characterization, the baseband fundamental tone and the harmonic distortion products are important parameters besides image and LO feedthrough leakage. To ensure accurate characterization, the actual frequency locations of the harmonic distortion products are critical. While system designers may be tempted to assume that the harmonic distortion products are simply up-converted in the same fashion as the baseband fundamental frequency component, the actual distortion products may behave differently and show up on the other side of the spectrum. This paper discusses the theory of the SSB system and the actual location of the baseband harmonic distortion products.

Introduction

Communications engineers have used SSB transmitter and receiver systems because they offer better bandwidth utilization than double sideband (DSB) transmitter systems. The primary cause of bandwidth overhead in a DSB system is the image component produced during the mixing process. Given a data transmission bandwidth of B, an SSB system requires a minimum bandwidth of B, whereas a DSB system requires a minimum bandwidth of 2B. While filtering out the image component is one type of SSB implementation, another type creates a quadrature component of the signal and ideally cancels the image through phase cancellation.

[Figure 1: the baseband message signal (BB) M(t) is multiplied by the local oscillator (LO) signal COS(2πFct) to give the modulated signal (RF) M(t) COS(2πFct)]
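The difference between plain mixing and quadrature image cancellation can be illustrated with a single test tone. This is a minimal sketch under assumed values (a 10 Hz message tone, a 100 Hz LO, and a 1 kHz sample rate); `tone_amplitude` is an illustrative helper, not something defined in the paper.

```python
import math

def tone_amplitude(x, freq, fs):
    """Amplitude of the sinusoidal component of x at `freq` (single-bin DFT)."""
    n = len(x)
    re = sum(v * math.cos(2 * math.pi * freq * i / fs) for i, v in enumerate(x))
    im = sum(v * math.sin(2 * math.pi * freq * i / fs) for i, v in enumerate(x))
    return 2 * math.hypot(re, im) / n

FS, N = 1000.0, 1000          # assumed: 1 s of samples, so every tone fits whole cycles
FC, FM = 100.0, 10.0          # assumed LO and message-tone frequencies in Hz
t = [i / FS for i in range(N)]

# DSB: message times LO. Energy appears at both FC + FM and FC - FM.
dsb = [math.cos(2 * math.pi * FM * ti) * math.cos(2 * math.pi * FC * ti) for ti in t]

# SSB by phasing: add a quadrature path (message and LO both shifted by 90 degrees).
# cos(a)cos(b) - sin(a)sin(b) = cos(a + b), so the lower sideband cancels.
ssb = [math.cos(2 * math.pi * FM * ti) * math.cos(2 * math.pi * FC * ti)
       - math.sin(2 * math.pi * FM * ti) * math.sin(2 * math.pi * FC * ti) for ti in t]

dsb_upper = tone_amplitude(dsb, FC + FM, FS)
dsb_lower = tone_amplitude(dsb, FC - FM, FS)
ssb_upper = tone_amplitude(ssb, FC + FM, FS)
ssb_lower = tone_amplitude(ssb, FC - FM, FS)
```

In this idealized case the DSB output splits equally between the two sidebands, while the quadrature combination leaves only the upper sideband. A real system would see finite image rejection because of gain and phase mismatch between the two paths.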

Baseband Video Testing with Digital Phosphor Oscilloscopes

Application Note

Video signals are complex waveforms comprised of signals representing a picture as well as the timing information needed to display the picture. To capture and measure these complex signals, you need powerful instruments tailored for this application. But, because of the variety of video standards, you also need a general-purpose instrument that can provide accurate information quickly and easily. Finally, to display all of the video waveform details, a fast acquisition technology teamed with an intensity-graded display gives the confidence and insight needed to detect and diagnose problems with the signal.

This application note demonstrates the use of a Tektronix TDS 700D-series Digital Phosphor Oscilloscope to make a variety of common baseband video measurements and examines some of the critical measurement issues.

Copyright © 1998 Tektronix, Inc. All rights reserved.

Video Basics

Video signals come from a number of sources, including cameras, scanners, and graphics terminals. Typically, the baseband video signal begins as three component analog or digital signals representing the three primary color elements: the Red, Green, and Blue (RGB) component signals. ... for SMPTE systems, etc. The three derived component signals can then be distributed for processing.

Processing

In the processing stage, video component signals may be combined to form a single composite video signal (as in NTSC or PAL systems), ... active portion of the video signal. Finally, the synchronization information is added. Although complex, this composite signal is a single signal that can be carried on a single coaxial cable.

Component Video Signals. Component signals have an advantage of simplicity in ...

Digital Baseband Modulation Outline • Later Baseband & Bandpass Waveforms Baseband & Bandpass Waveforms, Modulation

A Communication System

Digital Baseband Modulators (Line Coders)
• Sequences of bits are modulated into waveforms before transmission
• → A digital transmission system consists of: [figure]
• The modulator is based on: [figure]
• The symbol mapper takes bits and converts them into symbols ($a_n$); this is done based on a given table
• The pulse shaping filter generates the Gaussian pulse or waveform ready to be transmitted (baseband signal)

[Figure: waveform, sampled at T]

Pulse Amplitude Modulation (PAM)
• Example: Binary PAM
• Example: Quaternary PAM

PAM Randomness
• Since the amplitude level is uniquely determined by the $k$ bits of random data it represents, the pulse amplitude during the $n$th symbol interval ($a_n$) is a discrete random variable
• $s(t)$ is a random process because the pulse amplitudes $\{a_n\}$ are discrete random variables assuming values from the set $A_M$
• The bit period $T_b$ is the time required to send a single data bit
• $R_b = 1/T_b$ is the equivalent bit rate of the system

PAM: $T$ = symbol period, $D$ = symbol or pulse rate

Example
• Pulse amplitude modulation
• If binary signaling is used and the pulse rate is 9600, find the bit rate: $M = 2 \rightarrow k = 1 \rightarrow$ bit rate $R_b = 1/T_b = k \cdot D = 9600$
• If quaternary signaling is used and the pulse rate is 9600, find the bit rate: $M = 4 \rightarrow k = 2 \rightarrow$ bit rate $R_b = 1/T_b = k \cdot D = 19200$

Binary Line Coding Techniques
• Line coding: mapping of a binary information sequence into the digital signal that enters the baseband channel
• Symbol mapping
  – Unipolar: binary 1 is represented by a +A volts pulse and binary 0 by no pulse during a bit period
  – Polar: binary 1 is represented by a +A volts pulse and binary 0 by a –A volts pulse.
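The bit-rate relation $R_b = k \cdot D$ with $k = \log_2 M$ bits per pulse, and the unipolar and polar mappings, can be sketched in a few lines. The function names and the default amplitude below are illustrative assumptions, not part of the slides.

```python
def bits_per_symbol(levels):
    """k = log2(M) for an M-level PAM alphabet (M must be a power of two)."""
    if levels < 2 or levels & (levels - 1):
        raise ValueError("levels must be a power of two, at least 2")
    return levels.bit_length() - 1

def bit_rate(levels, pulse_rate):
    """R_b = k * D, with D the symbol (pulse) rate."""
    return bits_per_symbol(levels) * pulse_rate

def line_code(bits, scheme, amplitude=1.0):
    """Map bits to pulse amplitudes for the two schemes described above."""
    if scheme == "unipolar":      # 1 -> +A, 0 -> no pulse
        return [amplitude if b else 0.0 for b in bits]
    if scheme == "polar":         # 1 -> +A, 0 -> -A
        return [amplitude if b else -amplitude for b in bits]
    raise ValueError("unknown scheme: " + scheme)

binary_rate = bit_rate(2, 9600)        # binary signaling: one bit per pulse
quaternary_rate = bit_rate(4, 9600)    # quaternary signaling: two bits per pulse
polar = line_code([1, 0, 1, 1], "polar", amplitude=2.0)
unipolar = line_code([1, 0, 1, 1], "unipolar", amplitude=2.0)
```

With a pulse rate of 9600, binary signaling carries 9600 bit/s, and quaternary signaling carries twice that because each pulse represents two bits.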

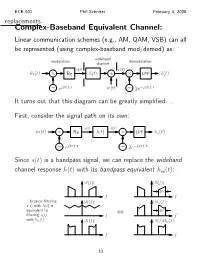

The Complex-Baseband Equivalent Channel Model

ECE-501, Phil Schniter, February 4, 2008

Complex-Baseband Equivalent Channel:

Linear communication schemes (e.g., AM, QAM, VSB) can all be represented (using complex-baseband mod/demod) as:

[Block diagram: $\tilde{m}(t)$ → multiply by $e^{j2\pi f_c t}$ → $\mathrm{Re}$ → $s(t)$ → wideband channel $h(t)$ → add noise $w(t)$ → $r(t)$ → multiply by $2e^{-j2\pi f_c t}$ → LPF → $\tilde{v}(t)$]

It turns out that this diagram can be greatly simplified. First, consider the signal path on its own:

[Block diagram: $\tilde{m}(t)$ → multiply by $e^{j2\pi f_c t}$ → $\mathrm{Re}$ → $s(t)$ → $h(t)$ → $x(t)$ → multiply by $2e^{-j2\pi f_c t}$ → LPF → $\tilde{v}_s(t)$]

Since $s(t)$ is a bandpass signal, we can replace the wideband channel response $h(t)$ with its bandpass equivalent $h_{bp}(t)$, because filtering $s(t)$ with $h(t)$ is equivalent to filtering $s(t)$ with $h_{bp}(t)$.

[Figure: spectra $|S(f)|$, $|H(f)|$ and $|H_{bp}(f)|$ with passbands of width $2W$, and $|X(f)| = |S(f)H_{bp}(f)|$]

Then, notice that

$$\big[s(t) * h_{bp}(t)\big]\, 2e^{-j2\pi f_c t} = \int s(\tau)\, h_{bp}(t-\tau)\, d\tau \cdot 2e^{-j2\pi f_c t}$$

$$= \int s(\tau)\, 2e^{-j2\pi f_c \tau}\; h_{bp}(t-\tau)\, e^{-j2\pi f_c (t-\tau)}\, d\tau$$

$$= \big[s(t)\, 2e^{-j2\pi f_c t}\big] * \big[h_{bp}(t)\, e^{-j2\pi f_c t}\big],$$

which means we can rewrite the block diagram as

[Block diagram: $\tilde{m}(t)$ → multiply by $e^{j2\pi f_c t}$ → $\mathrm{Re}$ → $s(t)$ → multiply by $2e^{-j2\pi f_c t}$ → $h_{bp}(t)e^{-j2\pi f_c t}$ → LPF → $\tilde{v}_s(t)$]

We can now reverse the order of the LPF and $h_{bp}(t)e^{-j2\pi f_c t}$ (since both are LTI systems), giving

[Block diagram: $\tilde{m}(t)$ → multiply by $e^{j2\pi f_c t}$ → $\mathrm{Re}$ → $s(t)$ → multiply by $2e^{-j2\pi f_c t}$ → LPF → $h_{bp}(t)e^{-j2\pi f_c t}$ → $\tilde{v}_s(t)$]

Since mod/demod is transparent (with synched oscillators), it can be removed, simplifying the block diagram to

[Block diagram: $\tilde{m}(t)$ → $h_{bp}(t)e^{-j2\pi f_c t}$ → $\tilde{v}_s(t)$]

Now, since $\tilde{m}(t)$ is bandlimited to $W$ Hz, there is no need to model the left component of $H_{bp}(f + f_c) = \mathcal{F}\{h_{bp}(t)e^{-j2\pi f_c t}\}$:

[Figure: $|H_{bp}(f)|$ with passbands at $\pm f_c$; $|H_{bp}(f + f_c)|$ with components at $-2f_c$ and $0$; $|\tilde{H}(f)|$ occupying $-B$ to $B$ around $0$]

Replacing $h_{bp}(t)e^{-j2\pi f_c t}$ with the complex-baseband response $\tilde{h}(t)$ gives the "complex-baseband equivalent" signal path:

[Block diagram: $\tilde{m}(t)$ → $\tilde{h}(t)$ → $\tilde{v}_s(t)$]

The spectra above show that $h_{bp}(t) = \mathrm{Re}\{\tilde{h}(t)\, 2e^{j2\pi f_c t}\}$.
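The step that moves the demodulating exponential inside the convolution can be checked numerically. Below is a minimal discrete-time sketch, with sums in place of the integrals; the sample rate, carrier, test signal, and filter taps are arbitrary assumed values, and `convolve` and `demod` are illustrative helpers.

```python
import cmath
import math

def convolve(x, h):
    """Full linear convolution of two finite sequences."""
    y = [0j] * (len(x) + len(h) - 1)
    for i, xi in enumerate(x):
        for j, hj in enumerate(h):
            y[i + j] += xi * hj
    return y

def demod(x, fc, fs, gain=1.0):
    """Multiply sample n of x by gain * exp(-j 2 pi fc n / fs)."""
    return [gain * v * cmath.exp(-2j * cmath.pi * fc * n / fs) for n, v in enumerate(x)]

FS, FC = 64.0, 16.0                       # assumed sample rate and carrier (Hz)
s = [math.cos(2 * math.pi * FC * n / FS) * (1 + 0.5 * math.cos(2 * math.pi * 2 * n / FS))
     for n in range(32)]                  # a slowly modulated carrier
h_bp = [0.5, 1.0, -0.3, 0.2, 0.1]         # arbitrary example filter taps

# Left side: filter first, then multiply by 2 exp(-j 2 pi fc t).
lhs = demod(convolve(s, h_bp), FC, FS, gain=2.0)

# Right side: demodulate the signal and the filter separately, then convolve.
rhs = convolve(demod(s, FC, FS, gain=2.0), demod(h_bp, FC, FS))

max_err = max(abs(a - b) for a, b in zip(lhs, rhs))
```

The two sides agree to rounding error because $e^{-j2\pi f_c t} = e^{-j2\pi f_c \tau}\, e^{-j2\pi f_c (t-\tau)}$ holds term by term inside the sum.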

IQ, Image Reject, & Single Sideband Mixer Primer

By: Doug Jorgesen

Introduction

Wireless communication and radar systems are under continuous pressure to reduce size, weight, and power while increasing dynamic range and bandwidth. The quest for higher performance in a smaller package motivates the use of IQ mixers: mixers that can simultaneously mix 'in-phase' and 'quadrature' components (sometimes called complex mixers). System designers use IQ mixers to eliminate or relax the requirements for filters, which are typically the largest and most expensive components in RF & microwave system designs. IQ mixers use phase manipulation to suppress signals instead of bulky, expensive filters.

The goal of this application note is to introduce IQ, single sideband (SSB), and image reject (IR) mixers in both theory and practice. We will discuss basic applications, the concepts behind them, and practical considerations in their selection and use. While we will focus on passive diode mixers (of the type Marki sells), the concepts are generally applicable to all types of IQ mixers and modulators.

[Figure 1A: double-sided upconversion with a conventional mixer (double sideband, filtered sideband, available bandwidth). Figure 1B: single-sided upconversion with a single sideband mixer (suppressed sideband).]

I. What can an IQ mixer do for you?

We'll start with a quick example of what a communication system looks like using a traditional mixer, a single sideband mixer (SSB), and an IQ mixer.

Difference Between Baseband and Broadband Pdf

There are two transmission methods used in local area networks, depending on the type of signaling (digital or analog) used for communication between two nodes: baseband and broadband. The two methods differ in how they use the transmission medium and in the type of signal used to multiplex several transmissions onto a single medium. Baseband is defined as a transmission format that uses digital signaling and uses the transmission medium as a single-channel device. Broadband is defined as a transmission format in which signals are modulated as radio-frequency analog waves that use different frequency ranges. The choice between baseband and broadband is determined by the application. Baseband refers to a communication channel in which information is transmitted digitally and which uses the transmission medium as a single-channel device. This means that one channel is used to communicate with devices on the network, allowing computers to transmit and receive data on one cable. Only one station can transmit at a time, and all stations must transmit and receive the same types of signals. Bidirectional communication means that the same channel is used to both transmit and receive signals. Every device in a baseband system shares the same channel. When one node transmits data across the baseband channel, all other nodes on the network must wait until the transmission ends before they can transmit. The only problem with baseband LANs is their limited capacity over a limited distance, which is no more than a few miles.

Quadrature Amplitude Modulation (QAM)

• PAM signals occupy twice the bandwidth required for the baseband
• Transmit two PAM signals using carriers of the same frequency but in phase and quadrature
• Demodulation: [figure]

QAM Transmitter Implementation

QAM is a widely used method for transmitting digital data over bandpass channels.

[Figure: a basic QAM transmitter. A serial-to-parallel converter and constellation mapper produce $a_n$ (in-phase component) and $b_n$ (quadrature component); each branch passes through an impulse modulator ($a^*(t)$, $b^*(t)$) and a pulse shaping filter $g_T(t)$ to give $a(t)$ and $b(t)$, which modulate the quadrature carriers and are combined into $s(t)$]

Using complex notation,

$$s(t) = a(t)\cos\omega_c t - b(t)\sin\omega_c t = \Re\{s_+(t)\},$$

where $s_+(t)$ is the pre-envelope of QAM:

$$s_+(t) = \sum_{k=-\infty}^{\infty} (a_k + j b_k)\, g_T(t - kT)\, e^{j\omega_c t} = \sum_{k=-\infty}^{\infty} \big(c_k e^{j\omega_c kT}\big)\, g_T(t - kT)\, e^{j\omega_c (t - kT)}$$

Complex Symbols

Quadriphase-Shift Keying (QPSK), 4-QAM: the transmitted signal is contained in the phase. [Figure: constellations in the real/imaginary plane]

M-ary PSK: QPSK is a special case of M-ary PSK, where the phase of the carrier takes on one of M possible values. [Figure: constellation in the real/imaginary plane]

Pulse Shaping Filters

The real pulse shaping filter response is $g_T(t)$. Let

$$h(t) = g_T(t)\, e^{j\omega_c t} = h_I(t) + j h_Q(t),$$

where

$$h_I(t) = g_T(t)\cos\omega_c t, \qquad h_Q(t) = g_T(t)\sin\omega_c t.$$

This filter is a bandpass filter with the frequency response $H(\omega) = G_T(\omega - \omega_c)$.

Transmitted Sequences

Modulated sequences before passband shaping:

$$a'_k = \Re\{c_k e^{j\omega_c kT}\} = a_k\cos\omega_c kT - b_k\sin\omega_c kT$$

$$b'_k = \Im\{c_k e^{j\omega_c kT}\} = a_k\sin\omega_c kT + b_k\cos\omega_c kT$$

Using these definitions,

$$s(t) = \Re\{s_+(t)\} = \sum_{k=-\infty}^{\infty} \big[a'_k\, h_I(t - kT) - b'_k\, h_Q(t - kT)\big]$$
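The equivalence between the direct form $\Re\{s_+(t)\}$ and the passband-shaping form built from $a'_k$, $b'_k$, $h_I$ and $h_Q$ can be checked numerically. This is a minimal sketch under assumed values: a rectangular pulse $g_T$, a carrier with $\omega_c T = 5\pi$, and four example symbols. None of these choices come from the slides.

```python
import cmath
import math

T = 1.0                                        # symbol period (assumed)
WC = 2 * math.pi * 2.5 / T                     # carrier in rad/s (assumed)
SYMBOLS = [1 + 1j, -1 + 3j, 3 - 1j, -3 - 3j]   # example c_k = a_k + j*b_k

def g_T(t):
    """Rectangular transmit pulse of duration T (an assumed pulse shape)."""
    return 1.0 if 0.0 <= t < T else 0.0

def s_direct(t):
    """s(t) = Re{ sum_k c_k g_T(t - kT) exp(j wc t) }."""
    total = sum(c * g_T(t - k * T) for k, c in enumerate(SYMBOLS))
    return (total * cmath.exp(1j * WC * t)).real

def s_passband(t):
    """s(t) = sum_k [ a'_k h_I(t - kT) - b'_k h_Q(t - kT) ]."""
    out = 0.0
    for k, c in enumerate(SYMBOLS):
        rotated = c * cmath.exp(1j * WC * k * T)   # c'_k = a'_k + j*b'_k
        tau = t - k * T
        h_i = g_T(tau) * math.cos(WC * tau)        # in-phase passband filter
        h_q = g_T(tau) * math.sin(WC * tau)        # quadrature passband filter
        out += rotated.real * h_i - rotated.imag * h_q
    return out

times = [0.01 * n for n in range(400)]
max_diff = max(abs(s_direct(t) - s_passband(t)) for t in times)
```

The carrier is chosen so that $\omega_c T$ is not a multiple of $2\pi$, which makes the rotation $c_k e^{j\omega_c kT}$ non-trivial; the two forms still agree to rounding error.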

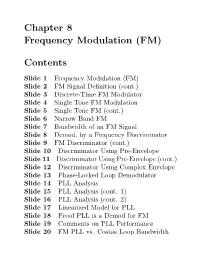

Chapter 8 Frequency Modulation (FM) Contents

Contents

Slide 1 Frequency Modulation (FM)
Slide 2 FM Signal Definition (cont.)
Slide 3 Discrete-Time FM Modulator
Slide 4 Single Tone FM Modulation
Slide 5 Single Tone FM (cont.)
Slide 6 Narrow Band FM
Slide 7 Bandwidth of an FM Signal
Slide 8 Demod. by a Frequency Discriminator
Slide 9 FM Discriminator (cont.)
Slide 10 Discriminator Using Pre-Envelope
Slide 11 Discriminator Using Pre-Envelope (cont.)
Slide 12 Discriminator Using Complex Envelope
Slide 13 Phase-Locked Loop Demodulator
Slide 14 PLL Analysis
Slide 15 PLL Analysis (cont. 1)
Slide 16 PLL Analysis (cont. 2)
Slide 17 Linearized Model for PLL
Slide 18 Proof PLL is a Demod for FM
Slide 19 Comments on PLL Performance
Slide 20 FM PLL vs. Costas Loop Bandwidth
Slide 21 Laboratory Experiments for FM
Slide 21 Experiment 8.1 Making an FM Modulator
Slide 22 Experiment 8.1 FM Modulator (cont. 1)
Slide 23 Experiment 8.2 Spectrum of an FM Signal
Slide 24 Experiment 8.2 FM Spectrum (cont. 1)
Slide 25 Experiment 8.2 FM Spectrum (cont. 2)
Slide 26 Experiment 8.2 FM Spectrum (cont. 3)
Slide 26 Experiment 8.3 Demodulation by a Discriminator
Slide 27 Experiment 8.3 Discriminator (cont. 1)
Slide 28 Experiment 8.3 Discriminator (cont. 2)
Slide 29 Experiment 8.4 Demodulation by a PLL
Slide 30 Experiment 8.4 PLL (cont.)

Chapter 8: Frequency Modulation (FM)

FM was invented and commercialized after AM. Its main advantage is that it is more resistant to additive noise than AM.

Instantaneous Frequency

The instantaneous frequency of $\cos\theta(t)$ is

$$\omega(t) = \frac{d}{dt}\theta(t) \tag{1}$$

Motivational Example

Let $\theta(t) = \omega_c t$.
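Equation (1) can be checked numerically for the motivational example $\theta(t) = \omega_c t$, whose derivative is the constant $\omega_c$. The sketch below approximates the derivative with a central difference; the 1 kHz carrier, the step size, and the function name are assumptions made for illustration.

```python
import math

def instantaneous_frequency(theta, t, dt=1e-6):
    """Approximate omega(t) = d(theta)/dt with a central difference."""
    return (theta(t + dt) - theta(t - dt)) / (2 * dt)

OMEGA_C = 2 * math.pi * 1000.0        # assumed carrier: 1 kHz, in rad/s

def theta(t):
    """Phase of an unmodulated carrier, theta(t) = omega_c * t."""
    return OMEGA_C * t

# The estimate should equal omega_c at any time instant.
estimates = [instantaneous_frequency(theta, t) for t in (0.0, 0.1, 0.5)]
```

Every entry of `estimates` matches `OMEGA_C` up to floating-point rounding, as expected for a phase that grows linearly in time.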

BCM85110 E-Band Networking SoC: Baseband System On-Chip (SoC) for Broadband Wireless Transmission Systems

OVERVIEW

[Figures: BCM85110 MilliMeterWave Modem; BCM985110 Evaluation Kit]

The MaxLinear BCM85110 is a baseband SoC for broadband wireless transmission systems. With its exceptional 20Gbps throughput, the BCM85110 provides a flexible solution that meets the evolving demands of fronthaul and backhaul networks, supporting both all outdoor unit (AODU) and pure IP transport implementations for 4G and 5G cellular networks.

The comprehensive functionality supported by the BCM85110 includes a complete processing chain, from the various user IP interface technologies to analog baseband signals. The BCM85110 supports large channel-spacing spans of between 50MHz and 2GHz, modulation rates from binary phase-shift keying (BPSK) to 1024-QAM, and high spectral efficiency supporting multiple-input and multiple-output (MIMO) transceivers and cross pole ...

FEATURES
■ 10Gbps link capacity
■ Supports:
  Large CSs Span (50MHz to 2GHz)
  BPSK to 1024 QAM
  XPIC
■ Configurable Low-Density Parity-Check (LDPC) or RS channel codes
■ High link availability with ACMB
■ Enhanced impairment correction capabilities and low phase noise support simple/fast HW design and lower RF cost
■ Integrated AFE, DACs and ADCs enable glueless connectivity to RF/IF analog circuitry
■ Large variety of services:
  XAUI / 1Gbps
  SGMII / 2.5GbE
  GPI
■ Fixed mode and low latency mode

APPLICATIONS
■ Macro cell backhauling
■ Small cell backhauling
■ Fiber replacement and extension
■ Wireless fronthaul

Recommendation ITU-R BO.650-2*,**

Standards for conventional television systems for satellite broadcasting in the channels defined by Appendix 30 of the Radio Regulations

(1986-1990-1992)

The ITU Radiocommunication Assembly,

considering

a) that the introduction of the broadcasting-satellite service offers the possibility of reducing the disparity between television standards throughout the world;

b) that this introduction also provides an opportunity, through technological developments, for improving the quality and increasing the quantity and diversity of the services offered to the public; additionally, it is possible to take advantage of new technology to introduce time-division multiplex systems in which the high degree of commonality can lead to economic multi-standard receivers;

c) that it will no doubt be necessary to retain 625-line and 525-line television systems;

d) that broadcasting-satellite services are being introduced using analogue composite coding according to Annex 1 of Recommendation ITU-R BT.470 for the vision signal;

e) that it is generally intended that broadcasting-satellite standards should facilitate the maximum utilization of existing terrestrial equipment, especially that which concerns individual and community reception media (receivers, cable, re-broadcasting methods of distribution etc.). For this purpose a unique baseband signal which is common to the satellite-broadcasting system and the terrestrial distribution network is desirable;

f) that the requirements as regards sensitivity to interference of the systems that can be used were defined by Appendix 30 of the Radio Regulations (RR);

g) that complete compatibility with existing receivers is in any event not possible for frequency-modulated satellite broadcasting transmissions;

* Note – The following Reports of the ITU-R were considered in relation with this Recommendation: ITU-R BT.624-4, ITU-R BO.632-4, ITU-R BS.795-3, ITU-R BT.802-3, ITU-R BO.953-2, ITU-R BO.954-2, ITU-R BO.1073-1, ITU-R BO.1074-1 and ITU-R BO.1228.