State of Art of Real-Time Singing Voice Synthesis

Total pages: 16

File type: PDF, size: 1020 KB

Recommended publications

University of Arts in Belgrade, Faculty of Music, Department of Musicology

University of Arts in Belgrade (Univerzitet umetnosti u Beogradu), Faculty of Music, Department of Musicology. Milan Milojković, Digital Technology in Serbian Art Music (Digitalna tehnologija u srpskoj umetničkoj muzici). Doctoral dissertation, Belgrade, 2017. Supervisor: Dr. Vesna Mikić, full professor, University of Arts in Belgrade, Faculty of Music, Department of Musicology. Committee members: Abstract: From the repurposing of military digital hardware by enthusiasts and amateurs after the Second World War, through the institutional development of the 1960s and 1970s and the global expansion of the 1980s and 1990s, computers have come a long way from an experiment to the default working tool in almost every human activity. In parallel with this development, the thread of its "intersection" with the field of art music is also traced; it manifested itself in the formation of the interdisciplinary artistic practice of computer music, created by music engineers, that is, composers who command both programming skills and digital sound synthesis. To translate musical systems and theories into computer programs, it was necessary to collect and process a large amount of data, so a shared humanities discipline, computational musicology, was also established. During the 1980s a new generation of authors entered the art scene and gradually began to do more and more of their work on the computer; the appearance of "home" computers coincides with the "transition" from modernism to postmodernism, and the idea of music engineering likewise undergoes a transformation from an objectivist, systematic, autonomous

Singing Voice Synthesis Using HMM Based TTS and MusicXML

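Journal of The Korea Society of Computer and Information, www.ksci.re.kr, Vol. 20, No. 5, May 2015, http://dx.doi.org/10.9708/jksci.2015.20.5.053. Singing Voice Synthesis Using HMM Based TTS and MusicXML. Najeeb Ullah Khan*, Jung-Chul Lee*. Summary (translated from Korean): Singing voice synthesis is the generation of a singing voice on a computer from given lyrics and a musical score. HMM-based speech synthesizers, widely used in text-to-speech systems, have recently been applied to singing voice synthesis as well. However, existing implementations require collecting and training on a large singing voice database, which makes them difficult to build. In addition, existing commercial singing voice synthesis systems use a piano-roll score representation that is unfamiliar to the general public, so an interface based on easy-to-read standard score notation is needed to make learning songs more convenient. To address these problems, this paper proposes a method that generates a singing voice by taking the HMM models of an existing read-speech synthesizer and modifying their parameter values with pitch and duration control methods suited to singing. It also proposes a way to implement a singing voice synthesis system that uses a MusicXML-based score editor for entering notes and lyrics as the front end and an HMM-based text-to-speech synthesizer as the back end. Singing voices synthesized with the proposed method were evaluated, and the results confirmed its potential for practical use. Keywords: text-to-speech, hidden Markov model, singing voice synthesis, score editor. Abstract: Singing voice synthesis is the generation of a song using a computer given its lyrics and musical notes. Hidden Markov models (HMMs) have proved to be the models of choice for text-to-speech synthesis. HMMs have also been used in singing voice synthesis research; however, a huge database is needed to train HMMs for singing voice synthesis.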

The Race of Sound: Listening, Timbre, and Vocality in African American Music

UCLA Recent Work. Title: The Race of Sound: Listening, Timbre, and Vocality in African American Music. Permalink: https://escholarship.org/uc/item/9sn4k8dr. ISBN: 9780822372646. Author: Eidsheim, Nina Sun. Publication Date: 2018-01-11. License: https://creativecommons.org/licenses/by-nc-nd/4.0/ (4.0). Peer reviewed. Refiguring American Music, a series edited by Ronald Radano, Josh Kun, and Nina Sun Eidsheim; Charles McGovern, contributing editor. Duke University Press, Durham and London, 2019. © 2019 Nina Sun Eidsheim. All rights reserved. Printed in the United States of America on acid-free paper ∞. Designed by Courtney Leigh Baker and typeset in Garamond Premier Pro by Copperline Book Services. Library of Congress Cataloging-in-Publication Data. Title: The race of sound : listening, timbre, and vocality in African American music / Nina Sun Eidsheim. Description: Durham : Duke University Press, 2018. | Series: Refiguring American music | Includes bibliographical references and index. Identifiers: LCCN 2018022952 (print) | LCCN 2018035119 (ebook) | ISBN 9780822372646 (ebook) | ISBN 9780822368564 (hardcover : alk. paper) | ISBN 9780822368687 (pbk. : alk. paper). Subjects: LCSH: African Americans--Music--Social aspects. | Music and race--United States. | Voice culture--Social aspects--United States. | Tone color (Music)--Social aspects--United States. | Music--Social aspects--United States. | Singing--Social aspects--United States. | Anderson, Marian, 1897–1993. | Holiday, Billie, 1915–1959. | Scott, Jimmy, 1925–2014. | Vocaloid (Computer file). Classification: LCC ML3917.U6 (ebook) | LCC ML3917.U6 E35 2018 (print) | DDC 781.2/308996073--dc23. LC record available at https://lccn.loc.gov/2018022952. Cover art: Nick Cave, Soundsuit, 2017.

Master's Thesis

Master's thesis: Creation of a Speech Database and of a Program for Its Analysis in the Context of Speech Synthesis with Spectral Models (Erstellung einer Sprachdatenbank sowie eines Programms zu deren Analyse im Kontext einer Sprachsynthese mit spektralen Modellen). Submitted for the academic degree of Master of Science to the Department of Mathematics, Natural Sciences and Computer Science of the Technische Hochschule Mittelhessen by Tobias Platen, August 2014. Referee: Prof. Dr. Erdmuthe Meyer zu Bexten. Co-referee: Prof. Dr. Keywan Sohrabi. Statutory declaration: I hereby declare that I wrote this thesis independently, using only the literature and aids indicated. The thesis has not previously been submitted in the same or a similar form to any other examination board, nor has it been published. Contents: 1 Introduction: 1.1 Motivation; 1.2 Goals; 1.3 Historical speech synthesizers (1.3.1 The speaking machine; 1.3.2 The vocoder and the Voder; 1.3.3 Linear predictive coding); 1.4 Modern speech synthesis algorithms (1.4.1 Formant synthesis; 1.4.2 Concatenative synthesis). 2 Spectral models for speech synthesis: 2.1 Convolution, Fourier transform and vocoder; 2.2 Phase vocoder; 2.3 Spectral Model Synthesis (2.3.1 Harmonic trajectories; 2.3.2 Shape invariance).

Expression Control in Singing Voice Synthesis

Expression Control in Singing Voice Synthesis: Features, approaches, evaluation, and challenges. Martí Umbert, Jordi Bonada, Masataka Goto, Tomoyasu Nakano, and Johan Sundberg. Digital Object Identifier 10.1109/MSP.2015.2424572. Date of publication: 13 October 2015. IEEE Signal Processing Magazine, November 2015. In the context of singing voice synthesis, expression control manipulates a set of voice features related to a particular emotion, style, or singer. Also known as performance modeling, it has been approached from different perspectives and for different purposes, and different projects have shown a wide extent of applicability. The aim of this article is to provide an overview of approaches to expression control in singing voice synthesis. We introduce some musical applications that use singing voice synthesis techniques to justify the need for an accurate control of expression. Then, expression is defined and related to speech and instrument performance modeling. Next, we present the commonly studied set of voice parameters that can change [...] voices that are difficult to produce naturally (e.g., castrati). More examples can be found with pedagogical purposes or as tools to identify perceptually relevant voice properties [3]. These applications of the so-called music information research field may have a great impact on the way we interact with music [4]. Examples of research projects using singing voice synthesis technologies are listed in Table 1.

Table 1. Research projects using singing voice synthesis technologies.

| Project | Website |
| --- | --- |
| CANTOR | HTTP://WWW.VIRSYN.DE |
| CANTOR DIGITALIS | HTTPS://CANTORDIGITALIS.LIMSI.FR/ |
| CHANTER | HTTPS://CHANTER.LIMSI.FR |

NEA Grant Search - Data As of 02-10-2020 532 Matches

Bay Street Theatre Festival, Inc. (aka Bay Street Theater and Sag Harbor Center for the Arts), 1853707-32-19, Sag Harbor, NY 11963-0022. To support Literature Live!, a theater education program that presents professional performances based on classic literature for middle and high school students. Plays are selected to support the curricula of local schools and New York State learning standards. The program includes talkbacks with the cast, and teachers are provided with free study guides and lesson plans. Fiscal Year: 2019. Congressional District: 1. Grant Amount: $10,000. Category: Art Works. Discipline: Theater. Grant Period: 06/2019 - 12/2019.

Herstory Writers Workshop, Inc, 1854118-52-19, Centereach, NY 11720-3597. To support writing workshops in correctional facilities and for public school students. Herstory will offer weekly literary memoir writing workshops for women and adolescent girls in Long Island jails. In addition, the organization's program for young writers will bring students from Long Island and Queens County school districts to college campuses to develop their craft. Fiscal Year: 2019. Congressional District: 1. Grant Amount: $20,000. Category: Art Works. Discipline: Literature. Grant Period: 06/2019 - 05/2020.

Lindenhurst Memorial Library, 1859011-59-19, Lindenhurst, NY 11757-5399. To support multidisciplinary performances and public programming in community locations throughout Lindenhurst, New York. Programming will include events such as live performances, exhibitions, local author programs, and other arts activities selected based on feedback from local residents. The library will feature cultural events reflecting the diversity of the area. Fiscal Year: 2019. Congressional District: 2. Grant Amount: $10,000. Category: Challenge America: Arts Engagement in American Communities. Discipline: Arts Engagement in American Communities. Grant Period: 07/2019 - 06/2020.

Quintet of the Americas, Inc.

What Is an Instrument?

• Anything with which we can make music…?
• Rhythm, melody, chords
• Pitched or un-pitched sounds…
• Narrow definitions vs. extremely broad definitions

Tone Colour or Timbre (pronounced TAM-ber)
• Refers to the sound of a note or pitch
  – Not the highness or lowness of the pitch itself
• Different instruments have different timbres
  – We use words like smooth, rough, sweet, dark
  – Ineffable?

Range
• Instruments and voices have a range of notes they can play or sing
  – Demo guitar and voice
• Lowest to highest sounds
• Ways to push beyond the standard range

Five Categories of Musical Instruments
Classification system devised in India in the 3rd or 4th century B.C.
1. Aerophones: wind instruments, anything using air
2. Chordophones: stringed instruments
3. Membranophones: drums with heads
4. Idiophones: non-drum percussion
5. Electrophones: electronic sounds

1. Aerophones
• Wind instruments, anything using air
• Aerophones are generally either:
  – Woodwind (doesn't have to be wood, e.g. flute)
  – Reed (small piece of wood, e.g. saxophone)
  – Brass (lip vibration, e.g. trumpet)

Flute
• Woodwind family
• At least 30,000 years old (bone)
Ex: Claude Debussy – “Syrinx” (1913) https://www.youtube.com/watch?v=C_yf7FIyu1Y
Ex: Jurassic 5 – “Flute Loop” (2000)
Ex: Van Morrison – “Moondance” (1970) (chorus)
Ex: Gil Scott-Heron – “The Bottle” (1974)
Ex: Anchorman “Jazz Flute” (0:55) https://www.youtube.com/watch?v=Dh95taIdCo0

Bass Flute
• One octave lower than a regular flute
Ex: Overture from The Jungle Book https://www.youtube.com/watch?v=UUH42ciR5SA
• Other related instruments:
  – Piccolo (one octave higher than a flute)
  – Pan flutes
  – Bone or wooden flutes

Accordion
• Modern accordion: early 19th C.

Attuning Speech-Enabled Interfaces to User and Context for Inclusive Design: Technology, Methodology and Practice

Univ Access Inf Soc (2009) 8:109–122, DOI 10.1007/s10209-008-0136-x. LONG PAPER. Attuning speech-enabled interfaces to user and context for inclusive design: technology, methodology and practice. Mark A. Neerincx, Anita H. M. Cremers, Judith M. Kessens, David A. van Leeuwen, Khiet P. Truong. Published online: 7 August 2008. © The Author(s) 2008. Abstract: This paper presents a methodology to apply speech technology for compensating sensory, motor, cognitive and affective usage difficulties. It distinguishes (1) an analysis of accessibility and technological issues for the identification of context-dependent user needs and corresponding opportunities to include speech in multimodal user interfaces, and (2) an iterative generate-and-test process to refine the interface prototype and its design rationale. Best practices show that such inclusion of speech technology, although still imperfect in itself, can enhance both the functional and affective information and communication technology-experiences of specific user groups, such as persons with reading difficulties, hearing-impaired, intellectually disabled, children and older adults. 1 Introduction: Speech technology seems to provide new opportunities to improve the accessibility of electronic services and software applications, by offering compensation for limitations of specific user groups. These limitations can be quite diverse and originate from specific sensory, physical or cognitive disabilities, such as difficulties to see icons, to control a mouse or to read text. Such limitations have both functional and emotional aspects that should be addressed in the design of user interfaces (cf. [49]). Speech technology can be an ‘enabler’ for understanding both the content and ‘tone’ in user expressions, and for producing the right

Voice Synthesizer Application Android

Speech synthesis is the artificial production of human speech. The computer system used for this purpose is called a speech computer or speech synthesizer, and can be implemented in software or hardware. A text-to-speech (TTS) system converts ordinary language text into speech; other systems render symbolic linguistic representations, such as phonetic transcriptions, into speech. Synthesized speech can be created by concatenating fragments of recorded speech that are stored in a database. Systems vary in the size of the stored speech units: a system that stores phones or diphones provides the greatest output range, but may lack clarity. For use in a specific domain, storing whole words or sentences allows for high-quality output. Alternatively, a synthesizer may include a vocal tract model and other characteristics of the human voice to create a fully synthetic voice output. The quality of a speech synthesizer is judged by its similarity to the human voice and by its ability to be understood clearly. An intelligible text-to-speech program allows people with visual impairments or reading disabilities to listen to written words on their home computer. Many computer operating systems have included speech synthesizers since the early 1990s. Overview of a typical TTS system. Automatic announcement: a synthetic voice announces an arriving train in Sweden.

Observations on the Dynamic Control of an Articulatory Synthesizer Using Speech Production Data

Observations on the dynamic control of an articulatory synthesizer using speech production data. Dissertation for the degree of Doctor of Philosophy, Faculties of Philosophy (Philosophische Fakultäten), Universität des Saarlandes, submitted by Ingmar Michael Augustus Steiner from San Francisco. Saarbrücken, 2010. Dean: Prof. Dr. Erich Steiner. Reviewers: Prof. Dr. William J. Barry, Prof. Dr. Dietrich Klakow. Date of submission: 14.12.2009. Date of defense: 19.05.2010. Contents: Acknowledgments; List of Figures; List of Tables; List of Listings; List of Acronyms; Zusammenfassung und Überblick (summary and overview in German); Summary and overview. 1 Speech synthesis methods: 1.1 Text-to-speech (1.1.1 Formant synthesis; 1.1.2 Concatenative synthesis; 1.1.3 Statistical parametric synthesis; 1.1.4 Articulatory synthesis; 1.1.5 Physical articulatory synthesis); 1.2 VocalTractLab in depth (1.2.1 Vocal tract model; 1.2.2 Gestural model; 1.2.3 Acoustic model; 1.2.4 Speaker adaptation). 2 Speech production data: 2.1 Full imaging (2.1.1 X-ray and cineradiography; 2.1.2 Magnetic resonance imaging; 2.1.3 Ultrasound tongue imaging).

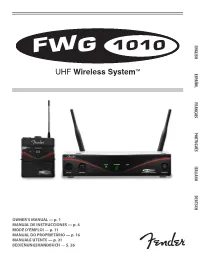

FWG 1010 English

FWG 1010 UHF Wireless System™. Owner's manual in English, Español, Français, Português, Italiano, and Deutsch. Symbols Used: The lightning flash with arrow point in an equilateral triangle means that there are dangerous voltages present within the unit. The exclamation point in an equilateral triangle on the equipment indicates that it is necessary for the user to refer to the User Manual. In the User Manual, this symbol marks instructions that the user must follow to ensure safe operation of the equipment. Safety and Environment. Environment: • The power supply unit consumes a small amount of electricity even when the unit is switched off. To save energy, unplug the power supply unit from the socket if you are not going to be using the unit for some time. • The packaging is recyclable. Dispose of the packaging in an appropriate recycling collection system. • If you scrap the unit, separate the case, electronics and cables and dispose of all the components in accordance with the appropriate waste disposal regulations. FCC Statement: The transmitter has been tested and found to comply with the limits for a low-power auxiliary station pursuant to Part 74 of the FCC Rules. The receiver has been tested and found to comply with the limits for a Class B digital device, pursuant to Part 15 of the FCC Rules. Safety: • Do not spill any liquids on the equipment. • Do not place any containers containing liquid on the device or

Make It New: Reshaping Jazz in the 21St Century

Make It New: Reshaping Jazz in the 21st Century. Bill Beuttler. Copyright © 2019 by Bill Beuttler. Lever Press (leverpress.org) is a publisher of pathbreaking scholarship. Supported by a consortium of liberal arts institutions focused on, and renowned for, excellence in both research and teaching, our press is grounded on three essential commitments: to be a digitally native press, to be a peer-reviewed, open access press that charges no fees to either authors or their institutions, and to be a press aligned with the ethos and mission of liberal arts colleges. This work is licensed under the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License. To view a copy of this license, visit http://creativecommons.org/licenses/by-nc-nd/4.0/ or send a letter to Creative Commons, PO Box 1866, Mountain View, California, 94042, USA. DOI: https://doi.org/10.3998/mpub.11469938. Print ISBN: 978-1-64315-005-5. Open access ISBN: 978-1-64315-006-2. Library of Congress Control Number: 2019944840. Published in the United States of America by Lever Press, in partnership with Amherst College Press and Michigan Publishing. Contents: Member Institution Acknowledgments; Introduction; 1. Jason Moran; 2. Vijay Iyer; 3. Rudresh Mahanthappa; 4. The Bad Plus; 5. Miguel Zenón; 6. Anat Cohen; 7. Robert Glasper; 8. Esperanza Spalding; Epilogue; Interview Sources; Notes; Acknowledgments. Member Institution Acknowledgments: Lever Press is a joint venture. This work was made possible by the generous support of