Entity Recognition in the Biomedical Domain Using a Hybrid Approach Marco Basaldella1†, Lenz Furrer2†, Carlo Tasso1 and Fabio Rinaldi2*

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

A Novel Ve-Zinc Nger-Related-Gene Signature Related to Tumor Immune

A novel ve-zinc nger-related-gene signature related to tumor immune microenvironment allows for treatment stratication and predicts prognosis in clear cell renal cell carcinoma patients Feilong Zhang Beijing Chaoyang Hospital Jiyue Wu Beijing Chaoyang Hospital Jiandong Zhang Beijing Chaoyang Hospital Peng Cao Beijing Chaoyang Hospital Zejia Sun Beijing Chaoyang Hospital Wei Wang ( [email protected] ) Beijing Chaoyang Hospital https://orcid.org/0000-0003-2642-3338 Research Keywords: Posted Date: February 23rd, 2021 DOI: https://doi.org/10.21203/rs.3.rs-207677/v1 License: This work is licensed under a Creative Commons Attribution 4.0 International License. Read Full License Page 1/25 Abstract Background Clear cell renal cell carcinoma (ccRCC) is one of the most prevalent renal malignant tumors, which survival rate and quality of life of ccRCC patients are not satisfactory. Therefore, identication of prognostic biomarkers of ccRCC patients will contribute to early and accurate clinical intervention and treatment, and then improve their prognosis. Methods We downloaded the original expression data of mRNAs from The Cancer Genome Atlas database and the zinc nger(ZNF)-related genes (ZRGs) from UniProt online database. Differentially expressed ZRGs (DE- ZRGs) was screened from tumor and adjacent nontumor tissues and functional enrichment analysis was conducted out. A ve-ZRG signature were constructed by univariate Cox regression, least absolute shrinkage and selection operator and multivariate Cox regression. Furthermore, we screened out independent prognosis-related factors to build a nomogram by univariate and multivariate Cox regression. Potential biological pathways of ve ZRGs were analyzed by Gene Set Enrichment Analysis (GSEA). Then, we further quantitatively analyze immune inltration and evaluate tumor microenvironment by single sample GSEA. -

Whole-Exome Sequencing Identifies Causative Mutations in Families

BASIC RESEARCH www.jasn.org Whole-Exome Sequencing Identifies Causative Mutations in Families with Congenital Anomalies of the Kidney and Urinary Tract Amelie T. van der Ven,1 Dervla M. Connaughton,1 Hadas Ityel,1 Nina Mann,1 Makiko Nakayama,1 Jing Chen,1 Asaf Vivante,1 Daw-yang Hwang,1 Julian Schulz,1 Daniela A. Braun,1 Johanna Magdalena Schmidt,1 David Schapiro,1 Ronen Schneider,1 Jillian K. Warejko,1 Ankana Daga,1 Amar J. Majmundar,1 Weizhen Tan,1 Tilman Jobst-Schwan,1 Tobias Hermle,1 Eugen Widmeier,1 Shazia Ashraf,1 Ali Amar,1 Charlotte A. Hoogstraaten,1 Hannah Hugo,1 Thomas M. Kitzler,1 Franziska Kause,1 Caroline M. Kolvenbach,1 Rufeng Dai,1 Leslie Spaneas,1 Kassaundra Amann,1 Deborah R. Stein,1 Michelle A. Baum,1 Michael J.G. Somers,1 Nancy M. Rodig,1 Michael A. Ferguson,1 Avram Z. Traum,1 Ghaleb H. Daouk,1 Radovan Bogdanovic,2 Natasa Stajic,2 Neveen A. Soliman,3,4 Jameela A. Kari,5,6 Sherif El Desoky,5,6 Hanan M. Fathy,7 Danko Milosevic,8 Muna Al-Saffar,1,9 Hazem S. Awad,10 Loai A. Eid,10 Aravind Selvin,11 Prabha Senguttuvan,12 Simone Sanna-Cherchi,13 Heidi L. Rehm,14 Daniel G. MacArthur,14,15 Monkol Lek,14,15 Kristen M. Laricchia,15 Michael W. Wilson,15 Shrikant M. Mane,16 Richard P. Lifton,16,17 Richard S. Lee,18 Stuart B. Bauer,18 Weining Lu,19 Heiko M. Reutter ,20,21 Velibor Tasic,22 Shirlee Shril,1 and Friedhelm Hildebrandt1 Due to the number of contributing authors, the affiliations are listed at the end of this article. -

Identification of Functionally Active, Low Frequency Copy Number Variants at 15Q21.3 and 12Q21.31 Associated with Prostate Cance

Identification of functionally active, low frequency copy number variants at 15q21.3 and 12q21.31 associated with prostate cancer risk Francesca Demichelisa,b,c,1,2, Sunita R. Setlurd,1, Samprit Banerjeee, Dimple Chakravartya, Jin Yun Helen Chend, Chen X. Chena, Julie Huanga, Himisha Beltranf, Derek A. Oldridgea, Naoki Kitabayashia, Birgit Stenzelg, Georg Schaeferg, Wolfgang Horningerg, Jasmin Bekticg, Arul M. Chinnaiyanh, Sagit Goldenbergi, Javed Siddiquih,j, Meredith M. Regank, Michale Kearneyl, T. David Soongb, David S. Rickmana, Olivier Elementob, John T. Weij, Douglas S. Scherri, Martin A. Sandal, Georg Bartschg, Charles Leed,1, Helmut Klockerg,1, and Mark A. Rubina,i,1,2 aDepartment of Pathology and Laboratory Medicine, eDepartment of Public Health, iDepartment of Urology, fDivision of Hematology and Medical Oncology, and bInstitute for Computational Biomedicine, Weill Cornell Medical College, New York, NY 10065; cCentre for Integrative Biology, University of Trento, 38122 Trento, Italy; dDepartment of Pathology, Brigham and Women’s Hospital and Harvard Medical School, Boston, MA 02115; gDepartment of Urology, Innsbruck Medical University, 6020 Innsbruck, Austria; jDepartment of Urology and hMichigan Center for Translational Pathology, University of Michigan Medical School, Ann Arbor, MI 48109; kBiostatistics and Computational Biology, Dana Farber Cancer Institute, Boston, MA 02115; and lDepartment of Urology, Beth Israel Deaconess Medical Center, Boston, MA 02115 Edited* by Patricia K. Donahoe, Massachusetts General Hospital and Harvard Medical School, Boston, MA, and approved March 8, 2012 (received for review October 22, 2011) Copy number variants (CNVs) are a recently recognized class of based prostate cancer screening program started in 1993 that human germ line polymorphisms and are associated with a variety intended to evaluate the utility of intensive PSA screening in of human diseases, including cancer. -

The Role of Pygopus 2 in Neural Crest

The role of Pygopus 2 in Neural Crest KEVIN ROBERT GILLINDER A Thesis submitted for the Degree of Doctor of Philosophy Institute of Genetic Medicine Newcastle University April 2012 Abstract Epidermal neural crest stem cells (EPI-NCSC) are remnants of the embryonic neural crest that reside in a postnatal location, the bulge of rodent and human hair follicles. They are multipotent stem cells and are easily accessible in the hairy skin. They do not form tumours after transplantation, and because they can be expanded in vitro, these cells are promising candidates for autologous transplantation in cell replacement therapy and biomedical engineering. Pygopus 2 (Pygo2) is a signature gene of EPI-NCSC being specifically expressed in embryonic neural crest stem cells (NCSC) and hair follicle-derived EPI-NCSC, but not in other known skin-resident stem cells. Pygo2 is particularly interesting, as it is an important transducer of the Wnt signaling pathway, known to play key roles in the regulation of NCSC migration, proliferation, and differentiation. This study focuses on the role of Pygo2 in the development of the neural crest (NC) in vertebrates during development. Three loss-of-function models were utilized to determine the role Pygo2 in mouse and zebrafish development, and EPI-NCSC ex vivo. A Wnt1-specific loss of Pygo2 in mice causes multi-organ birth defects in multiple NC derived organs. In addition, morpholino (MO) knockdown of pygo homologs within zebrafish leads to NC related craniofacial abnormalities, together with a gastrulation defect during early embryogenesis. While ex vivo studies using EPI-NCSC suggest a role for Pygo2 in cellular proliferation. -

Wingless Signaling: a Genetic Journey from Morphogenesis to Metastasis

| FLYBOOK CELL SIGNALING Wingless Signaling: A Genetic Journey from Morphogenesis to Metastasis Amy Bejsovec1 Department of Biology, Duke University, Durham, North Carolina 27708 ORCID ID: 0000-0002-8019-5789 (A.B.) ABSTRACT This FlyBook chapter summarizes the history and the current state of our understanding of the Wingless signaling pathway. Wingless, the fly homolog of the mammalian Wnt oncoproteins, plays a central role in pattern generation during development. Much of what we know about the pathway was learned from genetic and molecular experiments in Drosophila melanogaster, and the core pathway works the same way in vertebrates. Like most growth factor pathways, extracellular Wingless/ Wnt binds to a cell surface complex to transduce signal across the plasma membrane, triggering a series of intracellular events that lead to transcriptional changes in the nucleus. Unlike most growth factor pathways, the intracellular events regulate the protein stability of a key effector molecule, in this case Armadillo/b-catenin. A number of mysteries remain about how the “destruction complex” desta- bilizes b-catenin and how this process is inactivated by the ligand-bound receptor complex, so this review of the field can only serve as a snapshot of the work in progress. KEYWORDS beta-catenin; FlyBook; signal transduction; Wingless; Wnt TABLE OF CONTENTS Abstract 1311 Introduction 1312 Introduction: Origin of the Wnt Name 1312 Discovery of the fly gene: the wingless mutant phenotype 1312 Discovery of wg homology to the mammalian oncogene int-1 1313 -

The Changing Chromatome As a Driver of Disease: a Panoramic View from Different Methodologies

The changing chromatome as a driver of disease: A panoramic view from different methodologies Isabel Espejo1, Luciano Di Croce,1,2,3 and Sergi Aranda1 1. Centre for Genomic Regulation (CRG), Barcelona Institute of Science and Technology, Dr. Aiguader 88, Barcelona 08003, Spain 2. Universitat Pompeu Fabra (UPF), Barcelona, Spain 3. ICREA, Pg. Lluis Companys 23, Barcelona 08010, Spain *Corresponding authors: Luciano Di Croce ([email protected]) Sergi Aranda ([email protected]) 1 GRAPHICAL ABSTRACT Chromatin-bound proteins regulate gene expression, replicate and repair DNA, and transmit epigenetic information. Several human diseases are highly influenced by alterations in the chromatin- bound proteome. Thus, biochemical approaches for the systematic characterization of the chromatome could contribute to identifying new regulators of cellular functionality, including those that are relevant to human disorders. 2 SUMMARY Chromatin-bound proteins underlie several fundamental cellular functions, such as control of gene expression and the faithful transmission of genetic and epigenetic information. Components of the chromatin proteome (the “chromatome”) are essential in human life, and mutations in chromatin-bound proteins are frequently drivers of human diseases, such as cancer. Proteomic characterization of chromatin and de novo identification of chromatin interactors could thus reveal important and perhaps unexpected players implicated in human physiology and disease. Recently, intensive research efforts have focused on developing strategies to characterize the chromatome composition. In this review, we provide an overview of the dynamic composition of the chromatome, highlight the importance of its alterations as a driving force in human disease (and particularly in cancer), and discuss the different approaches to systematically characterize the chromatin-bound proteome in a global manner. -

The Pdx1 Bound Swi/Snf Chromatin Remodeling Complex Regulates Pancreatic Progenitor Cell Proliferation and Mature Islet Β Cell

Page 1 of 125 Diabetes The Pdx1 bound Swi/Snf chromatin remodeling complex regulates pancreatic progenitor cell proliferation and mature islet β cell function Jason M. Spaeth1,2, Jin-Hua Liu1, Daniel Peters3, Min Guo1, Anna B. Osipovich1, Fardin Mohammadi3, Nilotpal Roy4, Anil Bhushan4, Mark A. Magnuson1, Matthias Hebrok4, Christopher V. E. Wright3, Roland Stein1,5 1 Department of Molecular Physiology and Biophysics, Vanderbilt University, Nashville, TN 2 Present address: Department of Pediatrics, Indiana University School of Medicine, Indianapolis, IN 3 Department of Cell and Developmental Biology, Vanderbilt University, Nashville, TN 4 Diabetes Center, Department of Medicine, UCSF, San Francisco, California 5 Corresponding author: [email protected]; (615)322-7026 1 Diabetes Publish Ahead of Print, published online June 14, 2019 Diabetes Page 2 of 125 Abstract Transcription factors positively and/or negatively impact gene expression by recruiting coregulatory factors, which interact through protein-protein binding. Here we demonstrate that mouse pancreas size and islet β cell function are controlled by the ATP-dependent Swi/Snf chromatin remodeling coregulatory complex that physically associates with Pdx1, a diabetes- linked transcription factor essential to pancreatic morphogenesis and adult islet-cell function and maintenance. Early embryonic deletion of just the Swi/Snf Brg1 ATPase subunit reduced multipotent pancreatic progenitor cell proliferation and resulted in pancreas hypoplasia. In contrast, removal of both Swi/Snf ATPase subunits, Brg1 and Brm, was necessary to compromise adult islet β cell activity, which included whole animal glucose intolerance, hyperglycemia and impaired insulin secretion. Notably, lineage-tracing analysis revealed Swi/Snf-deficient β cells lost the ability to produce the mRNAs for insulin and other key metabolic genes without effecting the expression of many essential islet-enriched transcription factors. -

Transcriptome Profiling Reveals the Complexity of Pirfenidone Effects in IPF

ERJ Express. Published on August 30, 2018 as doi: 10.1183/13993003.00564-2018 Early View Original article Transcriptome profiling reveals the complexity of pirfenidone effects in IPF Grazyna Kwapiszewska, Anna Gungl, Jochen Wilhelm, Leigh M. Marsh, Helene Thekkekara Puthenparampil, Katharina Sinn, Miroslava Didiasova, Walter Klepetko, Djuro Kosanovic, Ralph T. Schermuly, Lukasz Wujak, Benjamin Weiss, Liliana Schaefer, Marc Schneider, Michael Kreuter, Andrea Olschewski, Werner Seeger, Horst Olschewski, Malgorzata Wygrecka Please cite this article as: Kwapiszewska G, Gungl A, Wilhelm J, et al. Transcriptome profiling reveals the complexity of pirfenidone effects in IPF. Eur Respir J 2018; in press (https://doi.org/10.1183/13993003.00564-2018). This manuscript has recently been accepted for publication in the European Respiratory Journal. It is published here in its accepted form prior to copyediting and typesetting by our production team. After these production processes are complete and the authors have approved the resulting proofs, the article will move to the latest issue of the ERJ online. Copyright ©ERS 2018 Copyright 2018 by the European Respiratory Society. Transcriptome profiling reveals the complexity of pirfenidone effects in IPF Grazyna Kwapiszewska1,2, Anna Gungl2, Jochen Wilhelm3†, Leigh M. Marsh1, Helene Thekkekara Puthenparampil1, Katharina Sinn4, Miroslava Didiasova5, Walter Klepetko4, Djuro Kosanovic3, Ralph T. Schermuly3†, Lukasz Wujak5, Benjamin Weiss6, Liliana Schaefer7, Marc Schneider8†, Michael Kreuter8†, Andrea Olschewski1, -

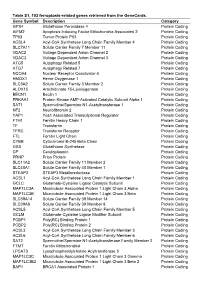

Table S1. 103 Ferroptosis-Related Genes Retrieved from the Genecards

Table S1. 103 ferroptosis-related genes retrieved from the GeneCards. Gene Symbol Description Category GPX4 Glutathione Peroxidase 4 Protein Coding AIFM2 Apoptosis Inducing Factor Mitochondria Associated 2 Protein Coding TP53 Tumor Protein P53 Protein Coding ACSL4 Acyl-CoA Synthetase Long Chain Family Member 4 Protein Coding SLC7A11 Solute Carrier Family 7 Member 11 Protein Coding VDAC2 Voltage Dependent Anion Channel 2 Protein Coding VDAC3 Voltage Dependent Anion Channel 3 Protein Coding ATG5 Autophagy Related 5 Protein Coding ATG7 Autophagy Related 7 Protein Coding NCOA4 Nuclear Receptor Coactivator 4 Protein Coding HMOX1 Heme Oxygenase 1 Protein Coding SLC3A2 Solute Carrier Family 3 Member 2 Protein Coding ALOX15 Arachidonate 15-Lipoxygenase Protein Coding BECN1 Beclin 1 Protein Coding PRKAA1 Protein Kinase AMP-Activated Catalytic Subunit Alpha 1 Protein Coding SAT1 Spermidine/Spermine N1-Acetyltransferase 1 Protein Coding NF2 Neurofibromin 2 Protein Coding YAP1 Yes1 Associated Transcriptional Regulator Protein Coding FTH1 Ferritin Heavy Chain 1 Protein Coding TF Transferrin Protein Coding TFRC Transferrin Receptor Protein Coding FTL Ferritin Light Chain Protein Coding CYBB Cytochrome B-245 Beta Chain Protein Coding GSS Glutathione Synthetase Protein Coding CP Ceruloplasmin Protein Coding PRNP Prion Protein Protein Coding SLC11A2 Solute Carrier Family 11 Member 2 Protein Coding SLC40A1 Solute Carrier Family 40 Member 1 Protein Coding STEAP3 STEAP3 Metalloreductase Protein Coding ACSL1 Acyl-CoA Synthetase Long Chain Family Member 1 Protein -

Pan-Cancer Multi-Omics Analysis and Orthogonal Experimental Assessment of Epigenetic Driver Genes

Downloaded from genome.cshlp.org on October 9, 2021 - Published by Cold Spring Harbor Laboratory Press Resource Pan-cancer multi-omics analysis and orthogonal experimental assessment of epigenetic driver genes Andrea Halaburkova, Vincent Cahais, Alexei Novoloaca, Mariana Gomes da Silva Araujo, Rita Khoueiry,1 Akram Ghantous,1 and Zdenko Herceg1 Epigenetics Group, International Agency for Research on Cancer (IARC), 69008 Lyon, France The recent identification of recurrently mutated epigenetic regulator genes (ERGs) supports their critical role in tumorigen- esis. We conducted a pan-cancer analysis integrating (epi)genome, transcriptome, and DNA methylome alterations in a cu- rated list of 426 ERGs across 33 cancer types, comprising 10,845 tumor and 730 normal tissues. We found that, in addition to mutations, copy number alterations in ERGs were more frequent than previously anticipated and tightly linked to ex- pression aberrations. Novel bioinformatics approaches, integrating the strengths of various driver prediction and multi- omics algorithms, and an orthogonal in vitro screen (CRISPR-Cas9) targeting all ERGs revealed genes with driver roles with- in and across malignancies and shared driver mechanisms operating across multiple cancer types and hallmarks. This is the largest and most comprehensive analysis thus far; it is also the first experimental effort to specifically identify ERG drivers (epidrivers) and characterize their deregulation and functional impact in oncogenic processes. [Supplemental material is available for this article.] Although it has long been known that human cancers harbor both gression, potentially acting as oncogenes or tumor suppressors genetic and epigenetic changes, with an intricate interplay be- (Plass et al. 2013; Vogelstein et al. 2013). -

Mutations in Bcl9 and Pygo Genes Cause Congenital Heart Defects by Tissue-Specific Perturbation of Wnt/Β-Catenin Signaling

bioRxiv preprint doi: https://doi.org/10.1101/249680; this version posted January 17, 2018. The copyright holder for this preprint (which was not certified by peer review) is the author/funder, who has granted bioRxiv a license to display the preprint in perpetuity. It is made available under aCC-BY-NC-ND 4.0 International license. Mutations in Bcl9 and Pygo genes cause congenital heart defects by tissue-specific perturbation of Wnt/-catenin signaling Claudio Cantù1,†, Anastasia Felker1,†, Dario Zimmerli1,†, Elena Chiavacci1, Elena María Cabello1, Lucia Kirchgeorg1, Tomas Valenta1, George Hausmann1, Jorge Ripoll2, Natalie Vilain3, Michel Aguet3, Konrad Basler1,*, Christian Mosimann1,* 1 Institute of Molecular Life Sciences, University of Zürich, Winterthurerstrasse 190, 8057 Zürich, Switzerland. 2 Department of Bioengineering and Aerospace Engineering, Universidad Carlos III de Madrid, 28911 Madrid, Spain. 3 Swiss Institute for Experimental Cancer Research (ISREC), École Polytechnique Fédérale de Lausanne (EPFL), School of Life Sciences, 1015 Lausanne, Switzerland. † contributed equally to this work * correspondence to: [email protected]; [email protected] Abstract Genetic alterations in human BCL9 genes have repeatedly been found in congenital heart disease (CHD) with as-of-yet unclear causality. BCL9 proteins and their Pygopus (Pygo) co-factors can participate in canonical Wnt signaling via binding to β-catenin. Nonetheless, their contributions to vertebrate heart development remain uncharted. Here, combining zebrafish and mouse genetics, we document tissue- specific functions in canonical Wnt signaling for BCL9 and Pygo proteins during heart development. In a CRISPR-Cas9-based genotype-phenotype association screen, we uncovered that zebrafish mutants for bcl9 and pygo genes largely retain β-catenin activity, yet develop cardiac malformations. -

H3K27 Methylation Dynamics During CD4 T Cell Activation: Regulation of JAK/STAT and IL12RB2 Expression by JMJD3

H3K27 Methylation Dynamics during CD4 T Cell Activation: Regulation of JAK/STAT and IL12RB2 Expression by JMJD3 This information is current as Sarah A. LaMere, Ryan C. Thompson, Xiangzhi Meng, H. of September 24, 2021. Kiyomi Komori, Adam Mark and Daniel R. Salomon J Immunol published online 25 September 2017 http://www.jimmunol.org/content/early/2017/09/23/jimmun ol.1700475 Downloaded from Supplementary http://www.jimmunol.org/content/suppl/2017/09/23/jimmunol.170047 Material 5.DCSupplemental http://www.jimmunol.org/ Why The JI? Submit online. • Rapid Reviews! 30 days* from submission to initial decision • No Triage! Every submission reviewed by practicing scientists • Fast Publication! 4 weeks from acceptance to publication by guest on September 24, 2021 *average Subscription Information about subscribing to The Journal of Immunology is online at: http://jimmunol.org/subscription Permissions Submit copyright permission requests at: http://www.aai.org/About/Publications/JI/copyright.html Email Alerts Receive free email-alerts when new articles cite this article. Sign up at: http://jimmunol.org/alerts The Journal of Immunology is published twice each month by The American Association of Immunologists, Inc., 1451 Rockville Pike, Suite 650, Rockville, MD 20852 Copyright © 2017 by The American Association of Immunologists, Inc. All rights reserved. Print ISSN: 0022-1767 Online ISSN: 1550-6606. Published September 25, 2017, doi:10.4049/jimmunol.1700475 The Journal of Immunology H3K27 Methylation Dynamics during CD4 T Cell Activation: Regulation of JAK/STAT and IL12RB2 Expression by JMJD3 Sarah A. LaMere,1 Ryan C. Thompson, Xiangzhi Meng, H. Kiyomi Komori,2 Adam Mark,1 and Daniel R.