1. Determinants: a Row Operation By-Product

The determinant is best understood in terms of row operations, in my opinion.

Recommended publications

-

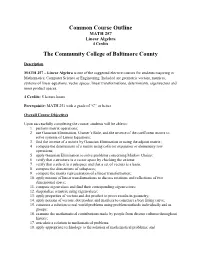

Common Course Outline MATH 257 Linear Algebra 4 Credits

The Community College of Baltimore County

Description: MATH 257 – Linear Algebra is one of the suggested elective courses for students majoring in Mathematics, Computer Science or Engineering. Included are geometric vectors, matrices, systems of linear equations, vector spaces, linear transformations, determinants, eigenvectors and inner product spaces.

4 Credits: 5 lecture hours

Prerequisite: MATH 251 with a grade of “C” or better

Overall Course Objectives

Upon successfully completing the course, students will be able to:

1. perform matrix operations;
2. use Gaussian Elimination, Cramer’s Rule, and the inverse of the coefficient matrix to solve systems of linear equations;
3. find the inverse of a matrix by Gaussian Elimination or using the adjoint matrix;
4. compute the determinant of a matrix using cofactor expansion or elementary row operations;
5. apply Gaussian Elimination to solve problems concerning Markov Chains;
6. verify that a structure is a vector space by checking the axioms;
7. verify that a subset is a subspace and that a set of vectors is a basis;
8. compute the dimensions of subspaces;
9. compute the matrix representation of a linear transformation;
10. apply notions of linear transformations to discuss rotations and reflections of two dimensional space;
11. compute eigenvalues and find their corresponding eigenvectors;
12. diagonalize a matrix using eigenvalues;
13. apply properties of vectors and dot product to prove results in geometry;
14. apply notions of vectors, dot product and matrices to construct a best fitting curve;
15. construct a solution to real world problems using problem methods individually and in groups;
16.

Algorithms in Linear Algebraic Groups (arXiv:2003.06292v1 [math.GR], 12 Mar 2020)

ALGORITHMS IN LINEAR ALGEBRAIC GROUPS

SUSHIL BHUNIA, AYAN MAHALANOBIS, PRALHAD SHINDE, AND ANUPAM SINGH

ABSTRACT. This paper presents some algorithms in linear algebraic groups. These algorithms solve the word problem and compute the spinor norm for orthogonal groups. This gives us an algorithmic definition of the spinor norm. We compute the double coset decomposition with respect to a Siegel maximal parabolic subgroup, which is important in computing infinite-dimensional representations for some algebraic groups.

1. INTRODUCTION

Spinor norm was first defined by Dieudonné and Kneser using Clifford algebras. Wall [21] defined the spinor norm using bilinear forms. These days, to compute the spinor norm, one uses the definition of Wall. In this paper, we develop a new definition of the spinor norm for split and twisted orthogonal groups. Our definition of the spinor norm is rich in the sense that it is algorithmic in nature. Now one can compute spinor norm using a Gaussian elimination algorithm that we develop in this paper. This paper can be seen as an extension of our earlier work in the book chapter [3], where we described Gaussian elimination algorithms for orthogonal and symplectic groups in the context of public key cryptography.

In computational group theory, one always looks for algorithms to solve the word problem. For a group $G$ defined by a set of generators $\langle X \rangle = G$, the problem is to write $g \in G$ as a word in $X$: we say that this is the word problem for $G$ (for details, see [18, Section 1.4]). Brooksbank [4] and Costi [10] developed algorithms similar to ours for classical groups over finite fields.

21. Orthonormal Bases

The canonical/standard basis

$$
e_1 = \begin{pmatrix} 1 \\ 0 \\ \vdots \\ 0 \end{pmatrix}, \quad
e_2 = \begin{pmatrix} 0 \\ 1 \\ \vdots \\ 0 \end{pmatrix}, \quad \ldots, \quad
e_n = \begin{pmatrix} 0 \\ 0 \\ \vdots \\ 1 \end{pmatrix}
$$

has many useful properties.

- Each of the standard basis vectors has unit length: $\|e_i\| = \sqrt{e_i \cdot e_i} = \sqrt{e_i^T e_i} = 1$.
- The standard basis vectors are orthogonal (in other words, at right angles or perpendicular): $e_i \cdot e_j = e_i^T e_j = 0$ when $i \neq j$.

This is summarized by

$$
e_i^T e_j = \delta_{ij} = \begin{cases} 1 & i = j \\ 0 & i \neq j, \end{cases}
$$

where $\delta_{ij}$ is the Kronecker delta. Notice that the Kronecker delta gives the entries of the identity matrix.

Given column vectors $v$ and $w$, we have seen that the dot product $v \cdot w$ is the same as the matrix multiplication $v^T w$. This is the inner product on $\mathbb{R}^n$. We can also form the outer product $v w^T$, which gives a square matrix.

The outer product on the standard basis vectors is interesting. Set

$$
\Pi_1 = e_1 e_1^T
= \begin{pmatrix} 1 \\ 0 \\ \vdots \\ 0 \end{pmatrix} \begin{pmatrix} 1 & 0 & \cdots & 0 \end{pmatrix}
= \begin{pmatrix} 1 & 0 & \cdots & 0 \\ 0 & 0 & \cdots & 0 \\ \vdots & & & \vdots \\ 0 & 0 & \cdots & 0 \end{pmatrix}
$$

$$
\vdots
$$

$$
\Pi_n = e_n e_n^T
= \begin{pmatrix} 0 \\ 0 \\ \vdots \\ 1 \end{pmatrix} \begin{pmatrix} 0 & 0 & \cdots & 1 \end{pmatrix}
= \begin{pmatrix} 0 & 0 & \cdots & 0 \\ 0 & 0 & \cdots & 0 \\ \vdots & & & \vdots \\ 0 & 0 & \cdots & 1 \end{pmatrix}
$$

In short, $\Pi_i$ is the diagonal square matrix with a 1 in the $i$th diagonal position and zeros everywhere else.
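The two facts in this excerpt (the inner products of standard basis vectors give the Kronecker delta, and each outer product of a basis vector with itself is a diagonal matrix with a single 1) can be checked with a minimal pure-Python sketch. The helper names `standard_basis`, `inner`, and `outer` are illustrative assumptions, not part of the original notes.

```python
def standard_basis(n, i):
    """Return e_i (1-indexed) in R^n as a plain list."""
    return [1 if k == i - 1 else 0 for k in range(n)]

def inner(v, w):
    """Inner product v^T w of two vectors of equal length."""
    return sum(a * b for a, b in zip(v, w))

def outer(v, w):
    """Outer product v w^T, returned as a list of rows."""
    return [[a * b for b in w] for a in v]

n = 3
basis = [standard_basis(n, i) for i in range(1, n + 1)]

# Matrix of all inner products e_i^T e_j: entry (i, j) is the Kronecker delta.
gram = [[inner(ei, ej) for ej in basis] for ei in basis]
identity = [[1 if i == j else 0 for j in range(n)] for i in range(n)]

# projections[i - 1] is Pi_i = e_i e_i^T.
projections = [outer(e, e) for e in basis]
```

Here `gram` coincides with `identity`, and `projections[1]` (that is, Π_2) has its only nonzero entry in the second diagonal position.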

Partitioned (Or Block) Matrices This Version: 29 Nov 2018

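Intermediate Econometrics / Forecasting Class Notes

Instructor: Anthony Tay

It is frequently convenient to partition matrices into smaller sub-matrices, e.g.

$$
\left[\begin{array}{ccccc}
2 & 3 & 2 & 1 & 3 \\
4 & 1 & 1 & 0 & 7 \\
3 & 1 & 1 & 0 & 0 \\
1 & 3 & 0 & 1 & 0 \\
2 & 0 & 0 & 0 & 1
\end{array}\right]
=
\left[\begin{array}{cc|ccc}
2 & 3 & 2 & 1 & 3 \\
4 & 1 & 1 & 0 & 7 \\ \hline
3 & 1 & 1 & 0 & 0 \\
1 & 3 & 0 & 1 & 0 \\
2 & 0 & 0 & 0 & 1
\end{array}\right]
=
\begin{bmatrix}
\underset{(2\times 2)}{A} & \underset{(2\times 3)}{B} \\
\underset{(3\times 2)}{C} & \underset{(3\times 3)}{I}
\end{bmatrix}
$$

The same matrix can be partitioned in several different ways. For instance, we can write the previous matrix as

$$
\left[\begin{array}{ccccc}
2 & 3 & 2 & 1 & 3 \\
4 & 1 & 1 & 0 & 7 \\
3 & 1 & 1 & 0 & 0 \\
1 & 3 & 0 & 1 & 0 \\
2 & 0 & 0 & 0 & 1
\end{array}\right]
=
\left[\begin{array}{c|cccc}
2 & 3 & 2 & 1 & 3 \\ \hline
4 & 1 & 1 & 0 & 7 \\
3 & 1 & 1 & 0 & 0 \\
1 & 3 & 0 & 1 & 0 \\
2 & 0 & 0 & 0 & 1
\end{array}\right]
=
\begin{bmatrix}
\underset{(1\times 1)}{a} & \underset{(1\times 4)}{b'} \\
\underset{(4\times 1)}{c} & \underset{(4\times 4)}{D}
\end{bmatrix}
$$

One reason partitioning is useful is that we can do matrix addition and multiplication with blocks, as though the blocks are elements, as long as the blocks are conformable for the operations. For instance:

$$
\begin{bmatrix}
\underset{(2\times 2)}{A} & \underset{(2\times 3)}{B} \\
\underset{(3\times 2)}{C} & \underset{(3\times 3)}{I}
\end{bmatrix}
+
\begin{bmatrix}
\underset{(2\times 2)}{D} & \underset{(2\times 3)}{E} \\
\underset{(3\times 2)}{C} & \underset{(3\times 3)}{F}
\end{bmatrix}
=
\begin{bmatrix}
\underset{(2\times 2)}{A + D} & \underset{(2\times 3)}{B + E} \\
\underset{(3\times 2)}{2C} & \underset{(3\times 3)}{I + F}
\end{bmatrix}
$$

$$
\underbrace{\begin{bmatrix}
\underset{(2\times 2)}{A} & \underset{(2\times 3)}{B} \\
\underset{(3\times 2)}{C} & \underset{(3\times 3)}{I}
\end{bmatrix}}_{(5\times 5)}
\underbrace{\begin{bmatrix}
\underset{(2\times 1)}{d} & \underset{(2\times 3)}{E} \\
\underset{(3\times 1)}{F} & \underset{(3\times 3)}{G}
\end{bmatrix}}_{(5\times 4)}
=
\underbrace{\begin{bmatrix}
\underset{(2\times 1)}{Ad + BF} & \underset{(2\times 3)}{AE + BG} \\
\underset{(3\times 1)}{Cd + F} & \underset{(3\times 3)}{CE + G}
\end{bmatrix}}_{(5\times 4)}
$$

Examples

(1) Let

$$
A = \begin{bmatrix} 1 & 2 & 1 \\ 4 & 2 & 3 \\ 3 & 0 & 1 \\ 0 & 1 & 3 \end{bmatrix}
= \left[\begin{array}{c|c|c} 1 & 2 & 1 \\ 4 & 2 & 3 \\ 3 & 0 & 1 \\ 0 & 1 & 3 \end{array}\right]
= \begin{bmatrix} a_1 & a_2 & a_3 \end{bmatrix}
\quad \text{and} \quad
c = \begin{bmatrix} c_1 \\ c_2 \\ c_3 \end{bmatrix},
$$

then

$$
Ac = \begin{bmatrix} a_1 & a_2 & a_3 \end{bmatrix} \begin{bmatrix} c_1 \\ c_2 \\ c_3 \end{bmatrix} = c_1 a_1 + c_2 a_2 + c_3 a_3 .
$$

The product $Ac$ produces a linear combination of the columns of $A$.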
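The column-partition identity $Ac = c_1 a_1 + c_2 a_2 + c_3 a_3$ can be verified numerically. The sketch below is a minimal pure-Python check; the 4×3 matrix entries and the coefficient vector are illustrative values chosen for this example, and the helper names are assumptions rather than anything defined in the class notes.

```python
def matvec(A, c):
    """Ordinary row-by-row matrix-vector product A c."""
    return [sum(a * x for a, x in zip(row, c)) for row in A]

def column(A, j):
    """Return column j of A (0-indexed) as a list."""
    return [row[j] for row in A]

def column_combination(A, c):
    """Compute c_1 a_1 + ... + c_n a_n, where a_j are the columns of A."""
    result = [0] * len(A)
    for j, cj in enumerate(c):
        result = [r + cj * a for r, a in zip(result, column(A, j))]
    return result

# Illustrative 4x3 matrix and coefficient vector (assumed values).
A = [[1, 2, 1],
     [4, 2, 3],
     [3, 0, 1],
     [0, 1, 3]]
c = [2, -1, 3]

by_rows = matvec(A, c)                 # [3, 15, 9, 8]
by_columns = column_combination(A, c)  # [3, 15, 9, 8]
```

Both routes give the same vector, which is the point of treating the columns of a partitioned matrix as single elements.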

Orthogonal Reduction: The Row Echelon Form

MATH 5330: Computational Methods of Linear Algebra

Lecture 9: Orthogonal Reduction

Xianyi Zeng, Department of Mathematical Sciences, UTEP

1 The Row Echelon Form

Our target is to solve the normal equation:

$$
A^t A x = A^t b, \tag{1.1}
$$

where $A \in \mathbb{R}^{m \times n}$ is arbitrary; we have shown previously that this is equivalent to the least squares problem:

$$
\min_{x \in \mathbb{R}^n} \|Ax - b\| . \tag{1.2}
$$

As $A^t A \in \mathbb{R}^{n \times n}$ is symmetric positive semi-definite, we can try to compute the Cholesky decomposition such that $A^t A = L L^t$ for some lower-triangular matrix $L \in \mathbb{R}^{n \times n}$. One problem with this approach is that we're not fully exploring our information, particularly in Cholesky decomposition we treat $A^t A$ as a single entity in ignorance of the information about $A$ itself.

In particular, the structure $A^t A$ motivates us to study a factorization $A = QE$, where $Q \in \mathbb{R}^{m \times m}$ is orthogonal and $E \in \mathbb{R}^{m \times n}$ is to be determined. Then we may transform the normal equation to:

$$
E^t E x = E^t Q^t b, \tag{1.3}
$$

where the identity $Q^t Q = I_m$ (the identity matrix in $\mathbb{R}^{m \times m}$) is used. This normal equation is equivalent to the least squares problem with $E$:

$$
\min_{x \in \mathbb{R}^n} \left\| Ex - Q^t b \right\| . \tag{1.4}
$$

Because orthogonal transformation preserves the $L^2$-norm, (1.2) and (1.4) are equivalent to each other. Indeed, for any $x \in \mathbb{R}^n$:

$$
\begin{aligned}
\|Ax - b\|^2 &= (b - Ax)^t (b - Ax) = (b - QEx)^t (b - QEx) = [Q(Q^t b - Ex)]^t [Q(Q^t b - Ex)] \\
&= (Q^t b - Ex)^t Q^t Q (Q^t b - Ex) = (Q^t b - Ex)^t (Q^t b - Ex) = \left\| Ex - Q^t b \right\|^2 .
\end{aligned}
$$

Hence the target is to find an $E$ such that (1.3) is easier to solve.
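The norm identity $\|Ax - b\| = \|Ex - Q^t b\|$ can be checked numerically for a small case. The sketch below is an illustration under stated assumptions: $Q$ is an arbitrary 2×2 rotation (not the output of any reduction algorithm from the lecture), $E$ is simply computed as $Q^t A$ so that $A = QE$ holds, and all numeric values and helper names are made up for the example.

```python
import math

def transpose(X):
    """Transpose of a matrix given as a list of rows."""
    return [list(col) for col in zip(*X)]

def matmul(X, Y):
    """Matrix product X Y for lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]

def matvec(X, v):
    """Matrix-vector product X v."""
    return [sum(a * b for a, b in zip(row, v)) for row in X]

def norm(v):
    """Euclidean (L2) norm of a vector."""
    return math.sqrt(sum(a * a for a in v))

theta = 0.7  # arbitrary rotation angle (assumed value)
Q = [[math.cos(theta), -math.sin(theta)],
     [math.sin(theta),  math.cos(theta)]]   # orthogonal: Q^t Q = I

A = [[1.0, 2.0], [3.0, 4.0]]
b = [1.0, -1.0]
x = [0.5, -2.0]

E = matmul(transpose(Q), A)        # chosen so that A = Q E
Qtb = matvec(transpose(Q), b)

residual_A = norm([p - q for p, q in zip(matvec(A, x), b)])
residual_E = norm([p - q for p, q in zip(matvec(E, x), Qtb)])
```

The two residual norms agree up to floating-point rounding, as the derivation predicts for any orthogonal $Q$.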

Multivector Differentiation and Linear Algebra, 17th Santaló Summer School 2016

Multivector differentiation and Linear Algebra

17th Santaló Summer School 2016, Santander

Joan Lasenby, Signal Processing Group, Engineering Department, Cambridge, UK and Trinity College Cambridge

[email protected], www-sigproc.eng.cam.ac.uk/ s jl

23 August 2016

Overview

- The Multivector Derivative.
- Examples of differentiation wrt multivectors.
- Linear Algebra: matrices and tensors as linear functions mapping between elements of the algebra.
- Functional Differentiation: very briefly...
- Summary

The Multivector Derivative

Recall our definition of the directional derivative in the $a$ direction:

$$
a \cdot \nabla F(x) = \lim_{\epsilon \to 0} \frac{F(x + \epsilon a) - F(x)}{\epsilon}
$$

We now want to generalise this idea to enable us to find the derivative of $F(X)$ in the $A$ ‘direction’, where $X$ is a general mixed grade multivector (so $F(X)$ is a general multivector valued function of $X$).

Let us use $\ast$ to denote taking the scalar part, i.e. $P \ast Q \equiv \langle PQ \rangle$. Then, provided $A$ has same grades as $X$, it makes sense to define:

$$
A \ast \partial_X F(X) = \lim_{t \to 0} \frac{F(X + tA) - F(X)}{t}
$$

Chapter Four Determinants

In the first chapter of this book we considered linear systems and we picked out the special case of systems with the same number of equations as unknowns, those of the form $T\vec{x} = \vec{b}$ where $T$ is a square matrix. We noted a distinction between two classes of $T$'s. While such systems may have a unique solution or no solutions or infinitely many solutions, if a particular $T$ is associated with a unique solution in any system, such as the homogeneous system $\vec{b} = \vec{0}$, then $T$ is associated with a unique solution for every $\vec{b}$. We call such a matrix of coefficients ‘nonsingular’. The other kind of $T$, where every linear system for which it is the matrix of coefficients has either no solution or infinitely many solutions, we call ‘singular’.

Through the second and third chapters the value of this distinction has been a theme. For instance, we now know that nonsingularity of an $n \times n$ matrix $T$ is equivalent to each of these:

- a system $T\vec{x} = \vec{b}$ has a solution, and that solution is unique;
- Gauss-Jordan reduction of $T$ yields an identity matrix;
- the rows of $T$ form a linearly independent set;
- the columns of $T$ form a basis for $\mathbb{R}^n$;
- any map that $T$ represents is an isomorphism;
- an inverse matrix $T^{-1}$ exists.

So when we look at a particular square matrix, the question of whether it is nonsingular is one of the first things that we ask. This chapter develops a formula to determine this. (Since we will restrict the discussion to square matrices, in this chapter we will usually simply say ‘matrix’ in place of ‘square matrix’.) More precisely, we will develop infinitely many formulas, one for $1 \times 1$ matrices, one for $2 \times 2$ matrices, etc.
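One of the equivalent conditions listed here, that Gauss-Jordan reduction of $T$ yields an identity matrix, translates directly into a test for nonsingularity. The following is a minimal sketch using exact rational arithmetic; the function name `is_nonsingular` is a hypothetical choice for this example and is not taken from the book.

```python
from fractions import Fraction

def is_nonsingular(T):
    """Return True if Gauss-Jordan reduction of the square matrix T
    reaches the identity matrix, False if some column has no pivot."""
    n = len(T)
    M = [[Fraction(x) for x in row] for row in T]  # exact copy of T
    for col in range(n):
        # Look for a nonzero pivot in this column, at or below the diagonal.
        pivot = next((r for r in range(col, n) if M[r][col] != 0), None)
        if pivot is None:
            return False  # no pivot: reduction cannot reach the identity
        M[col], M[pivot] = M[pivot], M[col]        # row swap
        p = M[col][col]
        M[col] = [x / p for x in M[col]]           # scale pivot row to 1
        for r in range(n):
            if r != col and M[r][col] != 0:
                f = M[r][col]
                # Clear the rest of the column with a row combination.
                M[r] = [a - f * b for a, b in zip(M[r], M[col])]
    return True
```

For example, `is_nonsingular([[1, 2], [3, 4]])` is true, while `is_nonsingular([[1, 2], [2, 4]])` is false because the second row is a multiple of the first.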

Handout 9 More Matrix Properties; the Transpose

Square matrix properties

These properties only apply to a square matrix, i.e. $n \times n$.

- The leading diagonal is the diagonal line consisting of the entries $a_{11}, a_{22}, a_{33}, \ldots, a_{nn}$.
- A diagonal matrix has zeros everywhere except the leading diagonal.
- The identity matrix $I$ has zeros off the leading diagonal, and 1 for each entry on the diagonal. It is a special case of a diagonal matrix, and $AI = IA = A$ for any $n \times n$ matrix $A$.
- An upper triangular matrix has all its non-zero entries on or above the leading diagonal.
- A lower triangular matrix has all its non-zero entries on or below the leading diagonal.
- A symmetric matrix has the same entries below and above the diagonal: $a_{ij} = a_{ji}$ for any values of $i$ and $j$ between 1 and $n$.
- An antisymmetric or skew-symmetric matrix has the opposite entries below and above the diagonal: $a_{ij} = -a_{ji}$ for any values of $i$ and $j$ between 1 and $n$. This automatically means the diagonal entries must all be zero.

Transpose

To transpose a matrix, we reflect it across the line given by the leading diagonal $a_{11}, a_{22}$ etc. In general the result is a different shape to the original matrix:

$$
A = \begin{pmatrix} a_{11} & a_{12} & a_{13} \\ a_{21} & a_{22} & a_{23} \end{pmatrix}, \qquad
A^\top = \begin{pmatrix} a_{11} & a_{21} \\ a_{12} & a_{22} \\ a_{13} & a_{23} \end{pmatrix}, \qquad
[A^\top]_{ij} = A_{ji} .
$$

- If $A$ is $m \times n$ then $A^\top$ is $n \times m$.
- The transpose of a symmetric matrix is itself: $A^\top = A$ (recalling that only square matrices can be symmetric).
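The transpose rule $[A^\top]_{ij} = A_{ji}$ and the symmetric and skew-symmetric definitions can be written as short checks. This is a minimal sketch on matrices stored as lists of row lists; the function names are illustrative assumptions.

```python
def transpose(A):
    """Reflect A across its leading diagonal: entry (i, j) becomes (j, i)."""
    return [list(col) for col in zip(*A)]

def is_symmetric(A):
    """True when A equals its transpose (only possible for square A)."""
    return A == transpose(A)

def is_skew_symmetric(A):
    """True when the transpose of A equals -A."""
    return transpose(A) == [[-x for x in row] for row in A]

A = [[1, 2, 3],
     [4, 5, 6]]          # 2 x 3
At = transpose(A)        # 3 x 2: [[1, 4], [2, 5], [3, 6]]
```

A non-square matrix such as `A` fails both tests automatically, because its transpose has a different shape.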

Math 217: Multilinearity of Determinants Professor Karen Smith (C)2015 UM Math Dept Licensed Under a Creative Commons By-NC-SA 4.0 International License

A. Let $V \xrightarrow{T} V$ be a linear transformation where $V$ has dimension $n$.

1. What is meant by the determinant of $T$? Why is this well-defined?

Solution note: The determinant of $T$ is the determinant of the $\mathcal{B}$-matrix of $T$, for any basis $\mathcal{B}$ of $V$. Since all $\mathcal{B}$-matrices of $T$ are similar, and similar matrices have the same determinant, this is well-defined: it doesn't depend on which basis we pick.

2. Define the rank of $T$.

Solution note: The rank of $T$ is the dimension of the image.

3. Explain why $T$ is an isomorphism if and only if $\det T$ is not zero.

Solution note: $T$ is an isomorphism if and only if $[T]_{\mathcal{B}}$ is invertible (for any choice of basis $\mathcal{B}$), which happens if and only if $\det T \neq 0$.

4. Now let $V = \mathbb{R}^3$ and let $T$ be rotation around the axis $L$ (a line through the origin) by an angle $\theta$. Find a basis for $\mathbb{R}^3$ in which the matrix of $\rho$ is

$$
\begin{bmatrix} 1 & 0 & 0 \\ 0 & \cos\theta & -\sin\theta \\ 0 & \sin\theta & \cos\theta \end{bmatrix} .
$$

Use this to compute the determinant of $T$. Is $T$ orthogonal?

Solution note: Let $\vec{v}$ be any vector spanning $L$ and let $u_1, u_2$ be an orthonormal basis for $V = L^{\perp}$. Rotation fixes $\vec{v}$, which means the $\mathcal{B}$-matrix in the basis $(v, u_1, u_2)$ has first column $\begin{bmatrix} 1 \\ 0 \\ 0 \end{bmatrix}$.
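The determinant asked for in question 4 follows from the rotation matrix by a cofactor expansion along the first row. The computation below is a sketch of that standard step, offered as an illustration; it is not the worksheet's own solution note, which is cut off in this excerpt.

$$
\det \begin{bmatrix} 1 & 0 & 0 \\ 0 & \cos\theta & -\sin\theta \\ 0 & \sin\theta & \cos\theta \end{bmatrix}
= 1 \cdot \det \begin{bmatrix} \cos\theta & -\sin\theta \\ \sin\theta & \cos\theta \end{bmatrix}
= \cos^2\theta + \sin^2\theta = 1 .
$$

Since the determinant does not depend on the choice of basis, this gives $\det T = 1$ for every angle $\theta$.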

Determinants Math 122 Calculus III D Joyce, Fall 2012

What they are. A determinant is a value associated to a square array of numbers, that square array being called a square matrix. For example, here are determinants of a general $2 \times 2$ matrix and a general $3 \times 3$ matrix.

$$
\begin{vmatrix} a & b \\ c & d \end{vmatrix} = ad - bc .
$$

$$
\begin{vmatrix} a & b & c \\ d & e & f \\ g & h & i \end{vmatrix} = aei + bfg + cdh - ceg - afh - bdi .
$$

The determinant of a matrix $A$ is usually denoted $|A|$ or $\det(A)$.

You can think of the rows of the determinant as being vectors. For the $3 \times 3$ matrix above, the vectors are $u = (a, b, c)$, $v = (d, e, f)$, and $w = (g, h, i)$. Then the determinant is a value associated to $n$ vectors in $\mathbb{R}^n$.

There's a general definition for $n \times n$ determinants. It's a particular signed sum of products of $n$ entries in the matrix where each product is of one entry in each row and column. The two ways you can choose one entry in each row and column of the $2 \times 2$ matrix give you the two products $ad$ and $bc$. There are six ways of choosing one entry in each row and column in a $3 \times 3$ matrix, and generally, there are $n!$ ways in an $n \times n$ matrix. Thus, the determinant of a $4 \times 4$ matrix is the signed sum of 24, which is $4!$, terms. In this general definition, half the terms are taken positively and half negatively. In class, we briefly saw how the signs are determined by permutations.
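The general definition described here, a signed sum over all ways of choosing one entry from each row and column, can be sketched directly in code. The version below takes the sign of each term from the parity of the permutation's inversion count, which is one standard way to define it; the function names are illustrative, and the approach is practical only for small matrices because it visits all $n!$ terms.

```python
from itertools import permutations

def sign(perm):
    """+1 for an even permutation, -1 for an odd one (by counting inversions)."""
    inversions = sum(1 for i in range(len(perm))
                     for j in range(i + 1, len(perm))
                     if perm[i] > perm[j])
    return -1 if inversions % 2 else 1

def det(M):
    """Determinant as a signed sum of n! products, one entry per row and column."""
    n = len(M)
    total = 0
    for perm in permutations(range(n)):
        term = sign(perm)
        for row, col in enumerate(perm):
            term *= M[row][col]   # entry chosen from this row and column perm[row]
        total += term
    return total
```

For a 2×2 matrix this reproduces $ad - bc$; for instance `det([[1, 2], [3, 4]])` evaluates to `-2`.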

Week 8-9. Inner Product Spaces. (Revised Version) Section 3.1 Dot Product As an Inner Product

Math 2051 W2008 Margo Kondratieva

Consider a linear (vector) space $V$. (Let us restrict ourselves to only real spaces, that is, we will not deal with complex numbers and vectors.)

Definition 1. An inner product on $V$ is a function which assigns a real number, denoted by $\langle \vec{u}, \vec{v} \rangle$, to every pair of vectors $\vec{u}, \vec{v} \in V$ such that

(1) $\langle \vec{u}, \vec{v} \rangle = \langle \vec{v}, \vec{u} \rangle$ for all $\vec{u}, \vec{v} \in V$;

(2) $\langle \vec{u} + \vec{v}, \vec{w} \rangle = \langle \vec{u}, \vec{w} \rangle + \langle \vec{v}, \vec{w} \rangle$ for all $\vec{u}, \vec{v}, \vec{w} \in V$;

(3) $\langle k\vec{u}, \vec{v} \rangle = k \langle \vec{u}, \vec{v} \rangle$ for any $k \in \mathbb{R}$ and $\vec{u}, \vec{v} \in V$;

(4) $\langle \vec{v}, \vec{v} \rangle \geq 0$ for all $\vec{v} \in V$, and $\langle \vec{v}, \vec{v} \rangle = 0$ only for $\vec{v} = \vec{0}$.

Definition 2. An inner product space is a vector space equipped with an inner product.

It is straightforward to check that the dot product introduced by $\vec{u} \cdot \vec{v} = \sum_{j=1}^{n} u_j v_j$ is an inner product. You are advised to verify all the properties listed in the definition, as an exercise. The dot product is also called the Euclidean inner product.

Definition 3. Euclidean vector space is $\mathbb{R}^n$ equipped with the Euclidean inner product $\langle \vec{u}, \vec{v} \rangle = \vec{u} \cdot \vec{v}$.

Definition 4. A square matrix $A$ is called positive definite if $\vec{v}^T A \vec{v} > 0$ for any vector $\vec{v} \neq \vec{0}$.

Problem 1. Show that $\begin{bmatrix} 2 & 0 \\ 0 & 3 \end{bmatrix}$ is positive definite.

Solution: Take $\vec{v} = (x, y)^T$. Then $\vec{v}^T A \vec{v} = 2x^2 + 3y^2 > 0$ for $(x, y) \neq (0, 0)$.
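The quadratic form in Problem 1 can be evaluated numerically to see the formula $2x^2 + 3y^2$ emerge. The sketch below is a spot check on a few sample vectors chosen for illustration; it does not replace the algebraic argument, since a finite sample cannot prove positive definiteness. The helper names are assumptions.

```python
def dot(u, v):
    """Euclidean inner product: sum of u_j * v_j."""
    return sum(a * b for a, b in zip(u, v))

def quadratic_form(A, v):
    """Evaluate v^T A v for a square matrix A given as a list of rows."""
    Av = [dot(row, v) for row in A]
    return dot(v, Av)

A = [[2, 0],
     [0, 3]]

# Sample nonzero vectors (assumed values, for illustration only).
samples = [(1, 0), (0, 1), (1, -1), (-2, 5)]
values = [quadratic_form(A, v) for v in samples]   # [2, 3, 5, 83]
```

Each value matches $2x^2 + 3y^2$ for the corresponding $(x, y)$ and is strictly positive, in line with the solution.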

Matrices and Tensors

APPENDIX: MATRICES AND TENSORS

A.1. INTRODUCTION AND RATIONALE

The purpose of this appendix is to present the notation and most of the mathematical techniques that are used in the body of the text. The audience is assumed to have been through several years of college-level mathematics, which included the differential and integral calculus, differential equations, functions of several variables, partial derivatives, and an introduction to linear algebra. Matrices are reviewed briefly, and determinants, vectors, and tensors of order two are described. The application of this linear algebra to material that appears in undergraduate engineering courses on mechanics is illustrated by discussions of concepts like the area and mass moments of inertia, Mohr’s circles, and the vector cross and triple scalar products. The notation, as far as possible, will be a matrix notation that is easily entered into existing symbolic computational programs like Maple, Mathematica, Matlab, and Mathcad. The desire to represent the components of three-dimensional fourth-order tensors that appear in anisotropic elasticity as the components of six-dimensional second-order tensors and thus represent these components in matrices of tensor components in six dimensions leads to the nontraditional part of this appendix. This is also one of the nontraditional aspects in the text of the book, but a minor one. This is described in §A.11, along with the rationale for this approach.

A.2. DEFINITION OF SQUARE, COLUMN, AND ROW MATRICES

An r-by-c matrix, M, is a rectangular array of numbers consisting of r rows and c columns.