CS 2401 - Introduction to Complexity Theory Lecture #5: Fall, 2015

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Complexity Theory Lecture 9 Co-NP Co-NP-Complete

Complexity Theory 1 Complexity Theory 2 co-NP Complexity Theory Lecture 9 As co-NP is the collection of complements of languages in NP, and P is closed under complementation, co-NP can also be characterised as the collection of languages of the form: ′ L = x y y <p( x ) R (x, y) { |∀ | | | | → } Anuj Dawar University of Cambridge Computer Laboratory NP – the collection of languages with succinct certificates of Easter Term 2010 membership. co-NP – the collection of languages with succinct certificates of http://www.cl.cam.ac.uk/teaching/0910/Complexity/ disqualification. Anuj Dawar May 14, 2010 Anuj Dawar May 14, 2010 Complexity Theory 3 Complexity Theory 4 NP co-NP co-NP-complete P VAL – the collection of Boolean expressions that are valid is co-NP-complete. Any language L that is the complement of an NP-complete language is co-NP-complete. Any of the situations is consistent with our present state of ¯ knowledge: Any reduction of a language L1 to L2 is also a reduction of L1–the complement of L1–to L¯2–the complement of L2. P = NP = co-NP • There is an easy reduction from the complement of SAT to VAL, P = NP co-NP = NP = co-NP • ∩ namely the map that takes an expression to its negation. P = NP co-NP = NP = co-NP • ∩ VAL P P = NP = co-NP ∈ ⇒ P = NP co-NP = NP = co-NP • ∩ VAL NP NP = co-NP ∈ ⇒ Anuj Dawar May 14, 2010 Anuj Dawar May 14, 2010 Complexity Theory 5 Complexity Theory 6 Prime Numbers Primality Consider the decision problem PRIME: Another way of putting this is that Composite is in NP. -

If Np Languages Are Hard on the Worst-Case, Then It Is Easy to Find Their Hard Instances

IF NP LANGUAGES ARE HARD ON THE WORST-CASE, THEN IT IS EASY TO FIND THEIR HARD INSTANCES Dan Gutfreund, Ronen Shaltiel, and Amnon Ta-Shma Abstract. We prove that if NP 6⊆ BPP, i.e., if SAT is worst-case hard, then for every probabilistic polynomial-time algorithm trying to decide SAT, there exists some polynomially samplable distribution that is hard for it. That is, the algorithm often errs on inputs from this distribution. This is the ¯rst worst-case to average-case reduction for NP of any kind. We stress however, that this does not mean that there exists one ¯xed samplable distribution that is hard for all probabilistic polynomial-time algorithms, which is a pre-requisite assumption needed for one-way func- tions and cryptography (even if not a su±cient assumption). Neverthe- less, we do show that there is a ¯xed distribution on instances of NP- complete languages, that is samplable in quasi-polynomial time and is hard for all probabilistic polynomial-time algorithms (unless NP is easy in the worst case). Our results are based on the following lemma that may be of independent interest: Given the description of an e±cient (probabilistic) algorithm that fails to solve SAT in the worst case, we can e±ciently generate at most three Boolean formulae (of increasing lengths) such that the algorithm errs on at least one of them. Keywords. Average-case complexity, Worst-case to average-case re- ductions, Foundations of cryptography, Pseudo classes Subject classi¯cation. 68Q10 (Modes of computation (nondetermin- istic, parallel, interactive, probabilistic, etc.) 68Q15 Complexity classes (hierarchies, relations among complexity classes, etc.) 68Q17 Compu- tational di±culty of problems (lower bounds, completeness, di±culty of approximation, etc.) 94A60 Cryptography 2 Gutfreund, Shaltiel & Ta-Shma 1. -

On the Randomness Complexity of Interactive Proofs and Statistical Zero-Knowledge Proofs*

On the Randomness Complexity of Interactive Proofs and Statistical Zero-Knowledge Proofs* Benny Applebaum† Eyal Golombek* Abstract We study the randomness complexity of interactive proofs and zero-knowledge proofs. In particular, we ask whether it is possible to reduce the randomness complexity, R, of the verifier to be comparable with the number of bits, CV , that the verifier sends during the interaction. We show that such randomness sparsification is possible in several settings. Specifically, unconditional sparsification can be obtained in the non-uniform setting (where the verifier is modelled as a circuit), and in the uniform setting where the parties have access to a (reusable) common-random-string (CRS). We further show that constant-round uniform protocols can be sparsified without a CRS under a plausible worst-case complexity-theoretic assumption that was used previously in the context of derandomization. All the above sparsification results preserve statistical-zero knowledge provided that this property holds against a cheating verifier. We further show that randomness sparsification can be applied to honest-verifier statistical zero-knowledge (HVSZK) proofs at the expense of increasing the communica- tion from the prover by R−F bits, or, in the case of honest-verifier perfect zero-knowledge (HVPZK) by slowing down the simulation by a factor of 2R−F . Here F is a new measure of accessible bit complexity of an HVZK proof system that ranges from 0 to R, where a maximal grade of R is achieved when zero- knowledge holds against a “semi-malicious” verifier that maliciously selects its random tape and then plays honestly. -

EXPSPACE-Hardness of Behavioural Equivalences of Succinct One

EXPSPACE-hardness of behavioural equivalences of succinct one-counter nets Petr Janˇcar1 Petr Osiˇcka1 Zdenˇek Sawa2 1Dept of Comp. Sci., Faculty of Science, Palack´yUniv. Olomouc, Czech Rep. [email protected], [email protected] 2Dept of Comp. Sci., FEI, Techn. Univ. Ostrava, Czech Rep. [email protected] Abstract We note that the remarkable EXPSPACE-hardness result in [G¨oller, Haase, Ouaknine, Worrell, ICALP 2010] ([GHOW10] for short) allows us to answer an open complexity ques- tion for simulation preorder of succinct one counter nets (i.e., one counter automata with no zero tests where counter increments and decrements are integers written in binary). This problem, as well as bisimulation equivalence, turn out to be EXPSPACE-complete. The technique of [GHOW10] was referred to by Hunter [RP 2015] for deriving EXPSPACE-hardness of reachability games on succinct one-counter nets. We first give a direct self-contained EXPSPACE-hardness proof for such reachability games (by adjust- ing a known PSPACE-hardness proof for emptiness of alternating finite automata with one-letter alphabet); then we reduce reachability games to (bi)simulation games by using a standard “defender-choice” technique. 1 Introduction arXiv:1801.01073v1 [cs.LO] 3 Jan 2018 We concentrate on our contribution, without giving a broader overview of the area here. A remarkable result by G¨oller, Haase, Ouaknine, Worrell [2] shows that model checking a fixed CTL formula on succinct one-counter automata (where counter increments and decre- ments are integers written in binary) is EXPSPACE-hard. Their proof is interesting and nontrivial, and uses two involved results from complexity theory. -

Dspace 6.X Documentation

DSpace 6.x Documentation DSpace 6.x Documentation Author: The DSpace Developer Team Date: 27 June 2018 URL: https://wiki.duraspace.org/display/DSDOC6x Page 1 of 924 DSpace 6.x Documentation Table of Contents 1 Introduction ___________________________________________________________________________ 7 1.1 Release Notes ____________________________________________________________________ 8 1.1.1 6.3 Release Notes ___________________________________________________________ 8 1.1.2 6.2 Release Notes __________________________________________________________ 11 1.1.3 6.1 Release Notes _________________________________________________________ 12 1.1.4 6.0 Release Notes __________________________________________________________ 14 1.2 Functional Overview _______________________________________________________________ 22 1.2.1 Online access to your digital assets ____________________________________________ 23 1.2.2 Metadata Management ______________________________________________________ 25 1.2.3 Licensing _________________________________________________________________ 27 1.2.4 Persistent URLs and Identifiers _______________________________________________ 28 1.2.5 Getting content into DSpace __________________________________________________ 30 1.2.6 Getting content out of DSpace ________________________________________________ 33 1.2.7 User Management __________________________________________________________ 35 1.2.8 Access Control ____________________________________________________________ 36 1.2.9 Usage Metrics _____________________________________________________________ -

The Complexity Zoo

The Complexity Zoo Scott Aaronson www.ScottAaronson.com LATEX Translation by Chris Bourke [email protected] 417 classes and counting 1 Contents 1 About This Document 3 2 Introductory Essay 4 2.1 Recommended Further Reading ......................... 4 2.2 Other Theory Compendia ............................ 5 2.3 Errors? ....................................... 5 3 Pronunciation Guide 6 4 Complexity Classes 10 5 Special Zoo Exhibit: Classes of Quantum States and Probability Distribu- tions 110 6 Acknowledgements 116 7 Bibliography 117 2 1 About This Document What is this? Well its a PDF version of the website www.ComplexityZoo.com typeset in LATEX using the complexity package. Well, what’s that? The original Complexity Zoo is a website created by Scott Aaronson which contains a (more or less) comprehensive list of Complexity Classes studied in the area of theoretical computer science known as Computa- tional Complexity. I took on the (mostly painless, thank god for regular expressions) task of translating the Zoo’s HTML code to LATEX for two reasons. First, as a regular Zoo patron, I thought, “what better way to honor such an endeavor than to spruce up the cages a bit and typeset them all in beautiful LATEX.” Second, I thought it would be a perfect project to develop complexity, a LATEX pack- age I’ve created that defines commands to typeset (almost) all of the complexity classes you’ll find here (along with some handy options that allow you to conveniently change the fonts with a single option parameters). To get the package, visit my own home page at http://www.cse.unl.edu/~cbourke/. -

The Polynomial Hierarchy

ij 'I '""T', :J[_ ';(" THE POLYNOMIAL HIERARCHY Although the complexity classes we shall study now are in one sense byproducts of our definition of NP, they have a remarkable life of their own. 17.1 OPTIMIZATION PROBLEMS Optimization problems have not been classified in a satisfactory way within the theory of P and NP; it is these problems that motivate the immediate extensions of this theory beyond NP. Let us take the traveling salesman problem as our working example. In the problem TSP we are given the distance matrix of a set of cities; we want to find the shortest tour of the cities. We have studied the complexity of the TSP within the framework of P and NP only indirectly: We defined the decision version TSP (D), and proved it NP-complete (corollary to Theorem 9.7). For the purpose of understanding better the complexity of the traveling salesman problem, we now introduce two more variants. EXACT TSP: Given a distance matrix and an integer B, is the length of the shortest tour equal to B? Also, TSP COST: Given a distance matrix, compute the length of the shortest tour. The four variants can be ordered in "increasing complexity" as follows: TSP (D); EXACTTSP; TSP COST; TSP. Each problem in this progression can be reduced to the next. For the last three problems this is trivial; for the first two one has to notice that the reduction in 411 j ;1 17.1 Optimization Problems 413 I 412 Chapter 17: THE POLYNOMIALHIERARCHY the corollary to Theorem 9.7 proving that TSP (D) is NP-complete can be used with DP. -

Interactive Proofs for Quantum Computations

Innovations in Computer Science 2010 Interactive Proofs For Quantum Computations Dorit Aharonov Michael Ben-Or Elad Eban School of Computer Science, The Hebrew University of Jerusalem, Israel [email protected] [email protected] [email protected] Abstract: The widely held belief that BQP strictly contains BPP raises fundamental questions: Upcoming generations of quantum computers might already be too large to be simulated classically. Is it possible to experimentally test that these systems perform as they should, if we cannot efficiently compute predictions for their behavior? Vazirani has asked [21]: If computing predictions for Quantum Mechanics requires exponential resources, is Quantum Mechanics a falsifiable theory? In cryptographic settings, an untrusted future company wants to sell a quantum computer or perform a delegated quantum computation. Can the customer be convinced of correctness without the ability to compare results to predictions? To provide answers to these questions, we define Quantum Prover Interactive Proofs (QPIP). Whereas in standard Interactive Proofs [13] the prover is computationally unbounded, here our prover is in BQP, representing a quantum computer. The verifier models our current computational capabilities: it is a BPP machine, with access to few qubits. Our main theorem can be roughly stated as: ”Any language in BQP has a QPIP, and moreover, a fault tolerant one” (providing a partial answer to a challenge posted in [1]). We provide two proofs. The simpler one uses a new (possibly of independent interest) quantum authentication scheme (QAS) based on random Clifford elements. This QPIP however, is not fault tolerant. Our second protocol uses polynomial codes QAS due to Ben-Or, Cr´epeau, Gottesman, Hassidim, and Smith [8], combined with quantum fault tolerance and secure multiparty quantum computation techniques. -

Ex. 8 Complexity 1. by Savich Theorem NL ⊆ DSPACE (Log2n)

Ex. 8 Complexity 1. By Savich theorem NL ⊆ DSP ACE(log2n) ⊆ DSP ASE(n). we saw in one of the previous ex. that DSP ACE(n2f(n)) 6= DSP ACE(f(n)) we also saw NL ½ P SP ASE so we conclude NL ½ DSP ACE(n) ½ DSP ACE(n3) ½ P SP ACE but DSP ACE(n) 6= DSP ACE(n3) as needed. 2. (a) We emulate the running of the s ¡ t ¡ conn algorithm in parallel for two paths (that is, we maintain two pointers, both starts at s and guess separately the next step for each of them). We raise a flag as soon as the two pointers di®er. We stop updating each of the two pointers as soon as it gets to t. If both got to t and the flag is raised, we return true. (b) As A 2 NL by Immerman-Szelepsc¶enyi Theorem (NL = vo ¡ NL) we know that A 2 NL. As C = A \ s ¡ t ¡ conn and s ¡ t ¡ conn 2 NL we get that A 2 NL. 3. If L 2 NICE, then taking \quit" as reject we get an NP machine (when x2 = L we always reject) for L, and by taking \quit" as accept we get coNP machine for L (if x 2 L we always reject), and so NICE ⊆ NP \ coNP . For the other direction, if L is in NP \coNP , then there is one NP machine M1(x; y) solving L, and another NP machine M2(x; z) solving coL. We can therefore de¯ne a new machine that guesses the guess y for M1, and the guess z for M2. -

Glossary of Complexity Classes

App endix A Glossary of Complexity Classes Summary This glossary includes selfcontained denitions of most complexity classes mentioned in the b o ok Needless to say the glossary oers a very minimal discussion of these classes and the reader is re ferred to the main text for further discussion The items are organized by topics rather than by alphab etic order Sp ecically the glossary is partitioned into two parts dealing separately with complexity classes that are dened in terms of algorithms and their resources ie time and space complexity of Turing machines and complexity classes de ned in terms of nonuniform circuits and referring to their size and depth The algorithmic classes include timecomplexity based classes such as P NP coNP BPP RP coRP PH E EXP and NEXP and the space complexity classes L NL RL and P S P AC E The non k uniform classes include the circuit classes P p oly as well as NC and k AC Denitions and basic results regarding many other complexity classes are available at the constantly evolving Complexity Zoo A Preliminaries Complexity classes are sets of computational problems where each class contains problems that can b e solved with sp ecic computational resources To dene a complexity class one sp ecies a mo del of computation a complexity measure like time or space which is always measured as a function of the input length and a b ound on the complexity of problems in the class We follow the tradition of fo cusing on decision problems but refer to these problems using the terminology of promise problems -

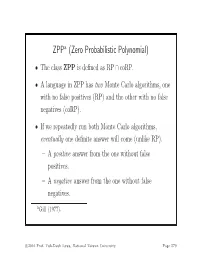

ZPP (Zero Probabilistic Polynomial)

ZPPa (Zero Probabilistic Polynomial) • The class ZPP is defined as RP ∩ coRP. • A language in ZPP has two Monte Carlo algorithms, one with no false positives (RP) and the other with no false negatives (coRP). • If we repeatedly run both Monte Carlo algorithms, eventually one definite answer will come (unlike RP). – A positive answer from the one without false positives. – A negative answer from the one without false negatives. aGill (1977). c 2016 Prof. Yuh-Dauh Lyuu, National Taiwan University Page 579 The ZPP Algorithm (Las Vegas) 1: {Suppose L ∈ ZPP.} 2: {N1 has no false positives, and N2 has no false negatives.} 3: while true do 4: if N1(x)=“yes”then 5: return “yes”; 6: end if 7: if N2(x) = “no” then 8: return “no”; 9: end if 10: end while c 2016 Prof. Yuh-Dauh Lyuu, National Taiwan University Page 580 ZPP (concluded) • The expected running time for the correct answer to emerge is polynomial. – The probability that a run of the 2 algorithms does not generate a definite answer is 0.5 (why?). – Let p(n) be the running time of each run of the while-loop. – The expected running time for a definite answer is ∞ 0.5iip(n)=2p(n). i=1 • Essentially, ZPP is the class of problems that can be solved, without errors, in expected polynomial time. c 2016 Prof. Yuh-Dauh Lyuu, National Taiwan University Page 581 Large Deviations • Suppose you have a biased coin. • One side has probability 0.5+ to appear and the other 0.5 − ,forsome0<<0.5. -

Solution of Exercise Sheet 8 1 IP and Perfect Soundness

Complexity Theory (fall 2016) Dominique Unruh Solution of Exercise Sheet 8 1 IP and perfect soundness Let IP0 be the class of languages that have interactive proofs with perfect soundness and perfect completeness (i.e., in the definition of IP, we replace 2=3 by 1 and 1=3 by 0). Show that IP0 ⊆ NP. You get bonus points if you only use the perfect soundness (not the perfect complete- ness). Note: In the practice we will show that dIP = NP where dIP is the class of languages that has interactive proofs with deterministic verifiers. You may use that fact. Hint: What happens if we replace the proof system by one where the verifier always uses 0 bits as its randomness? (More precisely, whenever V would use a random bit b, the modified verifier V0 choses b = 0 instead.) Does the resulting proof system still have perfect soundness? Does it still have perfect completeness? Solution. Let L 2 IP0. We want to show that L 2 NP. Since dIP = NP, it is sufficient to show that L 2 dIP. I.e., we need to show that there is an interactive proof for L with a deterministic verifier. Since L 2 IP0, there is an interactive proof (P; V ) for L with perfect soundness and completeness. Let V0 be the verifier that behaves like V , but whenever V uses a random bit, V0 uses 0. Note that V0 is deterministic. We show that (P; V0) still has perfect soundness and completeness. Let x 2 L. Then Pr[outV hV; P i(x) = 1] = 1.