ARM Architecture Overview

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Data Storage the CPU-Memory

Data Storage •Disks • Hard disk (HDD) • Solid state drive (SSD) •Random Access Memory • Dynamic RAM (DRAM) • Static RAM (SRAM) •Registers • %rax, %rbx, ... Sean Barker 1 The CPU-Memory Gap 100,000,000.0 10,000,000.0 Disk 1,000,000.0 100,000.0 SSD Disk seek time 10,000.0 SSD access time 1,000.0 DRAM access time Time (ns) Time 100.0 DRAM SRAM access time CPU cycle time 10.0 Effective CPU cycle time 1.0 0.1 CPU 0.0 1985 1990 1995 2000 2003 2005 2010 2015 Year Sean Barker 2 Caching Smaller, faster, more expensive Cache 8 4 9 10 14 3 memory caches a subset of the blocks Data is copied in block-sized 10 4 transfer units Larger, slower, cheaper memory Memory 0 1 2 3 viewed as par@@oned into “blocks” 4 5 6 7 8 9 10 11 12 13 14 15 Sean Barker 3 Cache Hit Request: 14 Cache 8 9 14 3 Memory 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 Sean Barker 4 Cache Miss Request: 12 Cache 8 12 9 14 3 12 Request: 12 Memory 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 Sean Barker 5 Locality ¢ Temporal locality: ¢ Spa0al locality: Sean Barker 6 Locality Example (1) sum = 0; for (i = 0; i < n; i++) sum += a[i]; return sum; Sean Barker 7 Locality Example (2) int sum_array_rows(int a[M][N]) { int i, j, sum = 0; for (i = 0; i < M; i++) for (j = 0; j < N; j++) sum += a[i][j]; return sum; } Sean Barker 8 Locality Example (3) int sum_array_cols(int a[M][N]) { int i, j, sum = 0; for (j = 0; j < N; j++) for (i = 0; i < M; i++) sum += a[i][j]; return sum; } Sean Barker 9 The Memory Hierarchy The Memory Hierarchy Smaller On 1 cycle to access CPU Chip Registers Faster Storage Costlier instrs can L1, L2 per byte directly Cache(s) ~10’s of cycles to access access (SRAM) Main memory ~100 cycles to access (DRAM) Larger Slower Flash SSD / Local network ~100 M cycles to access Cheaper Local secondary storage (disk) per byte slower Remote secondary storage than local (tapes, Web servers / Internet) disk to access Sean Barker 10. -

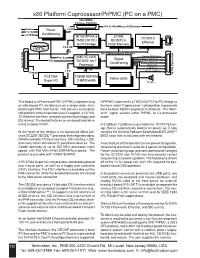

X86 Platform Coprocessor/Prpmc (PC on a PMC)

x86 Platform Coprocessor/PrPMC (PC on a PMC) 32b/33MHz PCI bus PN1/PN2 +5V to Kbd/Mouse/USB power Vcore Power +3.3V +2.5v Conditioning +3.3VIO 1K100 FPGA & 512KB 10/100TX TMS2250 PCI BIOS/PLA Ethernet RJ45 Compact to PCI bridge Flash Memory PLA I/O Flash site 8 32b/33MHz Internal PCI bus Analog SVGA Video Pwr Seq AMD SC2200 Signal COM 1 (RXD/TXD only) IDE "GEODE (tm)" Conditioning COM 2 (RXD/TXD only) Processor USB Port 1 Rear I/O PN4 I/O Rear 64 USB Port 2 LPC Keyboard/Mouse Floppy 36 Pin 36 Pin Connector PC87364 128MB SDRAM Status LEDs Super I/O (16MWx64b) PC Spkr This board is a Processor PMC (PrPMC) implementing A PrPMC implements a TMS2250 PCI-to-PCI bridge to an x86-based PC architecture on a single-wide, stan- the host, and a “Coprocessor” cofniguration implements dard height PMC form factor. This delivers a complete back-to-back 16550 compatible COM ports. The “Mon- x86 platform with comprehensive I/O support, a 10/100- arch” signal selects either PrPMC or Co-processor TX Ethernet interface, and disk access (both floppy and mode. IDE drives). The board features an on-board hard drive using Compact Flash. A 512Kbyte FLASH memory holds the 1K100 PLA im- age that is automatically loaded on power up. It also At the heart of the design is an Advanced Micro De- contains the General Software Embedded BIOS 2000™ vices SC2200 GEODE™ processor that integrates video, BIOS code that is included with each board. -

Convey Overview

THE WORLD’S FIRST HYBRID-CORE COMPUTER. CONVEY HYBRID-CORE COMPUTING Hybrid-core Computing Convey HC-1 High Performance of application- specific hardware Heterogenous solutions • can be much more efficient Performance/ • still hard to program Programmability and Power efficiency deployment ease of an x86 server Application Multicore solutions • don’t always scale well • parallel programming is hard Low Difficult Ease of Deployment Easy 1/22/2010 3 Hybrid-Core Computing Application-Specific Personalities Applications • Extend the x86 instruction set • Implement key operations in Convey Compilers hardware Life Sciences x86-64 ISA Custom ISA CAE Custom Financial Oil & Gas Shared Virtual Memory Cache-coherent, shared memory • Both ISAs address common memory *ISA: Instruction Set Architecture 7/12/2010 4 HC-1 Hardware PCI I/O FPGA FPGA Intel Personalities Chipset FPGA FPGA 8 GB/s 80 GB/s Memory Memory Cache Coherent, Shared Virtual Memory 1/22/2010 5 Using Personalities C/C++ Fortran • Personalities are user specifies reloadable personality at instruction sets Convey Software compile time Development Suite • Compiler instruction generates x86 descriptions and coprocessor instructions from Hybrid-Core Executable P ANSI standard x86-64 and Coprocessor Personalities C/C++ & Fortran Instructions • Executable can run on x86 nodes FPGA Convey HC-1 or Convey Hybrid- bitfiles Core nodes Intel x86 Coprocessor personality loaded at runtime by OS 1/22/2010 6 SYSTEM ARCHITECTURE HC-1 Architecture “Commodity” Intel Server Convey FPGA-based coprocessor Direct -

Java (Programming Langua a (Programming Language)

Java (programming language) From Wikipedia, the free encyclopedialopedia "Java language" redirects here. For the natural language from the Indonesian island of Java, see Javanese language. Not to be confused with JavaScript. Java multi-paradigm: object-oriented, structured, imperative, Paradigm(s) functional, generic, reflective, concurrent James Gosling and Designed by Sun Microsystems Developer Oracle Corporation Appeared in 1995[1] Java Standard Edition 8 Update Stable release 5 (1.8.0_5) / April 15, 2014; 2 months ago Static, strong, safe, nominative, Typing discipline manifest Major OpenJDK, many others implementations Dialects Generic Java, Pizza Ada 83, C++, C#,[2] Eiffel,[3] Generic Java, Mesa,[4] Modula- Influenced by 3,[5] Oberon,[6] Objective-C,[7] UCSD Pascal,[8][9] Smalltalk Ada 2005, BeanShell, C#, Clojure, D, ECMAScript, Influenced Groovy, J#, JavaScript, Kotlin, PHP, Python, Scala, Seed7, Vala Implementation C and C++ language OS Cross-platform (multi-platform) GNU General Public License, License Java CommuniCommunity Process Filename .java , .class, .jar extension(s) Website For Java Developers Java Programming at Wikibooks Java is a computer programming language that is concurrent, class-based, object-oriented, and specifically designed to have as few impimplementation dependencies as possible.ble. It is intended to let application developers "write once, run ananywhere" (WORA), meaning that code that runs on one platform does not need to be recompiled to rurun on another. Java applications ns are typically compiled to bytecode (class file) that can run on anany Java virtual machine (JVM)) regardless of computer architecture. Java is, as of 2014, one of tthe most popular programming ng languages in use, particularly for client-server web applications, witwith a reported 9 million developers.[10][11] Java was originallyy developed by James Gosling at Sun Microsystems (which has since merged into Oracle Corporation) and released in 1995 as a core component of Sun Microsystems'Micros Java platform. -

Real-Time Java for Embedded Devices: the Javamen Project*

REAL-TIME JAVA FOR EMBEDDED DEVICES: THE JAVAMEN PROJECT* A. Borg, N. Audsley, A. Wellings The University of York, UK ABSTRACT: Hardware Java-specific processors have been shown to provide the performance benefits over their software counterparts that make Java a feasible environment for executing even the most computationally expensive systems. In most cases, the core of these processors is a simple stack machine on which stack operations and logic and arithmetic operations are carried out. More complex bytecodes are implemented either in microcode through a sequence of stack and memory operations or in Java and therefore through a set of bytecodes. This paper investigates the Figure 1: Three alternatives for executing Java code (take from (6)) state-of-the-art in Java processors and identifies two areas of improvement for specialising these processors timeliness as a key issue. The language therefore fails to for real-time applications. This is achieved through a allow more advanced temporal requirements of threads combination of the implementation of real-time Java to be expressed and virtual machine implementations components in hardware and by using application- may behave unpredictably in this context. For example, specific characteristics expressed at the Java level to whereas a basic thread priority can be specified, it is not drive a co-design strategy. An implementation of these required to be observed by a virtual machine and there propositions will provide a flexible Ravenscar- is no guarantee that the highest priority thread will compliant virtual machine that provides better preempt lower priority threads. In order to address this performance while still guaranteeing real-time shortcoming, two competing specifications have been requirements. -

45-Year CPU Evolution: One Law and Two Equations

45-year CPU evolution: one law and two equations Daniel Etiemble LRI-CNRS University Paris Sud Orsay, France [email protected] Abstract— Moore’s law and two equations allow to explain the a) IC is the instruction count. main trends of CPU evolution since MOS technologies have been b) CPI is the clock cycles per instruction and IPC = 1/CPI is the used to implement microprocessors. Instruction count per clock cycle. c) Tc is the clock cycle time and F=1/Tc is the clock frequency. Keywords—Moore’s law, execution time, CM0S power dissipation. The Power dissipation of CMOS circuits is the second I. INTRODUCTION equation (2). CMOS power dissipation is decomposed into static and dynamic powers. For dynamic power, Vdd is the power A new era started when MOS technologies were used to supply, F is the clock frequency, ΣCi is the sum of gate and build microprocessors. After pMOS (Intel 4004 in 1971) and interconnection capacitances and α is the average percentage of nMOS (Intel 8080 in 1974), CMOS became quickly the leading switching capacitances: α is the activity factor of the overall technology, used by Intel since 1985 with 80386 CPU. circuit MOS technologies obey an empirical law, stated in 1965 and 2 Pd = Pdstatic + α x ΣCi x Vdd x F (2) known as Moore’s law: the number of transistors integrated on a chip doubles every N months. Fig. 1 presents the evolution for II. CONSEQUENCES OF MOORE LAW DRAM memories, processors (MPU) and three types of read- only memories [1]. The growth rate decreases with years, from A. -

ARM Architecture

ARM Architecture Comppgzuter Organization and Assembly ygg Languages Yung-Yu Chuang with slides by Peng-Sheng Chen, Ville Pietikainen ARM history • 1983 developed by Acorn computers – To replace 6502 in BBC computers – 4-man VLSI design team – Its simp lic ity comes from the inexper ience team – Match the needs for generalized SoC for reasonable power, performance and die size – The first commercial RISC implemenation • 1990 ARM (Advanced RISC Mac hine ), owned by Acorn, Apple and VLSI ARM Ltd Design and license ARM core design but not fabricate Why ARM? • One of the most licensed and thus widespread processor cores in the world – Used in PDA, cell phones, multimedia players, handheld game console, digital TV and cameras – ARM7: GBA, iPod – ARM9: NDS, PSP, Sony Ericsson, BenQ – ARM11: Apple iPhone, Nokia N93, N800 – 90% of 32-bit embedded RISC processors till 2009 • Used especially in portable devices due to its low power consumption and reasonable performance ARM powered products ARM processors • A simple but powerful design • A whlhole filfamily of didesigns shiharing siilimilar didesign principles and a common instruction set Naming ARM •ARMxyzTDMIEJFS – x: series – y: MMU – z: cache – T: Thumb – D: debugger – M: Multiplier – I: EmbeddedICE (built-in debugger hardware) – E: Enhanced instruction – J: Jazell e (JVM) – F: Floating-point – S: SthiiblSynthesizible version (source code version for EDA tools) Popular ARM architectures •ARM7TDMI – 3 pipe line stages (ft(fetc h/deco de /execu te ) – High code density/low power consumption – One of the most used ARM-version (for low-end systems) – All ARM cores after ARM7TDMI include TDMI even if they do not include TDMI in their labels • ARM9TDMI – Compatible with ARM7 – 5 stages (fe tc h/deco de /execu te /memory /wr ite ) – Separate instruction and data cache •ARM11 ARM family comparison year 1995 1997 1999 2003 ARM is a RISC • RISC: simple but powerful instructions that execute within a single cycle at high clock speed. -

Cuda C Best Practices Guide

CUDA C BEST PRACTICES GUIDE DG-05603-001_v9.0 | June 2018 Design Guide TABLE OF CONTENTS Preface............................................................................................................ vii What Is This Document?..................................................................................... vii Who Should Read This Guide?...............................................................................vii Assess, Parallelize, Optimize, Deploy.....................................................................viii Assess........................................................................................................ viii Parallelize.................................................................................................... ix Optimize...................................................................................................... ix Deploy.........................................................................................................ix Recommendations and Best Practices.......................................................................x Chapter 1. Assessing Your Application.......................................................................1 Chapter 2. Heterogeneous Computing.......................................................................2 2.1. Differences between Host and Device................................................................ 2 2.2. What Runs on a CUDA-Enabled Device?...............................................................3 Chapter 3. Application Profiling............................................................................. -

OMAP 3 Family of Multimedia Applications

OMAP™ 3 family of multimedia applications processors Revolutionizing entertainment and productivity Key features in wireless handheld commumications • Combines mobile entertainment and high-performance productivity applications. Product Bulletin • Integrates the advanced Superscalar ARM Cortex-A8 RISC core, enabling up to The OMAP™ 3 family of multimedia applications processors from Texas Instruments (TI) 3x gain in performance versus ARM11. introduces a new level of performance that enables laptop-like productivity and advanced • Designed in 45-nm (OMAP36x platform) entertainment in multimedia-enabled handsets. OMAP 3 devices support all levels of and 65-nm (OMAP34x platform) CMOS handsets, from the entry-level multimedia-enabled handsets to high-end Mobile Internet process technologies for less power Devices (MIDs). consumption and increased device performance. Entry-level Mid-level High-end • Includes integrated IVA hardware multimedia-enabled multimedia-enabled multimedia-enabled accelerators to enable multi-standard encode handsets handsets handsets decode up to HD resolution. OMAP3410 OMAP3420 OMAP3430/3440 • Available integrated image signal OMAP3610 OMAP3620 OMAP3630/3640 processor (ISP) enables faster, higher quality image capture, lower system cost TI’s OMAP 3 family of applications processors These devices can operate at a higher and lower power consumption. • Provides seamless connectivity to hard integrate the ARM Cortex-A8 superscalar frequency than previous-generation OMAP diskdrive (HDD) devices for mass storage. microprocessor -

Exploiting Free Silicon for Energy-Efficient Computing Directly

Exploiting Free Silicon for Energy-Efficient Computing Directly in NAND Flash-based Solid-State Storage Systems Peng Li Kevin Gomez David J. Lilja Seagate Technology Seagate Technology University of Minnesota, Twin Cities Shakopee, MN, 55379 Shakopee, MN, 55379 Minneapolis, MN, 55455 [email protected] [email protected] [email protected] Abstract—Energy consumption is a fundamental issue in today’s data A well-known solution to the memory wall issue is moving centers as data continue growing dramatically. How to process these computing closer to the data. For example, Gokhale et al [2] data in an energy-efficient way becomes more and more important. proposed a processor-in-memory (PIM) chip by adding a processor Prior work had proposed several methods to build an energy-efficient system. The basic idea is to attack the memory wall issue (i.e., the into the main memory for computing. Riedel et al [9] proposed performance gap between CPUs and main memory) by moving com- an active disk by using the processor inside the hard disk drive puting closer to the data. However, these methods have not been widely (HDD) for computing. With the evolution of other non-volatile adopted due to high cost and limited performance improvements. In memories (NVMs), such as phase-change memory (PCM) and spin- this paper, we propose the storage processing unit (SPU) which adds computing power into NAND flash memories at standard solid-state transfer torque (STT)-RAM, researchers also proposed to use these drive (SSD) cost. By pre-processing the data using the SPU, the data NVMs as the main memory for data-intensive applications [10] to that needs to be transferred to host CPUs for further processing improve the system energy-efficiency. -

S32 Design Studio for S32 Platform 3.4 Installation Guide

S32 Design Studio for S32 Platform 3.4 Installation Guide Document Number: S32DSIG Rev. 1.0, 12/2020 Contents System Requirements......................................................................................................................3 Installation prerequisites for Linux platforms.............................................................................5 Downloading S32DS 3.4................................................................................................................12 Downloading the S32DS 3.4 installer................................................................................................................12 Obtaining the activation code.............................................................................................................................12 Installing S32DS 3.4......................................................................................................................14 Debian 8 post-installation settings..................................................................................................................... 20 Installing product updates and packages................................................................................... 23 Installing Synopsys tools...............................................................................................................25 Installing VP Explorer........................................................................................................................................25 Post-installation settings.................................................................................................................................... -

Itanium-Based Solutions by Hp

Itanium-based solutions by hp an overview of the Itanium™-based hp rx4610 server a white paper from hewlett-packard june 2001 table of contents table of contents 2 executive summary 3 why Itanium is the future of computing 3 rx4610 at a glance 3 rx4610 product specifications 4 rx4610 physical and environmental specifications 4 the rx4610 and the hp server lineup 5 rx4610 architecture 6 64-bit address space and memory capacity 6 I/O subsystem design 7 special features of the rx4610 server 8 multiple upgrade and migration paths for investment protection 8 high availability and manageability 8 advanced error detection, correction, and containment 8 baseboard management controller (BMC) 8 redundant, hot-swap power supplies 9 redundant, hot-swap cooling 9 hot-plug disk drives 9 hot-plug PCI I/O slots 9 internal removable media 10 system control panel 10 ASCII console for hp-ux 10 space-saving rack density 10 complementary design and packaging 10 how hp makes the Itanium transition easy 11 binary compatibility 11 hp-ux operating system 11 seamless transition—even for home-grown applications 12 transition help from hp 12 Itanium quick start service 12 partner technology access centers 12 upgrades and financial incentives 12 conclusion 13 for more information 13 appendix: Itanium advantages in your computing future 14 hp’s CPU roadmap 14 Itanium processor architecture 15 predication enhances parallelism 15 speculation minimizes the effect of memory latency 15 inherent scalability delivers easy expansion 16 what this means in a server 16 2 executive The Itanium™ Processor Family is the next great stride in computing--and it’s here today.