Hierarchical Organization and Description of Music Collections at the Artist Level

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

-

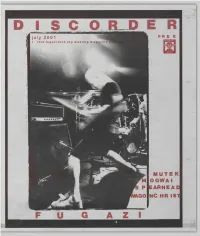

DiSCORDER Free

DiSCORDER FREE © "DiSCORDER" 2001 by the Student Radio Society of the University of British Columbia. All rights reserved. Circulation 17,500. Subscriptions, payable in advance, to Canadian residents are $15 for one year, to residents of the USA are $15 US; $24 CDN elsewhere. Single copies are $2 (to cover postage, of course). Please make cheques or money orders payable to DiSCORDER Magazine. DEADLINES: Copy deadline for the August issue is July 14th. Ad space is available until July 21st and can be booked by calling Maren at 604.822.3017 ext. 3. Our rates are available upon request. DiSCORDER is not responsible for loss, damage, or any other injury to unsolicited manuscripts, unsolicited artwork (including but not limited to drawings, photographs and transparencies), or any other unsolicited material. Material can be submitted on disc or in type. As always, English is preferred. Send e-mail to DiSCORDER at [email protected]. From UBC to Langley and Squamish to Bellingham, CiTR can be heard at 101.9 fM as well as through all major cable systems in the Lower Mainland, except Shaw in White Rock. Call the CiTR DJ line at 822.2487, our office at 822.3017 ext. 0, or our news and sports lines at 822.3017 ext. 2. Fax us at 822.9364, e-mail us at: [email protected], visit our web site at http://www.ams.ubc.ca/media/citr or just pick up a goddamn pen and write #233-6138 SUB Blvd., Vancouver, BC. -

Steve Waksman [email protected]

Journal of the International Association for the Study of Popular Music doi:10.5429/2079-3871(2010)v1i1.9en Live Recollections: Uses of the Past in U.S. Concert Life Steve Waksman [email protected] Smith College Abstract As an institution, the concert has long been one of the central mechanisms through which a sense of musical history is constructed and conveyed to a contemporary listening audience. Examining concert programs and critical reviews, this paper will briefly survey U.S. concert life at three distinct moments: in the 1840s, when a conflict arose between virtuoso performance and an emerging classical canon; in the 1910s through 1930s, when early jazz concerts referenced the past to highlight the music’s progress over time; and in the late twentieth century, when rock festivals sought to reclaim a sense of liveness in an increasingly mediatized cultural landscape. keywords: concerts, canons, jazz, rock, virtuosity, history. 1 During the nineteenth century, a conflict arose regarding whether concert repertories should dwell more on the presentation of works from the past, or should concentrate on works of a more contemporary character. The notion that works of the past rather than the present should be the focus of concert life gained hold only gradually over the course of the nineteenth century; as it did, concerts in Europe and the U.S. assumed a more curatorial function, acting almost as a living museum of musical artifacts. While this emphasis on the musical past took hold most sharply in the sphere of “high” or classical music, it has become increasingly common in the popular sphere as well, although whether it fulfills the same function in each realm of musical life remains an open question. -

Computational Models of Music Similarity and Their Applications In

The approved original version of this thesis is available at the main library of the Vienna University of Technology (http://www.ub.tuwien.ac.at; English pages: http://www.ub.tuwien.ac.at/englweb/). DISSERTATION Computational Models of Music Similarity and their Application in Music Information Retrieval, carried out for the purpose of obtaining the academic degree of Doctor of Technical Sciences under the supervision of Univ. Prof. Dipl.-Ing. Dr. techn. Gerhard Widmer, Institut für Computational Perception, Johannes Kepler Universität Linz, submitted to the Technische Universität Wien, Fakultät für Informatik, by Elias Pampalk, matriculation number 9625173, Eglseegasse 10A, 1120 Wien. Vienna, March 2006. Abstract (translated from the German Kurzfassung): The goal of this dissertation is the development of methods that support users in accessing and discovering music. The main part consists of two chapters. Chapter 2 gives an introduction to computational models of music similarity. It also describes the optimization of the combination of different approaches and presents the largest evaluation of music similarity measures published to date. The best combination performs significantly better than the baseline method in most evaluation categories. Special precautions were taken to avoid overfitting. To cross-check the results of the genre-classification-based evaluation, a listening test was conducted. The results of the test confirm that genre-based evaluations are appropriate for efficiently evaluating large parameter spaces. Chapter 2 ends with recommendations on the use of similarity measures. Chapter 3 describes three applications of such similarity measures. The first application demonstrates how music collections can be organized and visualized so that users can control the aspect of similarity they are interested in. -

Music Styles, Alphabetically Ordered by MukerBude

MusikStile (Music Styles). Source: www.recordsale.de. Alphabetically ordered by MukerBude - 2-Step/BritishGarage - AcidHouse - AcidJazz - AcidRock - AcidTechno - Acappella - AcousticBlues - AcousticChicagoBlues - AdultAlternative - AdultAlternativePop/Rock - AdultContemporary - Africa - AfricanJazz - Afro - Afro-Pop - AlbumRock - Alternative - AlternativeCountry - AlternativeDance - AlternativeFolk - AlternativeMetal - AlternativePop/Rock - AlternativeRap - Ambient - AmbientBreakbeat - AmbientDub - AmbientHouse - AmbientPop - AmbientTechno - Americana - AmericanPopularSong - AmericanPunk - AmericanTradRock - AmericanUnderground - AMPop Orchestral - ArenaRock - Argentina - Asia - AussieRock - Australia - Avant - Avant-Garde - Avntg - Ballads - Baroque - BaroquePop - BassMusic - Beach - BeatPoetry - BigBand - BigBeat - BlackGospel - Blaxploitation - Blue-EyedSoul - Blues - Blues-Rock - BluesRevival - Blues - Spain - Boogie Woogie - Bop - Bolero - Boogaloo - BoogieRock - BossaNova - Brazil - BrazilianJazz - BrazilianPop - BrillBuildingPop - Britain - BritishBlues - BritishDanceBands - BritishFolk - BritishFolk Rock - BritishInvasion - BritishMetal - BritishPsychedelia - BritishPunk - BritishRap - BritishTradRock - Britpop - BrokenBeat - Bubblegum - C-86 - Cabaret - Cajun - Calypso - Canada - CanterburyScene - Caribbean - CaribbeanFolk - CastRecordings - CCM - Celebrity - Celtic - CelticFolk - CelticFusion - CelticPop - CelticRock - ChamberJazz - ChamberMusic - ChamberPop - Chile - Choral - ChicagoBlues - ChicagoSoul - Child - Children'sFolk - Christmas -

California State University, Northridge Where's The

CALIFORNIA STATE UNIVERSITY, NORTHRIDGE WHERE’S THE ROCK? AN EXAMINATION OF ROCK MUSIC’S LONG-TERM SUCCESS THROUGH THE GEOGRAPHY OF ITS ARTISTS’ ORIGINS AND ITS STATUS IN THE MUSIC INDUSTRY A thesis submitted in partial fulfilment of the requirements for the Degree of Master of Arts in Geography, Geographic Information Science By Mark T. Ulmer May 2015 The thesis of Mark Ulmer is approved: Dr. James Craine; Dr. Ronald Davidson; Dr. Steven Graves, Chair. California State University, Northridge Acknowledgements I would like to thank my committee members and all of my professors at California State University, Northridge, for helping me to broaden my geographic horizons. Dr. Boroushaki, Dr. Cox, Dr. Craine, Dr. Davidson, Dr. Graves, Dr. Jackiewicz, Dr. Maas, Dr. Sun, and David Deis, thank you! TABLE OF CONTENTS Signature Page; Acknowledgements; LIST OF FIGURES AND TABLES; ABSTRACT; Chapter 1 – Introduction -

Sooloos Collections: Advanced Guide

Sooloos Collections: Advanced Guide Contents: Introduction; Organising and Using a Sooloos Collection; Working with Sets; Organising through Naming; Album Detail; Finding Content (Explore, Search, Focus) -

Record Dreams Catalog

RECORD DREAMS 50 Hallucinations and Visions of Rare and Strange Vinyl Vinyl, to: vb. A neologism that describes the process of immersing yourself in an antique playback format, often to the point of obsession - i.e. I’m going to vinyl at Utrecht, I may be gone a long time. Or: I vinyled so hard that my bank balance has gone up the wazoo. The end result of vinyling is a record collection, which is defined as a bad idea (hoarding, duplicating, upgrading) often turned into a good idea (a saleable archive). If you’re reading this, you’ve gone down the rabbit hole like the rest of us. What is record collecting? Is it a doomed yet psychologically powerful wish to recapture that first thrill of adolescent recognition or is it a quite understandable impulse to preserve and enjoy totemic artefacts from the first - perhaps the only - great age of a truly mass art form, a mass youth culture? Fingering a particularly juicy 45 by the Stooges, Sweet or Sylvester, you could be forgiven for answering: fuck it, let’s boogie! But, you know, you’re here and so are we so, to quote Double Dee and Steinski, what does it all mean? Are you looking for - to take a few possibles - Kate Bush picture discs, early 80s Japanese synth on the Vanity label, European Led Zeppelin 45’s (because of course they did not deign to release singles in the UK), or vastly overpriced and not so good druggy LPs from the psychedelic fatso’s stall (Rainbow Ffolly, we salute you)? Or are you just drifting, browsing, going where the mood and the vinyl takes you? That’s where Utrecht scores. -

Episode 2 Rock Music with an Attitude: Overview. Rock Music Has Been One of the Driving Forces of Youth Culture Since the 1950’s

Unit 4 Music Styles Episode 2 Rock Music with an attitude OVERVIEW Rock music has been one of the driving forces of youth culture since the 1950’s. Expressive and emotional, rock is music with an exclamation point! To express their outrage that a local park is going to be turned into a mega-store, the band takes up Quaver’s challenge to express themselves through rock music. While they work on their song in the studio, Quaver and Repairman dive into the genre by looking at its history, instrumentation, sound, form, lyrics, and feel. LESSON OBJECTIVES Students will learn: • Rock songs have a number of basic elements: a rock drum rhythm, three simple chords, a repeated lyric about something you care about, verses (A), and a chorus (B). • Rock uses microphones and amplified instruments so music is louder and reaches a bigger audience. • Rock bands commonly employ: a lead singer, drummer, electric guitarist or two, and an electric bass player. • Rock music has a strong, heavily accented, driving meter of 4. • Rock music has developed into many separate rock styles including punk, grunge, heavy metal, pop rock, pop, folk rock, and country rock. Vocabulary: A section, Chorus, B section, Lyrics, Chords, Amplifier, Verse. © Quaver’s Marvelous World of Music MUSIC STANDARDS IN LESSON 1: Singing alone and with others* 2: Playing instruments 4: Composing and arranging music* 6: Listening to, analyzing, and describing music 7: Evaluating music and music performance 8: Understanding the relationship between music and the other arts 9: Understanding music in relation to history, style, and culture Complete details at QuaverMusic.com Key Scenes (scene; what they teach; music standard): 1. Paving a park to put up a parking lot; the themes of rock music are often about life and personal experience; Standard 9. -

STREET DATE: August 20, 2013 5% DISCOUNT on New Release Items Through August 27

AUGUST NEW RELEASE GUIDE STREET DATE: August 20, 2013 5% DISCOUNT on New Release Items through August 27 Burnside Distribution Corp, 6635 N. Baltimore Ave, Suite 285, Portland, OR, 97203 phone (503) 231-0876 / fax (503) 231-0420 / www.bdcdistribution.com BDC New Releases August 2013 Welcome!! Hickoids’ Hairy Chafin’ Ape Suit album has been 23 years in the making - just ask the Guinness Book of World Records. No artist has ever dragged out a release this long, not even Axl Rose. Was it worth the wait? You be the judge. The Holidays are coming! What you talking about Willis? I may be in a fowl mood sometimes but I’m not referring to Turkey Day. I’m talking about those end-of-December days, and what better time to get ready for them but right now. We have about forty titles for you to consider and they are listed within. Don’t miss out; you’d better not pout. David Gogo got his first guitar at 5. When he was 15, no less than Stevie Ray Vaughan told him he had a career in the blues if he wanted it. David never looked back. As a nominee and winner of Junos, Maple Blues Awards and other Canadian honors, his career keeps moving forward. His 13th album is the result of a blues pilgrimage down Mississippi’s Highway 61. Kim Lenz is Red Hot; just take a look at those locks. Follow Me is her latest; great songs; great band; -

2019 Schedule of Entertainment and Special Events at Clear Water Harbor, Waupaca

2019 Schedule of Entertainment and Special Events At Clear Water Harbor, Waupaca, WI Join us Saturdays with live bands playing from 9:30pm-1:30am and select Tuesdays and Wednesdays for our sunset show on the deck. Enjoy your favorite live entertainment on the raised stage and dance floor! Also, don’t miss the fun on Sunday afternoons with bands playing on our floating stage off the waterfront deck starting at 3:00pm. Come by land or sea and get full menu service from our waterfront docks. “THE HARBOR” charges NO COVER for live entertainment!!! Reservations are required for our concert cruises aboard the Chief Waupaca. Call (715)258-2866 for more information. Check our website for updates at www.clearwaterharbor.com. April Thursday, April 18 Clear Water Harbor opens for the 2019 season @ 10:00am. Saturday, April 20 Hyde- Playing a variety of classic to modern rock hits. These talented musicians are taking the Valley by storm. 9:30pm Saturday, May 25 The Presidents- One of the most requested party bands in Wisconsin! The Presidents have the perfect mix of repertoire, talent, and charisma to rock your socks off! 9:30pm Sunday, May 26- MEMORIAL DAY WEEKEND DOUBLEHEADER Face for Radio- This super group is a favorite! High energy band playing 90’s, current rock, pop and punk hits! 3pm AND Hyde - Playing a variety of classic to modern rock hits. These talented musicians are taking the Valley by storm. 9:30pm JUNE Saturday, June 1 The Bomb- They get the party started playing the best of rock and country from 80’s to today. -

American Popular Music

American Popular Music Larry Starr & Christopher Waterman Copyright © 2003, 2007 by Oxford University Press, Inc. This condensation of AMERICAN POPULAR MUSIC: FROM MINSTRELSY TO MP3, a book originally published in English in 2006, is offered by arrangement with Oxford University Press, Inc. Larry Starr is Professor of Music at the University of Washington. His previous publications include The Dickinson Songs of Aaron Copland (2002), A Union of Diversities: Style in the Music of Charles Ives (1992), and articles in American Music, Perspectives of New Music, Musical Quarterly, and Journal of Popular Music Studies. Christopher Waterman is Dean of the School of Arts and Architecture at the University of California, Los Angeles. His previous publications include Jùjú: A Social History and Ethnography of an African Popular Music (1990) and articles in Ethnomusicology and Music Educator’s Journal. Photo caption: Clockwise from top: Bob Dylan and Joan Baez on the road; Diana Ross sings to thousands; Louis Armstrong and his trumpet; DJ Jazzy Jeff spins records; ‘NSync in concert; Elvis Presley sings and acts. CONTENTS Introduction; CHAPTER 1: Streams of Tradition: The Sources of Popular Music; CHAPTER 2: Popular Music: Nineteenth and Early Twentieth Centuries; An Early Pop Songwriter: Stephen Foster; CHAPTER 3: Popular Jazz and Swing: America’s Original Art Form; CHAPTER 4: Tin Pan Alley: Creating “Musical Standards”; CHAPTER 5: Early Music of the American South: “Race Records” and “Hillbilly Music”; CHAPTER 6: Rhythm & Blues: From Jump Blues to Doo-Wop -

Programming Policy : Can-Con & Hits

Programming Policy #003 Monday, March 21, 2011 Programming Policy : Can-Con & Hits 1.0 Reasoning: The purpose of this policy is to define the restrictions on Can-Con and Hits, as described by the CRTC. The role of the programming committee is to fulfill the mandate and broadcast licence requirements of CIVL Radio; as delegated by the board, the programming committee is directly responsible for upholding these requirements and standards. 2.0 Background: CIVL Radio has maintained a 35% Canadian content requirement for all its category 2 music programs, a 12% Can-Con requirement for its category 3 music programs, and has been critical of programs whose content of hits exceeds 10%. Formerly, CIVL Radio developed its programming policies on hits from CRTC 1997-42; however, since then CRTC 2010-819, CRTC 2010-499, and CRTC 2009-61 have been released. Programmers have expressed that they have not been given enough resources or direction with which to research hits. This policy is intended to provide direction to CIVL programmers on the content restrictions for Can-Con and hits placed on CIVL Radio by the CRTC. 3.0 Policy 3.1 Programs defined by Content Category 2*, unless granted an exemption, are required to uphold a 35% Canadian content requirement for their musical selections. 3.2 Programs defined by Content Category 3*, unless granted an exemption, are required to uphold a 12% Canadian content requirement for their musical selections. 3.3 CIVL programs may not contain more hits than 10% of their total logged musical selections, unless granted an exception.
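The thresholds in sections 3.1 to 3.3 amount to simple percentage checks over a program's logged selections. The following is a minimal sketch of that arithmetic, not CIVL's or the CRTC's actual tooling: the `Selection` record, the `check_program` function, and the idea that each logged selection carries "is Canadian" and "is hit" flags are all assumptions made for illustration, and exemptions are not modeled.

```python
from dataclasses import dataclass

# Percentages quoted in sections 3.1-3.3 of the policy excerpt above.
CANCON_MINIMUM_PERCENT = {2: 35, 3: 12}  # keyed by content category
HITS_MAXIMUM_PERCENT = 10


@dataclass(frozen=True)
class Selection:
    """Hypothetical log entry for one musical selection."""
    title: str
    is_canadian: bool
    is_hit: bool


def check_program(category: int, selections: list[Selection]) -> dict[str, bool]:
    """Compare a program's logged selections against the quoted thresholds."""
    if category not in CANCON_MINIMUM_PERCENT:
        raise ValueError(f"no Can-Con threshold quoted for category {category}")
    total = len(selections)
    if total == 0:
        raise ValueError("a program needs at least one logged selection")
    canadian = sum(s.is_canadian for s in selections)
    hits = sum(s.is_hit for s in selections)
    # Integer arithmetic avoids floating-point rounding at the exact boundary.
    return {
        "meets_cancon": canadian * 100 >= CANCON_MINIMUM_PERCENT[category] * total,
        "within_hits_limit": hits * 100 <= HITS_MAXIMUM_PERCENT * total,
    }


if __name__ == "__main__":
    # 20 selections: the first 7 are Canadian, the last 2 are hits.
    log = [Selection(f"track {i}", is_canadian=i < 7, is_hit=i >= 18) for i in range(20)]
    print(check_program(2, log))
```

With this log a category 2 program sits exactly on both limits (7 of 20 is 35% Canadian, 2 of 20 is 10% hits), so both checks pass; one fewer Canadian selection or one more hit would fail the corresponding check.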