Application of Machine Learning Techniques for Text Generation DEGREE FINAL WORK

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Members of the House of Commons December 2019 Diane ABBOTT MP

Members of the House of Commons December 2019 A Labour Conservative Diane ABBOTT MP Adam AFRIYIE MP Hackney North and Stoke Windsor Newington Labour Conservative Debbie ABRAHAMS MP Imran AHMAD-KHAN Oldham East and MP Saddleworth Wakefield Conservative Conservative Nigel ADAMS MP Nickie AIKEN MP Selby and Ainsty Cities of London and Westminster Conservative Conservative Bim AFOLAMI MP Peter ALDOUS MP Hitchin and Harpenden Waveney A Labour Labour Rushanara ALI MP Mike AMESBURY MP Bethnal Green and Bow Weaver Vale Labour Conservative Tahir ALI MP Sir David AMESS MP Birmingham, Hall Green Southend West Conservative Labour Lucy ALLAN MP Fleur ANDERSON MP Telford Putney Labour Conservative Dr Rosena ALLIN-KHAN Lee ANDERSON MP MP Ashfield Tooting Members of the House of Commons December 2019 A Conservative Conservative Stuart ANDERSON MP Edward ARGAR MP Wolverhampton South Charnwood West Conservative Labour Stuart ANDREW MP Jonathan ASHWORTH Pudsey MP Leicester South Conservative Conservative Caroline ANSELL MP Sarah ATHERTON MP Eastbourne Wrexham Labour Conservative Tonia ANTONIAZZI MP Victoria ATKINS MP Gower Louth and Horncastle B Conservative Conservative Gareth BACON MP Siobhan BAILLIE MP Orpington Stroud Conservative Conservative Richard BACON MP Duncan BAKER MP South Norfolk North Norfolk Conservative Conservative Kemi BADENOCH MP Steve BAKER MP Saffron Walden Wycombe Conservative Conservative Shaun BAILEY MP Harriett BALDWIN MP West Bromwich West West Worcestershire Members of the House of Commons December 2019 B Conservative Conservative -

MEMO Is Produced by the Scottish Council of Jewish Communities (Scojec) in Partnership with BEMIS – Empowering Scotland's Ethnic and Cultural Minority Communities

Supported by Minority Ethnic Matters Overview 7 December 2020 ISSUE 685 MEMO is produced by the Scottish Council of Jewish Communities (SCoJeC) in partnership with BEMIS – empowering Scotland's ethnic and cultural minority communities. It provides an overview of information of interest to minority ethnic communities in Scotland, including parliamentary activity at Holyrood and Westminster, new publications, consultations, forthcoming conferences, and news reports. Contents Immigration and Asylum Bills in Progress Equality Consultations Racism, Religious Hatred, and Discrimination Job Opportunities Other Scottish Parliament and Government Funding Opportunities Other UK Parliament and Government Events, Conferences, and Training Health Information: Coronavirus (COVID-19) Useful Links Note that some weblinks, particularly of newspaper articles, are only valid for a short period of time, usually around a month, and that the Scottish and UK Parliament and Government websites have been redesigned, so that links published in previous issues of MEMO may no longer work. To find archive material on these websites, copy details from MEMO into the relevant search facility. Please send information for inclusion in MEMO to [email protected] and click here to be added to the mailing list. Immigration and Asylum UK Parliament, Ministerial Statement UK Points-based Immigration System The Parliamentary Under-Secretary of State for the Home Department (Kevin Foster) [HCWS614] I am pleased to confirm the Government have today launched a number of immigration routes under the new UK points-based system, including the skilled worker route. This is a significant milestone and delivers on this Government’s commitment to take back control of our borders by ending freedom of movement with the EU and replacing it with a global points-based system. -

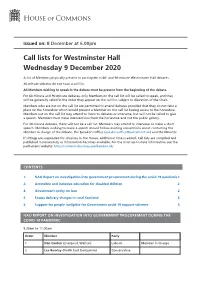

Westminster Hall Wednesday 9 December 2020

Issued on: 8 December at 6.00pm Call lists for Westminster Hall Wednesday 9 December 2020 A list of Members physically present to participate in 60- and 90-minute Westminster Hall debates. 30-minute debates do not have a call list. All Members wishing to speak in the debate must be present from the beginning of the debate. For 60-minute and 90-minute debates, only Members on the call list will be called to speak, and they will be generally called in the order they appear on the call list, subject to discretion of the Chair. Members who are not on the call list are permitted to attend debates provided that they do not take a place on the horseshoe which would prevent a Member on the call list having access to the horseshoe. Members not on the call list may attend to listen to debates or intervene, but will not be called to give a speech. Members must make interventions from the horseshoe and not the public gallery. For 30-minute debates, there will not be a call list. Members may attend to intervene or make a short speech. Members wishing to make a speech should follow existing conventions about contacting the Member in charge of the debate, the Speaker’s Office ([email protected]) and the Minister. If sittings are suspended for divisions in the House, additional time is added. Call lists are compiled and published incrementally as information becomes available. For the most up-to-date information see the parliament website: https://commonsbusiness.parliament.uk/ CONTENTS 1. -

MEETING of the BOARD of TRUSTEES Items in Red Are

Trust Board, 19 May 2021 MEETING OF THE BOARD OF TRUSTEES Items in red are confidential Minutes of a meeting of the Board of Trustees (“the Trustees”) of the Canal & River Trust (“the Trust”) held on Wednesday 19 May 2021 at 8:30am – 1pm at &Meetings, 150 Minories, London, EC3N 1LS Present: Allan Leighton, Chair Dame Jenny Abramsky, Deputy Chair Nigel Annett CBE (by Zoom) Ben Gordon Janet Hogben Sir Chris Kelly Jennie Price CBE (by Zoom) Tim Reeve Sarah Whitney (from 9.20am, during minute 21/032, by Zoom) Sue Wilkinson In attendance: Richard Parry, Chief Executive Julie Sharman, Chief Operating Officer Stuart Mills, Chief Investment Officer Simon Bamford, Asset Improvement Director Heather Clarke, Strategy, Engagement, and Impact Director Steve Dainty, Finance Director Tom Deards, Head of Legal & Governance Services and Company Secretary Mike Gooddie, People Director – by Zoom Gemma Towns, Corporate Governance Manager (minute-taker, by Zoom) Radojka Miljevic, Campbell Tickell (observer) Mandy Smith, Partner Engagement Team Manager (by Zoom, minute 21/035) Jodie Lees, Corporate Engagement & PPL Partner (by Zoom, minute 21/035) Stephen Gray, Corporate Engagement Partnerships Manager (by Zoom, 21/035) Hamish Shilliday, Head of Individual & Legacy Giving (by Zoom, minute 21/035) David Prisk, Asset Manager, Reservoirs (by Zoom, minute 21/037) Gwen Jefferson, Organisation Development Manager (by Zoom, minute 21/040) 21/029 WELCOME & APOLOGIES The Chair welcomed all attendees to the meeting. The Chair welcomed RM, who was observing the meeting as part of the Trust’s board effectiveness review. The Chair confirmed that notice of the meeting had been given to all Trustees and that a quorum was present. -

2019 General Election Results for the East Midlands

2019 General Election Results for the East Midlands CON HOLD Conservative Nigel Mills Votes:29,096 Amber Valley Labour Adam Thompson Votes:12,210 Liberal Democrat Kate Smith Votes:2,873 Green Lian Pizzey Votes:1,388 CON GAIN FROM LAB Conservative Lee Anderson Votes:19,231 Ashfield Independents Jason Zadrozny Votes:13,498 Ashfield Labour Natalie Fleet Votes:11,971 The Brexit Party Martin Daubney Votes:2,501 Liberal Democrat Rebecca Wain Votes:1,105 Green Rose Woods Votes:674 CON GAIN FROM LAB Conservative Brendan Clarke-Smith Votes:28,078 Bassetlaw Labour Keir Morrison Votes:14,065 The Brexit Party Debbie Soloman Votes:5,366 Liberal Democrat Helen Tamblyn-Saville Votes:3,332 CON GAIN FROM LAB Conservative Mark Fletcher Votes:21,791 Labour Dennis Skinner Votes:16,492 The Brexit Party Kevin Harper Votes:4,151 Bolsover Liberal Democrat David Hancock Votes:1,759 Green David Kesteven Votes:758 Independent Ross Walker Votes:517 Independent Natalie Hoy Votes:470 CON HOLD Conservative Matt Warman Votes:31,963 Boston & Skegness Labour Ben Cook Votes:6,342 Liberal Democrat Hilary Jones Votes:1,963 Independent Peter Watson Votes:1,428 2019 General Election Results for the East Midlands CON HOLD Conservative Luke Evans Votes:36,056 Bosworth Labour Rick Middleton Votes:9,778 Liberal Democrat Michael Mullaney Votes:9,096 Green Mick Gregg Votes:1,502 CON HOLD Conservative Darren Henry Votes:26,602 Labour Greg Marshall Votes:21,271 Independent Group for Change Anna Soubry Votes:4,668 Broxtowe Green Kat Boettge Votes:1,806 English Democrats Amy Dalla Mura -

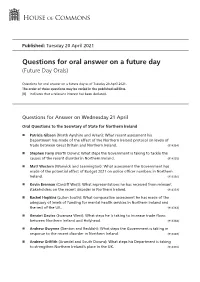

View Future Day Orals PDF File 0.11 MB

Published: Tuesday 20 April 2021 Questions for oral answer on a future day (Future Day Orals) Questions for oral answer on a future day as of Tuesday 20 April 2021. The order of these questions may be varied in the published call lists. [R] Indicates that a relevant interest has been declared. Questions for Answer on Wednesday 21 April Oral Questions to the Secretary of State for Northern Ireland Patricia Gibson (North Ayrshire and Arran): What recent assessment his Department has made of the effect of the Northern Ireland protocol on levels of trade between Great Britain and Northern Ireland. (914354) Stephen Farry (North Down): What steps the Government is taking to tackle the causes of the recent disorder in Northern Ireland. (914355) Matt Western (Warwick and Leamington): What assessment the Government has made of the potential effect of Budget 2021 on police officer numbers in Northern Ireland. (914356) Kevin Brennan (Cardiff West): What representations he has received from relevant stakeholders on the recent disorder in Northern Ireland. (914359) Rachel Hopkins (Luton South): What comparative assessment he has made of the adequacy of levels of funding for mental health services in Northern Ireland and the rest of the UK. (914363) Geraint Davies (Swansea West): What steps he is taking to increase trade flows between Northern Ireland and Holyhead. (914368) Andrew Gwynne (Denton and Reddish): What steps the Government is taking in response to the recent disorder in Northern Ireland. (914369) Andrew Griffith (Arundel and South Downs): What steps his Department is taking to strengthen Northern Ireland’s place in the UK. -

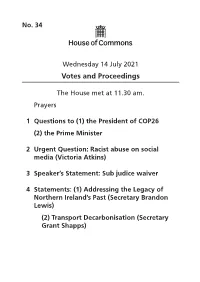

PDF File 0.03 MB

No. 34 Wednesday 14 July 2021 Votes and Proceedings The House met at 11.30 am. Prayers 1 Questions to (1) the President of COP26 (2) the Prime Minister 2 Urgent Question: Racist abuse on social media (Victoria Atkins) 3 Speaker’s Statement: Sub judice waiver 4 Statements: (1) Addressing the Legacy of Northern Ireland’s Past (Secretary Brandon Lewis) (2) Transport Decarbonisation (Secretary Grant Shapps) 5 Planning and Local Representation: Motion for leave to bring in a Bill (Standing Order No. 23) Motion made and Question put, That leave be given to bring in a Bill to give people who have made representations about development plans the right to participate in associated examination hearings; to require public consultation on development proposals; to grant local authorities power to apply local design standards for permitted development and to refuse permitted development proposals that would be detrimental to the health and wellbeing of an individual or community; to make planning permission for major housing schemes subject to associated works starting within two years; and for connected purposes.—(Rachel Hopkins.) The House divided. Division No. 54 Ayes: 219 (Tellers: Taiwo Owatemi, Mary Glindon) Noes: 0 (Tellers: Marie Rimmer, Gill Furniss) Question accordingly agreed to. Ordered, That Rachel Hopkins, Andrew Gwynne, Kim Johnson, Debbie Abrahams, Navendu Mishra, Liz Twist, Rachael Maskell, Kate Osborne, Ian Byrne, Sarah Owen, Barbara Keeley and Peter Dowd present the Bill. Rachel Hopkins accordingly presented the Bill. Bill read the first time; to be read a second time on Friday 22 October, and to be printed (Bill 147). -

Whole Day Download the Hansard

Monday Volume 687 18 January 2021 No. 161 HOUSE OF COMMONS OFFICIAL REPORT PARLIAMENTARY DEBATES (HANSARD) Monday 18 January 2021 © Parliamentary Copyright House of Commons 2021 This publication may be reproduced under the terms of the Open Parliament licence, which is published at www.parliament.uk/site-information/copyright/. 601 18 JANUARY 2021 602 House of Commons Monday 18 January 2021 The House met at half-past Two o’clock PRAYERS [MR SPEAKER in the Chair] Virtual participation in proceedings commenced (Orders, 4 June and 30 December 2020). [NB: [V] denotes a Member participating virtually.] Oral Answers to Questions David Linden [V]: Under the Horizon 2020 programme, the UK consistently received more money out than it put in. Under the terms of this agreement, the UK is set to receive no more than it contributes. While universities in Scotland were relieved to see a commitment to Horizon Europe in the joint agreement, what additional funding will the Secretary of State make available to ensure that our overall level of research funding is maintained? Gavin Williamson: As the hon. Gentleman will be aware, the Government have been very clear in our commitment to research. The Prime Minister has stated time and time again that our investment in research is absolutely there, ensuring that we deliver Britain as a global scientific superpower. That is why more money has been going into research, and universities will continue to play an incredibly important role in that, but as he will be aware, the Department for Business, Energy and Industrial Strategy manages the research element that goes into the funding of universities. -

UK Parliamentary Select Committees 2020 a Cicero/AMO Analysis Cicero/AMO / March 2020

/ UK Parliamentary Select Committees 2020 A Cicero/AMO Analysis Cicero/AMO / March 2020 / Cicero/AMO / 1 / / Contents Foreword 3 Treasury Select Committee 4 Business, Energy and Industrial Strategy Committee 6 Committee on the Future Relationship with the European Union 8 International Trade Committee 10 Home Affairs Committee 12 Transport Committee 14 Environmental Audit Committee 16 About Cicero/AMO 19 Foreword After December’s General Election, the House of Commons Select Committees have now been reconstituted. Cicero/AMO is pleased to share with you our analysis of the key Select Committees, including a look at their Chairs, members, the ‘ones to watch’ and their likely priorities. Select Committees – made up of backbench MPs – are charged with scrutinising Government departments and specific policy areas. They have become an increasingly important part of the parliamentary infrastructure, and never more so than in the last Parliament, where the lack of Government majority and party splits over Brexit allowed Select Committees to provide an authoritative form of Government scrutiny. However, this new Parliament looks very different. The large majority afforded to Boris Johnson in the election and the resulting Labour leadership contest give rise to a number of questions over Select Committee influence. Will the Government take Select Committee recommendations seriously as they form policy, or – without the need to keep every backbencher on side - will they feel at liberty to disregard the input of Committees? Will the Labour Party regroup when a new Leader is in place and provide a more effective Opposition or will a long period of navel-gazing leave space for Select Committees to fill this void? While Select Committees’ ability to effectively keep Government in check remains unclear, they will still be able to influence the media narrative around their chosen areas of inquiry. -

NOTICE of POLL Election of a Borough Councillor

NOTICE OF POLL High Peak Election of a Borough Councillor for Barms Notice is hereby given that: 1. A poll for the election of a Borough Councillor for Barms will be held on Thursday 2 May 2019, between the hours of 7:00 am and 10:00 pm. 2. The number of Borough Councillors to be elected is one. 3. The names, home addresses and descriptions of the Candidates remaining validly nominated for election and the names of all persons signing the Candidates nomination paper are as follows: Names of Signatories Name of Candidate Home Address Description (if any) Proposers(+), Seconders(++) & Assentors BROOKE (Address in High Peak) The Conservative Party Christopher J Seddon Sandra M J Seddon Seb Candidate (+) (++) Faye Warren Alec H R Warren Patricia A Barnsley Elaine J Bonsell Roger Parker Elizabeth J Hill Robert L Mosley Edward A Hill MAYERS Flat 6, 2 Crescent The Green Party Rennie F. Leech (+) Francesca J Gregory Daniel David View, Hall Bank, Clare M Foster (++) Buxton, Derbyshire, Zoie-Echo Campbell Stacey N Mayer SK17 6EN Lenora Kaye Allan Smith Deborah R Walker John G. Walker Rachael Hodgkinson QUINN 45 Nunsfield Road, Labour Party Lisa J. Donnelly (+) David S. Donnelly (++) Rachael Buxton, Derbyshire, Collette Solibun Matthew A Sale SK17 7BW Pamela J Smart David J Jones Natasha Braithwaite Anita A Harwood Isobel G R Harwood Martin S Quinn 4. The situation of Polling Stations and the description of persons entitled to vote there are as follows: Station Ranges of electoral register numbers of Situation of Polling Station Number persons entitled to vote thereat Fairfield Methodist Church, Off Fairfield Road, Buxton 1 BA1-1 to BA1-652 Fairfield Methodist Church, Off Fairfield Road, Buxton 2 BA2-1 to BA2-751 5. -

Daily Report Thursday, 1 April 2021 CONTENTS

Daily Report Thursday, 1 April 2021 This report shows written answers and statements provided on 1 April 2021 and the information is correct at the time of publication (03:32 P.M., 01 April 2021). For the latest information on written questions and answers, ministerial corrections, and written statements, please visit: http://www.parliament.uk/writtenanswers/ CONTENTS ANSWERS 10 Lime: EU Emissions Trading BUSINESS, ENERGY AND Scheme 18 INDUSTRIAL STRATEGY 10 Lime: UK Emissions Trading Africa: Research 10 Scheme 18 Bounce Back Loan Scheme 10 Members: Correspondence 19 Clothing: Manufacturing Overseas Workers: EU Industries 11 Countries 19 Companies: Human Rights 11 Products: Internet 20 Coronavirus: Research 12 Redundancy 20 Debt Relief Orders 12 Regional Planning and Development 21 Department for Business, Energy and Industrial Strategy: Restart Grant Scheme: West Iron and Steel 14 Yorkshire 21 Employment: Domestic Abuse 14 Tidal Power 21 Energy Supply 14 Weddings: Coronavirus 22 Energy Supply: Wakefield 15 Weddings: Females 22 Fossil Fuels: Exploration 15 CABINET OFFICE 23 Free Zones 16 10 Downing Street: Iron and Steel 23 Greensill: Coronavirus Large Business Interruption Loan Cabinet Office: Iron and Steel 23 Scheme 16 Caravan Sites and Holiday Hallmarking 17 Accommodation: Coronavirus 23 Hospitality Industry: Coronavirus: Death 24 Coronavirus 17 Coronavirus: Vaccination 25 Iron and Steel: Manufacturing Elections: Proof of Identity 25 Industries 17 Free Zones 25 Gender Based Violence: Ministry of Defence: Iron and Victim Support Schemes