Securing Arm Platform: from Software-Based to Hardware-Based Approaches


Recommended publications

Anurag Sharma | 1 © Vivekananda International Foundation Published in 2021 by Vivekananda International Foundation

Anurag Sharma | 1

© Vivekananda International Foundation. Published in 2021 by Vivekananda International Foundation, 3, San Martin Marg | Chanakyapuri | New Delhi - 110021. Tel: 011-24121764 | Fax: 011-66173415. E-mail: [email protected] Website: www.vifindia.org. Follow us on Twitter | @vifindia, Facebook | /vifindia.

All Rights Reserved. No part of this publication may be reproduced, stored in a retrieval system, or transmitted in any form, or by any means electronic, mechanical, photocopying, recording or otherwise without the prior permission of the publisher.

Anurag Sharma is a Research Associate at Vivekananda International Foundation (VIF). He completed an MPhil in Politics and International Relations on ‘International Security’ at Dublin City University in Ireland in 2018. His thesis is titled “The Islamic State Foreign Fighter Phenomenon and the Jihadi Threat to India”. Anurag’s main research interests are terrorism and the Internet, Cybersecurity, Countering Violent Extremism/Online (CVE), Radicalisation, Counter-terrorism and Foreign (Terrorist) Fighters. Prior to joining the Vivekananda International Foundation, Anurag was employed as a Research Assistant at the Institute for Conflict Management. Among his international affiliations, he is a Junior Researcher at TSAS (The Canadian Network for Research on Terrorism, Security, And Society) in Canada, and an Affiliate Member of the AVERT (Addressing Violent Extremism and Radicalisation to Terrorism) Research Network in Australia. Anurag Sharma has an MSc in Information Security and Computer Crime, with a major in Computer Forensics, from the University of Glamorgan (now University of South Wales) in the United Kingdom, an online certificate in ‘Terrorism and Counterterrorism’ from Leiden University in the Netherlands, and an online certificate in ‘Understanding Terrorism and the Terrorist Threat’ from the University of Maryland in the United States.

Prohibited Agreements with Huawei, ZTE Corp, Hytera, Hangzhou Hikvision, Dahua and Their Subsidiaries and Affiliates

Prohibited Agreements with Huawei, ZTE Corp, Hytera, Hangzhou Hikvision, Dahua and their Subsidiaries and Affiliates.

Code of Federal Regulations (CFR), 2 CFR 200.216, prohibits agreements for certain telecommunications and video surveillance services or equipment from the following companies as a substantial or essential component of any system or as critical technology as part of any system.

• Huawei Technologies Company;
• ZTE Corporation;
• Hytera Communications Corporation;
• Hangzhou Hikvision Digital Technology Company;
• Dahua Technology Company; or
• their subsidiaries or affiliates.

Entering into agreements with these companies, their subsidiaries or affiliates (listed below) for telecommunications equipment and/or services is prohibited, as doing so could place the university at risk of losing federal grants and contracts.

Identified subsidiaries/affiliates of Huawei Technologies Company
Source: Business databases, Huawei Investment & Holding Co., Ltd., 2017 Annual Report

• Amartus, SDN Software Technology and Team
• Beijing Huawei Digital Technologies, Co. Ltd.
• Caliopa NV
• Centre for Integrated Photonics Ltd.
• Chinasoft International Technology Services Ltd.
• FutureWei Technologies, Inc.
• HexaTier Ltd.
• HiSilicon Optoelectronics Co., Ltd.
• Huawei Device Co., Ltd.
• Huawei Device (Dongguan) Co., Ltd.
• Huawei Device (Hong Kong) Co., Ltd.
• Huawei Enterprise USA, Inc.
• Huawei Global Finance (UK) Ltd.
• Huawei International Co. Ltd.
• Huawei Machine Co., Ltd.
• Huawei Marine
• Huawei North America
• Huawei Software Technologies, Co., Ltd.
• Huawei Symantec Technologies Co., Ltd.
• Huawei Tech Investment Co., Ltd.
• Huawei Technical Service Co. Ltd.
• Huawei Technologies Cooperative U.A.
• Huawei Technologies Germany GmbH
• Huawei Technologies Japan K.K.
• Huawei Technologies South Africa Pty Ltd.
• Huawei Technologies (Thailand) Co.
• iSoftStone Technology Service Co., Ltd.
• JV “Broadband Solutions” LLC
• M4S N.V.
• Proven Honor Capital Limited
• PT Huawei Tech Investment
• Shanghai Huawei Technologies Co., Ltd.

Undersampled Pulse Width Modulation for Optical Camera Communications

Undersampled Pulse Width Modulation for Optical Camera Communications

Pengfei Luo1, Tong Jiang1, Paul Anthony Haigh2, Zabih Ghassemlooy3,3a, Stanislav Zvanovec4

1Research Department of HiSilicon, Huawei Technologies Co., Ltd, Beijing, China. E-mail: {oliver.luo, toni.jiang}@hisilicon.com
2Department of Electronic and Electrical Engineering, University College London, London, UK. Email: [email protected]
3Optical Communications Research Group, NCRLab, Faculty of Engineering and Environment, Northumbria University, Newcastle-upon-Tyne, UK
3aQIEM, Haixi Institutes, Chinese Academy of Sciences, Quanzhou, China. Email: [email protected]
4Department of Electromagnetic Field, Faculty of Electrical Engineering, Czech Technical University in Prague, 2 Technicka, 16627 Prague, Czech Republic. Email: [email protected]

Abstract: An undersampled pulse width modulation (UPWM) scheme is proposed to enable users to establish a non-flickering optical camera communications (OCC) link. With UPWM, only a digital light emitting diode (LED) driver is needed to send signals using a higher order modulation. Similar to other undersample-based modulation schemes for OCC, a dedicated preamble is required to assist the receiver to indicate the phase error introduced during the undersampling process, and to compensate for nonlinear distortion caused by the in-built gamma correction function of the camera.

According to the Nyquist sampling theorem, if these FRs are adopted for sampling, the transmitted symbol rate Rs must be lower than half the sampling rate. However, this will clearly lead to light flickering due to the response time of the human eye. Therefore, a number of techniques have been proposed to support non-flickering OCC using low speed cameras (e.g., ≤ 60 fps). More precisely, there are three main modulation categories for LFR-based OCC using both global shutter (GS) and rolling shutter (RS) digital cameras: i) display-based [3], ii)

Hi3519A V100 4K Smart IP Camera SoC Brief Data Sheet

Hi3519A V100 4K Smart IP Camera SoC Brief Data Sheet
Issue 02
Date 2018-06-20

Copyright © HiSilicon Technologies Co., Ltd. 2018. All rights reserved. No part of this document may be reproduced or transmitted in any form or by any means without prior written consent of HiSilicon Technologies Co., Ltd.

Trademarks and Permissions
HiSilicon icons are trademarks of HiSilicon Technologies Co., Ltd. All other trademarks and trade names mentioned in this document are the property of their respective holders.

Notice
The purchased products, services and features are stipulated by the contract made between HiSilicon and the customer. All or part of the products, services and features described in this document may not be within the purchase scope or the usage scope. Unless otherwise specified in the contract, all statements, information, and recommendations in this document are provided "AS IS" without warranties, guarantees or representations of any kind, either express or implied. The information in this document is subject to change without notice. Every effort has been made in the preparation of this document to ensure accuracy of the contents, but all statements, information, and recommendations in this document do not constitute a warranty of any kind, express or implied.

HiSilicon Technologies Co., Ltd.
Address: New R&D Center, Wuhe Road, Bantian, Longgang District, Shenzhen 518129 P. R. China
Website: http://www.hisilicon.com
Email: [email protected]

Introduction
Hi3519A V100 is a high-performance and low-power 4K Smart IP Camera SoC designed for IP cameras, action cameras, panoramic cameras, rear view mirrors, and UAVs.

Key Features
Low Power
• 1.9 W power consumption in a typical scenario for 4K x

Analyzing Android GNSS Raw Measurements Flags Detection

Analyzing Android GNSS Raw Measurements Flags Detection Mechanisms for Collaborative Positioning in Urban Environment

Thomas Verheyde, Antoine Blais, Christophe Macabiau, François-Xavier Marmet

To cite this version: Thomas Verheyde, Antoine Blais, Christophe Macabiau, François-Xavier Marmet. Analyzing Android GNSS Raw Measurements Flags Detection Mechanisms for Collaborative Positioning in Urban Environment. ICL-GNSS 2020 International Conference on Localization and GNSS, Jun 2020, Tampere, Finland. pp.1-6, 10.1109/ICL-GNSS49876.2020.9115564. hal-02870213

HAL Id: hal-02870213
https://hal-enac.archives-ouvertes.fr/hal-02870213
Submitted on 17 Jun 2020

HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers.

Hi3798M V200 Brief Data Sheet

Hi3798M V200 Brief Data Sheet

Key Specifications

Processor
• Multi-core 64-bit high-performance ARM Cortex A53
• Multi-core high-performance GPU

Memory Control Interfaces
• DDR3/4 interface
• eMMC/NOR/NAND flash interface

Video Decoding (HiVXE 2.0 Processing Engine)
• Maximum 4K x 2K@60 fps 10-bit decoding
• Multiple decoding formats, including H.265/HEVC, AVS, H.264/AVC MVC, MPEG-1/2/4, VC-1, and so on

Image Decoding
• Full HD JPEG and PNG hardware decoding

Video and Image Encoding
• 1080p@30 fps video encoding

Audio Encoding and Decoding
• Audio decoding in multiple formats
• Audio encoding in multiple formats

DVB Interface
• Multi-channel TS inputs and outputs

Security Processing
• Advanced CA and downloadable CA
• DRM
• Secure boot, secure storage, and secure upgrade

Graphics and Display Processing (Imprex 2.0 Processing Engine)
• Multiple HDR formats
• 3D video processing and display
• 2D graphics acceleration engine

Audio and Video Interfaces
• HDMI 2.0b output
• Analog video interface
• Digital and analog audio interfaces

Peripheral Interfaces
• GE and FE network ports
• Multiple USB ports
• SDIO, UART, SCI, IR, KeyLED, and I2C interfaces

Others
• Ultra-low-power design with less than 30 mW standby power consumption
• BGA package

Solution
• Is targeted for the DVB/Hybrid STB market.
• Supports full 4K decoding.
• Supports Linux, Android, and TVOS intelligent operating systems.
• Complies with the broadcasting television-level picture quality standards.
• Meets the increasing value-added service requirements.

Copyright © HiSilicon (Shanghai) Technologies

Hi3559A V100 Ultra-HD Mobile Camera SoC Brief Data Sheet

Hi3559A V100 Ultra-HD Mobile Camera SoC Brief Data Sheet
Issue 01
Date 2017-10-30

Copyright © HiSilicon Technologies Co., Ltd. 2017. All rights reserved. No part of this document may be reproduced or transmitted in any form or by any means without prior written consent of HiSilicon Technologies Co., Ltd.

Trademarks and Permissions
HiSilicon icons are trademarks of HiSilicon Technologies Co., Ltd. All other trademarks and trade names mentioned in this document are the property of their respective holders.

Notice
The purchased products, services and features are stipulated by the contract made between HiSilicon and the customer. All or part of the products, services and features described in this document may not be within the purchase scope or the usage scope. Unless otherwise specified in the contract, all statements, information, and recommendations in this document are provided "AS IS" without warranties, guarantees or representations of any kind, either express or implied. The information in this document is subject to change without notice. Every effort has been made in the preparation of this document to ensure accuracy of the contents, but all statements, information, and recommendations in this document do not constitute a warranty of any kind, express or implied.

HiSilicon Technologies Co., Ltd.
Address: New R&D Center, Wuhe Road, Bantian, Longgang District, Shenzhen 518129 P. R. China
Website: http://www.hisilicon.com
Email: [email protected]

Introduction
Hi3559A V100 is a professional 8K ultra-HD mobile camera SoC. It supports 8K30/4K120 digital video recording with broadcast-level picture quality.

Key Features
Low Power Consumption
• Typical power consumption of 3 W in 8KP30 (7680 x

View Document

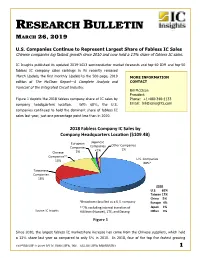

RESEARCH BULLETIN
MARCH 26, 2019

U.S. Companies Continue to Represent Largest Share of Fabless IC Sales

Chinese companies log fastest growth since 2010 and now hold a 13% share of fabless IC sales.

IC Insights published its updated 2019-2023 semiconductor market forecasts and top-40 IDM and top-50 fabless IC company sales rankings in its recently released March Update, the first monthly Update to the 500-page, 2019 edition of The McClean Report: A Complete Analysis and Forecast of the Integrated Circuit Industry.

MORE INFORMATION
CONTACT: Bill McClean, President
Phone: +1-480-348-1133
Email: [email protected]

Figure 1 depicts the 2018 fabless company share of IC sales by company headquarters location. With 68%, the U.S. companies continued to hold the dominant share of fabless IC sales last year, just one percentage point less than in 2010.

[Figure 1: 2018 fabless company share of IC sales by company headquarters location]

Since 2010, the largest fabless IC marketshare increase has come from the Chinese suppliers, which held a 13% share last year as compared to only 5% in 2010. In 2018, four of the top five fastest growing

COPYRIGHT © 2019 BY IC INSIGHTS, INC.

Hi3798C V200 Brief Data Sheet

Hi3798C V200 Brief Data Sheet

Key Specifications

• Dolby Digital/Dolby Digital Plus Decoder-Converter
• Dolby True HD decoding
• DTS HD and DTS M6 decoding
• Dolby Digital/DTS transparent transmission
• AAC-LC and HE AAC V1/V2 decoding
• APE, FLAC, Ogg, AMR-NB, and AMR-WB decoding
• G.711 (u/a) audio decoding
• Dolby MS12 decoding and audio effect
• G.711(u/a), AMR-NB, AMR-WB, and AAC-LC audio encoding
• HE-AAC transcoding DD (AC3)

High-Performance CPU
• Quad-core 64-bit high-performance ARM Cortex A53, 15000 DMIPS
• Integrated multimedia acceleration engine NEON
• Hardware Java acceleration
• Integrated hardware floating-point coprocessor

3D GPU
• High-performance multi-core GPU Mali T720
• OpenGL ES 3.1/3.0/2.0/1.1/1.0 OpenVG 1.1
• OpenCL 1.1/1.2 Full Profile/RenderScript
• Microsoft DirectX 11 FL9_3
• Adaptive scalable texture compression (ASTC)
• Pixel fill rate greater than 2.7 Gpixel/s

Memory Control Interfaces
• DDR3/3L/4 SDRAM interface, maximum 32-bit data width
• SPI NOR flash interface
• SPI NAND flash interface
• NAND flash interface
  − SLC/MLC flash memory
  − Maximum 64-bit error-correcting code (ECC)
• eMMC flash interface

TS Demultiplexing/PVR
• Maximum six TS inputs (optional)
• Two TS outputs (multiplexed with two TS inputs)
• DVB-CSA/AES/DES descrambling
• Recording of scrambled and non-scrambled streams

Security Processing
• Trusted execution environment (TEE)
• Secure video path (SVP)
• Secure boot
• Secure storage
• Secure upgrade
• Protection for JTAG and other

Video Decoding (HiVXE 2.0 Processing Engine)

Handset ODM Industry White Paper

Publication date: April 2020
Authors: Robin Li, Lingling Peng

Handset ODM Industry White Paper
Smartphone ODM market continues to grow, duopoly Wingtech and Huaqin accelerate diversified layout

Brought to you by Informa Tech

Contents
• Handset ODM market review and outlook
• Global smartphone market continued to decline in 2019
• In the initial stage of 5G, China will continue to decline
• Outsourcing strategies of the top 10 OEMs
• ODM market structure and business model analysis
• The top five mobile phone ODMs
• Analysis of the top five ODMs
• Appendix

© 2020 Omdia. All rights reserved. Unauthorized reproduction prohibited.

Handset ODM market review and outlook

In 2019, the global smartphone market shipped 1.38 billion units, down 2.2% year-over-year (YoY). The mature markets such as North America, South America, Western Europe, and China all declined. China’s market though is going through a transition period from 4G to 5G, and the shipments of mid- to high-end 4G smartphone models fell sharply in 2H19. China’s market shipped 361 million smartphones in 2019, a YoY decline of 7.6%. In the early stage of 5G switching, the operator's network coverage was insufficient. Consequently, 5G chipset restrictions led to excessive costs, and expectations of 5G led to short-term consumption suppression. The proportion of 5G smartphone shipments was relatively small while shipments of mid- to high-end 4G models declined sharply. The overall shipment of smartphones from Chinese mobile phone manufacturers reached 733 million units, an increase of 4.2% YoY.

Global Network Investment Competition Fudan University Supreme Pole ‐ Allwinner Technology

Global Network Investment Competition
Fudan University
Supreme Pole - Allwinner Technology
Date: 31.10.2017
Fan Jiang, Jianbin Gu, Qianrong Lu, Shijie Dong, Zheng Xu, Chunhua Xu

Allwinner Technology - Sail Again

We initiate coverage on Allwinner Technology with a strong BUY rating. The target price, derived by DCF, is CNY ¥ 35.91, indicating 30.1% upside potential.

• Price: CNY ¥ 27.60
• Price Target: CNY ¥ 35.91
• Upside Potential: 30.1%
• Target Period: 1 Year
• 52 week Low: CNY ¥ 23.4
• 52 week High: CNY ¥ 54.02
• Average Volume: CNY ¥ 190.28 M
• Market Cap: CNY ¥ 96.08 B
• P/E: 64

[Figure: Price Performance]

We recommend based on:
• Broad prospects of AI.
• Support from industry policy.
• Allwinner has finished its transition.
• The rise of various new products will put the margins back in the black.
• The current valuation, 64.x P/E, is lower than that of competitors such as Ingenic, which is trading at more than 100, and Nationz, which is trading at 76.x P/E.

Overview for Allwinner

Allwinner Technology, founded in 2007, is a leading fabless design company dedicated to smart application processor SoCs and smart analog ICs. Its product line includes multi-core application processors for smart devices and smart power management ICs used worldwide. These two categories of products are applied to various types of intelligent terminals in 3 major business lines:

• Consumer Electronics: Robot, Smart Hardware Open Platform, Tablets, Video Theater Device, E-Reader, Video Story Machine, Action Camera, VR
• Home Entertainment: OTT Box, Karaoke Machine, IPC monitoring
• Connected Automotive Applications: Dash Cams, Smart Rear-view Mirror, In Car Entertainment

THE PROSPECT OF AI

AI (Artificial Intelligence) is attracting growing global attention and is entering its third golden period of development.

Huawei Mate 20 LTE 128GB 4GB RAM

Mobileshop, s.r.o.
https://www.mobileshop.eu
Email: [email protected]
Phone: +421233329584

Huawei Mate 20 LTE 128GB 4GB RAM
Price: 241.00 €
https://www.mobileshop.eu/huawei/mobile-phones/mate-20-lte-128gb-4gb-ram/

Network
• Technology: GSM / HSPA / LTE
• 2G bands: GSM 850 / 900 / 1800 / 1900
• 3G bands: HSDPA 800 / 850 / 900 / 1700(AWS) / 1900 / 2100
• 4G bands: LTE band 1(2100), 2(1900), 3(1800), 4(1700/2100), 5(850), 6(900), 7(2600), 8(900), 9(1800), 12(700), 17(700), 18(800), 19(800), 20(800), 26(850), 28(700), 32(1500), 34(2000), 38(2600), 39(1900), 40(2300)
• Speed: HSPA 42.2/5.76 Mbps, LTE-A Cat21 1400/200 Mbps
• GPRS: Yes
• EDGE: Yes

Launch
• Announced: 2018, October
• Status: Available. Released 2018, October

Body
• Dimensions: 158.2 x 77.2 x 8.3 mm
• Weight: 188 g
• Build: Front/back glass & aluminum frame
• SIM: Nano-SIM
• IP53 dust and splash protection

Display
• Type: IPS LCD capacitive touchscreen, 16M colors
• Size: 6.53 inches, 107.5 cm2 (~88.0% screen-to-body ratio)
• Resolution: 1080 x 2244 pixels, 18.7:9 ratio (~381 ppi density)
• Multitouch: Yes
• Protection: Corning Gorilla Glass (unspecified version)
• DCI-P3, HDR10, EMUI 9.0

Platform
• OS: Android 9.0 (Pie)
• Chipset: HiSilicon Kirin 980
• CPU: Octa-core (2x2.6 GHz Cortex-A76 & 2x1.92 GHz Cortex-A76 & 4x1.8 GHz Cortex-A55)
• GPU: Mali-G76 MP10

Memory
• Card slot: NM (Nano Memory), up to 256GB (uses SIM 2)
• Internal: 128 GB, 4 GB RAM

Sound
• Alert types: Vibration; MP3, WAV ringtones
• Loudspeaker: Yes, with stereo speakers
• 3.5mm jack: Yes
• 32-bit/384kHz audio, Active noise cancellation with dedicated mic, N/A

WLAN: Wi-Fi