Assessment of Options for Handling Full Unicode in Character Encodings in MARC 21

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Sig Process Book

SIG: A Revival of Rudolf Koch’s Wallau. Ethan Cohen, Type & Media 2018–19. Glyph specimen: A Æ B C D E F G H I J IJ K L M N O Ø Œ P Þ Q R S T U V W X Y Z А Б В Г Ґ Д Е Ж З И К Л М Н О П Р С Т У Ф Х Ч Ц Ш Щ Џ Ь Ъ Ы Љ Њ Ѕ Є Э І Ј Ћ Ю Я Ђ Α Β Γ Δ. Submitted as part of Paul van der Laan’s Revival class for the Master of Arts in Type & Media course at Koninklijke Academie van Beeldende Kunsten (Royal Academy of Art, The Hague). “I feel such a closeness to William Morris that I always have the feeling that he cannot be an Englishman, he must be a German.” (Rudolf Koch) INTRODUCTION Project Overview Sig is a revival of Rudolf Koch’s Wallau Halbfette. My primary source material was the Klingspor Kalender für das Jahr 1933 (Klingspor Calendar for the Year 1933), a 17.5 × 9.6 cm book set in various cuts of Wallau. The Klingspor Kalender was an annual promotional keepsake printed by the Klingspor Type Foundry in Offenbach am Main that featured different Klingspor typefaces every year. This edition has a daily calendar set in Magere Wallau (Wallau Light) and an 18-page collection of fables set in 9 pt Wallau Halbfette (Wallau Semibold) with woodcut illustrations by Willi Harwerth, who worked as a draftsman at the Klingspor Type Foundry. -

IBM Db2 High Performance Unload for Z/OS User's Guide

5.1 IBM Db2 High Performance Unload for z/OS User's Guide IBM SC19-3777-03 Note: Before using this information and the product it supports, read the "Notices" topic at the end of this information. Subsequent editions of this PDF will not be delivered in IBM Publications Center. Always download the latest edition from the Db2 Tools Product Documentation page. This edition applies to Version 5 Release 1 of Db2 High Performance Unload for z/OS (product number 5655-AA1) and to all subsequent releases and modifications until otherwise indicated in new editions. © Copyright IBM® Corporation 1999, 2021; Copyright Infotel 1999, 2021. All Rights Reserved. US Government Users Restricted Rights – Use, duplication or disclosure restricted by GSA ADP Schedule Contract with IBM Corp. Contents: About this information; Chapter 1. Db2 High Performance Unload overview; What does Db2 HPU do?; Db2 HPU benefits; Db2 HPU process and components -

International Standard Iso/Iec 10646

INTERNATIONAL STANDARD ISO/IEC 10646 Sixth edition 2020-12 Information technology — Universal coded character set (UCS) Technologies de l'information — Jeu universel de caractères codés (JUC) Reference number ISO/IEC 10646:2020(E) © ISO/IEC 2020 CONTENTS: 1 Scope; 2 Normative references; 3 Terms and definitions; 4 Conformance; 4.1 General; 4.2 Conformance of information interchange; 4.3 Conformance of devices; 5 Electronic data attachments; 6 General structure -

Package Mathfont V. 1.6 User Guide Conrad Kosowsky December 2019 [email protected]

Package mathfont v. 1.6 User Guide Conrad Kosowsky December 2019 [email protected] For easy, off-the-shelf use, type the following in your document preamble and compile using XeLaTeX or LuaLaTeX: \usepackage[⟨font name⟩]{mathfont} Abstract The mathfont package provides a flexible interface for changing the font of math-mode characters. The package allows the user to specify a default unicode font for each of six basic classes of Latin and Greek characters, and it provides additional support for unicode math and alphanumeric symbols, including punctuation. Crucially, mathfont is compatible with both XeLaTeX and LuaLaTeX, and it provides several font-loading commands that allow the user to change fonts locally or for individual characters within math mode. Handling fonts in TeX and LaTeX is a notoriously difficult task. Donald Knuth originally designed TeX to support fonts created with Metafont, and while subsequent versions of TeX extended this functionality to postscript fonts, Plain TeX's font-loading capabilities remain limited. Many, if not most, LaTeX users are unfamiliar with the fd files that must be used in font declaration, and the minutiae of TeX's \font primitive can be esoteric and confusing. LaTeX2ε's New Font Selection System (NFSS) implemented a straightforward syntax for loading and managing fonts, but LaTeX macros overlaying a TeX core face the same versatility issues as Plain TeX itself. Fonts in math mode present a double challenge: after loading a font either in Plain TeX or through the NFSS, defining math symbols can be unintuitive for users who are unfamiliar with TeX's \mathcode primitive. -

Assessment of Options for Handling Full Unicode Character Encodings in MARC21 a Study for the Library of Congress

1 Assessment of Options for Handling Full Unicode Character Encodings in MARC21 A Study for the Library of Congress Part 1: New Scripts Jack Cain Senior Consultant Trylus Computing, Toronto 1 Purpose This assessment intends to study the issues and make recommendations on the possible expansion of the character set repertoire for bibliographic records in MARC21 format. 1.1 “Encoding Scheme” vs. “Repertoire” An encoding scheme contains codes by which characters are represented in computer memory. These codes are organized according to a certain methodology called an encoding scheme. The list of all characters so encoded is referred to as the “repertoire” of characters in the given encoding schemes. For example, ASCII is one encoding scheme, perhaps the one best known to the average non-technical person in North America. “A”, “B”, & “C” are three characters in the repertoire of this encoding scheme. These three characters are assigned encodings 41, 42 & 43 in ASCII (expressed here in hexadecimal). 1.2 MARC8 "MARC8" is the term commonly used to refer both to the encoding scheme and its repertoire as used in MARC records up to 1998. The ‘8’ refers to the fact that, unlike Unicode which is a multi-byte per character code set, the MARC8 encoding scheme is principally made up of multiple one byte tables in which each character is encoded using a single 8 bit byte. (It also includes the EACC set which actually uses fixed length 3 bytes per character.) (For details on MARC8 and its specifications see: http://www.loc.gov/marc/.) MARC8 was introduced around 1968 and was initially limited to essentially Latin script only. -
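The distinction between repertoire and encoding scheme drawn in this excerpt can be illustrated with a short Python sketch. It is not part of the study: the helper name `hex_codes` is hypothetical, and the Cyrillic letter is chosen here only to show the multi-byte behaviour of one Unicode encoding form (UTF-8), which the excerpt mentions in general terms.

```python
# Illustrative sketch only; hex_codes is a hypothetical helper, not from the study.

# The repertoire: the characters themselves, independent of any byte values.
repertoire = ["A", "B", "C"]


def hex_codes(text, encoding):
    """Return the bytes of `text` under `encoding` as space-separated hex."""
    return " ".join(f"{b:02X}" for b in text.encode(encoding))


# The encoding scheme: ASCII assigns one code to each character in the repertoire.
ascii_table = {ch: hex_codes(ch, "ascii") for ch in repertoire}
print(ascii_table)  # {'A': '41', 'B': '42', 'C': '43'}

# Assumption for illustration: UTF-8 as the Unicode encoding form.
# CYRILLIC CAPITAL LETTER ZHE (U+0416) needs two bytes, unlike a one-byte table.
cyrillic_utf8 = hex_codes("\u0416", "utf-8")
print(cyrillic_utf8)  # D0 96
```

The same character list could be paired with a different table of codes, which is why the excerpt treats the repertoire and the encoding scheme as separate questions.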

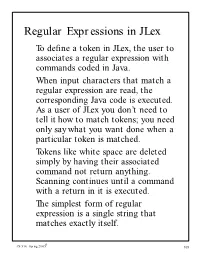

Regular Expressions in JLex: To Define a Token in JLex, the User Associates a Regular Expression with Commands Coded in Java

Regular Expressions in JLex To define a token in JLex, the user associates a regular expression with commands coded in Java. When input characters that match a regular expression are read, the corresponding Java code is executed. As a user of JLex you don’t need to tell it how to match tokens; you need only say what you want done when a particular token is matched. Tokens like white space are deleted simply by having their associated command not return anything. Scanning continues until a command with a return in it is executed. The simplest form of regular expression is a single string that matches exactly itself. © CS 536 Spring 2005 For example, if {return new Token(sym.If);} If you wish, you can quote the string representing the reserved word ("if"), but since the string contains no delimiters or operators, quoting it is unnecessary. For a regular expression operator, like +, quoting is necessary: "+" {return new Token(sym.Plus);} Character Classes Our specification of the reserved word if, as shown earlier, is incomplete. We don’t (yet) handle upper or mixed case. To extend our definition, we’ll use a very useful feature of Lex and JLex: character classes. Characters often naturally fall into classes, with all characters in a class treated identically in a token definition. In our definition of identifiers all letters form a class since any of them can be used to form an identifier. Similarly, in a number, any of the ten digit characters can be used. Character classes are delimited by [ and ]; individual characters are listed without any quotation or separators. -

Rank of Weighted Digraphs with Blocks

Rank of weighted digraphs with blocks Ranveer Singh, Swarup Kumar Panda, Naomi Shaked-Monderer, Abraham Berman October 10, 2018 Abstract Let G be a digraph and r(G) be its rank. Many interesting results on the rank of an undirected graph appear in the literature, but not much information about the rank of a digraph is available. In this article, we study the rank of a digraph using the ranks of its blocks. In particular, we define classes of digraphs, namely r2-digraph, and r0-digraph, for which the rank can be exactly determined in terms of the ranks of subdigraphs of the blocks. Furthermore, the rank of directed trees, simple biblock graphs, and some simple block graphs are studied. Keywords: r2-digraphs, r0-digraphs, tree digraphs, block graphs, biblock graphs AMS Subject Classifications. 15A15, 15A18, 05C50 1 Introduction A well-known problem, proposed in 1957 by Collatz and Sinogowitz, is to characterize graphs with positive nullity [19]. Nullity of graphs is applicable in various branches of science, in particular, quantum chemistry, Hückel molecular orbital theory [10], [13] and social network theory [14]. For more detail on the applications see [10]. Many significant results on the nullity of undirected graphs are available in the literature, see [4, 7, 11, 12, 15, 12, 2, 3, 20]. Recently in [9, 8, 6] nullity of undirected graphs was studied using cut-vertices and connected components. The results are used to calculate the nullity of line graphs of undirected graphs. Apart from the connected components, it turns out that the blocks are also interesting subgraphs of a graph, which can be utilized to know its determinant [17, 16]. -

31 Vowel Digraph Oo

Sort 31: Vowel Digraph oo. Objectives • To identify spelling patterns of vowel digraph oo • To read, sort, and write words with vowel digraph oo. Words: ŏo: brook, crook, foot, hood, hook, nook, soot, stood, wood, wool; ōo = ū: fool, groom, hoop, noon, root, spool, spoon, stool, tool, troop; Oddball: could, should, would. Materials for Within Word Pattern: Big Book of Rhymes, “The Puppet Show,” page 55; Whiteboard Activities DVD-ROM, Sort 31; Teacher Resource CD-ROM, Sort 31 and Follow the Dragon Game; Student Book, pages 121–124; Words Their Way Library, The House That Stood on Booker Hill. Introduce/Model (Small Groups) • Read a Rhyme Read “The Puppet Show,” and emphasize words with the vowel digraph oo (wood, book, took; soon, noon). Ask students to locate these words, and help them write the words in two columns, according to vowel sound. Help students hear the different pronunciations of the vowel digraph oo. • Model Use the whiteboard DVD or the CD word cards. Define in context any that may be unfamiliar to students. Demonstrate how to sort the words according to the sound of the digraph oo. Point out that would, could, and should do not contain the digraph oo, so they belong in the oddball category. Extend the Sort Alternative Sort: Brainstorming Ask students to think of other words that contain oo. Write their responses on index cards. When students have completed brainstorming, ask them to identify and sort all the words they named according to the vowel sound of oo. ELL English Language Learners Explain that a nook is “a hidden place,” and that groom can have several meanings, including “a -

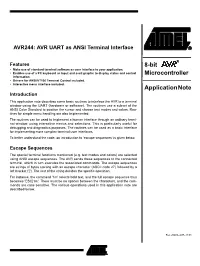

AVR244 AVR UART As ANSI Terminal Interface

AVR244: AVR UART as ANSI Terminal Interface (8-bit Microcontroller Application Note) Features • Make use of standard terminal software as user interface to your application. • Enables use of a PC keyboard as input and ASCII graphics to display status and control information. • Drivers for ANSI/VT100 Terminal Control included. • Interactive menu interface included. Introduction This application note describes some basic routines to interface the AVR to a terminal window using the UART (hardware or software). The routines use a subset of the ANSI Color Standard to position the cursor and choose text modes and colors. Routines for simple menu handling are also implemented. The routines can be used to implement a human interface through an ordinary terminal window, using interactive menus and selections. This is particularly useful for debugging and diagnostics purposes. The routines can be used as a basic interface for implementing more complex terminal user interfaces. To better understand the code, an introduction to ‘escape sequences’ is given below. Escape Sequences The special terminal functions mentioned (e.g. text modes and colors) are selected using ANSI escape sequences. The AVR sends these sequences to the connected terminal, which in turn executes the associated commands. The escape sequences are strings of bytes starting with an escape character (ASCII code 27) followed by a left bracket ('['). The rest of the string decides the specific operation. For instance, the command '1m' selects bold text, and the full escape sequence thus becomes 'ESC[1m'. There must be no spaces between the characters, and the commands are case sensitive. The various operations used in this application note are described below. -
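How an escape sequence such as 'ESC[1m' is assembled can be sketched in a few lines of Python. This is an illustration written for a PC terminal rather than the application note's AVR routines; the helper name `escape_sequence` is hypothetical, and the '0m' command used to reset attributes is an assumption drawn from common ANSI terminal behaviour, not from the excerpt.

```python
# Illustrative sketch only; not the application note's AVR code.
ESC = chr(27)  # the escape character, ASCII code 27


def escape_sequence(command):
    """Build ESC + '[' + command, with no spaces between the characters."""
    return f"{ESC}[{command}"


bold = escape_sequence("1m")   # '1m' selects bold text, as in the excerpt
reset = escape_sequence("0m")  # assumption: '0m' resets attributes on ANSI terminals

# On an ANSI/VT100-compatible terminal the middle words should appear in bold.
print(f"normal {bold}bold text{reset} normal")
```

Because the commands are case sensitive, passing "1M" instead of "1m" would produce a different sequence that a terminal would not interpret as the bold command.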

CJKV Unified Ideographs Extension C

22nd International Unicode Conference (IUC22) Unicode and the Web: Evolution or Revolution? September 9 - 13, 2002, San Jose, California http://www.unicode.org/iuc/iuc22/ CJKV Unified Ideographs Extension C Richard S. COOK Linguistics Department University of California, Berkeley [email protected] http://stedt.berkeley.edu/ 2002-09-18-10:31 INTRODUCTION This presentation is concerned with introducing the audience to some of the issues surrounding Ideographic Rapporteur Group (ISO/IEC JTC1/SC2/WG2/IRG) work on “CJK Unified Ideographs Extension C” (Ext C), including the following: (1) The IRG methodology constraining glyph submissions for Ext C1 (why more Han characters and which?) (2) The method of preparing glyph submissions for the Unicode Technical Committee (UTC) (3) IRG member submissions for Ext C1, introducing some of the submitted glyphs, the print sources for the glyph submissions (4) The IRG process of submission evaluation (5) The impact of submitted glyphs on the “Han Variant” problem (see Cook, IUC-19) (6) Plans for Ext C2 UTC submissions BACKGROUND As many people already know, The Unicode Standard 3.2 is the best thing ever to happen to the digitization of Chinese texts. The immense work done to produce the CJKV part of this standard, undertaken by the Ideographic Rapporteur Group (IRG), has pushed CJKV computing to higher levels than many had ever thought possible. With the IRG’s creation of “Extension B”, 42,711 new characters were added to The Unicode Standard, so that it now encodes a total of 70,207 unique “ideographs”. The issue is somewhat complicated by things such as “compatibility characters which are not actually compatibility characters”. -

Geometry and Art LACMA | Evenings for Educators | April 5, 2011

Geometry and Art LACMA | Evenings for Educators | April 5, 2011 ALEXANDER CALDER (United States, 1898–1976) Hello Girls, 1964 Painted metal, mobile, overall: 275 x 288 in., Art Museum Council Fund (M.65.10) © Alexander Calder Estate/Artists Rights Society (ARS), New York/ADAGP, Paris GEOMETRY IS EVERYWHERE. WE CAN TRAIN OURSELVES TO FIND THE GEOMETRY in everyday objects and in works of art. Look carefully at the image above and identify the different lines, shapes, and forms of both Alexander Calder’s sculpture and the architecture of LACMA’s built environment. What is the proportion of the artwork to the buildings? What types of balance do you see? Following are images of artworks from LACMA’s collection. As you explore these works, look for the lines, seek the shapes, find the patterns, and exercise your problem-solving skills. Use or adapt the discussion questions to your students’ learning styles and abilities. Language of the Visual Arts and Geometry LINE, SHAPE, FORM, PATTERN, SYMMETRY, SCALE, AND PROPORTION ARE THE BUILDING blocks of both art and math. Geometry offers the most obvious connection between the two disciplines. Both art and math involve drawing and the use of shapes and forms, as well as an understanding of spatial concepts, two and three dimensions, measurement, estimation, and pattern. Many of these concepts are evident in an artwork’s composition, how the artist uses the elements of art and applies the principles of design. Problem-solving skills such as visualization and spatial reasoning are also important for artists and professionals in math, science, and technology. -

L2/14-274 Title: Proposed Math-Class Assignments for UTR #25

L2/14-274 Title: Proposed Math-Class Assignments for UTR #25 Revision 14 Source: Laurențiu Iancu and Murray Sargent III – Microsoft Corporation Status: Individual contribution Action: For consideration by the Unicode Technical Committee Date: 2014-10-24 1. Introduction Revision 13 of UTR #25 [UTR25], published in April 2012, corresponds to Unicode Version 6.1 [TUS61]. As of October 2014, Revision 14 is in preparation, to update UTR #25 and its data files to the character repertoire of Unicode Version 7.0 [TUS70]. This document compiles a list of characters proposed to be assigned mathematical classes in Revision 14 of UTR #25. In this document, the term math-class is being used to refer to the character classification in UTR #25. While functionally similar to a UCD character property, math-class is applicable only within the scope of UTR #25. Math-class is different from the UCD binary character property Math [UAX44]. The relation between Math and math-class is that the set of characters with the property Math=Yes is a proper subset of the set of characters assigned any math-class value in UTR #25. As of Revision 13, the set relation between Math and math-class is invalidated by the collection of Arabic mathematical alphabetic symbols in the range U+1EE00 – U+1EEFF. This is a known issue [14-052], already discussed by the UTC [138-C12 in 14-026]. Once those symbols are added to the UTR #25 data files, the set relation will be restored. This document proposes only UTR #25 math-class values, and not any UCD Math property values.