Full Virtualization on Low-End Hardware: a Case Study

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Create an Email with Subject Title “Embedded Software Engineer”, Email a Copy of Your Resume to [email protected]

To Apply for This Position: Create an email with subject title “Embedded Software Engineer”, email a copy of your resume to [email protected] Location Address: ALLEN PARK, MI,48101 Position Description: TITLE: Embedded Software Engineer ‐ Hypervisor OS technologies This position is responsible to develop QNX and Android operating system images for Ford infotainment products. This includes creating and integrating code for: bootloader, kernel, drivers, type 1 hypervisor, and build environment. Skills Required: • Lead the design, bring‐up and support of QNX and Android operating system images • Create virt‐io drivers for QNX or Android guest operating systems • Participate in root cause analysis of hardware quality problems and software defects • Participate in system design, documentation, and testing to deliver a best‐in‐class infotainment system Experience Required: • 5+ years operating system experience involving Linux or QNX • 5+ years C/C++ software development experience on embedded, mobile, or consumer electronic platforms Experience Preferred: • Experience with Type 1 hypervisors • Experience creating virt‐io drivers • Mastery of C/C++ language, GNU tool chain, and Unix (QNX, Linux, or equivalent) • Experience with embedded build systems including QNX system builder, buildroot, yocto, or equivalent • Knowledge of in‐vehicle signaling and communication mechanisms such as CAN • Proficiency with revision control including Git, Subversion, or equivalent • Multi‐site software project team experience Education Required: • Bachelor's degree in Computer Engineering, Electrical Engineering, Computer Science, or related Education Preferred: • Master's degree in Computer Engineering, Electrical Engineering or Computer Science Additional Information: Web Based Assessment not required for this position. Visa Sponsorship and Domestic Relocation is available for this position. -

Understanding Full Virtualization, Paravirtualization, and Hardware Assist

VMware Understanding Full Virtualization, Paravirtualization, and Hardware Assist Contents Introduction .................................................................................................................1 Overview of x86 Virtualization..................................................................................2 CPU Virtualization .......................................................................................................3 The Challenges of x86 Hardware Virtualization ...........................................................................................................3 Technique 1 - Full Virtualization using Binary Translation......................................................................................4 Technique 2 - OS Assisted Virtualization or Paravirtualization.............................................................................5 Technique 3 - Hardware Assisted Virtualization ..........................................................................................................6 Memory Virtualization................................................................................................6 Device and I/O Virtualization.....................................................................................7 Summarizing the Current State of x86 Virtualization Techniques......................8 Full Virtualization with Binary Translation is the Most Established Technology Today..........................8 Hardware Assist is the Future of Virtualization, but the Real Gains Have -

OS Selection for Dummies

OS SELECTION HOW TO CHOOSE HOW TO CHOOSE Choosing your OS is the first step, so take the time to consider your choice fully. There are many parameters to take into account: l Is this a new project or the evolution of an existing product? l Using the same SW stack? Re-using existing code? l Is your team familiar with a particular OS? Ø Using an OS you are already comfortable with can help l What are the HW constraints of your system? Ø Some operating systems require more memory/processing power than others l Have no SW team? Not sure about the above? Ø Contact us so we can help you decide! Ø We can also introduce you to one of our many partners! 1 OS SELECTION OPEN SOURCE VS. COMMERCIAL OS Embedded OS BSP Provider $ Cost Open-Source OS Boundary Devices • Embedded Linux / Android Embedded Linux $0, included • Large pool of developers available with Board Purchase • Strong community • Royalty-free And / or partners 3rd Party - Commercial OS Partners • QNX / Win10 IoT / Green Hills $>0, depends on • Professional support requirements • Unique set of development tools 2 OS SELECTION OPEN SOURCE SELECTION OS SELECTION PROS CONS Embedded Linux Most powerful / optimized Complexity for newcomers solution, maintained by NXP • Build systems Ø Yocto / Buildroot Simpler solution, makefile- Not as flexible as Yocto Ø Everything built from scratch based, maintained by BD Desktop-like approach, Harder to customize, non- Package-based distribution easy-to-use atomic updates, no cross- • Ubuntu / Debian compilation SDK Apt install / update, millions • Packages installed from server of prebuilt packages available Android Millions of apps available, same number of developers, Resource-hungry, complex • AOSP-based (no GMS) development environment, BSP modifications (HAL) • APK applications IDE + debugging tools 3 SOFTWARE PARTNERS Boundary Devices has an industry-leading group of software partners. -

A Lightweight Virtualization Layer with Hardware-Assistance for Embedded Systems

PONTIFICAL CATHOLIC UNIVERSITY OF RIO GRANDE DO SUL FACULTY OF INFORMATICS COMPUTER SCIENCE GRADUATE PROGRAM A LIGHTWEIGHT VIRTUALIZATION LAYER WITH HARDWARE-ASSISTANCE FOR EMBEDDED SYSTEMS CARLOS ROBERTO MORATELLI Dissertation submitted to the Pontifical Catholic University of Rio Grande do Sul in partial fullfillment of the requirements for the degree of Ph. D. in Computer Science. Advisor: Prof. Fabiano Hessel Porto Alegre 2016 To my family and friends. “I’m doing a (free) operating system (just a hobby, won’t be big and professional like gnu) for 386(486) AT clones.” (Linus Torvalds) ACKNOWLEDGMENTS I would like to express my sincere gratitude to those who helped me throughout all my Ph.D. years and made this dissertation possible. First of all, I would like to thank my advisor, Prof. Fabiano Passuelo Hessel, who has given me the opportunity to undertake a Ph.D. and provided me invaluable guidance and support in my Ph.D. and in my academic life in general. Thank you to all the Ph.D. committee members – Prof. Carlos Eduardo Pereira (dissertation proposal), Prof. Rodolfo Jardim de Azevedo, Prof. Rômulo Silva de Oliveira and Prof. Tiago Ferreto - for the time invested and for the valuable feedback provided.Thank you Dr. Luca Carloni and the other SLD team members at the Columbia University in the City of New York for receiving me and giving me the opportunity to work with them during my ‘sandwich’ research internship. Eu gostaria de agraceder minha esposa, Ana Claudia, por ter estado ao meu lado durante todo o meu período de doutorado. O seu apoio e compreensão foram e continuam sendo muito importantes para mim. -

Paravirtualization (PV)

Full and Para Virtualization Dr. Sanjay P. Ahuja, Ph.D. Fidelity National Financial Distinguished Professor of CIS School of Computing, UNF x86 Hardware Virtualization The x86 architecture offers four levels of privilege known as Ring 0, 1, 2 and 3 to operating systems and applications to manage access to the computer hardware. While user level applications typically run in Ring 3, the operating system needs to have direct access to the memory and hardware and must execute its privileged instructions in Ring 0. x86 privilege level architecture without virtualization Technique 1: Full Virtualization using Binary Translation This approach relies on binary translation to trap (into the VMM) and to virtualize certain sensitive and non-virtualizable instructions with new sequences of instructions that have the intended effect on the virtual hardware. Meanwhile, user level code is directly executed on the processor for high performance virtualization. Binary translation approach to x86 virtualization Full Virtualization using Binary Translation This combination of binary translation and direct execution provides Full Virtualization as the guest OS is completely decoupled from the underlying hardware by the virtualization layer. The guest OS is not aware it is being virtualized and requires no modification. The hypervisor translates all operating system instructions at run-time on the fly and caches the results for future use, while user level instructions run unmodified at native speed. VMware’s virtualization products such as VMWare ESXi and Microsoft Virtual Server are examples of full virtualization. Full Virtualization using Binary Translation The performance of full virtualization may not be ideal because it involves binary translation at run-time which is time consuming and can incur a large performance overhead. -

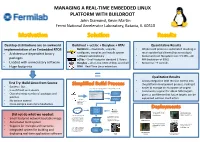

MANAGING a REAL-TIME EMBEDDED LINUX PLATFORM with BUILDROOT John Diamond, Kevin Martin Fermi National Accelerator Laboratory, Batavia, IL 60510

MANAGING A REAL-TIME EMBEDDED LINUX PLATFORM WITH BUILDROOT John Diamond, Kevin Martin Fermi National Accelerator Laboratory, Batavia, IL 60510 Desktop distributions are an awkward Buildroot + ucLibc + Busybox + RTAI Quantitative Results implementation of an Embedded RTOS Buildroot – downloads, unpacks, • Whole build process is automated resulting in • Architecture-dependent binary configures, compiles and installs system much quicker build times (hours not days) software automatically • Kernel and root filesystem size: 3.5 MB – 20 packages uClibc – Small-footprint standard C library MB (reduction of 99%) • Loaded with unnecessary software Busybox – all-in-one UNIX utilities and shell • Boot-time: ~9 seconds • Huge footprints RTAI – Real-Time Linux extensions = Qualitative Results • Allows integration with revision control into First Try: Build Linux from Source the platform development process, making it • Success! But.. 2. Buildroot’s menuconfig generates a package configuration file easier to manage an ecosystem of targets • Is as difficult as it sounds and kernel configuration file • Community support for x86 & ARM targets Linux Kernel • Overwhelming number of packages and Configuration gives us confidence that future targets can be patches Package supported without much effort 1. Developer Configuration • No version control configures build via Buildroot’s • Cross-compile even more headaches menuconfig Internet Build Process Power Supply Control Quench Protection Git / CVS / SVN and Regulation for the System for Tevatron Did not do what we needed: Fermilab Linac Electron Lens (TEL II) 3. The build process pulls 4. The output from the software packages from build process is a kernel • Small-footprint network bootable image the internet and custom bzImage bzImage file with an softare packages from a integrated root filesystem ARM Cortex A-9 source code repository file PC/104 AMD • Automated build system Geode SBC Beam Position Monitor Power Supply Control prototype for Fermilab and Regulation for • Support for multiple architectures 5. -

Linux Based Mobile Operating Systems

INSTITUTO SUPERIOR DE ENGENHARIA DE LISBOA Área Departamental de Engenharia de Electrónica e Telecomunicações e de Computadores Linux Based Mobile Operating Systems DIOGO SÉRGIO ESTEVES CARDOSO Licenciado Trabalho de projecto para obtenção do Grau de Mestre em Engenharia Informática e de Computadores Orientadores : Doutor Manuel Martins Barata Mestre Pedro Miguel Fernandes Sampaio Júri: Presidente: Doutor Fernando Manuel Gomes de Sousa Vogais: Doutor José Manuel Matos Ribeiro Fonseca Doutor Manuel Martins Barata Julho, 2015 INSTITUTO SUPERIOR DE ENGENHARIA DE LISBOA Área Departamental de Engenharia de Electrónica e Telecomunicações e de Computadores Linux Based Mobile Operating Systems DIOGO SÉRGIO ESTEVES CARDOSO Licenciado Trabalho de projecto para obtenção do Grau de Mestre em Engenharia Informática e de Computadores Orientadores : Doutor Manuel Martins Barata Mestre Pedro Miguel Fernandes Sampaio Júri: Presidente: Doutor Fernando Manuel Gomes de Sousa Vogais: Doutor José Manuel Matos Ribeiro Fonseca Doutor Manuel Martins Barata Julho, 2015 For Helena and Sérgio, Tomás and Sofia Acknowledgements I would like to thank: My parents and brother for the continuous support and being the drive force to my live. Sofia for the patience and understanding throughout this challenging period. Manuel Barata for all the guidance and patience. Edmundo Azevedo, Miguel Azevedo and Ana Correia for reviewing this document. Pedro Sampaio, for being my counselor and college, helping me on each step of the way. vii Abstract In the last fifteen years the mobile industry evolved from the Nokia 3310 that could store a hopping twenty-four phone records to an iPhone that literately can save a lifetime phone history. The mobile industry grew and thrown way most of the proprietary operating systems to converge their efforts in a selected few, such as Android, iOS and Windows Phone. -

Operating System Components for an Embedded Linux System

INSTITUTEFORREAL-TIMECOMPUTERSYSTEMS TECHNISCHEUNIVERSITATM¨ UNCHEN¨ PROFESSOR G. F ARBER¨ Operating System Components for an Embedded Linux System Martin Hintermann Studienarbeit ii Operating System Components for an Embedded Linux System Studienarbeit Executed at the Institute for Real-Time Computer Systems Technische Universitat¨ Munchen¨ Prof. Dr.-Ing. Georg Farber¨ Advisor: Prof.Dr.rer.nat.habil. Thomas Braunl¨ Author: Martin Hintermann Kirchberg 34 82069 Hohenschaftlarn¨ Submitted in February 2007 iii Acknowledgements At first, i would like to thank my supervisor Prof. Dr. Thomas Braunl¨ for giving me the opportunity to take part at a really interesting project. Many thanks to Thomas Sommer, my project partner, for his contribution to our good work. I also want to thank also Bernard Blackham for his assistance by email and phone at any time. In my opinion, it was a great cooperation of all persons taking part in this project. Abstract Embedded systems can be found in more and more devices. Linux as a free operating system is also becoming more and more important in embedded applications. Linux even replaces other operating systems in certain areas (e.g. mobile phones). This thesis deals with the employment of Linux in embedded systems. Various architectures of embedded systems are introduced and the characteristics of common operating systems for these devices are reviewed. The architecture of Linux is examined by looking at the particular components such as kernel, standard C libraries and POSIX tools for embedded systems. Furthermore, there is a survey of real-time extensions for the Linux kernel. The thesis also treats software development for embedded Linux ranging from the prerequi- sites for compiling software to the debugging of binaries. -

Building Meego with OBS an Introduction to the Meego Build Infrastructure

» The Linux Foundation Building MeeGo with OBS An Introduction to the MeeGo Build Infrastructure .................. By Rudolf Streif, The Linux Foundation June 2011 A Publication By The Linux Foundation Characters from the MeeGo Project (http://www.meego.com) http://www.linuxfoundation.org Background Building a Linux distribution from scratch can be a daunting task. Besides the obligatory Linux kernel itself with its myriad of configuration options, a working Linux distribution requires hundreds if not thousands of additional packages to form a viable platform for desktop, server, mobile or any other type of specialized applications. For embedded Linux solutions, the complexity scales with the need to support multiple and different processor architectures, hardware platforms with limited resources, requirements for cross toolchains, and more. The MeeGo project leverages the strengths of the Open Build Service (OBS) for distribution and application development. MeeGo has established a 6-months release cadence mounting two releases per year in April and October in addition to maintenance releases in-between. To support this, the MeeGo OBS environment builds bootable MeeGo images for the various MeeGo device categories and user experiences (Handset, Netbook, Tablet PC, In-vehicle Infotainment, and Smart TV) targeting multiple different hardware platforms for IA, ARM, etc. For application development, the MeeGo OBS allows developers to easily build their software packages utilizing the same infrastructure the MeeGo distributions were built with, validating dependencies and providing binary compliance. In this whitepaper, we will provide a brief introduction to the MeeGo build infrastructure and how it is utilized to support the MeeGo development process. Then we demonstrate the use of the OBS WebUI and the command-line client osc with step-by-step instructions. -

Instituto Tecnológico De Costa Rica Escuela De Ingenier´Ia En

Instituto Tecnol´ogicode Costa Rica Escuela de Ingenier´ıaen Electr´onica Improvement of small satellite's software design with build system and continuous integration tools para optar por el t´ıtulode Ingeniero en Electr´onicacon ´enfasisen sistemas empotrados con el grado acad´emicode Maestr´ıa Allan Granados [email protected] Cartago, Diciembre, 2015 2 Contents 1 Introduction 8 1.1 Previous work focus on small satellites . .9 1.2 Problem statement . 11 1.3 Proposed solution . 13 1.3.1 Proposed development . 13 2 Software development approaches for small satellites 15 2.1 Software methodologies used for satellites design . 15 2.2 Small satellite design and structure . 17 2.3 Central computation system in satellites. Homogeneous and Het- erogeneous systems . 18 2.4 Different approach on software development for small satellites . 20 2.4.1 Software development: Monolithic approach . 20 2.4.2 Software development: Development by component . 21 2.5 Open Source tools on the design and implementation of software satellite . 23 3 Integration of build system for small satellite missions 24 3.1 Build systems as an improvement on the design methodology . 24 3.1.1 Yocto build system . 29 4 Development platforms 32 4.1 Beagleboard XM . 32 4.2 Pandaboard . 35 4.3 Beaglebone . 38 5 Design and implementation of the construction system 41 5.1 Construction System . 41 5.1.1 The hardware independent layer: meta-tecSat . 42 5.1.2 The hardware dependent later: meta-tecSat-target . 43 5.1.3 Integration of the dependent and independent hardware layers in the construction system . 44 5.1.4 Adding a new recipe to a layer . -

Consideration About Enabling Hypervisor in Open-Source firmware

Consideration about enabling hypervisor in open-source firmware OSFC 2019 Piotr Król 1 / 18 About me Piotr Król Founder & Embedded Systems Consultant open-source firmware @pietrushnic platform security [email protected] trusted computing linkedin.com/in/krolpiotr facebook.com/piotr.krol.756859 OSFC 2019 CC BY 4.0 | Piotr Król 2 / 18 Agenda Introduction Terminology Hypervisors Bareflank Hypervisor as coreboot payload Demo Issues and further work OSFC 2019 CC BY 4.0 | Piotr Król 3 / 18 Introduction Goal create firmware that can start multiple application in isolated virtual environments directly from SPI flash Motivation to improve virtualization and hypervisor-fu to understand hardware capabilities and limitation in area of virtualization to satisfy market demand OSFC 2019 CC BY 4.0 | Piotr Król 4 / 18 Terminology Virtualization is the application of the layering principle through enforced modularity, whereby the exposed virtual resource is identical to the underlying physical resource being virtualized. layering - single abstraction with well-defined namespace enforced modularity - guarantee that client of the layer cannot bypass it and access abstracted resources examples: virtual memory, RAID VM is an abstraction of complete compute environment Hypervisor is a software that manages VMs VMM is a portion of hypervisor that handle CPU and memory virtualization Edouard Bugnion, Jason Nieh, Dan Tsafrir, Synthesis Lectures on Computer Architecture, Hard- ware and Software Support for Virtualization, 2017 OSFC 2019 CC BY 4.0 | Piotr Król 5 / -

Taxonomy of Virtualization Technologies Master’S Thesis

A taxonomy of virtualization technologies Master’s thesis Delft University of Technology Faculty of Systems Engineering Policy Analysis & Management August, 2010 Paulus Kampert 1175998 Taxonomy of virtualization technologies Preface This thesis has been written as part of the Master Thesis Project (spm5910). This is the final course from the master programme Systems Engineering, Policy Analysis & Management (SEPAM), educated by Delft University of Technology at the Faculty of Technology, Policy and Management. This thesis is the product of six months of intensive work in order to obtain the Master degree. This research project provides an overview of the different types of virtualization technologies and virtualization trends. A taxonomy model has been developed that demonstrates the various layers of a virtual server architecture and virtualization domains in a structured way. Special thanks go to my supervisors. My effort would be useless without the priceless support from them. First, I would like to thank my professor Jan van Berg for his advice, support, time and effort for pushing me in the right direction. I am truly grateful. I would also like to thank my first supervisor Sietse Overbeek for frequently directing and commenting on my research. His advice and feedback was very constructive. Many thanks go to my external supervisor Joris Haverkort for his tremendous support and efforts. He made me feel right at home at Atos Origin and helped me a lot through the course of this research project. Especially, the lectures on virtualization technologies and his positive feedback gave me a better understanding of virtualization. Also, thanks go to Jeroen Klompenhouwer (operations manager at Atos Origin), who introduced me to Joris Haverkort and gave me the opportunity to do my graduation project at Atos Origin.