Implementation of Distance Transformation Using Processing

Recommended publications

-

Issue 16, June 2019 -...CHESSPROBLEMS.CA

...CHESSPROBLEMS.CA Contents 1 Originals 746 . ISSUE 16 (JUNE 2019) 2019 Informal Tourney....... 746 Hors Concours............ 753 2 Articles 755 Andreas Thoma: Five Pendulum Retros with Proca Anticirce.. 755 Jeff Coakley & Andrey Frolkin: Multicoded Rebuses...... 757 Arno T¨ungler:Record Breakers VIII 766 Arno T¨ungler:Pin As Pin Can... 768 Arno T¨ungler: Circe Series Tasks & ChessProblems.ca TT9 ... 770 3 ChessProblems.ca TT10 785 4 Recently Honoured Canadian Compositions 786 5 My Favourite Series-Mover 800 6 Blast from the Past III: Checkmate 1902 805 7 Last Page 808 More Chess in the Sky....... 808 Editor: Cornel Pacurar Collaborators: Elke Rehder, . Adrian Storisteanu, Arno T¨ungler Originals: [email protected] Articles: [email protected] Chess drawing by Elke Rehder, 2017 Correspondence: [email protected] [ c Elke Rehder, http://www.elke-rehder.de. Reproduced with permission.] ISSN 2292-8324 ..... ChessProblems.ca Bulletin IIssue 16I ORIGINALS 2019 Informal Tourney T418 T421 Branko Koludrovi´c T419 T420 Udo Degener ChessProblems.ca's annual Informal Tourney Arno T¨ungler Paul R˘aican Paul R˘aican Mirko Degenkolbe is open for series-movers of any type and with ¥ any fairy conditions and pieces. Hors concours compositions (any genre) are also welcome! ! Send to: [email protected]. " # # ¡ 2019 Judge: Dinu Ioan Nicula (ROU) ¥ # 2019 Tourney Participants: ¥!¢¡¥£ 1. Alberto Armeni (ITA) 2. Rom´eoBedoni (FRA) C+ (2+2)ser-s%36 C+ (2+11)ser-!F97 C+ (8+2)ser-hsF73 C+ (12+8)ser-h#47 3. Udo Degener (DEU) Circe Circe Circe 4. Mirko Degenkolbe (DEU) White Minimummer Leffie 5. Chris J. Feather (GBR) 6. -

More About Checkmate

MORE ABOUT CHECKMATE The Queen is the best piece of all for getting checkmate because it is so powerful and controls so many squares on the board. There are very many ways of getting CHECKMATE with a Queen. Let's have a look at some of them, and also some STALEMATE positions you must learn to avoid. You've already seen how a Rook can get CHECKMATE XABCDEFGHY with the help of a King. Put the Black King on the side of the 8-+k+-wQ-+( 7+-+-+-+-' board, the White King two squares away towards the 6-+K+-+-+& middle, and a Rook or a Queen on any safe square on the 5+-+-+-+-% same side of the board as the King will give CHECKMATE. 4-+-+-+-+$ In the first diagram the White Queen checks the Black King 3+-+-+-+-# while the White King, two squares away, stops the Black 2-+-+-+-+" King from escaping to b7, c7 or d7. If you move the Black 1+-+-+-+-! King to d8 it's still CHECKMATE: the Queen stops the Black xabcdefghy King moving to e7. But if you move the Black King to b8 is CHECKMATE! that CHECKMATE? No: the King can escape to a7. We call this sort of CHECKMATE the GUILLOTINE. The Queen comes down like a knife to chop off the Black King's head. But there's another sort of CHECKMATE that you can ABCDEFGH do with a King and Queen. We call this one the KISS OF 8-+k+-+-+( DEATH. Put the Black King on the side of the board, 7+-wQ-+-+-' the White Queen on the next square towards the middle 6-+K+-+-+& and the White King where it defends the Queen and you 5+-+-+-+-% 4-+-+-+-+$ get something like our next diagram. -

Caissa Nieuws

rvd v CAISSA NIEUWS -,1. 374 Met een vraagtecken priJ"ken alleen die zetten, die <!-Cl'.1- logiSchcnloop der partij werkelijk ingrijpend vemol"en. Voor zichzelf·sprekende dreigingen zijn niet vermeld. · Ah, de klassieken ... april 1999 Caissanieuws 37 4 april 1999 Colofon Inhoud Redactioneel meer is, hij zegt toe uit de doeken te doen 4. Gerard analyseert de pijn. hoe je een eindspeldatabase genereert. CaïssaNieuws is het clubblad van de Wellicht is dat iets voor Ed en Leo, maar schaakvereniging Caïssa 6. Rikpareert Predrag. Dik opgericht 1-5-1951 6. Dennis komt met alle standen. verder ook voor elke Caïssaan die zijn of it is een nummer. Met een schaak haar spel wil verbeteren. Daar zijn we wel Clublokaal: Oranjehuis 8. Derde nipt naar zege. dik Van Ostadestraat 153 vrije avond en nog wat feestdagen in benieuwd naar. 10. Zesde langs afgrond. 1073 TKAmsterdam hetD vooruitzicht is dat wel prettig. Nog aan Tot slot een citaat uit de bespreking van Telefoon - clubavond: 679 55 59 dinsda_g_ 13. Maarten heeftnotatie-biljet op genamer is het te merken dat er mensen Robert Kikkert van een merkwaardig boek Voorzitter de rug. zijn die de moeite willen nemen een bij dat weliswaar de Grünfeld-verdediging als Frans Oranje drage aan het clubblad te leveren zonder onderwerp heeft, maar ogenschijnlijk ook Oudezijds Voorburgwal 109 C 15. Tuijl vloert Vloermans. 1012 EM Amsterdam 17. Johan ziet licht aan de horizon. dat daar om gevraagd is. Zo moet het! een ongebruikelijke visieop ons multi-culti Telefoon 020 627 70 17 18. Leo biedt opwarmertje. In deze aflevering van CN zet Gerard wereldje bevat en daarbij enpassant het we Wedstrijdleider interne com12etitie nauwgezet uiteen dat het beter kanen moet reldvoedselverdelingsvraagstukaan de orde Steven Kuypers 20. -

Proposal to Encode Heterodox Chess Symbols in the UCS Source: Garth Wallace Status: Individual Contribution Date: 2016-10-25

Title: Proposal to Encode Heterodox Chess Symbols in the UCS Source: Garth Wallace Status: Individual Contribution Date: 2016-10-25 Introduction The UCS contains symbols for the game of chess in the Miscellaneous Symbols block. These are used in figurine notation, a common variation on algebraic notation in which pieces are represented in running text using the same symbols as are found in diagrams. While the symbols already encoded in Unicode are sufficient for use in the orthodox game, they are insufficient for many chess problems and variant games, which make use of extended sets. 1. Fairy chess problems The presentation of chess positions as puzzles to be solved predates the existence of the modern game, dating back to the mansūbāt composed for shatranj, the Muslim predecessor of chess. In modern chess problems, a position is provided along with a stipulation such as “white to move and mate in two”, and the solver is tasked with finding a move (called a “key”) that satisfies the stipulation regardless of a hypothetical opposing player’s moves in response. These solutions are given in the same notation as lines of play in over-the-board games: typically algebraic notation, using abbreviations for the names of pieces, or figurine algebraic notation. Problem composers have not limited themselves to the materials of the conventional game, but have experimented with different board sizes and geometries, altered rules, goals other than checkmate, and different pieces. Problems that diverge from the standard game comprise a genre called “fairy chess”. Thomas Rayner Dawson, known as the “father of fairy chess”, pop- ularized the genre in the early 20th century. -

Chess Rules Ages 10 & up • for 2 Players

Front (Head to Head) Prints Pantone 541 Blue Chess Rules Ages 10 & Up • For 2 Players Contents: Game Board, 16 ivory and 16 black Play Pieces Object: To threaten your opponent’s King so it cannot escape. Play Pieces: Set Up: Ivory Play Pieces: Black Play Pieces: Pawn Knight Bishop Rook Queen King Terms: Ranks are the rows of squares that run horizontally on the Game Board and Files are the columns that run vertically. Diagonals run diagonally. Position the Game Board so that the red square is at the bottom right corner for each player. Place the Ivory Play Pieces on the first rank from left to right in order: Rook, Knight, Bishop, Queen, King, Bishop, Knight and Rook. Place all of the Pawns on the second rank. Then place the Black Play Pieces on the board as shown in the diagram. Note: the Ivory Queen will be on a red square and the black Queen will be on a black space. Play: Ivory always plays first. Players alternate turns. Only one Play Piece may be moved on a turn, except when castling (see description on back). All Play Pieces must move in a straight path, except for the Knight. Also, the Knight is the only Play Piece that is allowed to jump over another Play Piece. Play Piece Moves: A Pawn moves forward one square at a time. There are two exceptions to this rule: 1. On a Pawn’s first move, it can move forward one or two squares. 2. When capturing a piece (see description on back), a Pawn moves one square diagonally ahead. -

Chess Pieces – Left to Right: King, Rook, Queen, Pawn, Knight and Bishop

CCHHEESSSS by Wikibooks contributors From Wikibooks, the open-content textbooks collection Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.2 or any later version published by the Free Software Foundation; with no Invariant Sections, no Front-Cover Texts, and no Back-Cover Texts. A copy of the license is included in the section entitled "GNU Free Documentation License". Image licenses are listed in the section entitled "Image Credits." Principal authors: WarrenWilkinson (C) · Dysprosia (C) · Darvian (C) · Tm chk (C) · Bill Alexander (C) Cover: Chess pieces – left to right: king, rook, queen, pawn, knight and bishop. Photo taken by Alan Light. The current version of this Wikibook may be found at: http://en.wikibooks.org/wiki/Chess Contents Chapter 01: Playing the Game..............................................................................................................4 Chapter 02: Notating the Game..........................................................................................................14 Chapter 03: Tactics.............................................................................................................................19 Chapter 04: Strategy........................................................................................................................... 26 Chapter 05: Basic Openings............................................................................................................... 36 Chapter 06: -

CHESS How to Play Chess: Rules and Basics

CHESS By Mind Games How to Play Chess: Rules and Basics It's never too late to learn how to play chess - the most popular game in the world! Learning the rules of chess is easy: Step 1. How to Setup the Chessboard At the beginning of the game the chessboard is laid out so that each player has the white (or light) color square in the bottom right-hand side. The chess pieces are then arranged the same way each time. The second row (or rank) is filled with pawns. The rooks go in the corners, then the knights next to them, followed by the bishops, and finally the queen, who always goes on her own matching color (white queen on white, black queen on black), and the king on the remaining square. Step 2. How the Chess Pieces Move Each of the 6 different kinds of pieces moves differently. Pieces cannot move through other pieces (though the knight can jump over other pieces), and can never move onto a square with one of their own pieces. However, they can be moved to take the place of an opponent's piece which is then captured. Pieces are generally moved into positions where they can capture other pieces (by landing on their square and then replacing them), defend their own pieces in case of capture, or control important squares in the game. How to Move the King in Chess The king is the most important piece, but is one of the weakest. The king can only move one square in any direction - up, down, to the sides, and diagonally. -

Drive Monitor FX3-MOC

ONLINEH ELP Drive Monitor FX3-MOC Motion Control GB Onlinehelp Drive Monitor FX3-MOC Copyright Copyright © 2012 SICK AG Waldkirch Industrial Safety Systems Erwin-Sick-Str. 1 79183 Waldkirch Germany This document is protected by the law of copyright, whereby all rights established therein remain with the company SICK AG. Reproduction of this document or parts of this document is only permissible within the limits of the legal determination of Copyright Law. Alteration or abridgement of the document is not permitted without the explicit written approval of the company SICK AG. 2 © SICK AG • Industrial Safety Systems • Germany • All rights reserved 8017009/2014-02-17 Subject to change without notice Onlinehelp Content Drive Monitor FX3-MOC Content 1 About the Drive Monitor FX3-MOC .................................................................................. 5 1.1 Product Features ................................................................................................... 5 1.1.1 Features ............................................................................................... 5 1.1.2 Your benefits ....................................................................................... 6 1.1.3 Target applications.............................................................................. 6 1.1.4 Industries ............................................................................................. 6 1.2 Application Examples ............................................................................................ 7 1.2.1 -

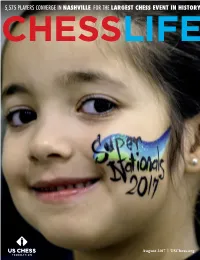

Chess Life: to Receive Chess Life As a Premium Member, Join US Chess Or Enter a US Chess Tournament, Go to Uschess.Org Or Call 1-800-903-USCF (8723)

5,575 PLAYERS CONVERGE IN NASHVILLE FOR THE LARGEST CHESS EVENT IN HISTORY August 2017 | USChess.org The Uniteed States’ Largest Chess Sppecialty Retailer '''%! %!"$#&& Wild SSttyle BooaINTRaardsRODUCING THE NEW EXCITING FULL COLOR VINYL CHESS BOARDS EMOJI WILD HORSES FIRREFIGHTER RAINBOW CATCH THE WAVE FLAG OF USA SPLATTTERED PAINTA GOLDEN GATE CRYSTALA DRREAMS 8 BIT HHEAVEN PUNK ARMY OVER 80 DESIGNS AT GM Viswanathan ANAND GM Hikaru NAKAMURA GM Levon ARONIAN GM Ian NEPOMNIACHTCHI GM Magnus CARLSEN GM Wesley SO GM Fabiano CARUANA GM Peter SVIDLER GM Sergey KARJAKIN GM Maxime VACHIER-LAGRAVE TUESDAY AUGUST 1 TBA Autograph Session 6 PM Opening Ceremony WEDNESDAY AUGUST 2 1 PM Round 1 THURSDAY AUGUST 3 1 PM Round 2 FRIDAY AUGUST 4 1 PM Round 3 SATURDAY AUGUST 5 1 PM Round 4 SUNDAY AUGUST 6 1 PM Round 5 MONDAY AUGUST 7 — Rest Day TUESDAY AUGUST 8 1 PM Round 6 WEDNESDAY AUGUST 9 1 PM Round 7 THURSDAY AUGUST 10 1 PM Round 8 FRIDAY AUGUST 11 1 PM Round 9 AUGUST 2-12 SATURDAY AUGUST 12 1 PM (if necessary) #GRANDCHESSTOUR #SINQUEFIELDCUP 6 PM Closing Ceremony GM Viswanathan ANAND GM Garry KASPAROV GM Levon ARONIAN GM Le Quang LIEM GM Fabiano CARUANA GM Hikaru NAKAMURA GM Lenier DOMINGUEZ GM Ian NEPOMNIACHTCHI GM Sergey KARJAKIN GM Wei YI SUNDAY AUGUST 13 6 PM Opening Ceremony MONDAY AUGUST 14 1 PM Rapid Rounds 1-3 TUESDAY AUGUST 15 1 PM Rapid Rounds 4-6 WEDNESDAY AUGUST 16 1 PM Rapid Rounds 7-9 THURSDAY AUGUST 17 1 PM Blitz Rounds 1-9 FRIDAY AUGUST 18 1 PM Blitz Rounds 10-18 SATURDAY AUGUST 19 1 PM (if necessary) TBA Ultimate Moves AUGUST 14-19 6 PM Closing Ceremony #GRANDCHESSTOUR #STLRAPIDBLITZ WATCH LIVE ON GRANDCHESSTOUR.ORG ROUNDS DAILY AT 1 P.M. -

EMPIRE CHESS Summer 2015 Volume XXXVIII, No

Where Organized Chess in America Began EMPIRE CHESS Summer 2015 Volume XXXVIII, No. 2 $5.00 Honoring Brother John is the Right Move. Empire Chess P.O. Box 340969 Brooklyn, NY 11234 Election Issue – Be Sure to Vote! 1 NEW YORK STATE CHESS ASSOCIATION, INC. www.nysca.net The New York State Chess Association, Inc., America‘s oldest chess organization, is a not-for-profit organization dedicated to promoting chess in New York State at all levels. As the State Affiliate of the United States Chess Federation, its Directors also serve as USCF Voting Members and Delegates. President Bill Goichberg PO Box 249 Salisbury Mills, NY 12577 Looking for Hall of Famers. [email protected] Vice President Last year’s Annual Meeting asked for new ideas on how the New York Polly Wright State Chess Association inducts members into its Hall of Fame. 57 Joyce Road Eastchester, NY 10709 [email protected] One issue NYSCA would like to address is how to evaluate many of the historical figures that have participated in chess in New York. NYSCA Treasurer Karl Heck started its Hall of Fame with contemporaries, and while there are players 5426 Wright Street, CR 67 with long careers in the Hall of Fame, true players of the historical past East Durham, NY 12423 aren’t in our Hall of Fame. [email protected] Membership Secretary For example, it is almost infathomable that Frank Marshall, a former New Phyllis Benjamin York State Champion and namesake and founder of the State’s most famous P.O. Box 340511 chess club, is not in our Hall of Fame. -

The Queen's Gambit

Master Class with Aagaard | Shankland on the Online Olympiad | Spiegel’s Three Questions NOVEMBER 2020 | USCHESS.ORG The Queen’s Gambit A new Netflix limited series highlights the Royal Game A seasonal gift from US CHESS: A free copy of Chess Life! NOVEMBER 17, 2020 Dear Chess Friends: GM ELIZABETH SPIEGEL When one of our members has a good idea, we take it seriously. Tweeting on October 31 – Halloween Day! – National Master Han Schut GM JESSE suggested we provide a “holiday present” to chess players around the world. KRAAI GM JACOB AAGAARD What a swell idea. Chess Life is the official magazine of US Chess. Each month we here at FM CARSTEN Chess Life work to publish the best of American chess in all of its facets. HANSEN In recent issues we have brought you articles by GM Jesse Kraai on chess in the time of coronavirus; GM Jon Tisdall’s look at online chess; IM Eric Rosen on “the new chess boom,” featuring a cover that went Michael Tisserand IM JOHN viral on social media!; on Charlie Gabriel, the WATSON coolest octogenarian jazz player and chess fan in New Orleans; and GM Maurice Ashley on 11-year-old phenom IM Abhimanyu Mishra. IM ERIC Our November issue has gained wide attention across the world for its cover ROSEN story on the Netflix limited series The Queen’s Gambit by longtime Chess Life columnist Bruce Pandolfini.It also features articles by GM Jacob Aagaard GM Sam Shankland WFM Elizabeth Spiegel GM MAURICE , , and , made ASHLEY famous in the 2012 documentary Brooklyn Castle. -

VARIANT CHESS 8 Page 97

July-December 1992 VARIANT CHESS 8 page 97 @ Copyright. 1992. rssN 0958-8248 Publisher and Editor G. P. Jelliss 99 Bohemia Road Variant Chess St Leonards on Sea TN37 6RJ (rJ.K.) In this issue: Hexagonal Chess, Modern Courier Chess, Escalation, Games Consultant Solutions, Semi-Pieces, Variants Duy, Indexes, New Editor/Publisher. Malcolm Horne Volume 1 complete (issues 1-8, II2 pages, A4 size, unbound): f10. 10B Windsor Square Exmouth EX8 1JU New Varieties of Hexagonal Chess find that we need 8 pawns on the second rank plus by G. P. Jelliss 5 on the third rank. I prefer to add 2 more so that there are 5 pawns on each colour, and the rooks are Various schemes have been proposed for playing more securely blocked in. There is then one pawn in chess on boards on which the cells are hexagons each file except the edge files. A nm being a line of instead of squares. A brief account can be found in cells perpendicular to the base-line. The Oxford Companion to Chess 1984 where games Now how should the pawns move? If they are to by Siegmund Wellisch I9L2, H.D.Baskerville L929, continue to block the files we must allow them only Wladyslaw Glinski L949 and Anthony Patton L975 to move directly forward. This is a fers move. For are mentioned. To these should be added the variety their capture moves we have choices: the other two by H. E. de Vasa described in Joseph Boyer's forward fers moves, or the two forward wazit NouveoLx, Jeux d'Ecltecs Non Orthodoxes 1954 moves, or both.