Digital Control Engineering Analysis and Design

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Control Theory

Control theory S. Simrock DESY, Hamburg, Germany Abstract In engineering and mathematics, control theory deals with the behaviour of dynamical systems. The desired output of a system is called the reference. When one or more output variables of a system need to follow a certain ref- erence over time, a controller manipulates the inputs to a system to obtain the desired effect on the output of the system. Rapid advances in digital system technology have radically altered the control design options. It has become routinely practicable to design very complicated digital controllers and to carry out the extensive calculations required for their design. These advances in im- plementation and design capability can be obtained at low cost because of the widespread availability of inexpensive and powerful digital processing plat- forms and high-speed analog IO devices. 1 Introduction The emphasis of this tutorial on control theory is on the design of digital controls to achieve good dy- namic response and small errors while using signals that are sampled in time and quantized in amplitude. Both transform (classical control) and state-space (modern control) methods are described and applied to illustrative examples. The transform methods emphasized are the root-locus method of Evans and fre- quency response. The state-space methods developed are the technique of pole assignment augmented by an estimator (observer) and optimal quadratic-loss control. The optimal control problems use the steady-state constant gain solution. Other topics covered are system identification and non-linear control. System identification is a general term to describe mathematical tools and algorithms that build dynamical models from measured data. -

Microcontroller Based Applied Digital Control

JWBK063-FM JWBK063-Ibrahim January 4, 2006 18:11 Char Count= 0 Microcontroller Based Applied Digital Control Microcontroller Based Applied Digital Control D. Ibrahim C 2006 John Wiley & Sons, Ltd. ISBN: 0-470-86335-8 i JWBK063-FM JWBK063-Ibrahim January 4, 2006 18:11 Char Count= 0 Microcontroller Based Applied Digital Control Dogan Ibrahim Department of Computer Engineering Near East University, Cyprus iii JWBK063-FM JWBK063-Ibrahim January 4, 2006 18:11 Char Count= 0 Copyright C 2006 John Wiley & Sons Ltd, The Atrium, Southern Gate, Chichester, West Sussex PO19 8SQ, England Telephone (+44) 1243 779777 Email (for orders and customer service enquiries): [email protected] Visit our Home Page on www.wiley.com All Rights Reserved. No part of this publication may be reproduced, stored in a retrieval system or transmitted in any form or by any means, electronic, mechanical, photocopying, recording, scanning or otherwise, except under the terms of the Copyright, Designs and Patents Act 1988 or under the terms of a licence issued by the Copyright Licensing Agency Ltd, 90 Tottenham Court Road, London W1T 4LP, UK, without the permission in writing of the Publisher. Requests to the Publisher should be addressed to the Permissions Department, John Wiley & Sons Ltd, The Atrium, Southern Gate, Chichester, West Sussex PO19 8SQ, England, or emailed to [email protected], or faxedto(+44) 1243 770620. Designations used by companies to distinguish their products are often claimed as trademarks. All brand names and product names used in this book are trade names, service marks, trademarks or registered trademarks of their respective owners. -

Digital Control Networks for Virtual Creatures

Digital control networks for virtual creatures Christopher James Bainbridge Doctor of Philosophy School of Informatics University of Edinburgh 2010 Abstract Robot control systems evolved with genetic algorithms traditionally take the form of floating-point neural network models. This thesis proposes that digital control sys- tems, such as quantised neural networks and logical networks, may also be used for the task of robot control. The inspiration for this is the observation that the dynamics of discrete networks may contain cyclic attractors which generate rhythmic behaviour, and that rhythmic behaviour underlies the central pattern generators which drive low- level motor activity in the biological world. To investigate this a series of experiments were carried out in a simulated physically realistic 3D world. The performance of evolved controllers was evaluated on two well known control tasks — pole balancing, and locomotion of evolved morphologies. The performance of evolved digital controllers was compared to evolved floating-point neu- ral networks. The results show that the digital implementations are competitive with floating-point designs on both of the benchmark problems. In addition, the first re- ported evolution from scratch of a biped walker is presented, demonstrating that when all parameters are left open to evolutionary optimisation complex behaviour can result from simple components. iii Acknowledgements “I know why you’re here... I know what you’ve been doing... why you hardly sleep, why you live alone, and why night after night, you sit by your computer.” I would like to thank my parents and grandmother for all of their support over the years, and for giving me the freedom to pursue my interests from an early age. -

Applications of Neural Networks to Control Systems

View metadata, citation and similar papers at core.ac.uk brought to you by CORE provided by Universidade do Algarve APPLICATIONS OF NEURAL NETWORKS TO CONTROL SYSTEMS by António Eduardo de Barros Ruano Thesis submitted to the UNIVERSITY OF WALES for the degree of DOCTOR OF PHILOSOPHY School of Electronic Engineering Science University of Wales, Bangor February, 1992 Statement of Originality The work presented in this thesis was carried out by the candidate. It has not been previously presented for any degree, nor is at present under consideration by any other degree awarding body. Candidate:- Antonio E. B. Ruano Directors of Studies:- Dr. Dewi I. Jones Prof. Peter J. Fleming ACKNOWLEDGMENTS I would like to express my sincere thanks and gratitude to Prof. Peter Fleming and Dr. Dewi Jones for their motivation, guidance and friendship throughout the course of this work. Thanks are due to all the students and staff of the School of Electronic Engineering Science. Special thanks to the Head of Department, Prof. J.J. O’Reilly, for his encouragement; Siân Hope, Tom Crummey, Keith France and David Whitehead for their advice on software; Andrew Grace, for fruitful discussions in optimization; and Fabian Garcia, for his advice in parallel processing. I am also very grateful to Prof. Anibal Duarte from the University of Aveiro, Portugal, whose advice was decisive to my coming to Bangor. I would like to acknowledge the University of Aveiro, University College of North Wales and the ‘Comissão Permanente Invotan’ for their financial support. I am also very grateful to all my friends in Bangor, who made my time here a most enjoyable one. -

Topic 6: a Practical Introduction to Digital Power Supply Control

A Practical Introduction to Digital Power Supply Control Laszlo Balogh ABSTRACT The quest for increased integration, more features, and added flexibility – all under constant cost pressure – continually motivates the exploration of new avenues in power management. An area gaining significant industry attention today is the application of digital technology to power supply control. This topic attempts to clarify some of the mysteries of digital control for the practicing analog power supply designer. The benefits, limitations, and performance of the digital control concept will be reviewed. Special attention will be focused on the similarities and differences between analog and digital implementations of basic control functions. Finally, several examples will highlight the contributions of digital control to switched-mode power supplies. I. INTRODUCTION Power management is one of the most digital delay techniques to adjust proper timing of interdisciplinary areas of modern electronics, gate drive signals, microcontrollers and state merging hard core analog circuit design with machines for battery charging and management, expertise from mechanical and RF engineering, and the list could go on. safety and EMI, knowledge of materials, If power conversion already incorporates semiconductors and magnetic components. such a large amount of digital circuitry, it is a Understandably, power supply design is regarded legitimate question to ask: what has changed? as a pure analog field. But from the very early What is this buzz about “digital power”? days, by the introduction of relays and later the first rectifiers, power management is slowly II. GOING DIGITAL incorporating more and more ideas from the Despite the tremendous amount of digital digital world. -

Roundoff Noise in Digital Feedback Control Systems

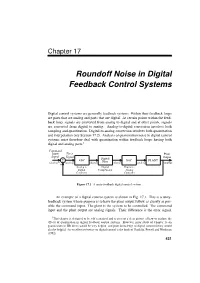

Chapter 17 Roundoff Noise in Digital Feedback Control Systems Digital control systems are generally feedback systems. Within their feedback loops are parts that are analog and parts that are digital. At certain points within the feed- back loop, signals are converted from analog to digital and at other points, signals are converted from digital to analog. Analog-to-digital conversion involves both sampling and quantization. Digital-to-analog conversion involves both quantization and interpolation (see Section 17.2). Analysis of quantization noise in digital control systems must therefore deal with quantization within feedback loops having both digital and analog parts.1 Command Input Error Plant Signal Signal Digital Output − ADC DAC PLANT (analog) (analog) (digital) Filter (digital) (analog) (analog) + Analog to Digital Digital to Digital Compensator Analog Converter Converter Figure 17.1 A unity-feedback digital control system. An example of a digital control system is shown in Fig. 17.1. This is a unity- feedback system whose purpose is to have the plant output follow as closely as pos- sible the command input. The plant is the system to be controlled. The command input and the plant output are analog signals. Their difference is the error signal. 1This chapter is designed to be self-contained and to present a clear picture of how to analyze the effects of quantization in digital feedback control systems. However, prior study of Chapter 16 on quantization in IIR filters would be very helpful, and prior knowledge of digital control theory would also be helpful. An excellent reference on digital control is the book of Franklin, Powell and Workman (1992). -

Introduction to Applied Digital Control Second Edition

Introduction to Applied Digital Control Second Edition Gregory P. Starr Department of Mechanical Engineering The University of New Mexico November 2006 ii c 2006 Gregory P. Starr Preface This book is intended to give the senior or beginning graduate student in mechanical engineering an introduction to digital control of mechanical systems with an emphasis on applications. The desire to write this book arose from my frustration with the existing texts on digital control, which|while they were exhaustive|were better suited to reference needs than for tutorial use. While the appearance of several new texts with a more student-oriented perspective has reduced my frustration a little, I still feel there is a need for an introductory text such as this. MATLAB has become an almost indispensable tool in the real-world analysis and design of control systems, and this text includes many MATLAB scripts and examples. My thanks go to my wife Anne, and four boys Paul, Keith, Mark, and Jeff for being patient during the preparation and revision of this manuscript|also my late father Duke Starr who recognized that any kid who liked mathematics and motorcycles can't be all bad. Albuquerque, New Mexico November 2006 iii iv PREFACE Contents Preface iii 1 Introduction and Scope of this Book 1 1.1 Continuous and Digital Control . 1 1.1.1 Feedback control . 1 1.1.2 Digital control . 2 1.2 Philosophy and Text Coverage . 4 2 Linear Discrete Systems and the Z-Transform 7 2.1 Chapter Overview . 7 2.2 Linear Difference Equations . 8 2.2.1 Solving Difference Equations . -

A Kalman Filter for Active Feedback on Rotating External Kink Instabilities in a Tokamak Plasma

A Kalman Filter for Active Feedback on Rotating External Kink Instabilities in a Tokamak Plasma Jeremy Mark Hanson SUBMITTED IN PARTIAL FULFILLMENT OF THE REQUIREMENTS FOR THE DEGREE OF DOCTOR OF PHILOSOPHY IN THE GRADUATE SCHOOL OF ARTS AND SCIENCES Columbia University 2009 ○c 2009 Jeremy Mark Hanson All Rights Reserved ABSTRACT A Kalman Filter for Active Feedback on Rotating External Kink Instabilities in a Tokamak Plasma Jeremy M. Hanson The first experimental demonstration of feedback suppression of rotating external kink modes near the ideal wall limit in a tokamak using Kalman filtering to discrimi- nate the n = 1 kink mode from background noise is reported. In order to achieve the highest plasma pressure limits in tokamak fusion experiments, feedback stabilization of long-wavelength, external instabilities will be required, and feedback algorithms will need to distinguish the unstable mode from noise due to other magnetohydrodynamic activity. When noise is present in measurements of a system, a Kalman filter can be used to compare the measurements with an internal model, producing a realtime, op- timal estimate for the system’s state. For the work described here, the Kalman filter contains an internal model that captures the dynamics of a rotating, growing instabil- ity and produces an estimate for the instability’s amplitude and spatial phase. On the High Beta Tokamak-Extended Pulse (HBT-EP) experiment, the Kalman filter algo- rithm is implemented using a set of digital, field-programmable gate array controllers with 10 microsecond latencies. The feedback system with the Kalman filter is able to suppress the external kink mode over a broad range of spatial phase angles between the sensed mode and applied control field, and performance is robust at noise levels that render feedback with a classical, proportional gain algorithm ineffective. -

Application of Kalman Filtering and Pid Control For

APPLICATION OF KALMAN FILTERING AND PID CONTROL FOR DIRECT INVERTED PENDULUM CONTROL ____________ A Project Presented to the Faculty of California State University, Chico ____________ In Partial Fulfillment of the Requirement for the Degree Master of Science in Electrical and Computer Engineering Electronic Engineering Option ____________ by José Luis Corona Miranda Spring 2009 APPLICATION OF KALMAN FILTERING AND PID CONTROL FOR DIRECT INVERTED PENDULUM CONTROL A Project by José Luis Corona Miranda Spring 2009 APPROVED BY THE DEAN OF THE SCHOOL OF GRADUATE, INTERNATIONAL, AND INTERDISCIPLINARY STUDIES: _________________________________ Susan E. Place, Ph.D. APPROVED BY THE GRADUATE ADVISORY COMMITTEE: _________________________________ _________________________________ Adel A. Ghandakly, Ph.D. Dale Word, M.S., Chair Graduate Coordinator _________________________________ Adel A. Ghandakly, Ph.D. TABLE OF CONTENTS PAGE List of Figures............................................................................................................. v Abstract....................................................................................................................... vi CHAPTER I. Introduction.............................................................................................. 1 Purpose of the Project................................................................... 2 Limitations of the Project ............................................................. 3 II. Review of Related Literature................................................................... -

Applied Digital Control--Theory, Design and Implementation*

Automatica, Vol. 30, No. 11, pp. 1807-1813, 1994 Copyright © 1994 ElsevierScience Ltd Pergamon Printed in Great Britain. All rights reserved 0005-1098194 $7.00 + 0.00 Book Reviews Applied Digital Control--Theory, Design and Implementation* R. J. Leigh Reviewer: JOB VAN AMERONGEN 4--Methods of analysis and design (pp. 69-110: root locus, University of Twente, P.O. Box 217, Enschede, The frequency response (w-transformation), stability tests); Netherlands. 5--Digital control algorithms (pp. 111-197: various approaches to the design of digital controllers: ACCORDING TO the introduction, 'the book aims to establish continuous modelling, discrete controller design, trans- a strong theoretical background to support applications, formation of continuous-time controllers to discrete material relevant to the design and implementation of digital controllers, dead beat, including modified versions, use control systems'. It is meant for 'industrial 'engineers and of hill climbing for controller tuning, w'-domain (why scientists as well as for students on formal courses'. here w' and in Chapter 4 w?), design realizations such A first scan of the contents of the book shows that there is as parallel method, factorization, choice of sampling a lot of technology in the book. Examples of industrial interval, word length effects. Recent developments such control systems, such as the Honeywell TDC 3000 system are as the delta-operator are not mentioned. Emphasis is on given in the chapter on commercially available distributed translation of continuous-time designs to discrete control systems and in several case studies. The preface to algorithms, less on a complete digital design); the second edition indicates that the book has been updated 6--Elements in the control loop (pp. -

Teaching Analog and Digital Control Theory in One Course

Session 3663 Teaching Analog And Digital Control Theory In One Course Hakan B. Gurocak Manufacturing Engineering Washington State University 14204 NE Salmon Creek Ave. Vancouver, WA 98686 Abstract: Today's trend is towards a high level of manufacturing automation and design of smart products. All of these products or their manufacturing processes contain control systems. As indicated in a recent survey, both analog and digital control modes are used by the industry to implement controllers. In a typical undergraduate engineering curriculum a control systems course introducing the fundamental notions of analog control theory is offered. To learn digital control theory, students would have to take an extra course on digital control systems, usually at the graduate level. This paper explains the development of a hybrid classical/digital control systems course*. Also, laboratory experiments designed to support the new format are presented. Introduction Manufacturing engineering is a very broad discipline. Consequently, manufacturing engineers typically engage in a diverse range of activities such as plant engineering, manufacturing processes, machine design, and product design. In just about any of these roles a manufacturing engineer is challenged by a control system since today's trend is towards a high level of manufacturing automation and design of smart products. For example, such products include active suspensions in cars that can adjust to the road conditions or video cameras that can stabilize images that would otherwise be fuzzy due to the shaking of the hand holding the camera. As the industry continues to introduce more sophisticated control applications in its products and manufacturing processes, the engineers who design, develop and build these products and systems will face a challenging, dramatic change in their role. -

Implementation of Kalman Filter on Visual Tracking Using Pid Controller

Mechatronics and Applications: An International Journal (MECHATROJ), Vol. 1, No.1, January 2017 IMPLEMENTATION OF KALMAN FILTER ON VISUAL TRACKING USING PID CONTROLLER Abdurrahman,F.* 1, Gunawan Sugiarta*2 and Feriyonika*3 *Department of Electrical Engineering, Bandung State of Polytechnic, Bandung, Indonesia ABSTRACT This paper explain the design of visual control system which equip Kalman filter as an additional sub- system to predict object movement. It is a method to overcome some weakness, such as low range view of camera and low FPS (Frame per Second). It alsoassist the system to track a fast moving object. The system is implemented to 2 motor servos, which are move on horizontal and vertical axis. Digital PID (Proportional, Integral, and Derivative) controller is used in the system, and bilinear transformation is used to approximate the value of derivative in transforming the analogue to digital controller on z- transform.In conclusion, we can get the value of system responses time from both motor servos. The rise time and settling time of motor servo in horizontal axis are 0.402s and 1.63s, and vertical axis’s responses are 0.38s and 1.34s. KEYWORDS Kalman filter, PID, bilinear transformation, z-transform 1. INTRODUCTION In this digital era, robotic technologies are implementing vision-based control, such as object tracking [1], face tracking [2], and position-based control [3]. It was developed as one of the control method in robotic community. Researchers keep learning and involving the control system to transform itas close as human capability. Recent paper describes the vision-based control and computer vision as numerical theory [4], unfortunately there are lack of paper which describedand design the system in a more practical way.