Schema Matching for Structured Document Transformations

Total pages: 16

File type: PDF, size: 1020 KB

Recommended publications

Microsoft, Adobe & W3C to Shake up Electronic Forms Market

Vol. 11, No. 8, October 2003. www.gilbane.com. Published by: Bluebill Advisors, Inc., 763 Massachusetts Ave., Cambridge, MA 02139 USA, (617) 497.9443, Fax (617) 497.5256, www.bluebilladvisors.com. Editor: Frank Gilbane, (617) 497.9443. Editors Emeriti: Tim Bray, (604) 708.9592; David Weinberger, (617) 738.8323. Senior Editors: Sebastian Holst; Bill Trippe, (617) 497.9443. Recent Contributors: Kathleen Reidy; Bob Doyle. Production Assistant: Sarah G. Dionne, (617) 497.9443. Subscriptions: (617) 497.9443. Content Technology Works!

MICROSOFT, ADOBE & XFORMS TO SHAKE UP ELECTRONIC FORMS MARKET

Our title this month reads like a news headline on purpose. There are a number of new, and upcoming, developments in electronic forms (eForms) technology that should be grabbing your attention. Some of these are of major importance on their own, but taken together, they signal the start of a major improvement in businesses’ ability to easily collect, integrate, and process information.

“Electronic forms” have been around for years, but the term refers to a wide variety of technologies – from scanned image applications to HTML forms – that are not at all similar and far from equal in their ability to accelerate and smooth business processes. What eForm technology has shared is: a level of difficulty that kept it out of the reach of office professionals who were comfortable enough with documents and spreadsheets, but scared-off by forms, and proprietary data formats that made information integration costly and complex.

Comparative Report on the Production of Interactive Hypermedia Programs in XHTML, SMIL/GRiNS and NCL/Maestro

Rafael Ferreira Rodrigues, Rodrigo Laiola Guimarães. Comparative report on the production of interactive hypermedia programs in XHTML, SMIL/GRiNS and NCL/Maestro. Monograph for the course Fundamentals of Multimedia Systems, Departamento de Informática, Graduate Program in Informatics. Rio de Janeiro, December 2005.

Monograph presented as a partial requirement for passing the course Fundamentals of Multimedia Systems of the Graduate Program in Informatics at PUC-Rio. Advisor: Luiz Fernando Gomes Soares, Departamento de Informática, PUC-Rio. Rio de Janeiro, 12 December 2005.

All rights reserved. Reproduction of this work, in whole or in part, is prohibited without authorization from the university, the authors and the advisor.

Rafael Ferreira Rodrigues: graduated in Computer Engineering from the Instituto Militar de Engenharia (IME) in 2004. He is currently a member of the research group at the TeleMídia Laboratory at PUC-Rio, conducting research in computer networks and hypermedia systems.

Rodrigo Laiola Guimarães: graduated in Computer Engineering from the Universidade Federal do Espírito Santo (UFES) in 2004. He is currently a member of the research group at the TeleMídia Laboratory at PUC-Rio, conducting research in computer networks and hypermedia systems.

Modularization of XHTML™ 2.0

Modularization of XHTML™ 2.0. W3C Editor’s Draft 23 January 2009.

This version: http://www.w3.org/MarkUp/2009/ED-xhtml-modularization2-20090123
Latest public version: http://www.w3.org/TR/xhtml-modularization2
Previous Recommendation: http://www.w3.org/TR/2008/REC-xhtml-modularization-20081008
Editors: Mark Birbeck, x-port.net; Markus Gylling, DAISY Consortium; Shane McCarron, Applied Testing and Technology; Steven Pemberton, CWI (XHTML 2 Working Group Co-Chair)

This document is also available in these non-normative formats: Single XHTML file, PostScript version, PDF version, ZIP archive, and Gzip’d TAR archive. Copyright © 2001-2009 W3C® (MIT, ERCIM, Keio), All Rights Reserved. W3C liability, trademark and document use rules apply.

Abstract: This Working Draft specifies an abstract modularization of XHTML and an implementation of the abstraction using XML Document Type Definitions (DTDs), XML Schema, and RelaxNG. This modularization provides a means for subsetting and extending XHTML, a feature needed to permit the definition of custom markup languages that are targeted at specific classes of devices or specific types of documents.

Status of This Document: This section describes the status of this document at the time of its publication. Other documents may supersede this document. The latest status of this document series is maintained at the W3C. This document is the development version of a very early form and is riddled with errors. It should in no way be considered stable, and should not be referenced for any purposes whatsoever. This document has been produced by the W3C XHTML 2 Working Group as part of the HTML Activity.

XSL Formatting Objects

XSL Formatting Objects. http://en.wikipedia.org/wiki/XSL_Formatting_Objects

This book is generated by WikiType using RenderX DiType, XML to PDF XSL-FO Formatter. Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.2 or any later version published by the Free Software Foundation; with no Invariant Sections, no Front-Cover Texts, and no Back-Cover Texts. A copy of the license is included in the section entitled "GNU Free Documentation License". 29 September 2008.

Table of Contents: 1. XSL Formatting Objects; XSL-FO basics; XSL-FO language concepts; XSL-FO document structure; Capabilities of XSL-FO v1.0; Multiple columns; Lists; Pagination controls; Footnotes; Tables; Text orientation controls; Miscellaneous; Capabilities of XSL-FO v1.1; Multiple flows and flow mapping; Bookmarks; Indexing; Last page citation; Table markers; Inside/outside floats; Refined graphic sizing; Advantages of XSL-FO; Drawbacks

Web Application Development And/Or Html5

Web Application Development and/or html5. Toni Ruottu <toni.ruottu@cs.helsinki.fi>

Why Develop Web Apps? secure portable easy good quality debugger everywhere

Really Short Computer System History: 1. mainframe 2. mainframe - cable - multiple terminals 3. personal computer (PC) 4. PC - cable - PC 5. PC - acoustic coupler - phone - acoustic coupler - PC 6. PC - modem - PC 7. bulletin board system - modem - multiple PCs 8. multiple servers - Internet - multiple PCs

The Early Internet: Servers that reveal hierarchies: ftp Usenet news gopher

HyperText Markup Language (HTML): html a new (at that time) hypertext format text with links Standard Generalized Markup Language (SGML) based web 1.0 is born! html2 standardization html3 tables lists web forms ~feature complete

Clean-up: html4 styling markup separated to css xhtml1 changed from SGML to XML as base xhtml2 move out "as much as possible" (xforms, xframes) making the syntax elegant

Xhtml2 Implementations: not too much support from industrial browser-vendors Mozilla, Opera, IE instead separate translators Chiba (server-side "browser") Deng (flash based browser in a browser) special xml-browsers X-smiles (TKK heavily involved afaik)

The Era of Java, and NPAPI Plug-ins: (x)html focuses on textual documents you can use javascript, but it is sloooow and the sandbox is lacking lots of useful interfaces to do something "cool" you need to use the Netscape Plug-in Application Programming Interface (NPAPI) which makes doing something "cool" less cool the most used NPAPI plug-ins include: flash, java, pdf, audio, and video still, there are empires built on top of these plug-ins no-one can deny impact of flash in taking the web forward (e.g.

Multimedia Content Adaptation with MPEG-21: Resource Conversion and Semantic Adaptation of Scenes (Mariam Kimiaei Asadi)

Multimedia Content Adaptation with MPEG-21: Resource Conversion and Semantic Adaptation of Scenes. Mariam Kimiaei Asadi.

To cite this version: Mariam Kimiaei Asadi. Adaptation de Contenu Multimédia avec MPEG-21: Conversion de Ressources et Adaptation Sémantique de Scènes. domain_other. Télécom ParisTech, 2005. English. pastel-00001615

HAL Id: pastel-00001615, https://pastel.archives-ouvertes.fr/pastel-00001615. Submitted on 13 Mar 2006.

HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers.

Thesis presented to obtain the degree of Doctor of the Ecole Nationale Supérieure des Télécommunications. Specialty: Computer Science and Networks. Mariam Kimiaei Asadi. Defended on 30 June 2005 before a jury composed of: Cécile Roisin and Fernando Pereira (reviewers); Yves Mathieu, Vincent Charvillat and Nabil Layaïda (examiners); Alexandre Cotarmanac’h (invited member); Jean-Claude Dufourd (thesis director).

Acknowledgments: I have very limited space to thank all the people who contributed in a way or another to the accomplishment of this thesis.

Motifs, Enhanced 6th Edition

Motifs, Enhanced 6th Edition. Author: Kimberly Jansma. ISBN: 9781285228167.

Now you can sleep well before the Boards by using the breakthrough new SleepWell Review Series. By the end of this book, you'll have expanded your iPhone and iPad development knowledge and be well on your way to building elegant solutions that are ready for whatever project you take on next. If you write in your journal like someone is going to read it, you will never allow yourself to fully express what needs to be expressed. An overview of the nonprofit sector provides needed background, and sidebars from professional fundraisers and students enhance and complement the content of each chapter. Taylor reed. It just takes a few minor mental adjustments in the way we, as parents, react. com (details inside). Learn how to buy a house without getting ripped off. Standard and exemplar documents: includes fees and appointment worked examples; examples of a health and safety file; and other useful templates which will be modified and updated as experience of the Regulations develops. The key CDM roles: outlines the key roles and responsibilities for the Client, Designer, Principal Designer, Principal Contractor, Contractors, Workers, and CDM Advisor. They also share the positive view of people with dementia, which concentrates on personhood, focussing upon the whole person, drawing upon their strengths as well as taking into account declining abilities in some areas. Adam Grant shows how to improve the world by championing novel ideas and values that go against the grain, battling conformity, and bucking outdated traditions.

Dissertacao Lucia Chamelete N

Pró-Reitoria de Pós-Graduação e Pesquisa, Stricto Sensu Graduate Program in Knowledge and Information Technology Management. EVALUATION OF THE ACCESSIBILITY OF WEBSITES FOR THE VISUALLY IMPAIRED. Author: Lúcia Regina Bueno Chamelete Alvares da Silva. Advisor: Prof. Dr. Fábio Bianchi Campos. Brasília, DF, 2010.

Dissertation presented to the Stricto Sensu Graduate Program in Knowledge and Information Technology Management of the Universidade Católica de Brasília, as a requirement for the degree of Master in Knowledge and Information Technology Management.

Catalog record: S586a. Silva, Lúcia Regina Bueno Chamelete Alvares da. Avaliação da acessibilidade de sítios web para deficientes visuais. 2010. 376 leaves, ill., 30 cm. Dissertation (master's), Universidade Católica de Brasília, 2010. Advisor: Fábio Bianchi Campos. Subjects: 1. Web portals. 2. Evaluation. 3. Visually impaired. 4. Information technology. CDU 004.738.5-056.262. Record prepared by the Graduate Library of UCB.

I dedicate this work to God, in gratitude for the opportunity to research a topic as inspiring as this one, and who gave me the strength to continue even in the most difficult hours.

Acknowledgments: I thank everyone who supported me and believed that this dream could come true, such as my family, my husband, my work colleagues and my friends. I thank Professor Fábio Bianchi, who always guided and supported me; Professor Káthia Oliveira, who introduced me to this fascinating topic; Professor Sheila Costa, who gave me the contact of Andressa Queiroz, of the Inclusive Guidance Service of UCB, whom I thank very much for her attention; and I especially thank my visually impaired friends Clodomir, Deni and Marcos, who contributed to carrying out the application of the process proposed here.

HTML: A Beginner’s Guide

HTML: A Beginner’s Guide, Fourth Edition. Wendy Willard.

About the Author: Wendy Willard is a freelance consultant offering design and art direction services to clients. She also teaches and writes on these topics, and is the author of several other books including Web Design: A Beginner’s Guide (also published by McGraw-Hill). She holds a degree in Illustration from Art Center College of Design in Pasadena, California, where she first learned HTML in 1995. Wendy enjoys all aspects of digital design, reading, cooking, and anything related to the Web. She lives and works in Maryland with her husband, Wyeth, and their two daughters.

About the Technical Editor: Todd Meister has been developing and using Microsoft technologies for over ten years. He’s been a technical editor on over 50 titles ranging from SQL Server to the .NET Framework. Besides serving as a technical editor for titles, he is an assistant director for Computing Services at Ball State University in Muncie, Indiana. He lives in central Indiana with his wife, Kimberly, and their four remarkable children.

New York Chicago San Francisco Lisbon London Madrid Mexico City Milan New Delhi San Juan Seoul Singapore Sydney Toronto. Copyright © 2009 by The McGraw-Hill Companies. All rights reserved. Except as permitted under the United States Copyright Act of 1976, no part of this publication may be reproduced or distributed in any form or by any means, or stored in a database or retrieval system, without the prior written permission of the publisher. ISBN: 978-0-07-161144-2 MHID: 0-07-161144-4 The material in this eBook also appears in the print version of this title: ISBN: 978-0-07-161143-5, MHID: 0-07-161143-6.

The Kst Handbook

The Kst Handbook. Copyright © 2004 The University of British Columbia. Copyright © 2005 The University of Toronto. Copyright © 2007, 2008, 2009, 2010 The University of British Columbia. Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.1 or any later version published by the Free Software Foundation; with no Invariant Sections, with no Front-Cover Texts, and with no Back-Cover Texts. A copy of the license is included in the section entitled "GNU Free Documentation License". Funding of this project was provided in part by the Canadian Space Agency.

Collaborators. Title: The Kst Handbook. Written by: Duncan Hanson, Rick Chern, Philip Rodrigues, Barth Netterfield, Yiwen Mao, and Zongyi Zhang. Date: 2010-11-29.

Contents
1 Introduction
1.1 What is Kst?
1.2 Getting Started
1.2.1 Importing Data
1.2.2 Plot Manipulation
1.2.3 Basic Analysis and the Data Manager
2 Common Tasks
2.1 Plotting Simple Graphs
2.2 Customizing Plot Settings
2.3 Curve Fitting
2.4 Generating a Histogram
2.5 Creating an Event Monitor
2.6 Creating a Power Spectrum
3 Working With Data
3.1 Data Objects Overview
3.1.1 The Data Manager
3.1.2 Creating and Deleting Data Objects
3.2 Data Types
3.2.1 Vectors

HTML and XHTML: The Definitive Guide

HTML and XHTML: The Definitive Guide. Chuck Musciano, Bill Kennedy | 680 pages | 27 Oct 2006 | O'Reilly Media, Inc, USA | 9780596527327 | English | Sebastopol, United States

In his spare time he enjoys life in Florida with his wife Cindy, daughter Courtney, and son Cole. Embedded Versus Referenced Content 5. The visibility attribute H. The list-style property 8. The file path 6. Referencing Audio, Video, and Images 5. Want to know how to make your pages look beautiful, communicate your message effectively, guide visitors through your website with ease, and get everything approved by the accessibility and usability police at the same time? The query URL 6. Font Selection and Synthesis 8. The table-layout property 8. The alt and longdesc attributes 5. Comments Starting and Ending Tags 3. The class, id, style, and title attributes 4. Forging Ahead 3. The coords attribute 6. HTML and CSS are the workhorses of web design, and using them together to build consistent, reliable web pages requires both skill and knowledge. Design Versus Intent The width attribute The http document fragment 6. Attribute Selectors 8. The clip property 8. Executable Content XHTML uses built in language defining functionality attribute. Named Frame or Window Targets Comments 3. The border attribute Contextual Selectors 8.

Html-Elements.Pdf

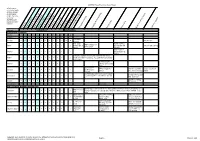

(X)HTML Best Practice Cheat Sheet. Copyright: Lars Gunther. Creative Commons: Attribution-NonCommercial-ShareAlike 2.5, http://creativecommons.org/licenses/by-nc-sa/2.5/

All elements are sorted within their groups in prioritized order. Recommended (X)HTML versions are mint-colored.

[Table flattened in extraction; only the headings and cell notes below are recoverable.]

Columns: Element; Standards (HTML 3.2, HTML 4.01 strict, HTML 4.01 transitional, HTML 4.01 frameset, XHTML 1.0 strict, XHTML 1.0 transitional, XHTML 1.0 frameset, XHTML 1.1, XHTML 1.1 Basic, XHTML 2.0, (X)HTML 5.0); Information ([B]lock/[I]nline/[T]able, [I]nvisible/[N]ormal/[R]eplaced); Semantic meaning; Usability & Accessibility; Best practice; SEO notes.

Document structure: <html>, <head>, <body>, <title>, <meta>, <link>, <style>, <script>, <noscript>, <base>, <basefont>

Non-element markup constructs: <?xml ?>, <!DOCTYPE>, <!-- -->, <![CDATA[ ]]>

Cell notes: The main content. The document's title or name. Indicates explicit relationship between this document and other resources. As such there are good uses! Make unique for every page. External style sheets are usually best. Unobtrusive DOM-scripts, please! Unnecessary with unobtrusive scripting techniques! Forbidden in XHTML 5. XML declaration. What flavour of (X)HTML is used? Code comment. Unparsed character data. Better skip. It will put MSIE <= 6 in quirks mode and is NOT obligatory. In theory best place to specify encoding for offline usage of an XHTML document.