Hypothesis Testing: the Classical Approach (Test of One Mean)

Recommended publications

Summarize — Summary Statistics

Description: summarize calculates and displays a variety of univariate summary statistics. If no varlist is specified, summary statistics are calculated for all the variables in the dataset.

Quick start
• Basic summary statistics for continuous variable v1: summarize v1
• Same as above, and include v2 and v3: summarize v1-v3
• Same as above, and provide additional detail about the distribution: summarize v1-v3, detail
• Summary statistics reported separately for each level of catvar: by catvar: summarize v1
• With frequency weight wvar: summarize v1 [fweight=wvar]

Menu: Statistics > Summaries, tables, and tests > Summary and descriptive statistics > Summary statistics

Syntax: summarize [varlist] [if] [in] [weight] [, options]

Options (Main)
• detail: display additional statistics
• meanonly: suppress the display; calculate only the mean; programmer’s option
• format: use variable’s display format
• separator(#): draw separator line after every # variables; default is separator(5)
• display options: control spacing, line width, and base and empty cells

varlist may contain factor variables; see [U] 11.4.3 Factor variables. varlist may contain time-series operators; see [U] 11.4.4 Time-series varlists. by, collect, rolling, and statsby are allowed; see [U] 11.1.10 Prefix commands. aweights, fweights, and iweights are allowed. However, iweights may not be used with the detail option; see [U] 11.1.6 weight.

detail produces additional statistics, including skewness, kurtosis, the four smallest and four largest values, and various percentiles. meanonly, which is allowed only when detail is not specified, suppresses the display of results and calculation of the variance.

U3 Introduction to Summary Statistics

Introduction to Summary Statistics (Project Lead The Way, Inc., Copyright 2010)

Statistics
• The collection, evaluation, and interpretation of data
• Statistical analysis of measurements can help verify the quality of a design or process

Summary Statistics
• Central tendency: the “center” of a distribution of data (mean, median, mode)
• Variation: the spread of values around the center (range, standard deviation, interquartile range)
• Distribution: a summary of the frequency of values (frequency tables, histograms, normal distribution)

Mean (Central Tendency)
• The mean is the sum of the values of a set of data divided by the number of values in that data set:

$$\mu = \frac{\sum x_i}{N}$$

where $\mu$ = mean value, $x_i$ = individual data value, $\sum x_i$ = summation of all data values, and $N$ = number of data values in the data set.

• Data set: 3 7 12 17 21 21 23 27 32 36 44
• Sum of the values = 243
• Number of values = 11

$$\text{Mean} = \mu = \frac{\sum x_i}{N} = \frac{243}{11} = 22.09$$

A Note about Rounding in Statistics
• General rule: don’t round until the final answer. If you are writing intermediate results you may round values, but keep the unrounded number in memory.
• Mean: round to one more decimal place than the original data.
• Standard deviation: round to one more decimal place than the original data.
• Reported: Mean = 22.1
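The mean and the rounding rule in this excerpt can be checked with a short Python sketch. The variable names are illustrative and not part of the slides; the only data used is the 11-value set quoted above.

```python
# Data set quoted in the excerpt (whole numbers, so zero decimal places).
data = [3, 7, 12, 17, 21, 21, 23, 27, 32, 36, 44]


def mean(values):
    """Arithmetic mean: sum of the values divided by the number of values."""
    return sum(values) / len(values)


mu = mean(data)            # keep the unrounded value for further calculations
data_decimals = 0          # assumption: the original data are integers
reported = round(mu, data_decimals + 1)  # one more decimal place than the data

print(sum(data), len(data))  # 243 11
print(reported)              # 22.1
```

Only the reported value is rounded; `mu` stays unrounded, which is what the "don't round until the final answer" rule asks for.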

Descriptive Statistics

Descriptive Statistics. Fall 2001, Professor Paul Glasserman, B6014: Managerial Statistics, 403 Uris Hall.

Histograms
1. A histogram is a graphical display of data showing the frequency of occurrence of particular values or ranges of values. In a histogram, the horizontal axis is divided into bins, representing possible data values or ranges. The vertical axis represents the number (or proportion) of observations falling in each bin. A bar is drawn in each bin to indicate the number (or proportion) of observations corresponding to that bin. You have probably seen histograms used, e.g., to illustrate the distribution of scores on an exam.
2. All histograms are bar graphs, but not all bar graphs are histograms. For example, we might display average starting salaries by functional area in a bar graph, but such a figure would not be a histogram. Why not? Because the Y-axis values do not represent relative frequencies or proportions, and the X-axis values do not represent value ranges (in particular, the order of the bins is irrelevant).

Measures of Central Tendency
1. Let $X_1, \ldots, X_n$ be data points, such as the result of n measurements or observations. What one number best characterizes or summarizes these values? The answer depends on the context. Some familiar summary statistics are these:
• The mean is given by the arithmetic average $\bar{X} = (X_1 + \cdots + X_n)/n$. (Notation: We will often write $\sum_{i=1}^{n} X_i$ for $X_1 + \cdots + X_n$. The symbol $\sum_{i=1}^{n} X_i$ is read “the sum from i equals 1 up to n of $X_i$.”)
• The median is larger than one half of the observations and smaller than the other half.

Measures of Dispersion for Multidimensional Data

European Journal of Operational Research 251 (2016) 930–937. Computational Intelligence and Information Management.

Measures of dispersion for multidimensional data. Adam Kołacz (a), Przemysław Grzegorzewski (a, b). (a) Faculty of Mathematics and Computer Science, Warsaw University of Technology, Koszykowa 75, Warsaw 00–662, Poland. (b) Systems Research Institute, Polish Academy of Sciences, Newelska 6, Warsaw 01–447, Poland.

Article history: Received 22 February 2015; accepted 4 January 2016; available online 11 January 2016.

Keywords: Descriptive statistics, Dispersion, Interquartile range, Multidistance, Spread.

Abstract: We propose an axiomatic definition of a dispersion measure that could be applied for any finite sample of k-dimensional real observations. Next we introduce a taxonomy of the dispersion measures based on the possible behavior of these measures with respect to new upcoming observations. This way we get two classes of unstable and absorptive dispersion measures. We examine their properties and illustrate them by examples. We also consider a relationship between multidimensional dispersion measures and multidistances. Moreover, we examine new interesting properties of some well-known dispersion measures for one-dimensional data like the interquartile range and a sample variance. © 2016 Elsevier B.V. All rights reserved.

1. Introduction: Various summary statistics are always applied wherever decisions are based on sample data. The main goal of those characteristics is to deliver synthetic information on basic features of a data set under study. [...] are intended for use. It is also worth mentioning that several terms are used in the literature as regards dispersion measures, like measures of variability, scatter, spread or scale. Some authors reserve the notion of the dispersion measure only to those cases when variability is considered relative to a given fixed point (like a sam...

Summary Statistics, Distributions of Sums and Means

Summary statistics, distributions of sums and means. Joe Felsenstein, Department of Genome Sciences and Department of Biology.

Quantiles: In both empirical distributions and in the underlying distribution, it may help us to know the points where a given fraction of the distribution lies below (or above) that point. In particular:
• The 2.5% point
• The 5% point
• The 25% point (the first quartile)
• The 50% point (the median)
• The 75% point (the third quartile)
• The 95% point (or upper 5% point)
• The 97.5% point (or upper 2.5% point)

Note that if a distribution has a small fraction of very big values far out in one tail (such as the distributions of wealth of individuals or families), the mean may not be a good “typical” value; the median will do much better. (For a symmetric distribution the median is the mean.)

The mean: The mean is the average of points. If the distribution is the theoretical one, it is called the expectation; it is the theoretical mean we would be expected to get if we drew infinitely many points from that distribution. For a sample of points $x_1, x_2, \ldots, x_{100}$ the mean is simply their average

$$\bar{x} = (x_1 + x_2 + x_3 + \cdots + x_{100}) / 100$$

For a distribution with possible values 0, 1, 2, 3, ... where value k has occurred a fraction $f_k$ of the time, the mean weights each of these by the fraction of times it has occurred (then in effect divides by the sum of these fractions, which however is actually 1):

$$\bar{x} = 0\,f_0 + 1\,f_1 + 2\,f_2 + \cdots$$
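As a small illustration of the frequency-weighted form of the mean, the following Python sketch compares it with the plain average on a made-up sample. The sample values are hypothetical and not taken from the slides; exact fractions are used so the two results can be compared without rounding error.

```python
from collections import Counter
from fractions import Fraction

# Hypothetical sample of counts, invented for illustration.
sample = [0, 1, 1, 1, 1, 2, 2, 2, 3, 3]
n = len(sample)

# Plain average: sum of the points divided by how many there are.
plain_mean = Fraction(sum(sample), n)

# f_k: the fraction of the time value k occurred.
freq = {k: Fraction(count, n) for k, count in Counter(sample).items()}

# Weighted form: 0*f_0 + 1*f_1 + 2*f_2 + ...
weighted_mean = sum(k * f for k, f in freq.items())

print(plain_mean, weighted_mean, sum(freq.values()))  # 8/5 8/5 1
```

Because the fractions sum to 1, dividing by their sum changes nothing, which is the point made in the parenthetical remark above.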

Numerical Summary Values for Quantitative Data

Chapter 3. Descriptive Statistics II: Numerical Summary Values. 3.1 Numerical summary values for quantitative data. For many purposes a few well-chosen numerical summary values (statistics) will suffice as a description of the distribution of a quantitative variable. A statistic is a numerical characteristic of a sample. More formally, a statistic is a numerical quantity computed from the values of a variable, or variables, corresponding to the units in a sample. Thus a statistic serves to quantify some interesting aspect of the distribution of a variable in a sample. Summary statistics are particularly useful for comparing and contrasting the distribution of a variable for two different samples. If we plan to use a small number of summary statistics to characterize a distribution or to compare two distributions, then we first need to decide which aspects of the distribution are of primary interest. If the distributions of interest are essentially mound shaped with a single peak (unimodal), then there are three aspects of the distribution which are often of primary interest. The first aspect of the distribution is its location on the number line. Generally, when speaking of the location of a distribution we are referring to the location of the “center” of the distribution. The location of the center of a symmetric, mound shaped distribution is clearly the point of symmetry. There is some ambiguity in specifying the location of the center of an asymmetric, mound shaped distribution and we shall see that there are at least two standard ways to quantify location in this context.

Fan Chart: Methodology and Its Application to Inflation Forecasting in India

W P S (DEPR) : 5 / 2011. RBI WORKING PAPER SERIES. Fan Chart: Methodology and its Application to Inflation Forecasting in India. Nivedita Banerjee and Abhiman Das. DEPARTMENT OF ECONOMIC AND POLICY RESEARCH, MAY 2011. The Reserve Bank of India (RBI) introduced the RBI Working Papers series in May 2011. These papers present research in progress of the staff members of RBI and are disseminated to elicit comments and further debate. The views expressed in these papers are those of authors and not that of RBI. Comments and observations may please be forwarded to authors. Citation and use of such papers should take into account its provisional character. Copyright: Reserve Bank of India 2011. Abstract: Ever since Bank of England first published its inflation forecasts in February 1996, the Fan Chart has been an integral part of inflation reports of central banks around the world. The Fan Chart is basically used to improve presentation: to focus attention on the whole of the forecast distribution, rather than on small changes to the central projection. However, forecast distribution is a technical concept originated from the statistical sampling distribution. In this paper, we have presented the technical details underlying the derivation of Fan Chart used in representing the uncertainty in inflation forecasts. The uncertainty occurs because of the inter-play of the macro-economic variables affecting inflation. The uncertainty in the macro-economic variables is based on their historical standard deviation of the forecast errors, but we also allow these to be subjectively adjusted.

Finding Basic Statistics Using Minitab

1. Put your data values in one of the columns of the Minitab worksheet.
2. Add a variable name in the gray box just above the data values.
3. Click on “Stat”, then click on “Basic Statistics”, and then click on “Display Descriptive Statistics”.
4. Choose the variable you want the basic statistics for and click on “Select”.
5. Click on the “Statistics” box and then check the box next to each statistic you want to see, and uncheck the boxes next to those you do not want to see.
6. Click on “OK” in that window and click on “OK” in the next window.
7. The values of all the statistics you selected will appear in the Session window.

Example (Navidi & Monk, Elementary Statistics, 2nd edition, #31 p. 143, 1st 4 columns): This data gives the number of tribal casinos in a sample of 16 states.

3 7 14 114 2 3 7 8 26 4 3 14 70 3 21 1

Open Minitab and enter the data under C1. The table below shows a portion of the entered data.

| Row | C1 (Casinos) |
|-----|--------------|
| 1   | 3            |
| 2   | 7            |
| 3   | 14           |
| 4   | 114          |
| 5   | 2            |
| 6   | 3            |
| 7   | 7            |
| 8   | 8            |
| 9   | 26           |
| 10  | 4            |

Now click on “Stat” and then choose “Basic Statistics” and “Display Descriptive Statistics”. Click in the box under “Variables:”, choose C1 from the window at left, and then click on the “Select” button. Next click on the “Statistics” button and choose which statistics you want to find. We will usually be interested in the following statistics: mean, standard error of the mean, standard deviation, minimum, maximum, range, first quartile, median, third quartile, interquartile range, mode, and skewness.
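For readers without Minitab, most of the statistics listed can be approximated with Python's standard `statistics` module. This is a rough sketch rather than a reproduction of Minitab's output: quartile conventions differ between packages, so the quartiles from `statistics.quantiles` (default "exclusive" method) are an assumption and may not match Minitab's figures. Skewness is omitted because the standard library has no function for it.

```python
import statistics

# Number of tribal casinos in the sample of 16 states (from the example).
casinos = [3, 7, 14, 114, 2, 3, 7, 8, 26, 4, 3, 14, 70, 3, 21, 1]
n = len(casinos)

mean = statistics.mean(casinos)
stdev = statistics.stdev(casinos)        # sample standard deviation (n - 1)
se_mean = stdev / n ** 0.5               # standard error of the mean
q1, median, q3 = statistics.quantiles(casinos, n=4)  # "exclusive" method

summary = {
    "mean": mean,
    "se_mean": se_mean,
    "stdev": stdev,
    "minimum": min(casinos),
    "maximum": max(casinos),
    "range": max(casinos) - min(casinos),
    "q1": q1,
    "median": median,
    "q3": q3,
    "iqr": q3 - q1,
    "mode": statistics.mode(casinos),
}

for name, value in summary.items():
    print(f"{name:>8}: {value}")
```

The mean (18.75) sits far above the median (7) because of the two large values, 70 and 114, which is a useful reminder to look at both.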

Measures of Dispersion

MEASURES OF DISPERSION

Measures of Dispersion
• While measures of central tendency indicate what value of a variable is (in one sense or other) “average” or “central” or “typical” in a set of data, measures of dispersion (or variability or spread) indicate (in one sense or other) the extent to which the observed values are “spread out” around that center, that is, how “far apart” observed values typically are from each other and therefore from some average value (in particular, the mean). Thus:
– if all cases have identical observed values (and thereby are also identical to [any] average value), dispersion is zero;
– if most cases have observed values that are quite “close together” (and thereby are also quite “close” to the average value), dispersion is low (but greater than zero); and
– if many cases have observed values that are quite “far away” from many others (or from the average value), dispersion is high.
• A measure of dispersion provides a summary statistic that indicates the magnitude of such dispersion and, like a measure of central tendency, is a univariate statistic.

Importance of the Magnitude of Dispersion Around the Average
• Dispersion around the mean test score.
• Baltimore and Seattle have about the same mean daily temperature (about 65 degrees) but very different dispersions around that mean.
• Dispersion (inequality) around average household income.

[Figures: Hypothetical Ideological Dispersion; Dispersion in Percent Democratic in CDs]

Measures of Dispersion
• Because dispersion is concerned with how “close together” or “far apart” observed values are (i.e., with the magnitude of the intervals between them), measures of dispersion are defined only for interval (or ratio) variables,
– or, in any case, variables we are willing to treat as interval (like IDEOLOGY in the preceding charts).

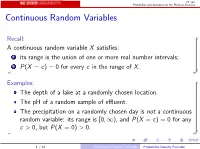

Continuous Random Variables

ST 380 Probability and Statistics for the Physical Sciences. Continuous Random Variables.

Recall: A continuous random variable X satisfies: (1) its range is the union of one or more real number intervals; (2) P(X = c) = 0 for every c in the range of X. Examples: the depth of a lake at a randomly chosen location; the pH of a random sample of effluent. The precipitation on a randomly chosen day is not a continuous random variable: its range is [0, ∞), and P(X = c) = 0 for any c > 0, but P(X = 0) > 0.

Probability Density Function. Discretized data: Suppose that we measure the depth of the lake, but round the depth off to some unit. The rounded value Y is a discrete random variable; we can display its probability mass function as a bar graph, because each mass actually represents an interval of values of X. In R: source("discretize.R"), then discretize(0.5).

As the rounding unit becomes smaller, the bar graph more accurately represents the continuous distribution: discretize(0.25), discretize(0.1). When the rounding unit is very small, the bar graph approximates a smooth function: discretize(0.01), then plot(f, from = 1, to = 5).

The probability that X is between two values a and b, P(a ≤ X ≤ b), can be approximated by P(a ≤ Y ≤ b).
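The contents of discretize.R are not shown in the excerpt, so the following Python sketch is an independent illustration of the same idea, not a port of that script. It assumes, purely for the example, that the depth X is normal with mean 3 and standard deviation 0.5, and compares P(a ≤ Y ≤ b) for the rounded value Y with the exact P(a ≤ X ≤ b) at several rounding units.

```python
import math
from statistics import NormalDist

# Assumption for illustration only: depth X ~ Normal(mean=3, sd=0.5).
X = NormalDist(mu=3.0, sigma=0.5)


def prob_discretized(a, b, unit):
    """P(a <= Y <= b), where Y is X rounded to the nearest multiple of unit.

    Each possible value y of Y carries the probability that X falls in
    the interval [y - unit/2, y + unit/2].
    """
    # Small tolerance guards against floating-point error in a/unit, b/unit.
    k_lo = math.ceil(a / unit - 1e-9)
    k_hi = math.floor(b / unit + 1e-9)
    total = 0.0
    for k in range(k_lo, k_hi + 1):
        y = k * unit
        total += X.cdf(y + unit / 2) - X.cdf(y - unit / 2)
    return total


a, b = 2.5, 3.5
exact = X.cdf(b) - X.cdf(a)
units = (0.5, 0.25, 0.1, 0.01)
errors = {u: abs(prob_discretized(a, b, u) - exact) for u in units}

for u in units:
    print(f"unit={u:<5} approx={prob_discretized(a, b, u):.4f} "
          f"exact={exact:.4f}")
```

Under these assumptions the approximation error shrinks as the rounding unit gets smaller, matching the behaviour described for the bar graphs.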

Binomial and Normal Distributions

Probability distributions. Introduction.

What is a probability? If I perform n experiments and a particular event occurs on r occasions, the “relative frequency” of this event is simply r/n. This is an experimental observation that gives us an estimate of the probability; there will be some random variation above and below the actual probability. If I do a large number of experiments, the relative frequency gets closer to the probability. We define the probability as meaning the limit of the relative frequency as the number of experiments tends to infinity. Usually we can calculate the probability from theoretical considerations (number of beads in a bag, number of faces of a dice, tree diagram, any other kind of statistical model).

What is a random variable? A random variable (r.v.) is the value that might be obtained from some kind of experiment or measurement process in which there is some random uncertainty. A discrete r.v. takes a finite number of possible values with distinct steps between them. A continuous r.v. takes an infinite number of values which vary smoothly. We talk not of the probability of getting a particular value but of the probability that a value lies between certain limits. Random variables are given names which start with a capital letter. An r.v. is a numerical value (e.g. a head is not a value but the number of heads in 10 throws is an r.v.). Examples: the score when throwing a dice (discrete); the air temperature at a random time and date (continuous); the age of a cat chosen at random.
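The claim that the relative frequency r/n approaches the probability as n grows can be illustrated with a small simulation. This is a sketch under assumed conditions: a fair six-sided die, the event "score is 6", and a fixed random seed chosen only to make the run repeatable. None of these specifics come from the excerpt.

```python
import random


def relative_frequency(n, seed=0):
    """Throw a fair die n times and return r/n for the event 'score is 6'."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    r = sum(1 for _ in range(n) if rng.randint(1, 6) == 6)
    return r / n


probability = 1 / 6  # theoretical value from counting the faces

for n in (10, 1_000, 100_000):
    freq = relative_frequency(n)
    print(f"n={n:>7}  r/n={freq:.4f}  difference={abs(freq - probability):.4f}")
```

For small n the relative frequency can be far from 1/6; with many throws it typically settles close to it, though any single run still shows some random variation.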

On Median and Quartile Sets of Ordered Random Variables*

ISSN 2590-9770. The Art of Discrete and Applied Mathematics 3 (2020) #P2.08. https://doi.org/10.26493/2590-9770.1326.9fd (Also available at http://adam-journal.eu)

On median and quartile sets of ordered random variables*

Iztok Banič, Faculty of Natural Sciences and Mathematics, University of Maribor, Koroška 160, SI-2000 Maribor, Slovenia, and Institute of Mathematics, Physics and Mechanics, Jadranska 19, SI-1000 Ljubljana, Slovenia, and Andrej Marušič Institute, University of Primorska, Muzejski trg 2, SI-6000 Koper, Slovenia. Janez Žerovnik, Faculty of Mechanical Engineering, University of Ljubljana, Aškerčeva 6, SI-1000 Ljubljana, Slovenia, and Institute of Mathematics, Physics and Mechanics, Jadranska 19, SI-1000 Ljubljana, Slovenia.

Received 30 November 2018, accepted 13 August 2019, published online 21 August 2020.

Abstract: We give new results about the set of all medians, the set of all first quartiles and the set of all third quartiles of a finite dataset. We also give new and interesting results about relationships between these sets. We also use these results to provide an elementary correctness proof of the Langford’s doubling method.

Keywords: Statistics, probability, median, first quartile, third quartile, median set, first quartile set, third quartile set. Math. Subj. Class. (2020): 62-07, 60E05, 60-08, 60A05, 62A01.

1 Introduction: Quantiles play a fundamental role in statistics: they are the critical values used in hypothesis testing and interval estimation. Often they are the characteristics of distributions we usually wish to estimate. The use of quantiles as primary measure of performance has ...

*This work was supported in part by the Slovenian Research Agency (grants J1-8155, N1-0071, P2-0248, and J1-1693). This work is licensed under https://creativecommons.org/licenses/by/4.0/