ML-Ask: Open Source Affect Analysis Software for Textual Input in Japanese

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


[PDF] Beginning Raku

Beginning Raku, Arne Sommer, Version 1.00, 22.12.2019

Table of Contents

- Introduction: The Little Print; Reading Tips; Content
- 1. About Raku: 1.1. Rakudo; 1.2. Running Raku in the browser; 1.3. REPL; 1.4. One Liners; 1.5. Running Programs; 1.6. Error messages; 1.7. use v6; 1.8. Documentation; 1.9. More Information; 1.10. Speed
- 2. Variables, Operators, Values and Procedures: 2.1. Output with say and print; 2.2. Variables; 2.3. Comments; 2.4. Non-destructive operators; 2.5. Numerical Operators; 2.6. Operator Precedence; 2.7. Values; 2.8. Variable Names; 2.9. constant; 2.10. Sigilless variables; 2.11. True and False; 2.12. //
- 3. The Type System: 3.1. Strong Typing; 3.2. ^mro (Method Resolution Order); 3.3. Everything is an Object; 3.4. Special Values; 3.5. :D (Defined Adverb); 3.6. Type Conversion; 3.7. Comparison Operators
- 4. Control Flow: 4.1. Blocks; 4.2. Ranges (A Short Introduction); 4.3. loop; 4.4. for; 4.5. Infinite Loops; 4.6. while; 4.7. until; 4.8. repeat while; 4.9. repeat until; 4.10. Loop Summary; 4.11. if ...

Files: Cpan/Test-Harness/* Copyright: Copyright (C) 2007-2011

Files: cpan/Test-Harness/* Copyright: Copyright (c) 2007-2011, Andy Armstrong <[email protected]>. All rights reserved. License: GPL-1+ or Artistic Comment: This module is free software; you can redistribute it and/or modify it under the same terms as Perl itself.

Files: cpan/Test-Harness/lib/TAP/Parser.pm Copyright: Copyright 2006-2008 Curtis "Ovid" Poe, all rights reserved. License: GPL-1+ or Artistic Comment: This program is free software; you can redistribute it and/or modify it under the same terms as Perl itself.

Files: cpan/Test-Harness/lib/TAP/Parser/YAMLish/Reader.pm Copyright: Copyright 2007-2011 Andy Armstrong. Portions copyright 2006-2008 Adam Kennedy. License: GPL-1+ or Artistic Comment: This program is free software; you can redistribute it and/or modify it under the same terms as Perl itself.

Files: cpan/Test-Simple/* Copyright: Copyright 2001-2008 by Michael G Schwern <[email protected]>. License: GPL-1+ or Artistic Comment: This program is free software; you can redistribute it and/or modify it under the same terms as Perl itself.

Files: cpan/Test-Simple/lib/Test/Builder.pm Copyright: Copyright 2002-2008 by chromatic <[email protected]> and Michael G Schwern <[email protected]>. License: GPL-1+ or Artistic Comment: This program is free software; you can redistribute it and/or modify it under the same terms as Perl itself.

Files: cpan/Test-Simple/lib/Test/Builder/Tester/Color.pm Copyright: Copyright Mark Fowler <[email protected]> 2002. License: GPL-1+ or Artistic Comment: This program is free software; you can redistribute it and/or modify it under the same terms as Perl itself.

MySQL NDB Cluster 7.5.16 (And Later)

Licensing Information User Manual: MySQL NDB Cluster 7.5.16 (and later)

Table of Contents

- Licensing Information
- Licenses for Third-Party Components: ANTLR 3; argparse; AWS SDK for C++; Boost Library; Corosync; Cyrus SASL; dtoa.c; Editline Library (libedit); Facebook Fast Checksum Patch ...

Learning Perl Through Examples Part 2 L1110@BUMC 2/22/2017

Learning Perl Through Examples Part 2, L1110@BUMC, 2/22/2017. Yun Shen, Programmer Analyst, [email protected], IS&T Research Computing Services, Spring 2017. www.perl.org

Tutorial Resource: Before we start, please note that all the code and supporting documents are accessible through http://rcs.bu.edu/examples/perl/tutorials/

Sign In Sheet: We prepared a sign-in sheet for everyone to sign. We do this for internal management and quality control, so please SIGN IN if you haven’t done so.

Evaluation: One last piece of information before we start. DON’T FORGET TO GO TO http://rcs.bu.edu/survey/tutorial_evaluation.html and leave your feedback for this tutorial (both good and bad are welcome, as long as it is honest. Thank you).

Today’s Topic

- Basics on creating your code
- About Today’s Example
- Learn Through Example 1: fanconi_example_io.pl
- Learn Through Example 2: fanconi_example_str_process.pl
- Learn Through Example 3: fanconi_example_gene_anno.pl
- Extra Examples (if time permits)

Basics on creating your code: How to combine specs, tools, modules and knowledge.

KoNLPy Documentation Release 0.4.1

KoNLPy Documentation, Release 0.4.1. Lucy Park. February 25, 2015.

Contents: 1 Standing on the shoulders of giants (p. 2); 2 License (p. 3); 3 Contribute (p. 4); 4 Getting started (p. 5): 4.1 What is NLP? (p. 5), 4.2 What do I need to get started? (p. 5); 5 User guide (p. 6): 5.1 Installation (p. 6), 5.2 Morphological analysis and POS tagging (p. 7), 5.3 Data (p. 10), 5.4 Examples (p. 11), 5.5 Running tests (p. 23), 5.6 References (p. 23); 6 API (p. 26): 6.1 konlpy Package (p. 26); 7 Indices and tables (p. 34); Python Module Index (p. 35).

(https://travis-ci.org/konlpy/konlpy) (https://readthedocs.org/projects/konlpy/?badge=latest)

KoNLPy (pronounced “ko en el PIE”) is a Python package for natural language processing (NLP) of the Korean language. For installation directions, see here (page 6). For users new to NLP, go to Getting started (page 5). For step-by-step instructions, follow the User guide (page 6). For specific descriptions of each module, see the API (page 26) documents.

    >>> from konlpy.tag import Kkma
    >>> from konlpy.utils import pprint
    >>> kkma = Kkma()
    >>> pprint(kkma.sentences(u'네, 안녕하세요. 반갑습니다.'))
    [네, 안녕하세요.., 반갑습니다.]
    >>> pprint(kkma.nouns(u'질문이나 건의사항은 깃헙 이슈 트래커에 남겨주세요.'))
    [질문, 건의, 건의사항, 사항, 깃헙, 이슈, 트래커]
    >>> pprint(kkma.pos(u'오류보고는 실행환경, 에러메세지와함께 설명을 최대한상세히!^^'))
    [(오류, NNG), (보고, NNG), (는, JX), (실행, NNG), (환경, NNG), (,, SP), (에러, NNG), (메세지, NNG), (와, JKM), (함께, MAG), (설명, NNG), (을, JKO), (최대한, NNG), (상세히, MAG), (!, SF), (^^, EMO)]

CHAPTER 1: Standing on the shoulders of giants

Korean, the 13th most widely spoken language in the world (http://www.koreatimes.co.kr/www/news/nation/2014/05/116_157214.html), is a beautiful, yet complex language.

An Introduction to Raku

Perl6 / Raku: An Introduction

The nuts and bolts

- Spec tests
  - Complete test suite for the language.
  - Anything that passes the suite is Raku.
- Rakudo
  - Compiler, compiles Raku to be run on a number of target VMs (92% written in Raku)
- MoarVM
  - Short for "Metamodel On A Runtime"
  - Threaded, garbage collected VM optimised for Raku
- JVM
  - The Java Virtual Machine.
- Rakudo JS (Experimental)
  - Compiles your Raku to a JavaScript file that can run in a browser

Multiple Programming Paradigms: What’s your poison?

- Functional

LAMP and the REST Architecture Step by Step Analysis of Best Practice

LAMP and the REST Architecture Step by step analysis of best practice Santiago Gala High Sierra Technology S.L.U. Minimalistic design using a Resource Oriented Architecture What is a Software Platform (Ray Ozzie ) ...a relevant and ubiquitous common service abstraction Creates value by leveraging participants (e- cosystem) Hardware developers (for OS level platforms) Software developers Content developers Purchasers Administrators Users Platform Evolution Early stage: not “good enough” solution differentiation, innovation, value flows Later: modular architecture, commoditiza- tion, cloning no premium, just speed to market and cost driven The platform effect - ossification, followed by cloning - is how Chris- tensen-style modularity comes to exist in the software industry. What begins as a value-laden proprietary platform becomes a replaceable component over time, and the most successful of these components finally define the units of exchange that power commodity networks. ( David Stutz ) Platform Evolution (II) Example: PostScript Adobe Apple LaserWriter Aldus Pagemaker Desktop Publishing Linotype imagesetters NeWS (Display PostScript) OS X standards (XSL-FO -> PDF, Scribus, OOo) Software Architecture ...an abstraction of the runtime elements of a software system during some phase of its oper- ation. A system may be composed of many lev- els of abstraction and many phases of opera- tion, each with its own software architecture. Roy Fielding (REST) What is Architecture? Way to partition a system in components -

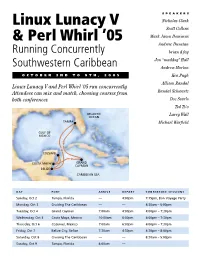

Linux Lunacy V & Perl Whirl

Linux Lunacy V & Perl Whirl ’05, Running Concurrently. Southwestern Caribbean, OCTOBER 2ND TO 9TH, 2005.

SPEAKERS: Nicholas Clark, Scott Collins, Mark Jason Dominus, Andrew Dunstan, brian d foy, Jon “maddog” Hall, Andrew Morton, Ken Pugh, Allison Randal, Randal Schwartz, Doc Searls, Ted Ts’o, Larry Wall, Michael Warfield.

Linux Lunacy V and Perl Whirl ’05 run concurrently. Attendees can mix and match, choosing courses from both conferences.

| DAY | PORT | ARRIVE | DEPART | CONFERENCE SESSIONS |
| --- | --- | --- | --- | --- |
| Sunday, Oct 2 | Tampa, Florida | - | 4:00pm | 7:15pm, Bon Voyage Party |
| Monday, Oct 3 | Cruising The Caribbean | - | - | 8:30am – 5:00pm |
| Tuesday, Oct 4 | Grand Cayman | 7:00am | 4:00pm | 4:00pm – 7:30pm |
| Wednesday, Oct 5 | Costa Maya, Mexico | 10:00am | 6:00pm | 6:00pm – 7:30pm |
| Thursday, Oct 6 | Cozumel, Mexico | 7:00am | 6:00pm | 6:00pm – 7:30pm |
| Friday, Oct 7 | Belize City, Belize | 7:30am | 4:30pm | 4:30pm – 8:00pm |
| Saturday, Oct 8 | Cruising The Caribbean | - | - | 8:30am – 5:00pm |
| Sunday, Oct 9 | Tampa, Florida | 8:00am | - | |

Seminars at a Glance

You may choose any combination of full-, half-, or quarter-day seminars for a total of two-and-one-half (2.5) days’ worth of sessions. The conference fee is $995 and includes ...

Regular Expression Mastery (half day). Speaker: Mark Jason Dominus. Almost everyone has written a regex that failed to match something they wanted it to, or that matched something they thought it shouldn’t, and ...

Programming with Iterators and Generators (half day). Speaker: Mark Jason Dominus. Sometimes you’ll write a function that takes too long to run because it produces too much useful information.

Current Issues in Perl Programming Overview

Current Issues In Perl Programming. Lukas Thiemeier, DESY, Zeuthen, 2011-04-26.

Overview

- Introduction
- Moose: modern object-orientation in Perl
- DBIx::Class: comfortable and flexible database access
- Catalyst: an MVC web application framework

Lukas Thiemeier | Current issues in Perl programming | 2011-04-26 | Page 2

Introduction

- What is this talk about?
  - Modern Perl can do more than most people know
  - A quick overview of some modern features
  - Illustrated with some short examples
- What is this talk not about?
  - Not an introduction to the Perl programming language
  - Not a Perl tutorial
  - Not a complete list of all current issues in Perl 5
  - Not a complete HowTo for the covered topics

Overview (Moose: modern object-orientation in Perl)

- About Moose
- Creating and extending classes
- Some advanced features

About Moose

- “A postmodern object system for Perl 5”
- Based on Class::MOP, a metaclass system for Perl 5
- Look and feel similar to the Perl 6 object syntax

“The main goal of Moose is to make Perl 5 Object Oriented programming easier, more consistent and less tedious. With Moose you can think more about what you want to do and less about the mechanics of OOP.”

Creating Classes

A very simple Moose class: create a file called “MyAnimalClass.pm” with the following content:

    package MyAnimalClass;
    use Moose;
    no Moose;
    1;

The package name is used as class name.

How the Camel Is De-Cocooning (YAPCNA)

How the Camel is de-cocooning. Elizabeth Mattijsen, YAPC::NA, 23 June 2014.

The Inspiration: cocoon?

Perl 5 Recap: 2000 - 2010

- 2000 - Perl 5.6
- 2002 - Perl 5.8
- 2007 - Perl 5.10
- 2010 - Perl 5.12 + yearly release
- The lean years have passed!

Perl 6 Recap: 2000 - 2010

- Camel Herders Meeting / Request for Comments
- Apocalypses, Exegeses, Synopses
- Parrot as a VM for everybody
- Pugs (on Haskell) / Perl 6 test-suite
- Rakudo (on Parrot) / Niecza (on mono/.NET)
- Nothing “Production Ready”

The 0’s - Cocooning Years

- Perl was busy with itself
- Redefining itself
- Re-inventing itself
- What is Perl?
- These years have passed!

Not your normal de-cocooning: Perl 5 and Perl 6 will co-exist for a long time to come!

Perl 5 in the 10’s (slide overlay: 5.20 is out! Go get it! and use it!)

- A new release every year!
- Many optimisations, internal code cleanup!
- Perl 6-like features: say, state, given/when, ~~, //, …, package {}, lexical subs, sub signatures
- Perl 6-like Modules: Moose / Moo / Mouse, Method::Signatures, Promises
- and a Monthly development release

Perl 6 in the 10’s

- Niecza more feature-complete, initially
- Not Quite Perl (NQP) developed and stand-alone
- 6model on NQP with multiple backends
- MoarVM - a Virtual Machine for Perl 6
- Rakudo runs on Parrot, JVM, MoarVM
- also a Monthly development release

Co-existence? Yes! But Perl 6 will become larger and be more future proof!

Cool Perl 6 features in Perl 5

- say
- yada yada yada (…)
- state variables
- defined-or (//)
- lexical subs
- subroutine signatures
- OK as long as it doesn’t involve types

`print "Foo\n";` prints `Foo`, and `say "Foo";` also prints `Foo`.

`sub a { ..`

Learning to Generate Pseudo-Code from Source Code Using Statistical Machine Translation

Learning to Generate Pseudo-code from Source Code using Statistical Machine Translation. Yusuke Oda, Hiroyuki Fudaba, Graham Neubig, Hideaki Hata, Sakriani Sakti, Tomoki Toda, and Satoshi Nakamura. Graduate School of Information Science, Nara Institute of Science and Technology, 8916-5 Takayama, Ikoma, Nara 630-0192, Japan. foda.yusuke.on9, fudaba.hiroyuki.ev6, neubig, hata, ssakti, tomoki, [email protected]

Abstract: Pseudo-code written in natural language can aid the comprehension of source code in unfamiliar programming languages. However, the great majority of source code has no corresponding pseudo-code, because pseudo-code is redundant and laborious to create. If pseudo-code could be generated automatically and instantly from given source code, we could allow for on-demand production of pseudo-code without human effort. In this paper, we propose a method to automatically generate pseudo-code from source code, specifically adopting the statistical machine translation (SMT) framework. SMT, which was originally designed to translate between two natural languages, allows us to automatically learn the relationship between source code/pseudo-code pairs, making it possible to create a ...

... comprehension of beginners because it explicitly describes what the program is doing, but is more readable than an unfamiliar programming language.

Fig. 1 shows an example of Python source code, and English pseudo-code that describes each corresponding statement in the source code. If the reader is a beginner at Python (or a beginner at programming itself), the left side of Fig. 1 may be difficult to understand. On the other hand, the right side of the figure can be easily understood by most English speakers, and we can also learn how to write specific operations in Python (e.g.

“Computer Programming IV” As Capstone Design and Laboratory Attachment Shoichi Yokoyama† Yamagata University, Yonezawa, Japan

Journal of Engineering Education Research, Vol. 15, No. 5, pp. 31~35, September, 2012

“Computer Programming IV” as Capstone Design and Laboratory Attachment. Shoichi Yokoyama†, Yamagata University, Yonezawa, Japan.

ABSTRACT: A new obligatory subject, Computer Programming IV, is organized in the Department of Informatics, Faculty of Engineering, Yamagata University. The purposes of the subject are as follows: (1) Attachment to each laboratory for the bachelor thesis was usually at the initial stage of the student’s fourth academic year. This subject actually moves up the attachment because students are tentatively attached to a laboratory for this subject. The interval to complete their bachelor thesis is extended by half a year. (2) In each laboratory, students cooperate with each other to complete their project. The project becomes the capstone design which JABEE (Japan Accreditation Board for Engineering Education) has recently been emphasizing. We not only explain the introduction of this subject, but also report some case studies.

Keywords: Engineering education, Capstone design, Laboratory attachment, Project, JABEE

I. Introduction

The education program of the Department of Informatics, Faculty of Engineering, Yamagata University (YUDI) was accredited in 2003 by the Japan Accreditation Board for Engineering Education (JABEE) [1], the second to be accredited for information engineering. Through the intermediate examination in 2005, the program was re-accredited in 2008 for the 2009 to 2014 period.

... third academic year, so that students first took the subject in 2009. The detailed plan created before this first use is described in [2].

The present paper describes the syllabus and proceeds to describe case studies of some laboratories. We explain three years of results.

Computer Programming IV has the following two purposes: