1

Lecture 2 More Motivation of Course Concepts via the Bell Curve

At this point in your lives, most, if not all of you have been exposed to the bell curve to one degree or another. In this lecture we will use this familiarity to give you a better understanding of just what this curve is. In doing so, we will also motivate key concepts to be addressed throughout this course. The bell curve is a lay term for the normal (or Gaussian) distribution. From the goddess Wiki (aka Athena)

https://en.wikipedia.org/wiki/Normal_distribution we have

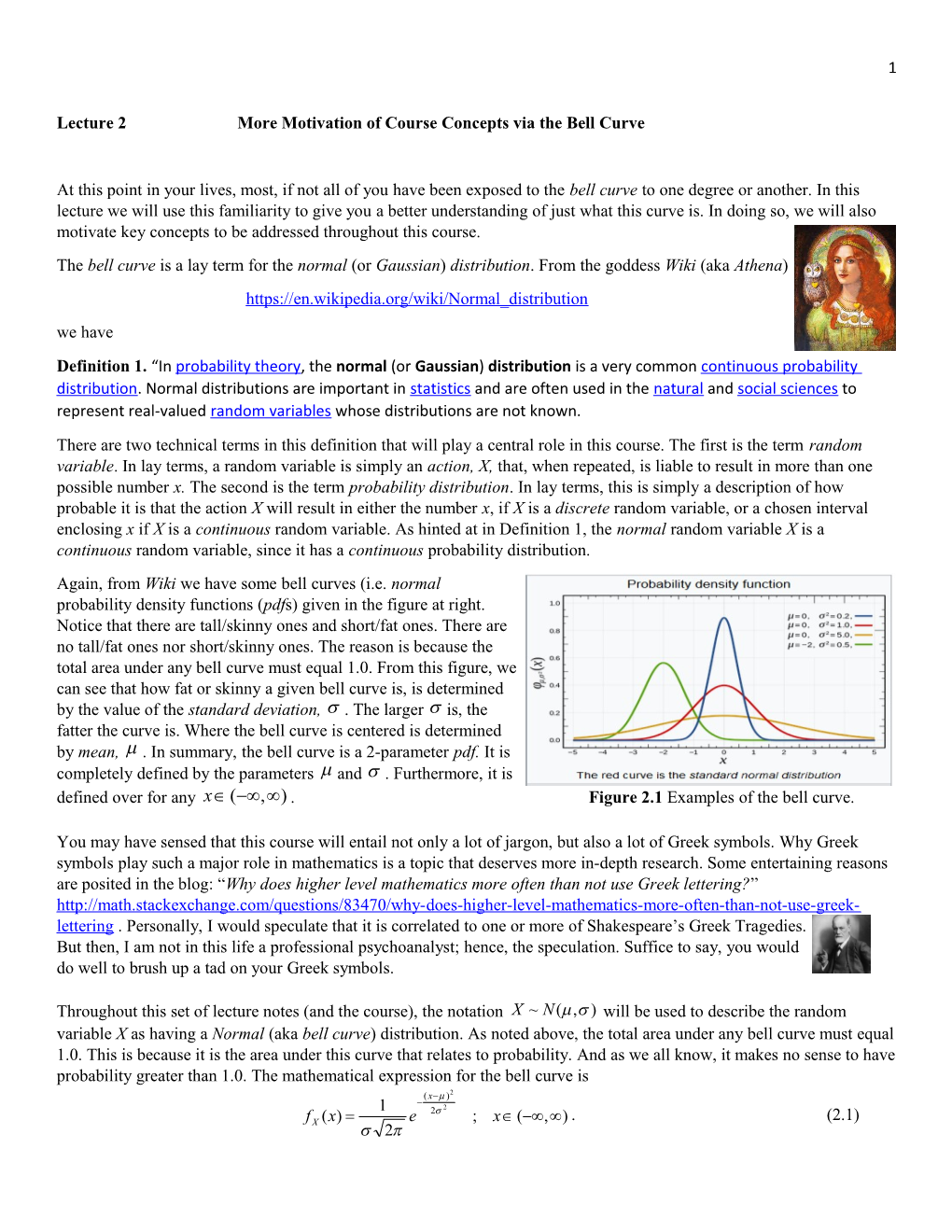

Definition 1. “In probability theory, the normal (or Gaussian) distribution is a very common continuous probability distribution. Normal distributions are important in statistics and are often used in the natural and social sciences to represent real-valued random variables whose distributions are not known. There are two technical terms in this definition that will play a central role in this course. The first is the term random variable. In lay terms, a random variable is simply an action, X, that, when repeated, is liable to result in more than one possible number x. The second is the term probability distribution. In lay terms, this is simply a description of how probable it is that the action X will result in either the number x, if X is a discrete random variable, or a chosen interval enclosing x if X is a continuous random variable. As hinted at in Definition 1, the normal random variable X is a continuous random variable, since it has a continuous probability distribution. Again, from Wiki we have some bell curves (i.e. normal probability density functions (pdfs) given in the figure at right. Notice that there are tall/skinny ones and short/fat ones. There are no tall/fat ones nor short/skinny ones. The reason is because the total area under any bell curve must equal 1.0. From this figure, we can see that how fat or skinny a given bell curve is, is determined by the value of the standard deviation, . The larger is, the fatter the curve is. Where the bell curve is centered is determined by mean, . In summary, the bell curve is a 2-parameter pdf. It is completely defined by the parameters and . Furthermore, it is defined over for any x (,) . Figure 2.1 Examples of the bell curve.

You may have sensed that this course will entail not only a lot of jargon, but also a lot of Greek symbols. Why Greek symbols play such a major role in mathematics is a topic that deserves more in-depth research. Some entertaining reasons are posited in the blog: “Why does higher level mathematics more often than not use Greek lettering?” http://math.stackexchange.com/questions/83470/why-does-higher-level-mathematics-more-often-than-not-use-greek- lettering . Personally, I would speculate that it is correlated to one or more of Shakespeare’s Greek Tragedies. But then, I am not in this life a professional psychoanalyst; hence, the speculation. Suffice to say, you would do well to brush up a tad on your Greek symbols.

Throughout this set of lecture notes (and the course), the notation X ~ N(, ) will be used to describe the random variable X as having a Normal (aka bell curve) distribution. As noted above, the total area under any bell curve must equal 1.0. This is because it is the area under this curve that relates to probability. And as we all know, it makes no sense to have probability greater than 1.0. The mathematical expression for the bell curve is (x )2 1 2 f (x) e 2 ; x (,) . (2.1) X 2 2

Now, to many high school students, as well as university students not involved in the mathematical sciences, the quantity (2.1) may well be nothing more than a bunch of Greek symbols. Indeed, there are two such symbols! But any student who has had a course in algebra should be at least semi-comfortable with (2.1) as simply some rather ugly function of x. As alluded to above, (2.1) is the probability density function (pdf) for the random variable X ~ N(, ) . As a proper density function, it has units of [probability per unit length]. Since the length of any number x is zero, the probability that X can have the exact value x is zero. While this may seem confusing, think about the analogy of pressure, which is force per unit area. At a point x with pressure p(x) , the associated force F(x) 0 , simply because the area associated with x is zero. In other words, pressure is a force density distribution.

Now, here is where things might seem to be getting a bit ‘creepy’. For example, let’s use the Matlab command ‘normrnd’ to sample a random number x from X ~ N( 20, 3) : >> normrnd(20,3) ans =19.6425. Why, one might ask, is this in any way creepy? Well, it is because we just agreed that the probability that the action (in this case, code) X normrnd(20,3) could generate the number x 19.6425 is zero. And yet, lo and behold, we generated this number! Even the probability of winning a Power Ball lottery is greater than zero. Yet, here’s the number x 19.6425. However, before you run out to buy a bunch of lottery tickets, let’s think about this number x 19.6425. Yes, it is a number. But- it is only defined to four decimal places. So, what are the values of the higher decimal places? It is incorrect to assume that the number x 19.6425 is the same as the number x 19.6425000⋯. In fact, we can never measure the latter number, because no measuring device has infinite measurement accuracy.

Indeed, the probability of measuring x 19.6425000⋯is zero! Whereas, the probability of measuring the interval

(x1, x2 ) (19.6425 0.00005,19.6425 0.00005) is not zero. Let’s use the Matlab command ‘normcdf’ to compute the probability of this interval:

>> normcdf(19.6425+.00005,20,3)-normcdf(19.6425-.00005,20,3) ans = 1.3204e-05 (2.2)

The width of the interval (x1, x2 ) (19.6425 0.00005,19.6425 0.00005) is 0.0001. The value of f X (x 19.6425) normpdf(19.6425,20,3) =0.1320. And so, the probability of this interval is approximately .1320(.0001)=1.23(10-5). This is close to (2.2).

The letters ‘cdf’ refer to the term cumulative distribution function. We will denote this as FX (x) . Unlike the pdf f X (x) , which is a density function, the cdf FX (x) accumulates all of the probability associated with the interval (, x]. Hence,

(v)2 x x 1 2 F (x) f (v)dv e 2 dv . (2.3) X X 2

To appreciate the value of the cdf function, one could have computed the probability associated with the interval

(x1, x2 ) (19.6425 0.00005,19.6425 0.00005) by directly integrating the pdf f X (x) over this interval:

(v)2 x2 x2 1 2 Pr[x X x ] f (v)dv e 2 dv . (2.4) 1 2 X 2 x1 x1

However, the integral (2.4) would need to be computed numerically, since there is no closed form expression for the indefinite integral of f X (x) . What (2.2) did was to compute the value of (2.4) via the far easier subtraction:

2 x x (v ) 2 1 1 2 Pr[x X x ] f (v)dv e 2 dv F (x ) F (x ) . (2.5) 1 2 X X 2 X 1 2 3

To summarize: The bell curve is a specific pdf that has the shape of a bell. Because it is a probability density function, it is the area under the curve that gives probability. That is why the total area under the curve must equal 1.0. The bell curve is completely specified by two parameters: and . These parameters are, respectively, the mean and the standard deviation of the random variable X. To compute Pr[x1 X x2 ] , we can avoid integration by using the cdf, FX (x) . Finally, to simulate a measurement of X (to a finite degree of accuracy), we can use the Matlab command ‘normrnd’.

A real-life example to demonstrate the value of simulations The above material was dense, so to speak. We covered a number of key concepts that this course will address. The goal was not to go into gory details. Rather, it was to give you a better understanding of that famous (and sometimes infamous) thing called the bell curve. It was also intended to provide you with some idea of the concepts that will be covered in detail in this course. We will now present a simple, yet very practical example of how the bell curve relates to many real life situations. Suppose that the random variable X=The act of measuring the force needed to remove a cap from a bottle of medicine. Furthermore, suppose that, in truth, X ~ N( 20, 3) . As a human who has been assigned the task of investigating X, we do not know the truth. If we did, there would be no need for an investigation. We assumed this truth model for two reasons. First, it allows us to simulate measurements of X without the expense associated with collecting real data. Second, the fact that we do know the truth allows us to ascertain how good (or bad) our data-based analysis is. In this example we will limit ourselves to the following task:

Task: To determine how good an estimate of the true mean 20 we can get for various choices of the sample size, n.

The importance of this task cannot be overstated. Of course, the more data one collects, the better the estimate of will be. It’s simply common sense. However, collecting data takes time and money. We would like to collect only enough data so that we have a good estimate of . The first step of our investigation includes the act of collection n measurements of breaking forces. To this end, we define n the data collection random variables {X k }k1 , where X k is, in words: The act of measuring the breaking force associated th with the k cap. We will assume that each X k has the same distribution as the generic random variable X. To estimate the mean , we will used the sample mean:

1 n X k X . (2.6) n k1

To simulate one measurement of (2.6) we will use the Matlab command sequence:

>> x=normrnd(20,3,50,1); >> muhat=mean(x) muhat = 19.8429 (2.7a) The leftmost command ‘normrnd(20,3,50,1) is a 50x1 random variable that results in a 50x1 array of numbers. This array is called ‘x’. The command ‘mean(x)’ then computes the average of the numbers in the array ‘x’. As we see, the simulated sample mean of 19.8429 is close to the true mean. But close is in the eye of the beholder. Let’s run the command sequence again:

>> x=normrnd(20,3,50,1); >> muhat=mean(x) muhat = 19.6991. (2.7b) This time, the sample mean is further away from the true value 20 than it was in the simulation (2.7a). This observation may lead you to suggest that we run a large number of simulations, to better understand the distribution of the estimator (2.6). And that is exactly what we now do. In fact, we will run nsim 106 simulations! While this may sound crazy, because of the vector-structure of the Matlab programming language, it is actually quite easy, as we now show: >> x=normrnd(20,3,50,10^6); >> muhat=mean(x); >> mean(muhat) = 19.9999 >> std(muhat) = 0.4249. 4

The leftmost command is a 50x106 random variable that results in a 50x106 array of numbers. Taking the mean of this array results in a 1x106 array of sample means associated with the estimator (2.6). From these numbers, we can use the ‘mean’ and ‘std’ commands to approximate the true mean and standard deviation of (2.6). The fact that that the simulation-based mean of the estimator (2.6) is 19.9999 should be no real surprise, since we know that the true mean is 20. What we didn’t know was the standard deviation of (2.6). What we found from simulations is that it is ~0.425. Hence, we can conclude the following:

For a sample size of 50 caps, the 3 uncertainty of the estimator (2.6) covers the breaking force range 3(0.425) lbf . This allows us to report a quantified uncertainty for the estimator (2.6) for any specified sample size, n. So, to complete our task, we can write a simple ‘for’ loop involving chosen values of n. Rather than writing this code in the Matlab workspace, we will create a ‘script’, and then run it: 3-sigma value for muhat 3 %PROGRAM NAME:lec2.m muX=20; stdX=3; nsim=10^6; n=10:10:100; %Array of sample sizes 2.5 Nn=length(n); %Number of sample sizes std_muhat=zeros(1,Nn); %Initialize array for k=1:Nn 2 x=normrnd(muX,stdX,n(k),nsim); muhat=mean(x); std_muhat(k)=std(muhat); 1.5 end std3=3*std_muhat; %3-sigma value for muhat figure(1) 1 plot(n,std3,'o-') xlabel('Sample Size (n)') 0.5 title('3-sigma value for muhat') 10 20 30 40 50 60 70 80 90 100 grid Sample Size (n) Figure 2.2 3 uncertainty for (2.6) as a function of sample size, n.

Suppose that we desire a 3 uncertainty for the estimator (2.6) of no more than 1#. From Figure 2.2, we see that the smallest possible sample size would be 80 caps. One might well note: But we had to know the truth here. In the real world we don’t know the truth.

Good point: But- IF one can argue that X has a bell curve pdf, and IF one can get some rough idea of the value for X (say, from similar studies or from a single sample set of data), then this truth model is valid. We did not need to know the true value for . Any chosen value would give exactly Figure 2.2.

A Final Remark: Soon enough we will prove the following mathematical result: X / n . In relation to the above

example, for n 50 this gives 3/ 50 0.4243. This compares well with the simulation-based value 0.4249. Using # this formula, we can also determine the minimum sample size to ensure that 3 1 . Simply: 2 2 3 3 X / n 1 n (3 X ) (33) 81. □