Fast Bit Gather, Bit Scatter and Bit Permutation Instructions for Commodity Microprocessors

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

ADSP-21065L SHARC User's Manual; Chapter 2, Computation

COMPUTATION UNITS

The processor's computation units provide the numeric processing power for performing DSP algorithms, performing operations on both fixed-point and floating-point numbers. Each computation unit executes instructions in a single cycle. The processor contains three computation units:

- An arithmetic/logic unit (ALU): performs a standard set of arithmetic and logic operations in both fixed-point and floating-point formats.
- A multiplier: performs floating-point and fixed-point multiplication as well as fixed-point dual multiply/add or multiply/subtract operations.
- A shifter: performs logical and arithmetic shifts, bit manipulation, field deposit and extraction operations on 32-bit operands and can derive exponents as well.

[Figure 2-1. Computation units block diagram. Labels: PM Data Bus, DM Data Bus, Register File, Multiplier, Shifter, ALU, 16 × 40-bit, MR2, MR1, MR0]

The computation units are architecturally arranged in parallel, as shown in Figure 2-1. The output from any computation unit can be input to any computation unit on the next cycle. The computation units store input operands and results locally in a ten-port register file. The Register File is accessible to the processor's program memory data (PMD) bus and its data memory data (DMD) bus. Both of these buses transfer data between the computation units and internal memory, external memory, or other parts of the processor.

This chapter covers these topics:

- Data formats
- Register File data storage and transfers

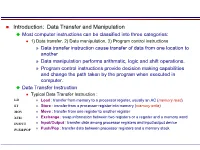

Most Computer Instructions Can Be Classified Into Three Categories

Introduction: Data Transfer and Manipulation

Most computer instructions can be classified into three categories: 1) data transfer, 2) data manipulation, 3) program control instructions.

- Data transfer instructions cause transfer of data from one location to another.
- Data manipulation instructions perform arithmetic, logic and shift operations.
- Program control instructions provide decision-making capabilities and change the path taken by the program when executed in the computer.

Data Transfer Instructions

Typical data transfer instructions:

| Mnemonic | Name | Operation |
|---|---|---|
| LD | Load | Transfer from memory to a processor register, usually an AC (memory read) |
| ST | Store | Transfer from a processor register into memory (memory write) |
| MOV | Move | Transfer from one register to another register |
| XCH | Exchange | Swap information between two registers or a register and a memory word |
| IN/OUT | Input/Output | Transfer data among processor registers and input/output device |
| PUSH/POP | Push/Pop | Transfer data between processor registers and a memory stack |

Eight addressing modes for the LOAD instruction:

| Mode | Assembly convention | Register transfer |
|---|---|---|
| Direct address | LD ADR | AC ← M[ADR] |
| Indirect address | LD @ADR | AC ← M[M[ADR]] |
| Relative address | LD $ADR | AC ← M[PC + ADR] |
| Immediate address | LD #NBR | AC ← NBR |
| Index address | LD ADR(X) | AC ← M[ADR + XR] |
| Register | LD R1 | AC ← R1 |
| Register indirect | LD (R1) | AC ← M[R1] |
| Autoincrement | LD (R1)+ | AC ← M[R1], R1 ← R1 + 1 |

Data Manipulation Instructions

1) Arithmetic, 2) Logical and bit manipulation, 3) Shift instructions

Arithmetic instructions:

| Name | Mnemonic |
|---|---|
| Increment | INC |
| Decrement | DEC |
| Add | ADD |
| Subtract | SUB |
| Multiply | … |
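The eight addressing modes for the LOAD instruction can be illustrated with a small simulation. This is only a sketch under stated assumptions: the `load` function, the mode names, the dictionary-based memory, and all register and memory values are hypothetical and chosen for illustration; they do not come from the excerpt.

```python
def load(mode, operand, state):
    """Return the value LD would place in AC under the given addressing mode.

    `state` is a hypothetical toy machine: M is memory (address -> word),
    PC is the program counter, XR the index register, R1 a processor register.
    """
    M = state["M"]
    if mode == "direct":             # AC <- M[ADR]
        return M[operand]
    if mode == "indirect":           # AC <- M[M[ADR]]
        return M[M[operand]]
    if mode == "relative":           # AC <- M[PC + ADR]
        return M[state["PC"] + operand]
    if mode == "immediate":          # AC <- NBR
        return operand
    if mode == "index":              # AC <- M[ADR + XR]
        return M[operand + state["XR"]]
    if mode == "register":           # AC <- R1
        return state["R1"]
    if mode == "register_indirect":  # AC <- M[R1]
        return M[state["R1"]]
    if mode == "autoincrement":      # AC <- M[R1], R1 <- R1 + 1
        value = M[state["R1"]]
        state["R1"] += 1
        return value
    raise ValueError(f"unknown addressing mode: {mode}")


# Illustrative machine state (all values are made up for this example).
state = {
    "M": {400: 700, 500: 800, 600: 900, 702: 325, 800: 300},
    "PC": 202,
    "XR": 100,
    "R1": 400,
}
modes = ["direct", "indirect", "relative", "immediate", "index",
         "register", "register_indirect", "autoincrement"]
# The operand 500 is ignored by the three register-based modes.
results = {mode: load(mode, 500, state) for mode in modes}
for mode, value in results.items():
    print(f"{mode:18s} AC = {value}")
```

Only the autoincrement mode has a side effect: after the run, `state["R1"]` has advanced from 400 to 401.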

RISC-V Vector Extension Webinar II

August 3rd, 2021. Thang Tran, Ph.D., Principal Engineer

Webinar II - Agenda

- Andes overview
- Vector technology background
  - SIMD/vector concept
  - Vector processor basics
- RISC-V V extension ISA
  - Basic
  - CSR
- RISC-V V extension ISA
  - Memory operations
  - Compute instructions
- Sample codes
  - Matrix multiplication
  - Loads with RVV versions 0.8 and 1.0
- AndesCore™ NX27V
- Summary

Copyright© 2020 Andes Technology Corp.

Terminology

- ACE: Andes Custom Extension
- CSR: Control and Status Register
- SEW: Element Width (8-64)
- ELEN: Largest Element Width (32 or 64)
- XLEN: Scalar register length in bits (64)
- FLEN: FP register length in bits (16-64)
- VLEN: Vector register length in bits (128-512)
- LMUL: Register grouping multiple (1/8-8)
- EMUL: Effective LMUL
- VLMAX/MVL: Vector Length Max
- AVL/VL: Application Vector Length
- ISA: Instruction Set Architecture
- GOPS: Giga Operations Per Second
- GFLOPS: Giga Floating-Point OPS
- XRF: Integer register file
- FRF: Floating-point register file
- VRF: Vector register file
- SIMD: Single Instruction Multiple Data
- MMX: Multi Media Extension
- SSE: Streaming SIMD Extension
- AVX: Advanced Vector Extension
- Configurable: parameters are fixed at build time, e.g. cache size
- Extensible: instructions added to the ISA, including custom instructions added by the customer
- Standard extension: the reserved codes in the ISA for special purposes, e.g. FP, DSP, …
- Programmable: parameters can be dynamically changed in the program

RISC-V V Extension ISA: Basic

Vector Register ISA

- Vector-register ISA definition: all vector operations are between vector registers (except for load and store).

Optimizing Packed String Matching on AVX2 Platform

M. Akif Aydoğmuş (1,2) and M. Oğuzhan Külekci (1)
1 Informatics Institute, Istanbul Technical University, Istanbul, Turkey
2 TUBITAK UME, Gebze-Kocaeli, Turkey

Abstract. Exact string matching, searching for all occurrences of a given pattern P on a text T, is a fundamental issue in computer science with many applications in natural language processing, speech processing, computational biology, information retrieval, intrusion detection systems, data compression, etc. Speeding up pattern matching operations by benefiting from SIMD parallelism has received attention in the recent literature, where the empirical results of previous studies revealed that SIMD parallelism significantly helps, while the performance may even be expected to get automatically enhanced with the ever-increasing size of the SIMD registers. In this paper, we provide variants of the previously proposed EPSM and SSEF algorithms, which were originally implemented on Intel SSE4.2 (Streaming SIMD Extensions version 4.2, with 128-bit registers). We tune the new algorithms for the Intel AVX2 platform (Advanced Vector Extensions 2, with 256-bit registers) and analyze the gain in performance with respect to the increasing length of the SIMD registers. Profiling the new algorithms with the Intel VTune Amplifier to detect performance bottlenecks led us to consider the cache friendliness and shared-memory access issues in the AVX2 platform. We applied cache optimization techniques to overcome the problems, particularly addressing the search algorithms based on filtering. Experimental comparison of the new solutions with the previously known-to-be-fast algorithms on small, medium, and large alphabet text files with diverse pattern lengths showed that the algorithms on the AVX2 platform, optimized cache obliviously, outperform the previous solutions.

Designing PCI Cards and Drivers for Power Macintosh Computers

Revised Edition. Revised 3/26/99. Technical Publications. © Apple Computer, Inc. 1999

Apple Computer, Inc. © 1995, 1996, 1999 Apple Computer, Inc. All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, or transmitted, in any form or by any means, mechanical, electronic, photocopying, recording, or otherwise, without prior written permission of Apple Computer, Inc., except to make a backup copy of any documentation provided on CD-ROM. The Apple logo is a trademark of Apple Computer, Inc. Use of the “keyboard” Apple logo (Option-Shift-K) for commercial purposes without the prior written consent of Apple may constitute …

Adobe, Acrobat, and PostScript are trademarks of Adobe Systems Incorporated or its subsidiaries and may be registered in certain jurisdictions. America Online is a service mark of Quantum Computer Services, Inc. Code Warrior is a trademark of Metrowerks. CompuServe is a registered trademark of CompuServe, Inc. Ethernet is a registered trademark of Xerox Corporation. FrameMaker is a registered trademark of Frame Technology Corporation. Helvetica and Palatino are registered trademarks of Linotype-Hell AG …

Even though Apple has reviewed this manual, APPLE MAKES NO WARRANTY OR REPRESENTATION, EITHER EXPRESS OR IMPLIED, WITH RESPECT TO THIS MANUAL, ITS QUALITY, ACCURACY, MERCHANTABILITY, OR FITNESS FOR A PARTICULAR PURPOSE. AS A RESULT, THIS MANUAL IS SOLD “AS IS,” AND YOU, THE PURCHASER, ARE ASSUMING THE ENTIRE RISK AS TO ITS QUALITY AND ACCURACY. IN NO EVENT WILL APPLE BE LIABLE FOR DIRECT, INDIRECT, SPECIAL, INCIDENTAL, OR CONSEQUENTIAL DAMAGES RESULTING FROM ANY DEFECT OR INACCURACY IN THIS MANUAL, even if advised of the possibility of such damages.

Faster Base64 Encoding and Decoding Using AVX2 Instructions

WOJCIECH MUŁA, DANIEL LEMIRE, Université du Québec (TELUQ)

Web developers use base64 formats to include images, fonts, sounds and other resources directly inside HTML, JavaScript, JSON and XML files. We estimate that billions of base64 messages are decoded every day. We are motivated to improve the efficiency of base64 encoding and decoding. Compared to state-of-the-art implementations, we multiply the speeds of both the encoding (≈ 10×) and the decoding (≈ 7×). We achieve these good results by using the single-instruction-multiple-data (SIMD) instructions available on recent Intel processors (AVX2). Our accelerated software abides by the specification and reports errors when encountering characters outside of the base64 set. It is available online as free software under a liberal license.

CCS Concepts: • Theory of computation → Vector / streaming algorithms

General Terms: Algorithms, Performance

Additional Key Words and Phrases: Binary-to-text encoding, Vectorization, Data URI, Web Performance

1. INTRODUCTION

We use base64 formats to represent arbitrary binary data as text. Base64 is part of the MIME email protocol [Linn 1993; Freed and Borenstein 1996], used to encode binary attachments. Base64 is included in the standard libraries of popular programming languages such as Java, C#, Swift, PHP, Python, Rust, JavaScript and Go. Major database systems such as Oracle and MySQL include base64 functions.

On the Web, we often combine binary resources (images, videos, sounds) with text-only documents (XML, JavaScript, HTML). Before a Web page can be displayed, it is often necessary to retrieve not only the HTML document but also all of the separate binary resources it needs.

Bit-Mask Based Compression of FPGA Bitstreams

International Journal of Soft Computing and Engineering (IJSCE), ISSN: 2231-2307, Volume-3 Issue-1, March 2013

Bit-Mask Based Compression of FPGA Bitstreams

S. Vigneshwaran, S. Sreekanth

Abstract: In this paper, bitmask based compression of FPGA bit-streams has been implemented. Reconfiguration system uses bitstream compression to reduce bitstream size and memory requirement. It also improves communication bandwidth and thereby decreases reconfiguration time. The three major contributions of this paper are: i) an efficient bitmask selection technique that can create a large set of matching patterns; ii) a bitmask based compression using the bitmask and dictionary selection technique that can significantly reduce the memory requirement; iii) an efficient combination of bitmask-based compression and Golomb coding of repetitive patterns.

Index Terms: Bitmask-based compression, Decompression engine, Golomb coding, Field Programmable Gate Array (FPGA).

I. INTRODUCTION

Field-Programmable Gate Arrays (FPGAs) are widely used in reconfigurable systems. …

The efficiency of bitstream compression is measured using Compression Ratio (CR). It is defined as the ratio between the compressed bitstream size (CS) and the original bitstream size (OS), i.e. CR = CS/OS. A smaller compression ratio implies a better compression technique. Among the various compression techniques that have been proposed, compression [5] seems to be attractive for bitstream compression, because of its good compression ratio and relatively simple decompression scheme. This approach combines the advantages of previous compression techniques with good compression ratio and those with fast decompression.

II. RELATED WORK

The existing bitstream compression techniques can be classified into two categories based on whether they need special hardware support during decompression. Some approaches require special hardware features to access the
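As a worked illustration of the compression ratio CR = CS/OS defined in the excerpt, the following minimal sketch compares two hypothetical compressors on the same bitstream. The function name and all sizes are assumptions made up for this example; they are not results from the paper.

```python
def compression_ratio(compressed_size, original_size):
    """CR = CS / OS: compressed size divided by original size (same units)."""
    if original_size <= 0:
        raise ValueError("original size must be positive")
    return compressed_size / original_size


# Hypothetical sizes in bits, chosen only for illustration.
original_bits = 1_000_000
cr_a = compression_ratio(600_000, original_bits)  # technique A
cr_b = compression_ratio(450_000, original_bits)  # technique B

# A smaller ratio means the compressed bitstream is a smaller fraction
# of the original, so the technique with the smaller CR compresses better.
better = "B" if cr_b < cr_a else "A"
print(f"CR(A) = {cr_a:.2f}, CR(B) = {cr_b:.2f}, better technique: {better}")
```

Note that under this definition lower is better, which is the opposite of the also common OS/CS convention.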

The Central Processor Unit

Systems Architecture: The Central Processing Unit

The Central Processing Unit – p. 1/11

The Computer System

- Application
- High-level Language
- Operating System
- Assembly Language
- Machine level
- Microprogram
- Digital logic

Hardware / Software Interface

CPU Structure

[Figure: CPU structure block diagram. Labels: External Memory; MAR: Memory Address Register; MBR: Memory Buffer Register; Address Incrementer; User Registers R0 to R15 (R13 / SP, R14 / LR, R15 / PC); Booth's Multiplier; Barrel Shifter; IR; Control Unit; CPSR; 32-Bit ALU]

CPU Registers

Internal Registers:

- PC: Program Counter
- IR: Instruction Register
- MAR: Memory Address Register
- MBR: Memory Buffer Register
- CPSR: Current Processor Status Register

Condition Flags:

- C: Carry
- Z: Zero
- N: Negative
- V: Overflow

Internal Devices:

- ALU: Arithmetic Logic Unit
- CU: Control Unit
- M: Memory Store
- MMU: Mem Management Unit

User Registers:

- Rn: Register n, n = 0 … 15
- SP: Stack Pointer
- LR: Link Register

Note that each CPU has a different set of User Registers.

Current Processor Status Register

- Holds a number of status flags:
  - N: True if result of last operation is Negative
  - Z: True if result of last operation was Zero or equal
  - C: True if an unsigned borrow (Carry over) occurred; value of last bit shifted
  - V: True if a signed borrow (oVerflow) occurred
- Current execution mode:
  - User: Normal “user” program execution mode
  - System: Privileged operating system tasks

Some operations can only be performed in a System mode.

Register Transfer Language

| Notation | Meaning | Example |
|---|---|---|
| NAME | Value of register or unit | |
| ← | Transfer of data | MAR ← PC |
| x: | Guard, only if x true | ⟨cc⟩: MAR ← PC |
| (field) | Specific field of unit (name), bit (n) or range (n:m) | ALU(C) ← 1, R0 ← MBR(0:7) |
| Rn | User Register n | R0 ← MBR |
| num | Decimal number | R0 ← 128 |
| 2_num | Binary number | R1 ← 2_0100 0001 |
| 0xnum | Hexadecimal number | R2 ← 0x40 |
| M(addr) | Memory Access (addr) | MBR ← M(MAR) |
| IR(field) | Specified field of IR | CU ← IR(op-code) |
| ALU(field) | Specified field of the Arithmetic and Logic Unit | ALU(C) ← 1 |
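The register transfer notation can be made concrete with a small simulation of a few of the example transfers (`MAR ← PC`, `MBR ← M(MAR)`, `R0 ← MBR(0:7)`, `R1 ← 2_0100 0001`, `R2 ← 0x40`). This is a hedged sketch, not the slides' own material: the `ToyCPU` class and `field` helper are hypothetical names, memory contents are invented, and it assumes that a range `(n:m)` counts bits from the least significant bit, which the excerpt does not state.

```python
class ToyCPU:
    """Hypothetical register-level model: 16 user registers plus PC, MAR, MBR."""

    def __init__(self, memory):
        self.M = memory        # memory store: address -> 32-bit word
        self.PC = 0            # program counter
        self.MAR = 0           # memory address register
        self.MBR = 0           # memory buffer register
        self.R = [0] * 16      # user registers R0 .. R15

    def read_at_pc(self):
        self.MAR = self.PC            # MAR <- PC
        self.MBR = self.M[self.MAR]   # MBR <- M(MAR)


def field(value, low, high):
    """Extract bits low..high inclusive (assumption: bit 0 is least significant)."""
    width = high - low + 1
    return (value >> low) & ((1 << width) - 1)


cpu = ToyCPU({0x40: 0x12345678})    # illustrative memory contents
cpu.PC = 0x40
cpu.read_at_pc()
cpu.R[0] = field(cpu.MBR, 0, 7)     # R0 <- MBR(0:7)
cpu.R[1] = 0b0100_0001              # R1 <- 2_0100 0001
cpu.R[2] = 0x40                     # R2 <- 0x40

print(hex(cpu.MAR), hex(cpu.MBR), hex(cpu.R[0]), cpu.R[1], cpu.R[2])
```

With these made-up contents, R0 receives the low byte of the fetched word, and the binary and hexadecimal literals load 65 and 64 respectively.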

Chapter 17: Filters to Detect, Filters to Protect Page 1 of 11

You learned about the concepts of discovering events of interest by configuring filters in Chapter 8, "Introduction to Filters and Signatures." That chapter showed you various products with unique filtering languages to assist you in discovering malicious types of traffic. What if you are a do-it-yourself type, inclined to watch Bob Vila or "Men in Tool Belts?" The time-honored TCPdump program comes with an extensive filter language that you can use to look at any field, combination of fields, or bits found in an IP datagram. If you like puzzles and don't mind a bit of tinkering, you can use TCPdump filters to extract different anomalous traffic. Mind you, this is not the tool for the faint of heart or for those of you who shy away from getting your brain a bit frazzled. Those who prefer a packaged solution might be better off using the commercial products and their GUIs or filters.

This chapter introduces the concept of using TCPdump and TCPdump filters to detect events of interest. TCPdump and TCPdump filters are the backbone of Shadow, and so the recommendation is to download the current version of Shadow at http://www.nswc.navy.mil/ISSEC/CID/ to examine and enhance its native filters. This takes care of automating the collection and processing of traffic, freeing you to concentrate on improving the TCPdump filters for better detects. Specifically, this chapter discusses the mechanics of creating TCPdump filters.

This Is a Free, User-Editable, Open Source Software Manual. Table of Contents About Inkscape

This is a free, user-editable, open source software manual.

Table of Contents

- About Inkscape
- About SVG
  - Objectives of the SVG Format
  - The Current State of SVG Software
- Inkscape Interface
  - The Menu
  - The Commands Bar
  - The Toolbox and Tool Controls Bar
  - The Canvas
  - Rulers

Optimizing Software Performance Using Vector Instructions Invited Talk at Speed-B Conference, October 19–21, 2016, Utrecht, the Netherlands

Agner Fog, Technical University of Denmark

Abstract

Microprocessor factories have a problem obeying Moore's law because of physical limitations. The answer is increasing parallelism in the form of multiple CPU cores and vector instructions (Single Instruction Multiple Data, SIMD). This is a challenge to software developers, who have to adapt to a moving target of new instruction set additions and increasing vector sizes. Most of the software industry is lagging several years behind the available hardware because of these problems. Other challenges are tasks that cannot easily be executed with vector instructions, such as sequential algorithms and lookup tables. The talk will discuss methods for overcoming these problems and utilizing the continuously growing power of microprocessors on the market. A few problems relevant to cryptographic software will be covered, and the outlook for the future will be discussed. Find more on these topics at the author's website: www.agner.org/optimize

Moore's law

The clock frequency has stopped growing due to physical limitations. Instead, the number of CPU cores and the size of vector registers are growing.

Hierarchy of bottlenecks (Speed →)

- Program installation
- Program load, JIT compile, DLLs
- System database
- Network access
- File input/output
- Graphical user interface
- RAM access, cache utilization
- Algorithm
- Dependency chains
- CPU pipeline and execution units

Remove …

Chapter 4 Data-Level Parallelism in Vector, SIMD, and GPU Architectures

Computer Architecture: A Quantitative Approach, Fifth Edition. Chapter 4: Data-Level Parallelism in Vector, SIMD, and GPU Architectures

Copyright © 2012, Elsevier Inc. All rights reserved.

Contents

1. SIMD architecture
2. Vector architectures optimizations: Multiple Lanes, Vector Length Registers, Vector Mask Registers, Memory Banks, Stride, Scatter-Gather
3. Programming Vector Architectures
4. SIMD extensions for media apps
5. GPUs – Graphical Processing Units
6. Fermi architecture innovations
7. Examples of loop-level parallelism
8. Fallacies

Classes of Computers: Flynn's Taxonomy

- SISD: Single instruction stream, single data stream
- SIMD: Single instruction stream, multiple data streams
  - New: SIMT, Single Instruction Multiple Threads (for GPUs)
- MISD: Multiple instruction streams, single data stream
  - No commercial implementation
- MIMD: Multiple instruction streams, multiple data streams
  - Tightly-coupled MIMD
  - Loosely-coupled MIMD

Introduction: Advantages of SIMD architectures

1. Can exploit significant data-level parallelism for:
   1. matrix-oriented scientific computing
   2. media-oriented image and sound processors
2. More energy efficient than MIMD
   1. Only needs to fetch one instruction per multiple data operations, rather than one instruction per data operation
   2. Makes SIMD attractive for personal mobile devices
3. Allows programmers to continue thinking sequentially

SIMD/MIMD comparison: potential speedup for SIMD is twice that from MIMD!

- x86 processors: expect two additional cores per chip per year
- SIMD width to double every four years

Introduction: SIMD parallelism

SIMD architectures:

- A. Vector architectures
- B. SIMD extensions for mobile systems and multimedia applications
- C. …