Multi-Mention Learning for Reading Comprehension with Neural Cascades

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Vector 285 Mcfarlane 2017-Sp

Vector The Critical Journal of the British Science Fiction Association Best of 2016 Issue No. 285 Spring 2017 £4.00 Vector The Critical Journal of the British Science Fiction Association Features, Editorial Anna McFarlane ARTICLES and Letters: [email protected] Twitter: @mariettarosetta Torque Control Editorial by Anna McFarlane....................... 3 Book Reviews: Susan Oke 18 Cromer Road, Death Mettle: Jason Arnopp interviewed Barnet EN5 5HT [email protected] by Scott K Andrews Production: Alex Bardy by Andrew Wallace ..................................... 4 [email protected] Best of Science Fiction Television 2016 British Science Fiction Association Ltd by Molly Cobb ............................................ 6 The BSFA was founded in 1958 and is a non-profitmaking organisation entirely staffed by unpaid volunteers. Best Films of 2016 Registered in England. Limited by guarantee. by various .............................................. 11 BSFA Website www.bsfa.co.uk Company No. 921500 2016 in SF Audio Registered address: 61 Ivycroft Road, Warton, by Tony Jones ............................................ 19 Tamworth, Staffordshire B79 0JJ Best Videogames of 2016 President Stephen Baxter by Conor McKeown .................................. 24 Chair Donna Scott [email protected] RECURRENT Treasurer Martin Potts 61 Ivy Croft Road, Warton, Kincaid in Short: Paul Kincaid ............... 28 Nr. Tamworth B79 0JJ [email protected] Foundation Favourites: Andy Sawyer .... 31 Resonances: Stephen Baxter .................. 34 Membership Services Dave Lally (incl. changes of address) [email protected] Your BSFA Membership No. is shown on the address label of the envelope this magazine came in, please note THE BSFA REVIEW it down NOW and use it in all BSFA communications re. Membership and Renewals. If using Paypal to renew, The BSFA Review please use the Comments/Notes box to quote your Edited by Susan Oke ................................. -

Ebook Download Terror Firma Ebook Free Download

TERROR FIRMA PDF, EPUB, EBOOK Joseph Lidster,Gary Russell,Terry Molloy,Conrad Westmaas,India Fisher,Paul McGann | none | 31 Jul 2005 | Big Finish Productions Ltd | 9781844351374 | English | Maidenhead, United Kingdom Terror Firma PDF Book Running time. Vespers give these poor souls a new chance of life but at a cost, they become vampires or Vetostryx as they call themselves. Peri , Erimem. Casey Kaufman. Alternate Versions. Director: Lloyd Kaufman. Gemma then spread Davros's virus, giving him enough Dalek creatures to create an army of Daleks. Davros shows him what Charley and C'rizz are doing and tells Gemma to give C'rizz a gun and ask him to shoot her. Toddster : Right upstairs. Welcome to Terror Firma, an urban fantasy world with elements of horror and science fiction. All other stories are represented in other navigation boxes. At the climax, the entire film crew bands together both physically and sexually against the mortal threat in their midst. October Streaming Picks. Audrey Benjamin Gary Hrbek External Sites. In Remembrance. This darkness counters light but is not powerful enough to consume it, at least not yet. Gary Russell Jason Haigh-Ellery. Now let's go make some art! Dalek Attack. They would take food, urine, feces, vomit and semen and create shocking works of art. The Companion Chronicles. Samson now has his memory back, and Harriet reveals that her party guests are in fact the British resistance and that the parties are just a cover story. Add links. The first five minutes of the film contain a leg decapitation, an abortion, death by corn flakes and a suicidal shot in the head. -

2016 Topps Doctor Who Extraterrestrial

Base Cards 1 The First Doctor 34 Judoon 67 Dragonfire 2 The Second Doctor 35 The Family of Blood 68 Silver Nemesis 3 The Third Doctor 36 The Adipose 69 Ghost Light 4 The Fourth Doctor 37 Vashta 70 The End of the World 5 The Fifth Doctor 38 Tritovores 71 Aliens of London 6 The Sixth Doctor 39 Prisoner Zero 72 Dalek 7 The Seventh Doctor 40 The Tenza 73 The Parting of the Ways 8 The Eighth Doctor 41 The Silence 74 School Reunion 9 The War Doctor 42 The Wooden King 75 The Impossible Planet 10 The Ninth Doctor 43 The Great Intelligence 76 "42" 11 The Tenth Doctor 44 Ice Warrior Skaldak 77 Utopia 12 The Eleventh Doctor 45 Strax 78 Planet of the Ood 13 The Twelfth Doctor 46 Slitheen 79 The Sontaran Stratagem 14 Susan 47 Zygons 80 The Doctor's Daughter 15 Zoe 48 Sycorax 81 Midnight 16 Sarah Jane 49 The Master 82 Journey's End 17 Ace 50 Missy 83 The Waters of Mars 18 Rose 51 The Daleks 84 Victory of the Daleks 19 Captain Jack 52 The Sensorites 85 The Pandorica Opens 20 Martha 53 The Dalek Invasion of Earth 86 Closing Time 21 Donna 54 Tomb of the Cybermen 87 A Town Called Mercy 22 Amy 55 The Invasion 88 The Power of Three 23 Rory 56 The Claws of Axos 89 The Rings of Akhaten 24 Clara 57 Frontier in Space 90 The Night of the Doctor 25 Osgood 58 The Time Warrior 91 The Time of the Doctor 26 River 59 Death to the Daleks 92 Into the Dalek 27 Davros 60 Pyramids of Mars 93 Time Heist 28 Rassilon 61 The Keeper of Traken 94 In the Forest of the Night 29 The Ood 62 The Five Doctors 95 Before the Flood 30 The Weeping Angels 63 Resurrection of the Daleks 96 -

Doctor Who: Destiny of the Daleks: (4Th Doctor TV Soundtrack) Free

FREE DOCTOR WHO: DESTINY OF THE DALEKS: (4TH DOCTOR TV SOUNDTRACK) PDF Terry Nation,Full Cast,Lalla Ward,Tom Baker | 1 pages | 26 Mar 2013 | BBC Audio, A Division Of Random House | 9781471301469 | English | London, United Kingdom Doctor Who: Destiny of the Daleks (TV soundtrack) Audiobook | Terry Nation | The lowest-priced, brand-new, unused, unopened, undamaged item in its original packaging where packaging is applicable. Packaging should be the same as what is found in a retail store, unless the item is handmade or was packaged by the manufacturer in non-retail packaging, such as an unprinted box or plastic bag. See details for additional description. Skip to main content. About this product. Stock photo. Brand new: Lowest price The lowest-priced, brand-new, unused, unopened, undamaged item in its original packaging where packaging is applicable. When the Doctor realises what the Doctor Who: Destiny of the Daleks: (4th Doctor TV Soundtrack) are Doctor Who: Destiny of the Daleks: (4th Doctor TV Soundtrack) to, he is compelled to intervene. Terry Nation was born in Llandaff, near Cardiff, in He realised that the creatures had to truly look alien, and 'In order to make it non-human what you have to do is take the legs off. Read full description. See all 8 brand new listings. Buy it now. Add to basket. All listings for this product Buy it now Buy it now. Any condition Any condition. Last one Free postage. See all 10 - All listings for this product. About this product Product Information On Skaro the home world of the Daleks the Doctor encounters the militaristic Movellans - who have come to Skaro on a secret mission - whilst his companion Romana falls into the hands of the Daleks themselves. -

The Tenth Doctor Adventures!

WWW.BIGFINISH.COM • NEW AUDIO ADVENTURES ISSUE 87 • MAY 2016 2016 MAY • 87 ISSUE DOCTOR WHO: THE TENTH DOCTOR ALLONS-Y! DAVID TENNANT AND CATHERINE TATE RETURN TO THE TARDIS FOR THREE NEW ADVENTURES! JOURNEYS IN SPACE AND TIME! PLUS! CLASSIC HORROR GALLIFREY BIG FINISH’S DRACULA ENEMY LINES BIG GUIDE! MARK GATISS BARES HIS FANGS ROMANA, LEELA AND ACE PREPARE 21 ESSENTIAL RELEASES FOR IN OUR NEW ADAPTATION! THE TIME LORDS FOR WAR! NEWCOMERS TO LISTEN TO! WELCOME TO BIG FINISH! We love stories and we make great full-cast audio dramas and audiobooks you can buy on CD and/or download Big Finish… Subscribers get more We love stories! at bigfinish.com! Our audio productions are based on much- If you subscribe, depending on the range you loved TV series like Doctor Who, Torchwood, subscribe to, you get free audiobooks, PDFs Dark Shadows, Blake’s 7, The Avengers and of scripts, extra behind-the-scenes material, a Survivors as well as classic characters such as bonus release, downloadable audio readings of Sherlock Holmes, The Phantom of the Opera new short stories and discounts. and Dorian Gray, plus original creations such as Graceless, Charlotte Pollard and The You can access a video guide to the site at Adventures of Bernice Summerfield. www.bigfinish.com/news/v/website-guide-1 WWW.BIGFINISH.COM @ BIGFINISH THEBIGFINISH Sneak Previews & Whispers Editorial ELL, this is all rather exciting, isn’t it? David Tennant is back as the Doctor! W For those of us who are long-standing Big Finish fans, we knew of David’s work long before he was given the key to the TARDIS, and when we heard he had been cast, we were already hoping that one day he’d be back in the Big Finish studio to play the Doctor. -

CM 12 STD Format.Pdf

1 Cydney, her eyes wide. She doesn’t FANS VICTORIOUS need to tell me what it looks like. Editorial by Nick Smith ‘She made me a great one,’ says Bran- don cheerfully. ‘If it was colder, I’d be wearing it.’ Since we’ve been working in 40-degree heat, he’s smart to leave it at home. We smile and move on to anoth- er subject. So, I’m thousands of miles from my birth town of Bristol with people I’ve only known for a few weeks and yet we have this connection, a TV show that seems as well-travelled as the TARDIS. From South Croydon, England to Cor- sicana, Texas, Doctor Who is in the zeit- I’m currently working as a Production geist, with fans everywhere Manager on a movie in East Texas. I say this, not to brag – I’m a glorified When DWAS Publications Officer Rik office boy with a walkie talkie – but Moran graciously asked me to become because Doctor Who helped bring me the new editor of Cosmic Masque, I here, instilling a passion in me for visu- looked forward to connecting with al storytelling, film and telly-making. some fellow Whovians and sharing my passion for the show. I did not know After a long day of filming, I’m eating that everyone I talked to for Cosmic pizza in Napoli’s, a downtown joint Masque would all have a deep connec- with a bar next door that’s also called tion to the show, whether it was excep- Napoli’s. -

Fish Fingers and Custard Issue 2 Where Do You Go When All the Love Has Gone?

Fish Fingers and Custard Issue 2 Where Do You Go When All The Love Has Gone? Those are lyrics that I have just made up. I’m sure you’ll agree that I’m up there with all the modern musical geniuses, such as Prince, Stevie Wonder, Eric Clapton and Justin Bieber. But where am I going with this? I have an idea and it WILL make sense. Or so I hope… The beauty with Doctor Who is that even when the 13 weeks worth of episodes have gone, we don’t need to cry and mourn for its disappearance (or in my case – ran off without a word and never contacted me again). There are all sorts of commodities out there that we can lay our hands on and enjoy. (I’m starting to regret making this analogy now, as the drug-crazed aliens from Torchwood: Children of Earth would have been a better comparison!) The sheer size of Doctor Who fandom is incredibly huge and you’ll always be able to pick up something that’ll make you feel the way you do when you’re stuck in the middle of an episode. (This fanzine is akin to Love and Monsters than Empty Child/The Doctor Dances, to be honest!) Whether it be a magazine, book, DVD, audiobook, toy (they ARE toys btw), convention, podcast or even a fanzine, it’s all out there for us to explore and enjoy when a series ends, so we’ve no need to get upset and pine until Christmas! And wasn’t Series 5 (or whatever you want to call it) just superb? I can be quite smug here and say that I was never worried about Matt Smith nailing the role of The Doctor. -

Table of Contents

TABLE OF CONTENTS Acknowledgments ...........................................................................................................................5 Table of Contents ..............................................................................................................................7 Foreword by Gary Russell ............................................................................................................9 Preface ..................................................................................................................................................11 Introduction .......................................................................................................................................13 Chapter 1 The New World ............................................................................................................16 Chapter 2 Daleks Are (Almost) Everywhere ........................................................................25 Chapter 3 The Television Landscape is Changing ..............................................................38 Chapter 4 The Doctor Abroad .....................................................................................................45 Sidebar: The Importance of Earnest Videotape Trading .......................................49 Chapter 5 Nothing But Star Wars ..............................................................................................53 Chapter 6 King of the Airwaves .................................................................................................62 -

Curriculum Vitae

CURRICULUM VITAE ACCENTS & DIALECTS: TYNESIDE (NATIVE), BIRMINGHAM (AUTHENTIC), COCKNEY (AUTHENTIC), BLACK COUNTRY, MANCHESTER, YORKSHIRE, EAST ANGLIAN, WELSH, EDINBURGH, DUBLIN, AUSTRALIAN, USA. HIGHLY PROFICIENT IN MOST ACCENTS AND DIALECTS. SKILLS: MUSICAL INSTRUMENTS: ALTO SAXAPHONE, CLARINET, CORNET, TENOR HORN, PENNY WHISTLE, BANJO-UKELELE, HARMONICA. SPORTS: BADMINTON, ARCHERY, GOLF, SUB-AQUA DIVING. OTHER SKILLS: COMPUTER LITERATE, FULL DRIVING LICENCE. FILM CREDITS: CHASING THE DEER MR GARVIE CROMWELL FILMS GRAHAM HOLLOWAY T.V. CREDITS: BYKER GROVE DAVE ROSS ZENITH NORTH MICHAEL HINES DOCTORS DAVE MALLIN BBC DOMINIC KEAVEY HOLLYOAKS GYNAECOLOGIST MERSEY TELEVISION MICHAEL ROLFE ALL ABOUT ME SITE MANAGER CELADOR NICK WOOD GYPSY GIRL DC CHADWICK FILM AND GENERAL TV MARCUS D F WHITE URBAN GOTHIC DR GREER CHANNEL 5 MARCUS D.F. WHITE DAD CHRISTMAS SPECIAL TURKEY FARMER BBC ANGIE DE CHASTELAINE SMITH DANGERFIELD HARRY BBC LAWRENCE GORDON CLARK THE BILL MAL ERICSON THAMES JAN SARGENT EASTENDERS CHALKIE BBC PETER EDWARDS RESURRECTION OF THE DALEKS DAVROS BBC MATTHEW ROBINSON REVELATION OF THE DALEKS DAVROS BBC GRAEME HARPER REMEMBERANCE OF THE DALEKS DAVROS BBC ANDREW MORGAN ATTACK OF THE CYBERMEN RUSSELL BBC MATTHEW ROBINSON BEADLE’S ABOUT (SERIES 3-7) ‘HIT SQUAD’ LWT CHRIS FOX SPECIALS (9 EPS) BARACLOUGH BBC CHRIS BAKER CHALKFACE (4 EPS) GEORGE MELLOR BBC TIM FYWELL TALES OF SHERWOOD (4 EPS) BOB LOMAX CENTRAL PEDR JAMES THE REAL EDDY ENGLISH (4 EPS) HARRY ENGLISH CHANNEL 4 DAVID ATTWOOD RADIO PHOENIX (26 EPS) MIKE BARKER TVS MATTHEW ROBINSON JUPITER MOON (30 EPS) CATCHPOLE ANDROMEDATV CLIVE FLUREY WHIZZIWIG MR. HIGGS CARLTON TV NEVILLE GREEN BAD COMPANY PHILLIP COX QC BBC DAVID DRURY HEAD OVER HEELS PALAIS MC CARNIVAL FILMS CHRISTOPHER KING BERGERAC JAY CARLTON BBC GRAEME HARPER THE INDEX HAS GONE FISHING BOB CENTRAL GARETH DAVIES ALL TOGETHER NOW DON BBC DAVID ATTWOOD CARROT DEL SOL KEVIN LWT TERRY RYAN OLIVER TWIST BRITTLES BBC GARETH DAVIES CONNIE ROY CENTRAL MICHAEL ROLFE THE EXERCISE LT. -

Published Bi-Monthly Volume 29 • No. 4 August/September 2018

PUBLISHED BI-MONTHLY VOLUME 29 • NO. 4 AUGUST/SEPTEMBER 2018 NON-SPORT UPDATE Aug.-Sept. 2018 • u.S. $5.99 • CAN. $6.49 Issue Code: 2018-08 • Display until 10/16/18 PRINTED IN THE U.S.A. 08 0 71486 02957 1 VOLUME 29 • NO. 4 AUGUST/SEPTEMBER 2018 FEATURES DEPARTMENTS OUR STAFF 4 CARDBOARD CONVERSATION: 6 PROMO PICKS EDITORIAL DIRECTOR - Mike Payne ROLLING IN DʼOH! “Whatever, Iʼll be at Moeʼs.” 8 NON-SPORT NEIGHBORHOOD: EDITOR-IN-CHIEF - Alan Biegel MEET MICHAEL BEAM 10 TRUE LOVE IS NEVER LOST Time to Beam up with this PRODUCTION MANAGER - Harris Toser What if your future was the past? experienced card collector. Find out with Outlander Season 3. ART DIRECTOR - Lindsey Jones 26 NEW & NOTEWORTHY GRAPHIC DESIGN - Eric Knagg, Chris Duncan 16 VINTAGE SPOTLIGHT: LOVE ME DO Classic Mythology III: Goddesses, COLLECTIBLES DATA PUBLISHING Get a ticket to ride with this Beatles beauty. Topps Star Wars Archives Signatures, Manager, Senior Market Analyst - Brian Fleischer 22 STAR TREK: THE ORIGINAL DC Bombshells Trading Cards II. SERIES CAPTAINʼS COLLECTION 28 DATELINE PRICE GUIDE STAFF: Jeff Camay, Arsenio Tan, Captain on the bridge for this new Lloyd Almonguera, Kristian Redulla, Justin Grunert, Matt 30 NON-SPORT NEWS compilation from Rittenhouse. Bible, Eric Norton, Irish Desiree Serida, Paul Wirth, Ian 32 DANGER, WILL ROBINSON! 38 THE HOT LIST McDaries, Sam Zimmer, Steve Dalton Jump aboard the Jupiter 2 for a 41 HOW TO USE THE PRICE GUIDE WRITERS: Arnold Bailey, Matt Bible, Alan Biegel, wild non-sport ride. 42 PRICE GUIDE Ryan Cracknell, Don Norton, Charlie Novinskie, Rudy Panucci, Harris Toser, Chick Veditz Non-Sport Update (ISSN: 10598383) is Beckett Collectibles Inc. -

TTZ10 Master Document Pp9.1

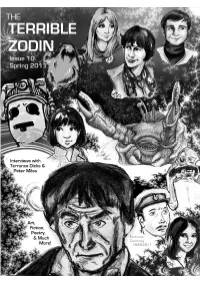

Table of Contents EDITOR ’S NOTE Editor’s NoteNote.............................................................2 A Christmas Carol Review Jamie Beckwith ............3 Though I only knew Patrick Troughton from SFX Weekender Will Forbes & A. Vandenbussche.4 “TheThe Five Doctors ” by the time I rediscovered Out of Time Nick Mellish .....................................7 Doctor Who when I was in my teens, I knew A Scribbled Note Jamie Beckwith ...........................9 I loved the Second Doctor. He always seemed Donna Noble vs Amy Pond Aya Vandenbussche .10 like the only Doctor one could reasonably go to for a hug. My admiration continued to The Real Pancho Villa Leslie McMurtry ...............13 grow; my first story from the Second Doctor’s Tea with Nyder: TTZ Interviews Peter Miles ........14 era—“SeedsSeeds of Death ”—may have left a rather The War Games’ Civil War Leslie McMurtry & Evan lukewarm impression, but the Missing Adven- L. Keraminas ...........................................................21 tures novel The Dark Path made up for it. Season 6(b) Matthew Kresal ....................................25 In this issue, we have gone monochrome, in No! Not The Mind Probe - The Web Planet Tony honor of Troughton, his last story “ The War Games ,” and of the significant part the Cross & Steve Sautter ............................................28 Second Doctor’s era had to play in influencing The Blue Peter Guide to The Web Planet Robert all subsequent incarnations of Doctor Who— a Beckwith ..................................................................30 -

Terry Molloy Photo: MUG Photography

59 St. Martin's Lane London WC2N 4JS Phone: 0207 836 7849 Email: [email protected] Website: www.nikiwinterson.com Terry Molloy Photo: MUG Photography "Mike Tucker" in BBC R4 'The Archers' Location: Norwich, Norfolk, United Kingdom Hair Colour: Silver Height: 5'6" (167cm) Hair Length: Short Weight: 14st. 5lb. (91kg) Facial Hair: Beard Playing Age: 61 - 70 years Voice Character: Engaging Appearance: White Voice Quality: Smokey Eye Colour: Brown Audio Audio, Edmund Fennick, The Omega Factor - "The Hollow Earth", Big Finish Productions, Ken Bently Audio, Narrator, "Remembrance of The Daleks" - Audio Book, BBC Audio, Neil Gardner Audio, Captain Quinn, "The Phantom Wreck" - Bernice Summerfield, Big Finish Productions, Scott Handcock Audio, Father Sumner, "The Confessions of Dorian Gray" - Series 3, Big Finish Productions, Scott Handcock Audio, Professor Edward Dunning, Scarifyers 9 - "The King of Winter", Bafflegabble, Simon Barnard Audio, Christensen, "Frankenstein", Big Finish, Scott Handcock Audio, Jaques Beronne, "The Avengers", Big Finish, Ken Bentley Audio, John Redgrave, "Survivors", Big Finish, Ken Bentley Audio, Davros, Doctor Who - "Daleks Among Us!", Big Finish, Ken Bentley Audio, Stoyn, The Companion Chronicles - "The Dying Light", Big Finish, Lisa Bowerman Audio, Stoyn, The Companion Chronicles - "Luna Romana", Big Finish, Lisa Bowerman Audio, Stoyn, The Companion Chronicles - "The Beginning", Big Finish, Lisa Bowerman Audio, Professor Edward Dunning, Scarifyers 8 - "The Thirteen Hallows", Cosmic Hobo Productions, Simon Barnard