Human-Machine-Ecology

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

-

2014-15 Men's XC Records Book

Men’s XC Records Book 20 20 14 15 Table of Contents All-time Heptagonal/Ivy Champions ...................................... 1 Year-by-Year Results ........................................................ 2-11 Heptagonal Championship Records ............................... 12-14 NCAA Championship .......................................................... 15 NCAA Individual Top-25 Finishes ........................................ 16 All-Ivy Teams/Notes ........................................................ 17-19 All-Americans ..................................................................... 20 Academic All-Americans ..................................................... 20 The Ivy League Men’s Cross Country Record Book was last updated July 23, 2014. Please forward edits and/or additions to [email protected]. 14 1 15 Ivy League Records Book MEN’S CROSS COUNTRY All-Time Heptagonal Team Champions YEAR CHAMPION HEPTAGONAL CHAMPIONS 1975 Princeton 1976 Princeton Total First Last Total 1977 Princeton Champs Champ Champ Ivy Champs* 1978 Princeton Army 11 1945 1965 — 1979 Columbia Brown 1 2003 2003 1 1980 Princeton Columbia 4 1979 2013 4 1981 Princeton Cornell 9 1939 1993 5 1982 Princeton Dartmouth 16 1941 2005 14 1983 Princeton Harvard 6 1956 1972 10 1984 Dartmouth Navy 8 1946 1996 — 1985 Dartmouth Penn 2 1971 1973 3 1986 Dartmouth Princeton 17 1975 2012 18 1987 Dartmouth Yale 1 1942 1942 3 1988 Dartmouth 1989 Dartmouth *Beginning in 1956 if a Heptagonal champion was not an Ivy school, the next 1990 Dartmouth highest Ivy finisher was considered to be the Ivy champion. 1991 Dartmouth 1992 Navy (Cornell) The first Heptagonal Cross Country Championship was held in 1939 with six of the Ivy 1993 Cornell League institutions competing. By 1941, seven of the eight Ivies (minus Brown) were 1994 Dartmouth competing, along with Army and Navy. Brown joined the fold in 1949 and the 10 1995 Dartmouth teams competed annually until 1993 when Army departed to join the Patriot League. 
-

Teen Sensation Athing Mu

• ALL THE BEST IN RUNNING, JUMPING & THROWING • www.trackandfieldnews.com MAY 2021 The U.S. Outdoor Season Explodes Athing Mu Sets Collegiate 800 Record American Records For DeAnna Price & Keturah Orji T&FN Interview: Shalane Flanagan Special Focus: U.S. Women’s 5000 Scene Hayward Field Finally Makes Its Debut NCAA Formchart Faves: Teen LSU Men, USC Women Sensation Athing Mu Track & Field News The Bible Of The Sport Since 1948 AA WorldWorld Founded by Bert & Cordner Nelson E. GARRY HILL — Editor JANET VITU — Publisher EDITORIAL STAFF Sieg Lindstrom ................. Managing Editor Jeff Hollobaugh ................. Associate Editor BUSINESS STAFF Ed Fox ............................ Publisher Emeritus Wallace Dere ........................Office Manager Teresa Tam ..................................Art Director WORLD RANKINGS COMPILERS Jonathan Berenbom, Richard Hymans, Dave Johnson, Nejat Kök SENIOR EDITORS Bob Bowman (Walking), Roy Conrad (Special AwaitsAwaits You.You. Projects), Bob Hersh (Eastern), Mike Kennedy (HS Girls), Glen McMicken (Lists), Walt Murphy T&FN has operated popular sports tours since 1952 and has (Relays), Jim Rorick (Stats), Jack Shepard (HS Boys) taken more than 22,000 fans to 60 countries on five continents. U.S. CORRESPONDENTS Join us for one (or more) of these great upcoming trips. John Auka, Bob Bettwy, Bret Bloomquist, Tom Casacky, Gene Cherry, Keith Conning, Cheryl Davis, Elliott Denman, Peter Diamond, Charles Fleishman, John Gillespie, Rich Gonzalez, Ed Gordon, Ben Hall, Sean Hartnett, Mike Hubbard, ■ 2022 The U.S. Nationals/World Champion- ■ World Track2023 & Field Championships, Dave Hunter, Tom Jennings, Roger Jennings, Tom ship Trials. Dates and site to be determined, Budapest, Hungary. The 19th edition of the Jordan, Kim Koffman, Don Kopriva, Dan Lilot, but probably Eugene in late June. -

Women's Outdoor Track and Field 2013 Season in Review

Editor: Kristy McNeil AUGUST 2013 Michael Franklin ’13 finishes the 10k at the NCAA Championships in fifth Julia Ratcliffe’16 earned second-team All-America honors in her first NCAA place, the best finish in the event for a Tiger in NCAA history. He was ranked Outdoor Championship in the hammer throw. She had the second-longest 18th entering the meet. throw in the nation this season. Chairman’s Statement What has become clear is that the Princeton track and cross country program is now taking on a national presence as never before. Princeton has always had performers on the national stage: Bill Bonthron, David Pellegrini, Augie Wolf, Tora Harris, Donn Cabral, Al Dyer, Craig Masback, Lynn Jennings, Ashley Higginson, Nicole Harrison and Debbie St. Phard. What is happening now is that our presence on the national stage is becoming more frequent. This has profound implications for Princeton, the program and certainly our student athletes. After lengthy conversations with the coaches it’s clear that the financial support from Friends has had a dramatic impact on recruiting and performance. As such, know that your contributions are having the impact that you want and deserve. Our coaching staff from top to bottom may be the best in the country. We know Princeton is. With that package and proper funding our dominance and national presence can be assured to continue. On behalf of the coaches, our student athletes and the board, may I respectfully thank you for your contributions last year and ask you for your continued support this year. Enjoy Tiger Tracks. -

Shanghai 2018

Men's 100m Diamond Discipline 12.05.2018 Start list 100m Time: 20:53 Records Lane Athlete Nat NR PB SB 1 Isiah YOUNG USA 9.69 9.97 10.02 WR 9.58 Usain BOLT JAM Berlin 16.08.09 2 Andre DE GRASSE CAN 9.84 9.91 10.15 AR 9.91 Femi OGUNODE QAT Wuhan 04.06.15 AR 9.91 Femi OGUNODE QAT Gainesville 22.04.16 3 Justin GATLIN USA 9.69 9.74 10.05 NR 9.99 Bingtian SU CHN Eugene 30.05.15 4 Bingtian SU CHN 9.99 9.99 10.28 NR 9.99 Bingtian SU CHN Beijing 23.08.15 5 Chijindu UJAH GBR 9.87 9.96 10.08 WJR 9.97 Trayvon BROMELL USA Eugene 13.06.14 6 Ramil GULIYEV TUR 9.92 9.97 MR 9.69 Tyson GAY USA 20.09.09 7 Yoshihide KIRYU JPN 9.98 9.98 DLR 9.69 Yohan BLAKE JAM Lausanne 23.08.12 8 Zhenye XIE CHN 9.99 10.04 SB 9.97 Ronnie BAKER USA Torrance 21.04.18 9 Reece PRESCOD GBR 9.87 10.03 10.39 2018 World Outdoor list 9.97 +0.5 Ronnie BAKER USA Torrance 21.04.18 Medal Winners Shanghai previous 10.01 +0.8 Zharnel HUGHES GBR Kingston 24.02.18 10.02 +1.9 Isiah YOUNG USA Des Moines, IA 28.04.18 2017 - London IAAF World Ch. in Winners 10.03 +0.8 Akani SIMBINE RSA Gold Coast 09.04.18 Athletics 17 Bingtian SU (CHN) 10.09 10.03 +1.9 Michael RODGERS USA Des Moines, IA 28.04.18 1. -

Mrs. Cahill's Escape

Founded in 1800.] An Entertaining and Instructive Home Journal^ Especially Devoted to Local Mews and Interests. : * : [$1.00 a Tear. VOL. XCV.—NO. I6. A; NORWALK, CONN., FRIDAY, APRIL 19,1895. PRICE TWO CENTS. j no attack was attempted. Gen. Meade eral, do you know that when you let foot are approaching paying figures for claimed that the delay was for a more Gen. Lee's army get away from yon rAZETTE. growers and feeders. But they do not critical examination of the enemy's there at Williamsport, you made me MRS. CAHILL'S ESCAPE. ' NORW position; he then'issued orders for a think of an old women I used to see warrant any such sensational rise in combined attack of the whole army out in Illinois, trying to shoo her ducks She Batt'es With an Insane Wo the price of dressed beef to butchers OF A next dav, Tuesday, the 14th. Tuesday across the river ? " Then laughing so came, but Meade's bird had flown! heartily at the satirical fun in his little man Who Wanted to Kill Her. THE FAVORITE HOME PAPER. by the great slaughtering establish Monday, Lee was still hemmed in on story, he soon had Gen. Meade shaking ments, or an advance such as has been WAR the eastern three-quarter circle of the his sides in a merriment that took itife Potomac Banks. During Monday away any sting lurking in the simile. Her Cheek Bitten and Her Face reported on the part of the retail deal night, by means of an improvised Ever after, when reference was made • : i.rf Scratched. -

Andy Bayer of Indiana, Jordan Hasay of Oregon Top Capital One Academic All-America® Division I Track & Field/Cross Country Teams

CAPITAL ONE ACADEMIC ALL-AMERICA® TEAM S ELECTED BY C O SIDA FOR RELEASE: Thursday, June 27 – 12 noon (EDT) Please visit the Capital One Academic All-America® website http://www.capitaloneacademicallamerica.com/ ANDY BAYER OF INDIANA, JORDAN HASAY OF OREGON TOP CAPITAL ONE ACADEMIC ALL-AMERICA® DIVISION I TRACK & FIELD/CROSS COUNTRY TEAMS TOWSON, Md. – Senior distance runners Andy Bayer of Indiana University and Jordan Hasay of the University of Oregon lead the 2013 Capital One Academic All-America® Division I men’s and women’s track and field/cross country teams, as selected by the College Sports Information Directors of America (CoSIDA). Bayer and Hasay have been chosen as the winners of the Capital One Academic All-America® of the Year award for the Division I Men’s and Women’s track and field and cross country programs, respectively. Bayer is a biology major who graduated this spring from Indiana University with a 3.60 G.P.A. A native of Leo, Ind., Bayer is the only Hoosiers’ male track and field student-athlete to earn Capital One Division I Academic All-America® honors. He was a second team Capital One Academic All-America® selection in 2011 and 2012. The 2012 NCAA outdoor 1,500 meter champion, Bayer finished his career as an 11-time All-American including an eighth place finish in the 1,500 at the 2013 national meet. He was a two-time NCAA runner- up, and also earned indoor All-America honors in the distance medley relay and 3,000 meters. Bayer was a six-time Big Ten champion and became the third runner in Big Ten history to win both the 1,500 and 5,000 meter titles at the 2013 Big Ten Outdoor Championships. -

Men's Mile International 20.08.2021

Men's Mile International 20.08.2021 Start list Mile Time: 21:12 Records Lane Athlete Nat NR PB SB 1 Peter CALLAHAN BEL 3:51.84 3:56.14 WR 3:43.13 Hicham EL GUERROUJ MAR Stadio Olimpico, Roma 07.07.99 2 Tripp HURT USA 3:46.91 3:56.02 3:57.95 AR 3:46.91 Alan WEBB USA Brasschaat 21.07.07 3 George BEAMISH NZL 3:49.08 3:54.92 3:54.92 NR 3:46.91 Alan WEBB USA Brasschaat 21.07.07 WJR 3:49.29 Ilham Tanui ÖZBILEN KEN Bislett Stadion, Oslo 03.07.09 4 Archie DAVIS GBR 3:46.32 3:54.27 3:54.27 MR 3:47.32 Ayanleh SOULEIMAN DJI 31.05.14 5 Vincent CIATTEI USA 3:46.91 3:55.28 3:55.28 DLR 3:47.32 Ayanleh SOULEIMAN DJI Hayward Field, Eugene, OR 31.05.14 6 Amos BARTELSMEYER GER 3:49.22 3:53.33 6:17.62 SB 3:48.37 Stewart MCSWEYN AUS Bislett Stadion, Oslo 01.07.21 7 Charles PHILIBERT-THIBOUTOT CAN 3:50.26 3:52.97 3:52.97 8 Samuel PRAKEL USA 3:46.91 3:54.64 3:55.33 9 Craig ENGELS USA 3:46.91 3:51.60 3:53.97 2021 World Outdoor list 10 Henry WYNNE USA 3:46.91 3:56.86 3:48.37 Stewart MCSWEYN AUS Bislett Stadion, Oslo (NOR) 01.07.21 11 Craig NOWAK (PM) USA 3:46.91 3:57.97 3:57.97 3:49.11 Marcin LEWANDOWSKI POL Bislett Stadion, Oslo (NOR) 01.07.21 3:49.26 Matthew CENTROWITZ USA Portland, OR (USA) 24.07.21 Pace: 400m - 56-57 | 800m - 1:53-54 | 1000m - 2:21 3:49.27 Jye EDWARDS AUS Bislett Stadion, Oslo (NOR) 01.07.21 3:49.40 Charles Cheboi SIMOTWO KEN Bislett Stadion, Oslo (NOR) 01.07.21 3:52.49 Elliot GILES GBR Gateshead (GBR) 13.07.21 3:52.50 Jake HEYWARD GBR Gateshead (GBR) 13.07.21 3:52.70 Ismael DEBJANI BEL Bislett Stadion, Oslo (NOR) 01.07.21 Medal Winners in 1500m Previous Meeting 3:52.97 Charles PHILIBERT-THIBOUTOT CAN Falmouth, MA (USA) 14.08.21 3:53.04 Ronald MUSAGALA UGA Bislett Stadion, Oslo (NOR) 01.07.21 2021 - The XXXII Olympic Games Winners 3:53.15 Adam Ali MUSAB QAT Bislett Stadion, Oslo (NOR) 01.07.21 1. -

Choice Outstanding Book for State and Nation in LA and Spain

MIGUEL ANGEL CENTENO Department of Sociology Princeton University Princeton, New Jersey 08544 (609) 258-4452/4180 (office) 2180 (fax) http://www.princeton.edu/~cenmiga EDUCATION 1990 Ph.D., Sociology, Yale University 1987 MBA, School of Organization and Management, Yale University 1980 BA, History, Yale College HONORS, GRANTS AND FELLOWSHIPS 2017 Conferencias Magistrales, PUCP, Lima; URU, Montevideo 2016 Choice Outstanding Book for War and Society 2014-16 Faculty Council, WWS 2013 Choice Outstanding Book for State and Nation in LA and Spain 2013 Watson Institute, Brown U., Board of Overseers 2013 Musgrave Professorship, Princeton University 2012 MLK Journey Award 2012-2015 Fung Global Fellows, Princeton University 2011-2014 Princeton Society of Fellows 2008 Conferencia Magistral, Universidad Católica de Chile 2005 McFarlin Lecture, University of Tulsa 2005 Elected member Sociological Research Association 2004-2006 ASA, Comparative Historical Section Council 2004 Bonner Foundation Award 2004 Jefferson Award for Community Service, Trenton Times 2003 Mattei Dogan Prize. Honorable Mention for Blood and Debt. 2001 Advising Award, Department of Sociology, Princeton University 1997 Presidential Teaching Award, Princeton 1997-98 Harry Frank Guggenheim Foundation, Research Grant 1995 Leadership Award, Princeton 1995 Latin American Studies Association, Nominated for Executive Council 1995 National Endowment for the Humanities Fellowship 1994-97 Class of 1936 Bicentennial Preceptorship, Princeton 1994 Choice Outstanding Academic Book for Democracy within Reason. 1993 Center for Advanced Study in the Behavioral Sciences, Invitation 1990-2017 Faculty Research Grants, Princeton University 1992 Fulbright Lecturing Fellowship, RGGU, Moscow 1990 Ph.D. Thesis awarded Distinction, Yale University 1989-90 UCSD Center for U.S.-Mexican Studies, Visiting Fellowship 1989-90 Woodrow Wilson Foundation, Spencer Fellowship Centeno CV, p.2 1989 John F. -

Survey Responses

Workshop on Historical Systemic Collapse Friday & Saturday, April 26-27, 2019 Princeton University Survey Responses Workshop Participants Marty Anderies Arizona State University – Professor, School of Evolution and Social Change & School of Sustainability Haydn Belfield University of Cambridge – Academic Project Manager, Centre for the Study of Existential Risk (CSER) Seth Baum Global Catastrophic Risk Institute – Executive Director Richard Bookstaber University of California – Chief Risk Officer, Office of the CIO Ingrid Burrington USC – Fellow, Annenberg Innovation Lab Freelance Writer and Artist Peter Callahan Princeton University –PIIRS Global Systemic Risk research community Miguel Centeno Princeton University – Professor, Sociology & WWS; Director, PIIRS Global Systemic Risk research community Eric H. Cline George Washington University – Director, Capitol Archaeological Institute Samuel Cohn, Jr. University of Glasgow – Professor, Medieval History Adam Elga Princeton University – Professor, Philosophy Sheldon Garon Princeton University – Professor, History and East Asian Studies Jack Goldstone George Mason University – Professor, Public Policy Christina Grozinger Penn State University – Professor, Entomology Director, Center for Pollination Research John Haldon Princeton University – Professor, History Jeff Hass University of Richmond – Professor, Sociology and Anthropology Sherwat Elwan American University in Cairo – Professor, Management Ibrahim Robert Jensen University of Texas – Emeritus Professor, School of Journalism Luke -

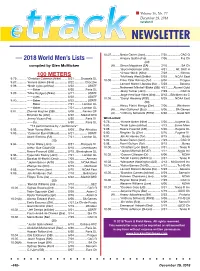

— 2018 World Men's Lists —

Volume 16, No. 77 December 29, 2018 version ii 10.07 .......... Nesta Carter (Jam) .................. 7/30 ................CAC G — 2018 World Men’s Lists — .......... Amaury Golitin (Fra) ................. 7/06 ................ Fra Ch (40) compiled by Glen McMicken (A) ..... Simon Magakwe (SA) .............. 3/16 .................SA Ch .......... *Bryce Robinson (US) .............. 4/21 .......... Mt. SAC R .......... *Ameer Webb (Nike) ................ 7/28 ................Ninove 100 METERS .......... *McKinely West (SnMs) ........... 5/25 ......... NCAA East 9.79 ............ *Christian Coleman (Nike) ....... 8/31 ........Brussels DL 10.08 .......... Emre Zafer Barnes (Tur)........... 6/04 ................Prague 9.87 ............ *Ronnie Baker (Nike) ............... 8/22 .............Chorzów .......... Lamont Marcell Jacobs (Ita) .... 5/23 ............... Savona 9.88 ............ *Noah Lyles (adidas) ................ 6/22 ................ USATF .......... Nethaneel Mitchell-Blake (GB) 4/21 ........Alumni Gold ................... ——Baker ................................ 6/30 ..............Paris DL .......... Javoy Tucker (Jam) ................. 7/29 ................CAC G 9.89 ............ *Mike Rodgers (Nike) ............... 6/21 ................ USATF .......... Jorge Henrique Vides (Bra) ...... 8/18 ....São Bern do C ................... ——Lyles ................................. 6/22 ................ USATF 10.09 .......... *Darryl Haraway (FlSt) ............. 5/25 ......... NCAA East 9.90 ............ ——Baker ............................... -

Batty, Roth, Morton, Ziegler Earn Scholar-Athlete of the Year Nods For

Contact: Tom Lewis USTFCCCA 1100 Poydras St., Suite 1750 Phone: (504) 599-8904 New Orleans, LA 70163 Fax: (504) 599-8909 www.ustfccca.org Batty, Roth, Morton, Ziegler Earn Scholar-Athlete of the Year Nods for DI Men’s Track & Field In addition, 420 individuals receive All-Academic status for the track & field seasons August 3, 2011 NEW ORLEANS – BYU’s Miles Batty, Washington’s Scott Roth, Stanford’s Amaechi Morton, and Virginia Tech’s Alexander Ziegler were named on Wednesday as Scholar Athletes of the Year in Division I for the 2011 men’s track & field seasons by the U.S. Track & Field and Cross Country Coaches Association (USTFCCCA). The group represents collegiate track & field’s best in both athletics and academics. In addition, 420 student‐athletes were tapped as USTFCCCA All‐Academic honorees. Scholar Athlete of the Year Awards are determined from among those who earned All‐Academic status and placed highest in individual events at the most recent NCAA Championships. Those who earn multiple individual championship titles rank higher in the tie‐breaking process, and cumulative GPA is weighed as the final tiebreaker to establish a winner. Separate awards are given to track athletes and field athletes for the indoor and outdoor seasons, resulting in four categories. Indoor Track Scholar Athlete of the Year – Miles Batty, BYU Batty, a senior from Sandy, Utah, was the NCAA indoor champion in the mile run (3:59.49) and led the Cougars to a national championship in the distance medley relay as anchor. Batty accumulated a 3.95 cumulative GPA in neuroscience and exercise science while an undergrad at BYU. -