Chapter 11 Tools for Lexical Analysis and Parsing

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Chapter # 9 LEX and YACC

Chapter # 9 LEX and YACC. Dr. Shaukat Ali, Department of Computer Science, University of Peshawar. Lex and Yacc: Lex and yacc are a matched pair of tools. Lex breaks down files into sets of "tokens," roughly analogous to words. Yacc takes sets of tokens and assembles them into higher-level constructs, analogous to sentences. Lex's output is mostly designed to be fed into some kind of parser, and yacc is designed to work with the output of lex. Lex and yacc are tools for building programs: their output is itself code, which needs to be fed into a compiler, and additional user code may be added to use the code they generate. Lex: a lexical analyzer generator. Lex is a program designed to generate scanners, also known as tokenizers, which recognize lexical patterns in text. The name "Lex" is short for "lexical analyzer generator." Its main purpose is to facilitate lexical analysis: the processing of character sequences in source code to produce tokens for use as input to other programs such as parsers. Another tool for lexical analyzer generation is Flex. lex.l is an input file written in a language that describes the lexical analyzer to be generated. The lex compiler transforms lex.l into a C program known as lex.yy.c. lex.yy.c is compiled by the C compiler into a file called a.out. The output of the C compiler is the working lexical analyzer, which takes a stream of input characters and produces a stream of tokens. -
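To make the "stream of characters in, stream of tokens out" idea concrete, here is a minimal sketch in Python rather than in Lex itself. The token classes (NUMBER, IDENT, OP) and the function name `tokenize` are assumptions chosen for illustration and do not come from the excerpt. Note also that Python's regex alternation picks the first alternative that matches, whereas Lex picks the longest match, so this only approximates what a generated scanner does.

```python
import re

# Hypothetical token classes for illustration; a real Lex spec lists its own patterns.
TOKEN_SPEC = [
    ("NUMBER", r"\d+"),
    ("IDENT", r"[A-Za-z_]\w*"),
    ("OP", r"[+\-*/=()]"),
    ("SKIP", r"\s+"),
]
# One master pattern with a named group per token class.
MASTER = re.compile("|".join(f"(?P<{name}>{pattern})" for name, pattern in TOKEN_SPEC))


def tokenize(text):
    """Turn a character stream into a list of (kind, lexeme) tokens."""
    tokens = []
    pos = 0
    while pos < len(text):
        match = MASTER.match(text, pos)
        if match is None:
            raise ValueError(f"unexpected character {text[pos]!r} at offset {pos}")
        if match.lastgroup != "SKIP":  # whitespace is recognized but not emitted
            tokens.append((match.lastgroup, match.group()))
        pos = match.end()
    return tokens
```

Calling `tokenize("x = 3 + 42")` yields the identifier, the two operators, and the two numbers as separate tokens, which is the kind of stream a parser would then consume.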

Project1: Build a Small Scanner/Parser

Project1: Build A Small Scanner/Parser. Introducing Lex, Yacc, and POET (cs5363). Project1: Building a Scanner/Parser. Parse a subset of the C language: support two types of atomic values (int and float); support one type of compound value (arrays); support a basic set of language concepts, namely variable declarations (int, float, and array variables), expressions (arithmetic and boolean operations), and statements (assignments, conditionals, and loops). You can choose a different but equivalent language. You need to make your own test cases. Options of implementation (links available at class web site): manual in C/C++/Java (or whatever other language); Lex and Yacc (together with C/C++); POET, a scripting compiler writing language; or any other approach you choose, in which case you must document how to download/use any tools involved. This is just starting: there will be two other sub-projects. Type checking: check the types of expressions in the input program. Optimization/analysis/translation: do something with the input code and output the result. The starting project is important because it determines which language you can use for the other projects: Lex+Yacc can work only with C/C++; POET works with POET; manual means you stick to whatever language you pick. This class introduces Lex/Yacc/POET to you. Using Lex to build scanners: MyLex.l is processed by lex/flex to produce lex.yy.c; lex.yy.c is compiled by gcc/cc to produce a.out; a.out turns an input stream into tokens. Write a lex specification and save it in a file (MyLex.l). Compile the lex specification file by invoking lex/flex: lex MyLex.l. A lex.yy.c file is generated. -

User Manual for Ox: an Attribute-Grammar Compiling System Based on Yacc, Lex, and C Kurt M

Computer Science Technical Reports, Computer Science, 12-1992. User Manual for Ox: An Attribute-Grammar Compiling System based on Yacc, Lex, and C. Kurt M. Bischoff, Iowa State University. Recommended Citation: Bischoff, Kurt M., "User Manual for Ox: An Attribute-Grammar Compiling System based on Yacc, Lex, and C" (1992). Computer Science Technical Reports. 21. http://lib.dr.iastate.edu/cs_techreports/21 Abstract: Ox generalizes the function of Yacc in the way that attribute grammars generalize context-free grammars. Ordinary Yacc and Lex specifications may be augmented with definitions of synthesized and inherited attributes written in C syntax. From these specifications, Ox generates a program that builds and decorates attributed parse trees. Ox accepts a most general class of attribute grammars. The user may specify postdecoration traversals for easy ordering of side effects such as code generation. Ox handles the tedious and error-prone details of writing code for parse-tree management, so its use eases problems of security and maintainability associated with that aspect of translator development. The translators generated by Ox use internal memory management that is often much faster than the common technique of calling malloc once for each parse-tree node. -

Yacc a Tool for Syntactic Analysis

Yacc: a tool for Syntactic Analysis. CS 315 – Programming Languages, Pinar Duygulu, Bilkent University. CS315 Programming Languages © Pinar Duygulu. Lex and Yacc: 1) Lexical Analysis: the lexical analyzer scans the input stream and converts sequences of characters into tokens. Lex is a tool for writing lexical analyzers. 2) Syntactic Analysis (Parsing): the parser reads tokens and assembles them into language constructs using the grammar rules of the language. Yacc (Yet Another Compiler-Compiler) is a tool for constructing parsers: it reads a specification file that codifies the grammar of a language and generates a parsing routine. A yacc specification describes a Context Free Grammar (CFG) that can be used to generate a parser. Elements of a CFG: 1. Terminals: tokens and literal characters, 2. Variables (nonterminals): syntactical elements, 3. Production rules, and 4. Start rule. Format of a production rule: symbol: definition {action} ; Example: A → Bc is written in yacc as a: b 'c'; Format of a yacc specification file: declarations %% grammar rules and associated actions %% C programs. Declarations, used to define tokens and their characteristics: %token: declare names of tokens; %left: define left-associative operators; %right: define right-associative operators; %nonassoc: define operators that may not associate with themselves; %type: declare the type of variables; %union: declare multiple data types for semantic values; %start: declare the start symbol (default is the first variable in rules); %prec: assign precedence to a rule; %{ C declarations directly copied to the resulting C program %} (e.g., variables, types, macros…). A simple yacc specification to accept L = { a^n b^n | n > 1 }. -
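The excerpt stops before showing its yacc specification for L = { a^n b^n | n > 1 }. As a rough illustration of what such a grammar recognizes, the sketch below hand-codes a recursive-descent recognizer in Python for the assumed productions S → a S b | a a b b. Both the grammar and the function name `accepts` are my own choices, and the technique is recursive descent, not the LALR(1) table-driven parsing that yacc generates.

```python
def accepts(s):
    """Return True if s is in L = { a^n b^n | n > 1 }.

    Assumed grammar (not taken from the excerpt):
        S -> 'a' S 'b' | 'a' 'a' 'b' 'b'
    """

    def parse_s(i):
        # Returns the index just past an S that starts at i, or -1 on failure.
        if s.startswith("aabb", i):      # base production: a a b b
            return i + 4
        if s.startswith("a", i):         # recursive production: a S b
            j = parse_s(i + 1)
            if j != -1 and s.startswith("b", j):
                return j + 1
        return -1

    # The whole input must be consumed by a single S.
    return parse_s(0) == len(s)
```

With this sketch, `accepts("aabb")` and `accepts("aaabbb")` hold, while `accepts("ab")` does not, because the language requires n > 1.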

Binpac: a Yacc for Writing Application Protocol Parsers

binpac: A yacc for Writing Application Protocol Parsers. Ruoming Pang (Google, Inc., New York, NY, USA, [email protected]), Vern Paxson (International Computer Science Institute and Lawrence Berkeley National Laboratory, Berkeley, CA, USA, [email protected]), Robin Sommer (International Computer Science Institute, Berkeley, CA, USA, [email protected]), Larry Peterson (Princeton University, Princeton, NJ, USA, [email protected]). ABSTRACT: A key step in the semantic analysis of network traffic is to parse the traffic stream according to the high-level protocols it contains. This process transforms raw bytes into structured, typed, and semantically meaningful data fields that provide a high-level representation of the traffic. However, constructing protocol parsers by hand is a tedious and error-prone affair due to the complexity and sheer number of application protocols. This paper presents binpac, a declarative language and compiler designed to simplify the task of constructing robust and efficient semantic analyzers for complex network protocols. We discuss the design of the binpac language and a range of issues in generating efficient parsers from high-level specifications. [From the introduction:] Similarly, studies of Email traffic [21, 46], peer-to-peer applications [37], online gaming, and Internet attacks [29] require understanding application-level traffic semantics. However, it is tedious, error-prone, and sometimes prohibitively time-consuming to build application-level analysis tools from scratch, due to the complexity of dealing with low-level traffic data. We can significantly simplify the process if we can leverage a common platform for various kinds of application-level traffic analysis. A key element of such a platform is application-protocol parsers that translate packet streams into high-level representations of the traffic, on top of which we can then use measurement scripts that manipulate semantically meaningful data elements such as -
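As a small illustration of "transforming raw bytes into structured, typed fields", the following Python sketch parses a made-up record format by hand. The wire layout (a 2-byte big-endian type, a 2-byte big-endian length, then the payload) and the names `Record` and `parse_records` are hypothetical; this is not binpac syntax and not any real protocol, only the sort of hand-written parser that the abstract describes as tedious to build.

```python
import struct
from typing import NamedTuple


class Record(NamedTuple):
    kind: int       # hypothetical 16-bit record type
    payload: bytes  # raw payload bytes


def parse_records(data):
    """Parse a byte string made of [type:2][length:2][payload:length] records."""
    records = []
    offset = 0
    while offset < len(data):
        if len(data) - offset < 4:
            raise ValueError("truncated header")
        # "!HH" = network byte order, two unsigned 16-bit integers.
        kind, length = struct.unpack_from("!HH", data, offset)
        offset += 4
        if len(data) - offset < length:
            raise ValueError("truncated payload")
        records.append(Record(kind, data[offset:offset + length]))
        offset += length
    return records
```

Even for this toy format, the bounds checks take up about as much code as the parsing itself, which hints at why a declarative description is attractive for real protocols.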

An Attribute- Grammar Compiling System Based on Yacc, Lex, and C Kurt M

Computer Science Technical Reports, Computer Science, 12-1992. Design, Implementation, Use, and Evaluation of Ox: An Attribute-Grammar Compiling System based on Yacc, Lex, and C. Kurt M. Bischoff, Iowa State University. Recommended Citation: Bischoff, Kurt M., "Design, Implementation, Use, and Evaluation of Ox: An Attribute-Grammar Compiling System based on Yacc, Lex, and C" (1992). Computer Science Technical Reports. 23. http://lib.dr.iastate.edu/cs_techreports/23 Abstract: Ox generalizes the function of Yacc in the way that attribute grammars generalize context-free grammars. Ordinary Yacc and Lex specifications may be augmented with definitions of synthesized and inherited attributes written in C syntax. From these specifications, Ox generates a program that builds and decorates attributed parse trees. Ox accepts a most general class of attribute grammars. The user may specify postdecoration traversals for easy ordering of side effects such as code generation. Ox handles the tedious and error-prone details of writing code for parse-tree management, so its use eases problems of security and maintainability associated with that aspect of translator development. -

Lex and Yacc

Lex and Yacc: A Quick Tour. HW8: Use Lex/Yacc to turn this: Here's a list: * This is item one of a list * This is item two. Lists should be indented four spaces, with each item marked by a "*" two spaces left of four-space margin. Lists may contain nested lists, like this: * Hi, I'm item one of an inner list. * Me two. * Item 3, inner. * Item 3, outer list. This is outside both lists; should be back to no indent. Final suggestions: Into this: <P> Here's a list: <UL> <LI> This is item one of a list <LI>This is item two. Lists should be indented four spaces, with each item marked by a "*" two spaces left of four-space margin. Lists may contain nested lists, like this:<UL><LI> Hi, I'm item one of an inner list. <LI>Me two. <LI> Item 3, inner. </UL><LI> Item 3, outer list.</UL> This is outside both lists; should be back to no indent. <P><P> Final suggestions. [Figure: the char stream "if myVar == 6.02e23**2 then f( ..." passes through LEX to become a token stream, which passes through YACC to become a parse tree (if-stmt, ==, fun call, var, **, Arg 1, Arg 2, float-lit, int-lit, ...).] Lex / Yacc History: Origin: early 1970's at Bell Labs. Many versions & many similar tools: Lex, flex, jflex, posix, …; Yacc, bison, byacc, CUP, posix, …. Targets C, C++, C#, Python, Ruby, ML, …. We'll use jflex & byacc/j, targeting java (but for simplicity, I usually just say lex/yacc). Uses: "Front end" of many real compilers, e.g., gcc. "Little languages": many special purpose utilities evolve some clumsy, ad hoc, syntax; it is often easier, simpler, cleaner and more flexible to use lex/yacc or similar tools from the start. Lex: A Lexical Analyzer Generator. Input (my.flex): regular exprs defining "tokens"; fragments of declarations & code. Output (from jflex): a java program "yylex.java". Use: compile & link yylex.java with your main(); calls to yylex() read chars & return successive tokens. -

Time-Sharing System

UNIX™ TIME-SHARING SYSTEM: UNIX PROGRAMMER'S MANUAL, Seventh Edition, Volume 2B, January, 1979. Bell Telephone Laboratories, Incorporated, Murray Hill, New Jersey. Yacc: Yet Another Compiler-Compiler. Stephen C. Johnson, Bell Laboratories, Murray Hill, New Jersey 07974. ABSTRACT: Computer program input generally has some structure; in fact, every computer program that does input can be thought of as defining an "input language" which it accepts. An input language may be as complex as a programming language, or as simple as a sequence of numbers. Unfortunately, usual input facilities are limited, difficult to use, and often are lax about checking their inputs for validity. Yacc provides a general tool for describing the input to a computer program. The Yacc user specifies the structures of his input, together with code to be invoked as each such structure is recognized. Yacc turns such a specification into a subroutine that handles the input process; frequently, it is convenient and appropriate to have most of the flow of control in the user's application handled by this subroutine. The input subroutine produced by Yacc calls a user-supplied routine to return the next basic input item. Thus, the user can specify his input in terms of individual input characters, or in terms of higher level constructs such as names and numbers. The user-supplied routine may also handle idiomatic features such as comment and continuation conventions, which typically defy easy grammatical specification. Yacc is written in portable C. The class of specifications accepted is a very general one: LALR(1) grammars with disambiguating rules. -
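The division of labor described in the abstract (an input subroutine that owns the control flow, calls a user-supplied routine for the next basic input item, and invokes user code as each structure is recognized) can be sketched in a few lines of Python. Everything here is an assumption made for illustration: the input language is the abstract's "sequence of numbers", and `make_lexer`, `parse_numbers`, and `on_number` are invented names. The parser is a hand-written loop, not a Yacc-generated LALR(1) parser.

```python
import re


def make_lexer(text):
    """User-supplied routine: each call returns the next input item, or None at end."""
    items = iter(text.split())

    def next_token():
        return next(items, None)

    return next_token


def parse_numbers(next_token, on_number):
    """Recognize 'zero or more integers', running the user's action for each one.

    Returns how many numbers were recognized.
    """
    count = 0
    while True:
        token = next_token()          # the parser pulls items from the lexer
        if token is None:
            return count
        if re.fullmatch(r"-?[0-9]+", token) is None:
            raise SyntaxError(f"expected a number, got {token!r}")
        on_number(int(token))         # action invoked as the structure is recognized
        count += 1
```

A caller might pass `seen.append` as the action to collect the values, or a running-total function to sum them; the parsing loop itself stays unchanged either way.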

Compiler Construction Prof. Levy Problem Set 6 Due Monday 08 March Reading Assignm

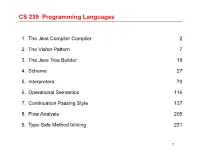

Lambda-Calculus

CS 239 Programming Languages. Contents: 1. The Java Compiler Compiler; 2. The Visitor Pattern; 3. The Java Tree Builder; 4. Scheme; 5. Interpreters; 6. Operational Semantics; 7. Continuation Passing Style; 8. Flow Analysis; 9. Type-Safe Method Inlining. Chapter 1: The Java Compiler Compiler. The Java Compiler Compiler (JavaCC) can be thought of as "Lex and Yacc for Java." It is based on LL(k) rather than LALR(1). Grammars are written in EBNF. The Java Compiler Compiler transforms an EBNF grammar into an LL(k) parser. The JavaCC grammar can have embedded action code written in Java, just like a Yacc grammar can have embedded action code written in C. The lookahead can be changed by writing LOOKAHEAD(...). The whole input is given in just one file (not two). The JavaCC input format: one file containing a header, token specifications for lexical analysis, and a grammar. Example of a token specification: TOKEN : { < INTEGER_LITERAL: ( ["1"-"9"] (["0"-"9"])* | "0" ) > } Example of a production: void StatementListReturn() : {} { ( Statement() )* "return" Expression() ";" } Generating a parser with JavaCC: javacc fortran.jj (generates a parser with a specified name); javac Main.java (Main.java contains a call of the parser); java Main < prog.f (parses the program prog.f). Chapter 2: The Visitor Pattern. For object-oriented programming, the Visitor pattern enables the definition of a new operation on an object structure without changing the classes of the objects. (Gamma, Helm, Johnson, Vlissides: Design Patterns, 1995.) Sneak Preview: when using the Visitor pattern, the set of classes must be fixed in advance, and each class must have an accept method. -
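The two requirements stated at the end of the excerpt (a fixed set of classes, each with an accept method) can be seen in a minimal Python sketch. The node classes `Num` and `Add` and the visitors `Evaluate` and `Show` are hypothetical names for illustration; the course itself works in Java, so this only mirrors the shape of the pattern.

```python
class Num:
    """Leaf node holding an integer."""

    def __init__(self, value):
        self.value = value

    def accept(self, visitor):
        return visitor.visit_num(self)


class Add:
    """Interior node representing left + right."""

    def __init__(self, left, right):
        self.left = left
        self.right = right

    def accept(self, visitor):
        return visitor.visit_add(self)


class Evaluate:
    """One operation over the tree: compute its value."""

    def visit_num(self, node):
        return node.value

    def visit_add(self, node):
        return node.left.accept(self) + node.right.accept(self)


class Show:
    """A second operation, added without touching Num or Add."""

    def visit_num(self, node):
        return str(node.value)

    def visit_add(self, node):
        return f"({node.left.accept(self)} + {node.right.accept(self)})"
```

For a tree such as `Add(Num(1), Add(Num(2), Num(3)))`, calling `accept` with `Evaluate()` computes the sum and with `Show()` produces a parenthesized string. Adding a third operation means writing a third visitor class; adding a new node class, by contrast, would require changing every visitor, which is why the set of classes is assumed fixed.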

Comparing and Merging Files

Comparing and Merging Files, for Diffutils 3.8 and patch 2.5.4, 2 January 2021. David MacKenzie, Paul Eggert, and Richard Stallman. This manual is for GNU Diffutils (version 3.8, 2 January 2021), and documents the GNU diff, diff3, sdiff, and cmp commands for showing the differences between files and the GNU patch command for using their output to update files. Copyright © 1992–1994, 1998, 2001–2002, 2004, 2006, 2009–2021 Free Software Foundation, Inc. Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.3 or any later version published by the Free Software Foundation; with no Invariant Sections, no Front-Cover Texts, and no Back-Cover Texts. A copy of the license is included in the section entitled "GNU Free Documentation License." Short Contents: Overview; 1 What Comparison Means; 2 diff Output Formats; 3 Incomplete Lines; 4 Comparing Directories; 5 Making diff Output Prettier; 6 diff Performance Tradeoffs; 7 Comparing Three Files; 8 Merging From a Common Ancestor; 9 Interactive Merging with sdiff; 10 Merging with patch; 11 Tips for Making and Using Patches; 12 -

Awk — a Pattern Scanning and Processing Language (Second

Awk - A Pattern Scanning and Processing Language (Second Edition). Alfred V. Aho, Brian W. Kernighan, Peter J. Weinberger. ABSTRACT: Awk is a programming language whose basic operation is to search a set of files for patterns, and to perform specified actions upon lines or fields of lines which contain instances of those patterns. Awk makes certain data selection and transformation operations easy to express; for example, the awk program length > 72 prints all input lines whose length exceeds 72 characters; the program NF % 2 == 0 prints all lines with an even number of fields; and the program {$1=log($1); print } replaces the first field of each line by its logarithm. Awk patterns may include arbitrary boolean combinations of regular expressions and of relational operators on strings, numbers, fields, variables, and array elements. Actions may include the same pattern-matching constructions as in patterns, as well as arithmetic and string expressions and assignments, if-else, while, for statements, and multiple output streams. This report contains a user's guide, a discussion of the design and implementation of awk, and some timing statistics. 1. Introduction: Awk is a programming language designed to make many common information retrieval and text manipulation tasks easy to state and to perform. The basic operation of awk is to scan a set of input lines in order, searching for lines which match any of a set of patterns which the user has specified. ... merely printing the matching line. For example, the awk program {print $3, $2} prints the third and second columns of a table in that
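For readers who do not know awk, the three one-line programs quoted above (`length > 72`, `NF % 2 == 0`, and `{print $3, $2}`) can be approximated in Python as below. This is a sketch under stated assumptions: the function names are invented, lines are assumed to arrive without trailing newlines, and fields are split on runs of whitespace with `str.split()`, which is close to awk's default field splitting but not a full emulation.

```python
def long_lines(lines, limit=72):
    """Like awk 'length > 72': keep lines longer than the limit."""
    return [line for line in lines if len(line) > limit]


def even_field_lines(lines):
    """Like awk 'NF % 2 == 0': keep lines with an even number of fields."""
    return [line for line in lines if len(line.split()) % 2 == 0]


def third_and_second(lines):
    """Like awk '{print $3, $2}': emit field 3 then field 2 for every line."""
    out = []
    for line in lines:
        fields = line.split()
        # awk treats a missing field as an empty string rather than an error.
        third = fields[2] if len(fields) > 2 else ""
        second = fields[1] if len(fields) > 1 else ""
        out.append(f"{third} {second}")
    return out
```

The comparison shows what awk's pattern-action form buys: the loop over lines, the field splitting, and the printing are all implicit in the awk versions.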