Dissemination of Vaccine Misinformation on Twitter and Its Countermeasures

Total Pages: 16

File Type: pdf, Size: 1020Kb


Recommended publications


Controversial CFS Researcher Arrested and Jailed - ScienceInsider

Controversial CFS Researcher Arrested and Jailed - ScienceInsider http://news.sciencemag.org/scienceinsider/2011/11/controversial-cfs-researcher-arr.html?ref=em... by Jon Cohen on 19 November 2011, 6:46 PM. Judy Mikovits, who has been in the spotlight for the past 2 years after Science published a controversial report by her group that tied a novel mouse retrovirus to chronic fatigue syndrome (CFS), is now behind bars. Sheriffs in Ventura County, California, arrested Mikovits yesterday on felony charges that she is a fugitive from justice. She is being held at the Todd Road Jail in Santa Paula without bail. But ScienceInsider could obtain only sketchy details about the specific charges against her. The Ventura County sheriff's office told ScienceInsider that it had no available details about the charges and was acting upon a warrant issued by Washoe County in Nevada. A spokesperson for the Washoe County Sheriff's Office told ScienceInsider that it did not issue the warrant, nor did the Reno or Sparks police department. He said it could be from one of several federal agencies in Washoe County.

Ketogenic Diet and Immunotherapy in Cancer and Neurological Diseases

American Journal of Immunology. Editorials. Ketogenic Diet and Immunotherapy in Cancer and Neurological Diseases. 4th International Congress on Integrative Medicine, 1, 2 April 2017, Fulda, Germany. Thomas Peter (Therapy Center Anuvindati, Bensheim, Germany) and Marco Ruggiero (Silver Spring Sagl, Arzo-Mendrisio, Switzerland). Article history: Received: 23-08-2017; Revised: 23-08-2017; Accepted: 25-09-2017. Corresponding Author: Marco Ruggiero, Silver Spring Sagl, Via Raimondo Rossi 24, Arzo-Mendrisio 6864, Switzerland. Email: [email protected]. Abstract: In this Meeting Report, we review proceedings and comment on talks given at the 4th International Congress on Integrative Medicine held on April 1 and 2, 2017, in Fulda, Germany. The Theme this year was “Ketogenic Diet and Immunotherapy in Cancer and Neurological Diseases.” Speakers came from Europe and the United States and the Congress was attended by more than 100 interested parties from all over the world. Keywords: Ketogenic Diet, Immunotherapy, Integrative Medicine, Cancer, Autism, Lyme Disease. Introduction: The 4th International Congress on Integrative Medicine was held on 1 and 2 April, 2017, in Fulda, Germany. The theme of this Congress was "Ketogenic Diet and Immunotherapy in Cancer and Neurological Diseases" (Ketogene Ernährung und Immuntherapie bei Krebs und Neurologischen Erkrankungen). The Congress brought together researchers from all over the world to share their views and research on the [...] a talk entitled "Exploiting cancer metabolism with ketosis and hyperbaric oxygen". Dr. Poff lectured on the synergism between nutritional ketosis and hyperbaric oxygen in reducing tumor burden in experimental animals and offered insights on the mechanism of action of ketone bodies in a variety of conditions from seizures to cancer proliferation. The role of exogenous ketone bodies in counteracting cancer cell proliferation even in the absence of carbohydrate restriction was also discussed.

Executive Summary: Under the Surface: Covid-19 Vaccine Narratives, Misinformation and Data Deficits on Social Media

WWW.FIRSTDRAFTNEWS.ORG @FIRSTDRAFTNEWS Executive summary. Under the surface: Covid-19 vaccine narratives, misinformation and data deficits on social media. Authors: Rory Smith, Seb Cubbon and Claire Wardle. Contributing researchers: Jack Berkefeld and Tommy Shane. Mapping competing vaccine narratives across English, Spanish and Francophone social media. Cite this report: Smith, R., Cubbon, S. & Wardle, C. (2020). Under the surface: Covid-19 vaccine narratives, misinformation & data deficits on social media. First Draft. https://firstdraftnews.org/vaccine-narratives-report-summary-november-2020 This research demonstrates the complexity of the vaccine information ecosystem, where a cacophony of voices and narratives have coalesced to create an environment of extreme uncertainty. Two topics are driving a large proportion of the current global vaccine discourse, especially around a Covid-19 vaccine: the “political and economic motives” of actors and institutions involved in vaccine development and the “safety, efficacy and necessity” concerns around vaccines. Narratives challenging the safety of vaccines have been perennial players in the online vaccine debate. Yet this research shows that narratives related to mistrust in the intentions of institutions and key figures surrounding vaccines are now driving as much of the online conversation and vaccine skepticism as safety concerns. This issue is compounded by the complexities and vulnerabilities of this information ecosystem. It is full of “data deficits” (situations where demand for information about a topic is high, but the supply of credible information is low) that are being exploited by bad actors. These data deficits complicate efforts to accurately make sense of the development of a Covid-19 vaccine and vaccines more generally.

REALM Research Briefing: Vaccines, Variants, and Ventilation

Briefing: Vaccines, Variants, and Ventilation A Briefing on Recent Scientific Literature Focused on SARS-CoV-2 Vaccines and Variants, Plus the Effects of Ventilation on Virus Spread Dates of Search: 01 January 2021 through 05 July 2021 Published: 22 July 2021 This document synthesizes various studies and data; however, the scientific understanding regarding COVID-19 is continuously evolving. This material is being provided for informational purposes only, and readers are encouraged to review federal, state, tribal, territorial, and local guidance. The authors, sponsors, and researchers are not liable for any damages resulting from use, misuse, or reliance upon this information, or any errors or omissions herein. INTRODUCTION Purpose of This Briefing • Access to the latest scientific research is critical as libraries, archives, and museums (LAMs) work to sustain modified operations during the continuing severe acute respiratory syndrome coronavirus 2 (SARS-CoV-2) pandemic. • As an emerging event, the SARS-CoV-2 pandemic continually presents new challenges and scientific questions. At present, SARS-CoV-2 vaccines and variants in the US are two critical areas of focus. The effects of ventilation-based interventions on the spread of SARS-CoV-2 are also an interest area for LAMs. This briefing provides key information and results from the latest scientific literature to help inform LAMs making decisions related to these topics. How to Use This Briefing: This briefing is intended to provide timely information about SARS-CoV-2 vaccines, variants, and ventilation to LAMs and their stakeholders. Due to the evolving nature of scientific research on these topics, the information provided here is not intended to be comprehensive or final. -

Assessing Non-Consensual Human Experimentation During the War on Terror

INTERROGATION OR EXPERIMENTATION? ASSESSING NON-CONSENSUAL HUMAN EXPERIMENTATION DURING THE WAR ON TERROR. WILLIAM J. ACEVES* The prohibition against non-consensual human experimentation has long been considered sacrosanct. It traces its legal roots to the Nuremberg trials although the ethical foundations dig much deeper. It prohibits all forms of medical and scientific experimentation on non-consenting individuals. The prohibition against non-consensual human experimentation is now well established in both national and international law. Despite its status as a fundamental and non-derogable norm, the prohibition against non-consensual human experimentation was called into question during the War on Terror by the CIA’s treatment of “high-value detainees.” Seeking to acquire actionable intelligence, the CIA tested the “theory of learned helplessness” on these detainees by subjecting them to a series of enhanced interrogation techniques. This Article revisits the prohibition against non-consensual human experimentation to determine whether the CIA’s treatment of detainees violated international law. It examines the historical record that gave rise to the prohibition and its eventual codification in international law. It then considers the application of this norm to the CIA’s treatment of high-value detainees by examining Salim v. Mitchell, a lawsuit brought by detainees who were subjected to enhanced interrogation techniques. This Article concludes that the CIA breached the prohibition against non-consensual human experimentation when it conducted systematic studies on these detainees to validate the theory of learned helplessness. Copyright © 2018 William J. Aceves. *Dean Steven R. Smith Professor of Law at California Western School of Law.

Stanley A. Plotkin, MD

Stanley A. Plotkin, MD. Recipient of the 2009 Maxwell Finland Award for Scientific Achievement. Physician, scientist, scholar: Stanley Plotkin has successfully juggled all three roles during his decades of service. From his first job following graduation from medical school as an intern at Cleveland Metropolitan General Hospital to his present role as consultant to the vaccine manufacturer sanofi pasteur, Dr. Plotkin has explored the world of infectious diseases and has been actively involved in developing some of the most potent vaccines against those diseases. “Dr. Plotkin has been a tireless advocate for the protection of humans, and children in particular, from preventable infectious diseases. His lifetime of work on vaccines has led to profound reductions in both morbidity and mortality not only in the United States, but throughout the world,” says Vi- [...] Dr. Plotkin was born in New York City. His father was a commercial telegrapher. His mother, who occasionally filled in as an accountant, mostly stayed home with Stanley and his younger sister, Brenda. At age 15, Stanley, a student at the Bronx High School of Science, discovered what he wanted to do with his life. After reading the novel Arrowsmith by Sinclair Lewis and the nonfictional work Microbe Hunters by Paul de Kruif (two books about scientists battling diseases), Stanley set his sights on becoming a physician and a research scientist. Dr. Plotkin graduated from New York University in 1952 and obtained a medical degree at Downstate Medical Center in Brooklyn. He was a resident in pediatrics at the Children’s Hospital of Philadelphia and at the Hospital for Sick Children in London.

ALTIMMUNE, INC. (Exact Name of Registrant As Specified in Its Charter)

UNITED STATES SECURITIES AND EXCHANGE COMMISSION Washington, D.C. 20549 FORM 10-K (Mark One) ☒ Annual Report Pursuant to Section 13 or 15(d) of the Securities Exchange Act of 1934 For the fiscal year ended December 31, 2019 ☐ Transition Report under Section 13 or 15(d) of the Securities Exchange Act of 1934 For the transition period from to Commission File Number: 001-32587 ALTIMMUNE, INC. (Exact name of registrant as specified in its charter) Delaware 20-2726770 (State or other jurisdiction of (I.R.S. Employer incorporation or organization) Identification No.) 910 Clopper Road, Suite 201S, Gaithersburg, MD 20878 (Address of principal executive offices) (Zip Code) Registrant’s telephone number, including area code (240) 654-1450 Securities registered pursuant to Section 12(b) of the Act: Title of each class Trading Symbol(s) Name of each exchange on which registered Common stock, par value $0.0001 per share ALT The NASDAQ Global Market Securities registered pursuant to Section 12(g) of the Act: None Indicate by check mark if the registrant is a well-known seasoned issuer, as defined in Rule 405 of the Securities Act. Yes ☐ No ☒ Indicate by check mark if the registrant is not required to file reports pursuant to Section 13 or 15(d) of the Act. Yes ☐ No ☒ Indicate by check mark whether the registrant (1) has filed all reports required to be filed by Section 13 or 15(d) of the Securities Exchange Act of 1934 during the preceding 12 months (or for such shorter period that the registrant was required to file such reports), and (2) has been subject to such filing requirements for the past 90 days. -

Case 1:21-cv-00756 ECF No. 1, PageID.1 Filed 08/27/21 Page 1 of 49

Case 1:21-cv-00756 ECF No. 1, PageID.1 Filed 08/27/21 Page 1 of 49 IN THE UNITED STATES DISTRICT COURT FOR THE WESTERN DISTRICT OF MICHIGAN. JEANNA NORRIS, on behalf of herself and all others similarly situated, Plaintiffs, v. SAMUEL L. STANLEY, JR. in his official capacity as President of Michigan State University; DIANNE BYRUM, in her official capacity as Chair of the Board of Trustees, DAN KELLY, in his official capacity as Vice Chair of the Board of Trustees; and RENEE JEFFERSON, PAT O’KEEFE, BRIANNA T. SCOTT, KELLY TEBAY, and REMA VASSAR, in their official capacities as Members of the Board of Trustees of Michigan State University, and JOHN and JANE DOES 1-10, Defendants. CLASS ACTION COMPLAINT FOR DECLARATORY AND INJUNCTIVE RELIEF. JURY TRIAL DEMANDED. Plaintiff and those similarly situated, by and through their attorneys at the New Civil Liberties Alliance (“NCLA”), hereby complains and alleges the following: INTRODUCTORY STATEMENT a. By the spring of 2020, the novel coronavirus SARS-CoV-2, which can cause the disease COVID-19, had spread across the globe. Since then, and because of the federal government’s “Operation Warp Speed,” three separate coronavirus vaccines have been developed and approved more swiftly than any other vaccines in our nation’s history. The Food and Drug Administration (“FDA”) issued an Emergency Use Authorization (“EUA”) for the Pfizer-BioNTech COVID-19 Vaccine (“BioNTech Vaccine”) on December 11, 2020.1 Just one week later, FDA issued a second EUA for the Moderna COVID-19 Vaccine (“Moderna Vaccine”).2 FDA issued its most recent EUA for the Johnson & Johnson COVID-19 Vaccine (“Janssen Vaccine”) on February 27, 2021 (the only EUA for a single-shot vaccine).3 b.

Understanding the QAnon Conspiracy from the Perspective of Canonical Information

The Gospel According to Q: Understanding the QAnon Conspiracy from the Perspective of Canonical Information. Max Aliapoulios (New York University), Antonis Papasavva (University College London), Cameron Ballard (New York University), Emiliano De Cristofaro (University College London), Gianluca Stringhini (Boston University), Savvas Zannettou (Max Planck Institute for Informatics), and Jeremy Blackburn (Binghamton University). iDRAMA, https://idrama.science. Abstract: The QAnon conspiracy theory claims that a cabal of (literally) blood-thirsty politicians and media personalities are engaged in a war to destroy society. By interpreting cryptic “drops” of information from an anonymous insider calling themself Q, adherents of the conspiracy theory believe that Donald Trump is leading them in an active fight against this cabal. QAnon has been covered extensively by the media, as its adherents have been involved in multiple violent acts, including the January 6th, 2021 seditious storming of the US Capitol building. [...] administration of a vaccine [54]. Some of these theories can threaten democracy itself [46, 50]; e.g., Pizzagate emerged during the 2016 US Presidential elections and claimed that Hillary Clinton was involved in a pedophile ring [51]. A specific example of the negative consequences social media can have is the QAnon conspiracy theory. It originated on the Politically Incorrect Board (/pol/) of the anonymous imageboard 4chan via a series of posts from a user going by the nickname Q. Claiming to be a US government official, Q described a vast conspiracy of actors who have infiltrated the US


Archons (Commanders) [NOTICE: They Are NOT Alien Parasites], and Then, in a Mirror Image of the Great Emanations of the Pleroma, Hundreds of Lesser Angels

A R C H O N S HIDDEN RULERS THROUGH THE AGES WATCH THIS IMPORTANT VIDEO UFOs, Aliens, and the Question of Contact MUST-SEE THE OCCULT REASON FOR PSYCHOPATHY Organic Portals: Aliens and Psychopaths KNOWLEDGE THROUGH GNOSIS Boris Mouravieff - GNOSIS IN THE BEGINNING ...1 The Gnostic core belief was a strong dualism: that the world of matter was deadening and inferior to a remote nonphysical home, to which an interior divine spark in most humans aspired to return after death. This led them to an absorption with the Jewish creation myths in Genesis, which they obsessively reinterpreted to formulate allegorical explanations of how humans ended up trapped in the world of matter. The basic Gnostic story, which varied in details from teacher to teacher, was this: In the beginning there was an unknowable, immaterial, and invisible God, sometimes called the Father of All and sometimes by other names. “He” was neither male nor female, and was composed of an implicitly finite amount of a living nonphysical substance. Surrounding this God was a great empty region called the Pleroma (the fullness). Beyond the Pleroma lay empty space. The God acted to fill the Pleroma through a series of emanations, a squeezing off of small portions of his/its nonphysical energetic divine material. In most accounts there are thirty emanations in fifteen complementary pairs, each getting slightly less of the divine material and therefore being slightly weaker. The emanations are called Aeons (eternities) and are mostly named personifications in Greek of abstract ideas.

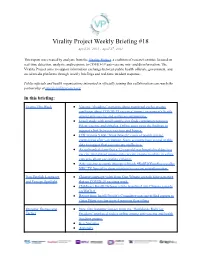

Virality Project Weekly Briefing #18 April 20, 2021 - April 27, 2021

Virality Project Weekly Briefing #18 April 20, 2021 - April 27, 2021 This report was created by analysts from the Virality Project, a coalition of research entities focused on real-time detection, analysis, and response to COVID-19 anti-vaccine mis- and disinformation. The Virality Project aims to support information exchange between public health officials, government, and social media platforms through weekly briefings and real-time incident response. Public officials and health organizations interested in officially joining this collaboration can reach the partnership at [email protected]. In this briefing: Events This Week ● Vaccine “shedding” narrative about menstrual cycles creates confusion about COVID-19 vaccines’ impact on women’s health among anti-vaccine and wellness communities. ● Israeli study with small sample size finds correlation between Pfizer vaccine and shingles. Online users twist the findings to suggest a link between vaccines and herpes. ● CDC reports 5,800 “breakthrough” cases of people getting coronavirus after vaccination. Some accounts have seized on this data to suggest that vaccines are ineffective. ● An unfounded claim that a 12-year-old was hospitalized due to a vaccine trial spread among anti-vaccine groups resulting in safety concerns about vaccinating children. ● Anti-vaccine accounts attempt to hijack #RollUpYourSleeves after NBC TV Special to draw attention to vaccine misinformation. Non-English Language and Foreign Spotlight ● Chinese language video from Guo Wengui spreads false narrative that no COVID-19 vaccines work. ● Children’s Health Defense article translated into Chinese spreads on WeChat. ● Report from Israeli People’s Committee uses unverified reports to claim Pfizer vaccine more dangerous than others Ongoing Themes and Tactics ● New film featuring footage from the “Worldwide Rally for Freedom” marketed widely online among anti-vaccine and health freedom groups.

Articles & Reports

Reading & Resource List on Information Literacy. Articles & Reports. Adegoke, Yemisi. "Like. Share. Kill.: Nigerian police say false information on Facebook is killing people." BBC News. Accessed November 21, 2018. https://www.bbc.co.uk/news/resources/idt-sh/nigeria_fake_news. See how Facebook posts are fueling ethnic violence. ALA Public Programs Office. “News: Fake News: A Library Resource Round-Up.” American Library Association. February 23, 2017. http://www.programminglibrarian.org/articles/fake-news-library-round. ALA Public Programs Office. “Post-Truth: Fake News and a New Era of Information Literacy.” American Library Association. Accessed March 2, 2017. http://www.programminglibrarian.org/learn/post-truth-fake-news-and-new-era-information-literacy. This has a 45-minute webinar by Dr. Nicole A. Cook, University of Illinois School of Information Sciences, which is intended for librarians but is an excellent introduction to fake news. Albright, Jonathan. “The Micro-Propaganda Machine.” Medium. November 4, 2018. https://medium.com/s/the-micro-propaganda-machine/. In a three-part series, Albright critically examines the role of Facebook in spreading lies and propaganda. Allen, Mike. “Machine learning can’t flag false news, new studies show.” Axios. October 15, 2019. ios.com/machine-learning-cant-flag-false-news-55aeb82e-bcbb-4d5c-bfda-1af84c77003b.html. Allsop, Jon. "After 10,000 'false or misleading claims,' are we any better at calling out Trump's lies?" Columbia Journalism Review. April 30, 2019. https://www.cjr.org/the_media_today/trump_fact-check_washington_post.php. Allsop, Jon. “Our polluted information ecosystem.” Columbia Journalism Review. December 11, 2019. https://www.cjr.org/the_media_today/cjr_disinformation_conference.php. Amazeen, Michelle A.