15 Precision System Clock Architecture

Total Pages: 16

File Type: pdf, Size: 1020 KB

Recommended publications

Washington, Wednesday, January 25, 1950

VOLUME 15 ■ NUMBER 16 Washington, Wednesday, January 25, 1950 TITLE 3 - THE PRESIDENT EXECUTIVE ORDER 10096 Providing for a Uniform Patent Policy for the Government With Respect to Inventions Made by Government Employees and for the Administration of Such Policy WHEREAS inventive advances in scientific and technological fields frequently result from governmental activities carried on by Government employees; and WHEREAS the Government of the United States is expending large sums of money annually for the conduct of these activities; [continuation column] in paragraph (a) last above, to the invention is insufficient equitably to justify a requirement of assignment to the Government of the entire right, title and interest to such invention, or in any case where the Government has insufficient interest in an invention to obtain entire right, title and interest therein (although the Government could obtain some under paragraph (a), above), the Government agency concerned, subject to the approval of the Chairman of the Government Patents Board (provided for in paragraph 3 of this order and hereinafter referred to as the Chairman), shall leave title to such invention in the employee, subject, however, to the reser CONTENTS THE PRESIDENT Executive Order: Inventions made by Government employees; providing, for uniform patent policy for Government and for administration of such policy, page 389. EXECUTIVE AGENCIES Agriculture Department: See Commodity Credit Corporation; Forest Service; Production and Marketing Administration. -

Mike Zornek • March 2020

Working with Time Zones Inside a Phoenix App Mike Zornek • March 2020 Terminology Layers of Wall Time (top to bottom): Wall Time; Standard Offset; Standard Time; Time Zone UTC Offset; Universal Coordinated Time (UTC); Leap Seconds; International Atomic Time (TAI). Things Change: Standard Offset (politics), changes ~ 2 / year; Time Zone UTC Offset (politics), changes ~ 10 / year; Leap Seconds (celestial mechanics), 27 changes so far, last was in Dec 2016, ~ 37 seconds. "Time Zone" How Elixir Represents Time Date: year, month, day. Time: hour, minute, second, nanosecond. NaiveDateTime: Date and Time. DateTime: NaiveDateTime plus time_zone, utc_offset, std_offset, zone_abbr. -

Operation Guide 3448

MO1611-EA © 2016 CASIO COMPUTER CO., LTD. Operation Guide 3448 ENGLISH Congratulations upon your selection of this CASIO watch. Warning! • The measurement functions built into this watch are not intended for taking measurements that require professional or industrial precision. Values produced by this watch should be considered as reasonable representations only. • Note that CASIO COMPUTER CO., LTD. assumes no responsibility for any damage or loss suffered by you or any third party arising through the use of your watch or its malfunction. • Keep the watch away from audio speakers, magnetic necklaces, cell phones, and other devices that generate strong magnetism. Exposure to strong magnetism can magnetize the watch and cause incorrect direction readings. If incorrect readings continue even after you perform bidirectional calibration, it could mean that your watch has been magnetized. If this happens, contact your original retailer or an authorized CASIO Service Center. About This Manual • Depending on the model of your watch, display text appears either as dark figures on a light background, or light figures on a dark background. All sample displays in this manual are shown using dark figures on a light background. • Button operations are indicated using the letters shown in the illustration. • Note that the product illustrations in this manual are intended for reference only, and so the actual product may appear somewhat different than depicted by an illustration. Things to check before using the watch 1. Check the Home City and the daylight saving time (DST) setting. Use the procedure under “To configure Home City settings” (page E-16) to configure your Home City and daylight saving time settings. Contents: About This Manual; Things to check before using the watch -

Computers Shouldn't Be Limitations Advertising & Distribution a Handicap Is a Limitation

No. 48 July-August 1989 $3.95 THE MICRO TECHNICAL JOURNAL Tools For The Physically Impaired What's important to people who have handicaps? We asked them. (Their responses may surprise you.) The Adventure Begins, page 8: Dallas Vordahl demonstrates what you can do with a computer, a mouthstick, and a very limited amount of head motion. Writing Software For The Blind, page 16: Don't exclude the blind from your next software package. File Transfer Via The Parallel Port, page 20. The LIMBO Project, page 28: Build a maze-running robot. And More ... Debugging A Processor, page 44. Growing Your Own Software Business, page 66. Great SOGs, page 83. And Much, Much, More. Introducing ... The PC-LabCard Family Only from HSC Electronic Supply. IBM PC/XT/AT and its compatible models are moving into Industrial/Laboratory applications at an increasing rate. The reasons for this include their price/performance ratio and short user learning curve. PC-based data acquisition boards are now taking the place of the traditional data loggers or recorders which cost several times more. Digital I/O and Counter Card: • 32 Digital Input Channels - TTL compatible - Low loading: 0.2 mA at 0.4V input • 32 Digital Output Channels - TTL compatible - Driving capacity: Sink 24 mA, source 15 mA • Intel 8253 Timer/Counter - 3 channels of timer/counter. Prototype Development Card: • Large breadboard area (3290 holes) • Independent memory and I/O address decoders built-in • Memory and I/O ports are jumper selectable • All bus signals are buffered, marked, and ready for use -

GLONASS Time. 2. Generation of System Timescale

GLONASS TIME SCALE DESCRIPTION Definition of System 1. System timescale: GLONASS Time. 2. Generation of system timescale: on the basis of time scales of GLONASS Central Synchronizers (CS). 3. Is the timescale steered to a reference UTC timescale: Yes. a. To which reference timescale: UTC(SU), generated by State Time and Frequency Reference (STFR). b. Whole second offset from reference timescale: 10800 s (03 hrs 00 min 00 s), that is, $t_{\mathrm{GLONASS}} = \mathrm{UTC(SU)} + 03\ \text{hrs}\ 00\ \text{min}$. c. Maximum offset (modulo 1 s) from reference timescale: 660 ns (probability 0.95) – in 2014; 4 ns (probability 0.95) – in 2020. 4. Corrections to convert from satellite to system timescale: SVs broadcast corrections $\tau_n(t_b)$ and $\gamma_n(t_b)$ in L1, L2 frequency bands for 30-minute segments of prediction interval. a. Type of corrections given; include statement on relativistic corrections: Linear coefficients broadcast in operative part of navigation message for each SV (in accordance with GLONASS ICD). Periodic part of relativistic corrections taking into account the deviation of individual SVs orbits from GLONASS nominal orbits is incorporated in calculation of broadcast corrections to convert from satellite timescale to GLONASS Time. b. Specified accuracy of corrections to system timescale: The accuracy of calculated offset between SV timescale and GLONASS Time – 5,6 ns (rms). c. Location of corrections in broadcast messages: L1/L2 - $\tau_n(t_b)$ – line 4, bits 59 – 80 of navigation frame; - $\gamma_n(t_b)$ - line 3, bits 69 – 79 of navigation frame. d. Equation to correct satellite timescale to system timescale: L1/L2 $t_{\mathrm{GLONASS}} = t + \tau_n(t_b) - \gamma_n(t_b)\,(t - t_b)$, where $t$ - satellite time; $\tau_n(t_b)$, $\gamma_n(t_b)$ - coefficients of frequency/time correction; $t_b$ - time of locking frequency/time parameters. -
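
The correction in item 4d and the whole-second offset in item 3b of the GLONASS excerpt above can be sketched in Python. This is only a minimal illustration under stated assumptions: the function names and the sample values are hypothetical, all times are plain seconds, and day rollover is ignored.

```python
# Hypothetical helper names; sample values are made up for illustration only.

# Whole-second offset from item 3b: 03 hrs 00 min 00 s.
UTC_SU_OFFSET_S = 10800


def satellite_to_glonass_time(t, tau_n, gamma_n, t_b):
    """Apply t_GLONASS = t + tau_n(t_b) - gamma_n(t_b) * (t - t_b).

    t        satellite time, in seconds
    tau_n    broadcast time correction tau_n(t_b), in seconds
    gamma_n  broadcast frequency correction gamma_n(t_b), dimensionless
    t_b      time of locking the frequency/time parameters, in seconds
    """
    return t + tau_n - gamma_n * (t - t_b)


def glonass_to_utc_su(t_glonass):
    """Invert t_GLONASS = UTC(SU) + 03 hrs 00 min (no day rollover handling)."""
    return t_glonass - UTC_SU_OFFSET_S


if __name__ == "__main__":
    t_sys = satellite_to_glonass_time(t=1900.0, tau_n=1e-6, gamma_n=1e-9, t_b=900.0)
    print(t_sys)
    print(glonass_to_utc_su(12000.0))
```

With both corrections set to zero the function returns the satellite time unchanged, which is a quick sanity check on the sign convention taken from the excerpt.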

Philip Gibbs EVENT-SYMMETRIC SPACE-TIME

Event-Symmetric Space-Time “The universe is made of stories, not of atoms.” Muriel Rukeyser Philip Gibbs EVENT-SYMMETRIC SPACE-TIME WEBURBIA PUBLICATION Cyberspace First published in 1998 by Weburbia Press 27 Stanley Mead Bradley Stoke Bristol BS32 0EF [email protected] http://www.weburbia.com/press/ This address is supplied for legal reasons only. Please do not send book proposals as Weburbia does not have facilities for large scale publication. © 1998 Philip Gibbs [email protected] A catalogue record for this book is available from the British Library. Distributed by Weburbia Tel 01454 617659 Electronic copies of this book are available on the world wide web at http://www.weburbia.com/press/esst.htm Philip Gibbs’s right to be identified as the author of this work has been asserted by him in accordance with the Copyright, Designs and Patents Act 1988. All rights reserved, except that permission is given that this booklet may be reproduced by photocopy or electronic duplication by individuals for personal use only, or by libraries and educational establishments for non-profit purposes only. Mass distribution of copies in any form is prohibited except with the written permission of the author. Printed copies are printed and bound in Great Britain by Weburbia. Dedicated to Alice Contents THE STORYTELLER ................................................................................... 11 Between a story and the world ............................................................. 11 Dreams of Rationalism ....................................................................... -

United States District Court Southern District Of

Case 3:11-cv-01812-JM-JMA Document 31 Filed 02/20/13 Page 1 of 10 UNITED STATES DISTRICT COURT SOUTHERN DISTRICT OF CALIFORNIA In re JIFFY LUBE INTERNATIONAL, INC. TEXT SPAM LITIGATION Case No.: 3:11-MD-2261-JM (JMA) FINAL APPROVAL OF CLASS ACTION AND ORDER OF DISMISSAL WITH PREJUDICE Pending before the Court are Plaintiffs’ Motion for Final Approval of Class Action Settlement (Dkt. 90) and Plaintiffs’ Motion for Approval of Attorneys’ Fees and Expenses and Class Representative Incentive Awards (Dkt. 86) (collectively, the “Motions”). The Court, having reviewed the papers filed in support of the Motions, having heard argument of counsel, and finding good cause appearing therein, hereby GRANTS Plaintiffs’ Motions and it is hereby ORDERED, ADJUDGED, and DECREED THAT: 1. Terms and phrases in this Order shall have the same meaning as ascribed to them in the Parties’ August 1, 2012 Class Action Settlement Agreement, as amended by the First Amendment to Class Action Settlement Agreement as of September 28, 2012 (the “Settlement Agreement”). 2. This Court has jurisdiction over the subject matter of this action and over all Parties to the Action, including all Settlement Class Members. 3. On October 10, 2012, this Court granted Preliminary Approval of the Settlement Agreement and preliminarily certified a settlement class consisting of: All persons or entities in the United States and its Territories who in April 2011 were sent a text message from short codes 72345 or 41411 promoting Jiffy Lube containing language similar to the following: JIFFY LUBE CUSTOMERS 1 TIME OFFER: REPLY Y TO JOIN OUR ECLUB FOR 45% OFF A SIGNATURE SERVICE OIL CHANGE! STOP TO UNSUB MSG&DATA RATES MAY APPLY T&C: JIFFYTOS.COM. -

GPS Operation V1 4.Fm

Operation of the Courtyard CY490 SPG While Connected to the GPS Receiver No part of this document may be transmitted or reproduced in any form or by any means, electronic or mechanical, including photocopy, recording or any information storage and retrieval system, without permission in writing from Courtyard Electronics Limited. Introduction One of the best sources of reference for both frequency and time, is the Global Positioning System. The GPS system only transmits a complete UTC-GPS time message once every 12.5 minutes. So when powering up the GPS receiver it might be best to wait 12.5 minutes before relying on the GPS time. The CY490 SPG uses a 12 channel GPS receiver. As the SPG is powered the GPS receiver begins searching for satellites from which it can get time, frequency and phase information. In a good reception area as many as 12 satellites may be visible. The SPG only requires 3 satellites to be located and fully decoded before it can start using the GPS data. The search for the first 3 satellites can take up to 3 minutes, but is often accomplished in 2. If less than 3 satellites are available, the SPG will not lock to GPS. The number of satellites can be monitored on the first menu and on the remote control program CY490MiniMessage. The CY490MiniMessage gives a graphic view of satellites and provides much information for the user. GPS and television From a GPS receiver, the Master Clock can derive UTC and also add the appropriate time offset and provide corrected local time. -

What Happened Before the Big Bang?

Quarks and the Cosmos ICHEP Public Lecture II Seoul, Korea 10 July 2018 Michael S. Turner Kavli Institute for Cosmological Physics University of Chicago 100 years of General Relativity 90 years of Big Bang 50 years of Hot Big Bang 40 years of Quarks & Cosmos deep connections between the very big & the very small 100 years of QM & atoms 50 years of the “Standard Model” The Universe is very big (billions and billions of everything) and often beyond the reach of our minds and instruments Big ideas and powerful instruments have enabled revolutionary progress a very big idea connections between quarks & the cosmos big telescopes on the ground Hawaii Chile and in space: Hubble, Spitzer, Chandra, and Fermi at the South Pole basics of our Universe • 100 billion galaxies • each lit with the light of 100 billion stars • carried away from each other by expanding space from a • big bang beginning 14 billion yrs ago Hubble (1925): nebulae are “island Universes” Universe comprised of billions of galaxies Hubble Deep Field: one ten millionth of the sky, 10,000 galaxies 100 billion galaxies in the observable Universe Universe is expanding and had a beginning … Hubble, 1929 Signature of big bang beginning Einstein: Big Bang = explosion of space with galaxies carried along The big questions circa 1978 just two numbers: H0 and q0 Allan Sandage, Hubble’s “student” H0: expansion rate (slope age) q0: deceleration (“droopiness” destiny) … tens of astronomers working (alone) to figure it all out Microwave echo of the big bang Hot Big Bang Michael S. Turner -

8253/8253·5 Programmable Interval Timer

TABLE OF CONTENTS HOW TO CONFIGURE YOUR SYSTEM SUPPORT 1 IN UNDER 5 MINUTES, WITHOUT READING THE MANUAL: Other options and jumpers; Important note about system memory. ABOUT SYSTEM SUPPORT: Technical overview. CONFIGURING THE SYSTEM SUPPORT 1: Setting I/O address; Setting memory address; Other memory options; Disabling the memory; Global/extended address selection; Phantom* response options; Battery back-up for CMOS RAM; Wait states; Using higher speed 9511A or 9512; Interrupt jumpers and options; Using a 9511 or 9512 with interrupts; Interval timer options; Configuring the serial channel; Other miscellaneous hardware options; Connecting the battery; Mounting the battery holder; Replacing the battery; I/O port map. PROGRAMMING CONSIDERATIONS FOR THE SYSTEM SUPPORT 1: Power-up initialization; Programming the serial channel; UART initialization; Sample UART program; Programming the real time clock; Clock programming sequence; Sample clock program; Programming the interrupt controllers; Important note about using DDT to debug interrupts; "INTEL 8259A Programmable Interrupt Controller"; Initializing the 8259A; Routine for initializing master/slave 8259As; Disabling the 8259As; Programming the interval timer; "INTEL 8253/8253-5 -

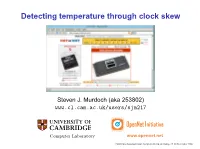

Detecting Temperature Through Clock Skew

Detecting temperature through clock skew Steven J. Murdoch (aka 253802) www.cl.cam.ac.uk/users/sjm217 OpenNet Initiative Computer Laboratory www.opennet.net 23rd Chaos Communication Congress, Berlin, Germany, 27–30 December 2006 This presentation introduces clock skew and how it can introduce security vulnerabilities [Figure: de-noised non-linear offset component (ms) and temperature (°C) against time, Fri 11:00 to Sat 17:00, showing variable skew] Outline: Clock skew, its link to temperature, and measurement; Tor and hidden services; Attacking Tor with clock skew; Other applications of clock skew [Figure: Tor hidden service phases (Service Publication, Connection Setup, Data Transfer) between Hidden Service, Introduction Point (IP), Rendezvous Point (RP), Directory Server, Client, and Measurer] [Figure: Attacker, Tor Network, Hidden Server] Computers have multiple clocks which are constructed from hardware and software components A clock consists of an: • Oscillator, controlled by a crystal, ticks at a nominal frequency • Counter, counts the number of ticks produced by the oscillator On Linux there are several clocks available (other OS are similar): • Jiffy counter: An uncorrected clock used internally to the OS • System clock: A clock corrected by NTP (used by applications) • BIOS clock (also known as CMOS clock): runs even when PC is off and initialises the system clock Computers -

143847-003.Pdf

iSBC® 88/25 SINGLE BOARD COMPUTER • 8-Bit 8088 Microprocessor Operating at 5 MHz • One Megabyte Addressing Range • Two iSBX™ Bus Connectors • Optional Numeric Data Processor with iSBC® 337 MULTIMODULE™ Processor • 4K Bytes of Static RAM; Expandable On-Board to 16K Bytes • Sockets for up to 64K Bytes of JEDEC 24/28-Pin Standard Memory Devices; Expandable On-Board to 128K Bytes • Programmable Synchronous/Asynchronous RS232C Compatible Serial Interface with Software Selectable Baud Rates • 24 Programmable Parallel I/O Lines • Two Programmable 16-Bit BCD or Binary Timers/Event Counters • 9 Levels of Vectored Interrupt Control, Expandable to 65 Levels • MULTIBUS® Interface for Multimaster Configurations and System Expansion • Development Support with Intel's iPDS, Low Cost Personal Development System, and EMV-88 Emulator The iSBC 88/25 Single Board Computer is a member of Intel's complete line of OEM microcomputer systems which take full advantage of Intel's technology to provide economical, self-contained, computer-based solutions for OEM applications. The iSBC 88/25 board is a complete computer system on a single 6.75 x 12.00-in. printed circuit card. The CPU, system clock, read/write memory, nonvolatile read only memory, I/O ports and drivers, serial communications interface, priority interrupt logic and programmable timers, all reside on the board. The large control storage capacity makes the iSBC 88/25 board ideally suited for control-oriented applications such as process control, instrumentation, industrial automation and many others. September 1987 Order Number: 143847-003 iSBC® 88/25 FUNCTIONAL DESCRIPTION ASCII data, and iterative word and byte string manipulation functions.