Basic Thermodynamics

Recommended publications

-

Equilibrium Thermodynamics

Equilibrium Thermodynamics

Instructor:
- Clare Yu (e-mail [email protected], phone: 824-6216)
- office hours: Wed from 2:30 pm to 3:30 pm in Rowland Hall 210E

Textbooks:
- primary: Herbert Callen “Thermodynamics and an Introduction to Thermostatistics”
- secondary: Frederick Reif “Statistical and Thermal Physics”
- secondary: Kittel and Kroemer “Thermal Physics”
- secondary: Enrico Fermi “Thermodynamics”

Grading:
- weekly homework: 25%
- discussion problems: 10%
- midterm exam: 30%
- final exam: 35%

Material Covered: Equilibrium thermodynamics, phase transitions, critical phenomena (roughly the first 10 chapters of Callen’s textbook)

Homework:
- Homework assignments posted on course website

Exams:
- One midterm, 80 minutes, Tuesday, May 8
- Final, 2 hours, Tuesday, June 12, 10:30 am - 12:20 pm
- All exams are in this room 210M RH

Course website is at http://eiffel.ps.uci.edu/cyu/p115B/class.html

The Subject of Thermodynamics

Thermodynamics describes average properties of macroscopic matter in equilibrium.
- Macroscopic matter: large objects that consist of many atoms and molecules.
- Average properties: properties (such as volume, pressure, temperature, etc.) that do not depend on the detailed positions and velocities of the atoms and molecules of macroscopic matter. Such quantities are called thermodynamic coordinates, variables or parameters.
- Equilibrium: state of a macroscopic system in which all average properties do not change with time. (The system is not driven by an external driving force.)

Why Study Thermodynamics?
- Thermodynamics predicts that the average macroscopic properties of a system in equilibrium are not independent of each other. Therefore, if we measure a subset of these properties, we can calculate the rest of them using thermodynamic relations.
- Thermodynamics not only gives the exact description of the state of equilibrium but also provides an approximate description (to a very high degree of precision!) of relatively slow processes.

Thermodynamics of Solar Energy Conversion into Work

Sri Lanka Journal of Physics, Vol. 9 (2008) 47-60 Institute of Physics - Sri Lanka Research Article Thermodynamic investigation of solar energy conversion into work W.T.T. Dayanga and K.A.I.L.W. Gamalath Department of Physics, University of Colombo, Colombo 03, Sri Lanka Abstract Using a simple thermodynamic upper bound efficiency model for the conversion of solar energy into work, the best material for a converter was obtained. Modifying the existing detailed terrestrial application model of direct solar radiation to include an atmospheric transmission coefficient with cloud factors and a maximum concentration ratio, the best shape for a solar concentrator was derived. Using a Carnot engine in detailed space application model, the best shape for the mirror of a concentrator was obtained. A new conversion model was introduced for a solar chimney power plant to obtain the efficiency of the power plant and power output. 1. INTRODUCTION A system that collects and converts solar energy in to mechanical or electrical power is important from various aspects. There are two major types of solar power systems at present, one using photovoltaic cells for direct conversion of solar radiation energy in to electrical energy in combination with electrochemical storage and the other based on thermodynamic cycles. The efficiency of a solar thermal power plant is significantly higher [1] compared to the maximum efficiency of twenty percent of a solar cell. Although the initial cost of a solar thermal power plant is very high, the running cost is lower compared to the other power plants. Therefore most countries tend to build solar thermal power plants. -

31 Jan 2021 Laws of Thermodynamics . L01–1 Review of Thermodynamics. 1

31 Jan 2021, Laws of Thermodynamics, L01–1

Review of Thermodynamics. 1: The Basic Laws

What is Thermodynamics?

• Idea: The study of states of physical systems that can be characterized by macroscopic variables, usually equilibrium states (mechanical, thermal or chemical), and possible transformations between them. It started as a phenomenological subject motivated by practical applications, but it gradually developed into a coherent framework that we will view here as the macroscopic counterpart to the statistical mechanics of the microscopic constituents, and provides the observational context in which to verify many of its predictions.

• Plan: We will mostly be interested in internal states, so the allowed processes will include heat exchanges and the main variables will always include the internal energy and temperature. We will recall the main facts (definitions, laws and relationships) of thermodynamics and discuss physical properties that characterize different substances, rather than practical applications such as properties of specific engines. The connection with statistical mechanics, based on a microscopic model of each system, will be established later.

States and State Variables for a Thermodynamical System

• Energy: The internal energy $E$ is the central quantity in the theory, and is seen as a function of some complete set of variables characterizing each state. Notice that often energy is the only relevant macroscopic conservation law, while momentum, angular momentum or other quantities may not need to be considered.

• Extensive variables: For each system one can choose a set of extensive variables $(S; \vec{X})$ whose values specify the equilibrium states of the system; $S$ is the entropy and the $\vec{X}$ are quantities that may include $V$, $\{N_i\}$, $q$, $\vec{M}$, $\vec{p}$, $\vec{L}$, ..

Lecture 4: 09.16.05 Temperature, Heat, and Entropy

3.012 Fundamentals of Materials Science, Fall 2005

Lecture 4: 09.16.05 Temperature, heat, and entropy

Today:

LAST TIME
- State functions
- Path dependent variables: heat and work

DEFINING TEMPERATURE
- The zeroth law of thermodynamics
- The absolute temperature scale

CONSEQUENCES OF THE RELATION BETWEEN TEMPERATURE, HEAT, AND ENTROPY: HEAT CAPACITY
- The difference between heat and temperature
- Defining heat capacity

Solar Thermal Energy

22 Solar Thermal Energy

Solar thermal energy is an application of solar energy that is very different from photovoltaics. In contrast to photovoltaics, where we used electrodynamics and solid state physics for explaining the underlying principles, solar thermal energy is mainly based on the laws of thermodynamics. In this chapter we give a brief introduction to that field. After introducing some basics in Section 22.1, we will discuss Solar Thermal Heating in Section 22.2 and Concentrated Solar (electric) Power (CSP) in Section 22.3.

22.1 Solar thermal basics

We start this section with the definition of heat, which sometimes also is called thermal energy. The molecules of a body with a temperature different from 0 K exhibit a disordered movement. The kinetic energy of this movement is called heat. The average of this kinetic energy is related linearly to the temperature of the body. Usually, we denote heat with the symbol $Q$. As it is a form of energy, its unit is Joule (J). If two bodies with different temperatures are brought together, heat will flow from the hotter to the cooler body and as a result the cooler body will be heated. Dependent on its physical properties and temperature, this heat can be absorbed in the cooler body in two forms, sensible heat and latent heat. Sensible heat is that form of heat that results in changes in temperature. It is given as

$$Q = m C_p (T_2 - T_1), \qquad (22.1)$$

where $Q$ is the amount of heat that is absorbed by the body, $m$ is its mass, $C_p$ is its heat capacity and $(T_2 - T_1)$ is the temperature difference.
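As a quick numerical illustration of Eq. (22.1), the following minimal sketch evaluates the sensible heat for one set of inputs. The function name `sensible_heat` and the sample values (1 kg of water, with a heat capacity per unit mass of about 4186 J/(kg K)) are assumptions made for this example and do not come from the excerpt.

```python
def sensible_heat(mass_kg, heat_capacity_j_per_kg_k, t1_k, t2_k):
    """Sensible heat Q = m * C_p * (T2 - T1), following Eq. (22.1).

    heat_capacity_j_per_kg_k is taken per unit mass (an assumption of this
    sketch), so the result is in joules.
    """
    return mass_kg * heat_capacity_j_per_kg_k * (t2_k - t1_k)


# Illustrative (assumed) values: 1 kg of water heated from 293.15 K to 353.15 K.
q = sensible_heat(1.0, 4186.0, 293.15, 353.15)
print(f"Q = {q / 1000:.1f} kJ")
```

With these assumed inputs the result is roughly 251 kJ. A negative result would mean that the body gives off heat, because then $T_2 < T_1$.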

The First Law of Thermodynamics for Closed Systems A) the Energy

Chapter 3: The First Law of Thermodynamics for Closed Systems

a) The Energy Equation for Closed Systems

We consider the First Law of Thermodynamics applied to stationary closed systems as a conservation of energy principle. Thus energy is transferred between the system and the surroundings in the form of heat and work, resulting in a change of internal energy of the system. Internal energy change can be considered as a measure of molecular activity associated with change of phase or temperature of the system and the energy equation is represented as follows:

Heat (Q)

Energy transferred across the boundary of a system in the form of heat always results from a difference in temperature between the system and its immediate surroundings. We will not consider the mode of heat transfer, whether by conduction, convection or radiation, thus the quantity of heat transferred during any process will either be specified or evaluated as the unknown of the energy equation. By convention, positive heat is that transferred from the surroundings to the system, resulting in an increase in internal energy of the system.

Work (W)

In this course we consider three modes of work transfer across the boundary of a system, as shown in the following diagram:

In this course we are primarily concerned with Boundary Work due to compression or expansion of a system in a piston-cylinder device as shown above.

Source URL: http://www.ohio.edu/mechanical/thermo/Intro/Chapt.1_6/Chapter3a.html
Saylor URL: http://www.saylor.org/me103#4.1
Attributed to: Israel Urieli

Law of Conservation of Energy

Law of Conservation of Mass: "In any kind of physical or chemical process, mass is neither created nor destroyed - the mass before the process equals the mass after the process." - the total mass of the system does not change; the total mass of the products of a chemical reaction is always the same as the total mass of the original materials. "Physics for scientists and engineers," 4th edition, Vol.1, Raymond A. Serway, Saunders College Publishing, 1996.

Ex. 1) When wood burns, mass seems to disappear because some of the products of reaction are gases; if the mass of the original wood is added to the mass of the oxygen that combined with it and if the mass of the resulting ash is added to the mass of the gaseous products, the two sums will turn out exactly equal. 2) Iron increases in weight on rusting because it combines with gases from the air, and the increase in weight is exactly equal to the weight of gas consumed. Out of thousands of reactions that have been tested with accurate chemical balances, no deviation from the law has ever been found.

Law of Conservation of Energy: The total energy of a closed system is constant. Matter is neither created nor destroyed – total mass of reactants equals total mass of products.

You can calculate the change of temperature simply by using the fact that energy and mass are conserved: if we add the two heat quantities together, we can calculate the change of temperature from the law, or we can measure the change of temperature and show that energy is conserved.

E1 + E2 = E3 -> E(universe) = E(System) + E(Surroundings)
M1 + M2 = M3
Is T1 + T2 = unknown? (No, there is no law of conservation of temperature, so we have to use the concept of conservation of energy.)

The total amount of thermal energy in a beaker of water is taken in absolute terms as opposed to differential terms (the reference point is 0 K).

Knowns: M1, M2, T1, T2 (Kelvin). When we add the two together, we want to know what T3 and M3 are going to be.
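The bookkeeping in these notes (E1 + E2 = E3 and M1 + M2 = M3, with T3 and M3 as the unknowns) can be sketched in a few lines. The sketch assumes that both samples are the same substance with the same constant specific heat c, that thermal energy is counted from 0 K as E = M c T, and that no heat is exchanged with the surroundings. None of these assumptions is stated explicitly in the notes, and the function name `mix` is hypothetical.

```python
def mix(m1, t1, m2, t2):
    """Combine two samples of the same substance and return (m3, t3).

    Assumptions of this sketch (not stated in the notes): equal, constant
    specific heat c; thermal energy measured from 0 K as E = m * c * T; no
    heat lost to the surroundings. Then
        m1*c*t1 + m2*c*t2 = m3*c*t3   (conservation of energy)
        m1 + m2 = m3                  (conservation of mass)
    and c cancels out of the energy balance.
    """
    m3 = m1 + m2
    t3 = (m1 * t1 + m2 * t2) / m3
    return m3, t3


# Illustrative (assumed) values: 2 kg at 280 K mixed with 1 kg at 340 K.
m3, t3 = mix(2.0, 280.0, 1.0, 340.0)
print(m3, t3)  # 3.0 300.0
```

The result is a mass-weighted average of T1 and T2, not their sum, which agrees with the remark that there is no law of conservation of temperature.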

Isobaric Expansion Engines: New Opportunities in Energy Conversion for Heat Engines, Pumps and Compressors

energies Concept Paper Isobaric Expansion Engines: New Opportunities in Energy Conversion for Heat Engines, Pumps and Compressors Maxim Glushenkov 1, Alexander Kronberg 1,*, Torben Knoke 2 and Eugeny Y. Kenig 2,3 1 Encontech B.V. ET/TE, P.O. Box 217, 7500 AE Enschede, The Netherlands; [email protected] 2 Chair of Fluid Process Engineering, Paderborn University, Pohlweg 55, 33098 Paderborn, Germany; [email protected] (T.K.); [email protected] (E.Y.K.) 3 Chair of Thermodynamics and Heat Engines, Gubkin Russian State University of Oil and Gas, Leninsky Prospekt 65, Moscow 119991, Russia * Correspondence: [email protected] or [email protected]; Tel.: +31-53-489-1088 Received: 12 December 2017; Accepted: 4 January 2018; Published: 8 January 2018 Abstract: Isobaric expansion (IE) engines are a very uncommon type of heat-to-mechanical-power converters, radically different from all well-known heat engines. Useful work is extracted during an isobaric expansion process, i.e., without a polytropic gas/vapour expansion accompanied by a pressure decrease typical of state-of-the-art piston engines, turbines, etc. This distinctive feature permits isobaric expansion machines to serve as very simple and inexpensive heat-driven pumps and compressors as well as heat-to-shaft-power converters with desired speed/torque. Commercial application of such machines, however, is scarce, mainly due to a low efficiency. This article aims to revive the long-known concept by proposing important modifications to make IE machines competitive and cost-effective alternatives to state-of-the-art heat conversion technologies. Experimental and theoretical results supporting the isobaric expansion technology are presented and promising potential applications, including emerging power generation methods, are discussed. -

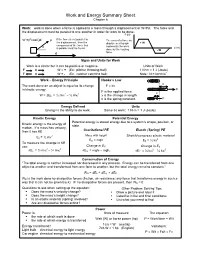

Work and Energy Summary Sheet Chapter 6

Work and Energy Summary Sheet Chapter 6

Work: work is done when a force is applied to a mass through a displacement, or $W = Fd$. The force and the displacement must be parallel to one another in order for work to be done.

$W = (F\cos\theta)\,d$: If the force is not parallel to the displacement, then the component of the force that is parallel must be found.

[Figure: force $F$ (N) vs. displacement $d$ (m) graph with $+W$ and $-W$ regions] The area of a force vs. displacement graph represents the work done by the varying force.

Signs and Units for Work

Work is a scalar but it can be positive or negative.
- $F$ and $d$ in the same direction: $W = +$ (Ex: pitcher throwing ball)
- $F$ and $d$ in opposite directions: $W = -$ (Ex: catcher catching ball)

Units of Work: 1 N•m = 1 J (Joule). Note: N = kg•m/s$^2$

Work – Energy Principle

The work done on an object is equal to its change in kinetic energy.

$W = \Delta E_k = \tfrac{1}{2} m v_f^2 - \tfrac{1}{2} m v_i^2$

Hooke’s Law

$F = kx$, where $F$ is the applied force, $x$ is the change in length, and $k$ is the spring constant.

Energy Defined

Energy is the ability to do work. Units: same as work, 1 N•m = 1 J (Joule)

Kinetic Energy

Kinetic energy is the energy of motion. If a mass has velocity, then it has KE.

$E_k = \tfrac{1}{2} m v^2$

To measure the change in KE use: $\Delta E_k = \tfrac{1}{2} m v_f^2 - \tfrac{1}{2} m v_i^2$

Potential Energy

Potential energy is stored energy due to a system’s shape, position, or state.
- Gravitational PE (mass with height): $E_G = mgh$. Change in $E_G$: $\Delta E_G = mgh_f - mgh_i$
- Elastic (Spring) PE (stretch/compress elastic material): $E_E = \tfrac{1}{2} k x^2$. Change in $E_E$: $\Delta E_E = \tfrac{1}{2} k x_f^2 - \tfrac{1}{2} k x_i^2$

Conservation of Energy

“The total energy is neither increased nor decreased in any process.
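The formulas on this sheet can be tried out numerically. The sketch below is a minimal illustration, assuming SI units throughout; the function names (`work`, `final_speed`, `spring_energy`) and the sample numbers are hypothetical and chosen only for this example.

```python
import math


def work(force_n, displacement_m, angle_deg=0.0):
    """W = (F cos(theta)) d, with theta the angle between force and displacement."""
    return force_n * math.cos(math.radians(angle_deg)) * displacement_m


def final_speed(mass_kg, v_initial, work_j):
    """Solve the work-energy principle W = 1/2 m vf^2 - 1/2 m vi^2 for vf."""
    ke_final = 0.5 * mass_kg * v_initial**2 + work_j
    if ke_final < 0:
        raise ValueError("work removes more kinetic energy than the object has")
    return math.sqrt(2.0 * ke_final / mass_kg)


def spring_energy(k_n_per_m, x_m):
    """Elastic potential energy E_E = 1/2 k x^2."""
    return 0.5 * k_n_per_m * x_m**2


# Illustrative (assumed) values: a 10 N force acting along a 2 m displacement
# on a 2 kg mass that starts at rest.
w = work(10.0, 2.0)            # force parallel to displacement
v = final_speed(2.0, 0.0, w)   # speed after the work is done
print(f"W = {w:.1f} J, v_f = {v:.2f} m/s")
```

Passing `angle_deg=180.0` gives negative work, which matches the catcher-catching-ball example on the sheet.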

Demonstration of a Persistent Current in Superfluid Atomic Gas

Demonstration of a persistent current in superfluid atomic gas One of the most remarkable properties of macroscopic quantum systems is the phenomenon of persistent flow. Current in a loop of superconducting wire will flow essentially forever. In a neutral superfluid, like liquid helium below the lambda point, persistent flow is observed as frictionless circulation in a hollow toroidal container. A Bose-Einstein condensate (BEC) of an atomic gas also exhibits superfluid behavior. While a number of experiments have confirmed superfluidity in an atomic gas BEC, persistent flow, which is regarded as the “gold standard” of superfluidity, had not been observed. The main reason for this is that persistent flow is most easily observed in a topology such as a ring or toroid, while past BEC experiments were primarily performed in spheroidal traps. Using a combination of magnetic and optical fields, we were able to create an atomic BEC in a toroidal trap, with the condensate filling the entire ring. Once the BEC was formed in the toroidal trap, we coherently transferred orbital angular momentum of light to the atoms (using a technique we had previously demonstrated) to get them to circulate in the trap. We observed the flow of atoms to persist for a time more than twenty times what was observed for the atoms confined in a spheroidal trap. The flow was observed to persist even when there was a large (80%) thermal fraction present in the toroidal trap. These experiments open the possibility of investigations of the fundamental role of flow in superfluidity and of realizing the atomic equivalent of superconducting circuits and devices such as SQUIDs.

Novel Hot Air Engine and Its Mathematical Model – Experimental Measurements and Numerical Analysis

POLLACK PERIODICA An International Journal for Engineering and Information Sciences DOI: 10.1556/606.2019.14.1.5 Vol. 14, No. 1, pp. 47–58 (2019) www.akademiai.com NOVEL HOT AIR ENGINE AND ITS MATHEMATICAL MODEL – EXPERIMENTAL MEASUREMENTS AND NUMERICAL ANALYSIS 1 Gyula KRAMER, 2 Gabor SZEPESI *, 3 Zoltán SIMÉNFALVI 1,2,3 Department of Chemical Machinery, Institute of Energy and Chemical Machinery University of Miskolc, Miskolc-Egyetemváros 3515, Hungary e-mail: [email protected], [email protected], [email protected] Received 11 December 2017; accepted 25 June 2018 Abstract: In the relevant literature there are many types of heat engines. One of those is the group of the so called hot air engines. This paper introduces their world, also introduces the new kind of machine that was developed and built at Department of Chemical Machinery, Institute of Energy and Chemical Machinery, University of Miskolc. Emphasizing the novelty of construction and the working principle are explained. Also the mathematical model of this new engine was prepared and compared to the real model of engine. Keywords: Hot, Air, Engine, Mathematical model 1. Introduction There are three types of volumetric heat engines: the internal combustion engines; steam engines; and hot air engines. The first one is well known, because it is on zenith nowadays. The steam machines are also well known, because their time has just passed, even the elder ones could see those in use. But the hot air engines are forgotten. Our aim is to consider that one. The history of hot air engines is 200 years old. -

Nonequilibrium Thermodynamics and Scale Invariance

Article Nonequilibrium Thermodynamics and Scale Invariance Leonid M. Martyushev 1,2,* and Vladimir Celezneff 3 1 Technical Physics Department, Ural Federal University, 19 Mira St., Ekaterinburg 620002, Russia 2 Institute of Industrial Ecology, Russian Academy of Sciences, 20 S. Kovalevskaya St., Ekaterinburg 620219, Russia 3 The Racah Institute of Physics, Hebrew University of Jerusalem, Jerusalem 91904, Israel; [email protected] * Correspondence: [email protected]; Tel.: +7-92-222-77-425 Academic Editors: Milivoje M. Kostic, Miguel Rubi and Kevin H. Knuth Received: 30 January 2017; Accepted: 14 March 2017; Published: 16 March 2017 Abstract: A variant of continuous nonequilibrium thermodynamic theory based on the postulate of the scale invariance of the local relation between generalized fluxes and forces is proposed here. This single postulate replaces the assumptions on local equilibrium and on the known relation between thermodynamic fluxes and forces, which are widely used in classical nonequilibrium thermodynamics. It is shown here that such a modification not only makes it possible to deductively obtain the main results of classical linear nonequilibrium thermodynamics, but also provides evidence for a number of statements for a nonlinear case (the maximum entropy production principle, the macroscopic reversibility principle, and generalized reciprocity relations) that are under discussion in the literature. Keywords: dissipation function; nonequilibrium thermodynamics; generalized fluxes and forces 1. Introduction Classical nonequilibrium thermodynamics is an important field of modern physics that was developed for more than a century [1–5]. It is fundamentally based on thermodynamics and statistical physics (including kinetic theory and theory of random processes) and is widely applied in biophysics, geophysics, chemistry, economics, etc.