Mimicking Human Player Strategies in Fighting Games Using Game Artificial Intelligence Techniques

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Persistent Realtime Building Interior Generation

Persistent Realtime Building Interior Generation By Evan Hahn A thesis submitted to the Faculty of Graduate Studies and Research in partial fulfilment of the requirements for the degree of Master of Computer Science Ottawa-Carleton Institute for Computer Science School of Computer Science Carleton University Ottawa, Ontario September 2006 © Copyright 2006, Evan Hahn Reproduced with permission of the copyright owner. Further reproduction prohibited without permission. Library and Archives Canada, Published Heritage Branch, 395 Wellington Street, Ottawa ON K1A 0N4, Canada. ISBN: 978-0-494-18353-3 NOTICE: The author has granted a non-exclusive license allowing Library and Archives Canada to reproduce, publish, archive, preserve, conserve, communicate to the public by telecommunication or on the Internet, loan, distribute and sell theses worldwide, for commercial or non-commercial purposes, in microform, paper, electronic and/or any other formats. The author retains copyright ownership and moral rights in this thesis. Neither the thesis nor substantial extracts from it may be printed or otherwise reproduced without the author's permission. -

UPC Platform Publisher Title Price Available 730865001347

| UPC | Platform | Publisher | Title | Price | Available |
|---|---|---|---|---|---|
| 730865001347 | PlayStation 3 | Atlus | 3D Dot Game Heroes PS3 | $16.00 | 52 |
| 722674110402 | PlayStation 3 | Namco Bandai | Ace Combat: Assault Horizon PS3 | $21.00 | 2 |
| 853490002678 | PlayStation 3 | Other Publishers | Air Conflicts: Secret Wars PS3 | $14.00 | 37 |
| 014633098587 | PlayStation 3 | Electronic Arts | Alice: Madness Returns PS3 | $16.50 | 60 |
| 010086690682 | PlayStation 3 | Sega | Aliens Colonial Marines (Portuguese) PS3 | $47.50 | 100+ |
| 010086690675 | PlayStation 3 | Sega | Aliens Colonial Marines (Spanish) PS3 | $47.50 | 100+ |
| 010086690637 | PlayStation 3 | Sega | Aliens Colonial Marines Collector's Edition PS3 | $76.00 | 9 |
| 010086690170 | PlayStation 3 | Sega | Aliens Colonial Marines PS3 | $50.00 | 92 |
| 010086690194 | PlayStation 3 | Sega | Alpha Protocol PS3 | $14.00 | 14 |
| 047875843479 | PlayStation 3 | Activision | Amazing Spider-Man PS3 | $39.00 | 100+ |
| 010086690545 | PlayStation 3 | Sega | Anarchy Reigns PS3 | $24.00 | 100+ |
| 722674110525 | PlayStation 3 | Namco Bandai | Armored Core V PS3 | $23.00 | 100+ |
| 014633157147 | PlayStation 3 | Electronic Arts | Army of Two: The 40th Day PS3 | $16.00 | 61 |
| 008888345343 | PlayStation 3 | Ubisoft | Assassin's Creed II PS3 | $15.00 | 100+ |
| 008888397717 | PlayStation 3 | Ubisoft | Assassin's Creed III Limited Edition PS3 | $116.00 | 4 |
| 008888347231 | PlayStation 3 | Ubisoft | Assassin's Creed III PS3 | $47.50 | 100+ |
| 008888343394 | PlayStation 3 | Ubisoft | Assassin's Creed PS3 | $14.00 | 100+ |
| 008888346258 | PlayStation 3 | Ubisoft | Assassin's Creed: Brotherhood PS3 | $16.00 | 100+ |
| 008888356844 | PlayStation 3 | Ubisoft | Assassin's Creed: Revelations PS3 | $22.50 | 100+ |
| 013388340446 | PlayStation 3 | Capcom | Asura's Wrath PS3 | $16.00 | 55 |

008888345435 -

Toshinenden Pc Download Battle Arena Toshinden

toshinenden pc download Battle Arena Toshinden. While lacking the depth and complexity of the best fighters, like Street Fighter IV, Battle Arena Toshinden makes for an enjoyably action-packed entry into the genre. The game was one of the first to introduce 3D to this style of beat 'em up, standing alongside Virtua Fighter, and it scores some points simply by being well done and by offering fighting fans what they want. There is the barest element of narrative, which describes how the various characters have all been invited to a mysterious tournament, each with their own motivations in their quest for victory, but it's all pretty insubstantial stuff and can easily be ignored. The fighting itself, however, is much more interesting. There are eight characters initially playable, with more unlockable, which provide the usual variety of fighting styles to try out. One of the game's interesting additions is weapons, similar to later games like Soul Edge, which gives it an extra degree of strategy. Apart from this, Battle Arena is a pretty standard fighter, simply requiring players to engage in a series of bouts, making use of a variety of moves to knock merry hell out of their opponents. The desperation move is a curious element, a special attack that can only be used when you're down to your last health, and it helps to give the game a little personality. Visually, this stands up a little better than Virtua Fighter, as it is less stylised, and the sprites and environments are chunky and well detailed, with a good sense of fluid movement. -

Download the Catalog

WE SERVE BUSINESSES OF ALL SIZES. WHO WE ARE: We are an ambitious laser tag design and manufacturing company with a passion for changing the way people EXPERIENCE LIVE-COMBAT GAMING. We PROVIDE MANUFACTURING and support to businesses both big and small, around the world! With fresh thinking and INNOVATIVE GAMING concepts, our reputation has made us a leader in the live-action gaming space. One of the hallmarks of our approach to DESIGN & manufacturing is equipment versatility. Whether operating an indoor arena, outdoor battleground, mobile business, or a special entertainment attraction, our equipment can be CUSTOMIZED TO FIT your needs. Battle Company systems will expand your income opportunities and offer possibilities where the rest of the industry can only provide limitations. OUR PRODUCTS HAVE THE HIGHEST REPLAY VALUE IN THE LASER TAG INDUSTRY. Our products are DESIGNED AND TESTED at our 13-acre property headquarters. We’ve put the equipment into action in our 5,000 square-foot indoor laser tag facility as well as our newly constructed outdoor battlefield. This is to ensure the highest level of design quality across the different types of environments where laser tag is played. Using Agile development methodology and manufacturing that is ISO 9001 CERTIFIED, we are the fastest manufacturer in the industry when it comes to bringing new products and software to market. Battle Company equipment has a strong competitive advantage over other brands and our COMMITMENT TO R&D is the reason why we are leading the evolution of the laser tag industry! 3 OUT OF 4 PLAYERS WHO USE BATTLE COMPANY EQUIPMENT RETURN TO PLAY AGAIN! BUSINESSES THAT CHOOSE US TO POWER THEIR EXPERIENCE. WE SERVE THE MILITARY. BIG ATTRACTION, SMALL FOOTPRINT: Have a 10ft x 12.5ft space not making your facility much money? Remove the clutter and fill that area with the Battle Cage. -

Fighting Games, Performativity, and Social Game Play a Dissertation

The Art of War: Fighting Games, Performativity, and Social Game Play A dissertation presented to the faculty of the Scripps College of Communication of Ohio University In partial fulfillment of the requirements for the degree Doctor of Philosophy Todd L. Harper November 2010 © 2010 Todd L. Harper. All Rights Reserved. This dissertation titled The Art of War: Fighting Games, Performativity, and Social Game Play by TODD L. HARPER has been approved for the School of Media Arts and Studies and the Scripps College of Communication by Mia L. Consalvo Associate Professor of Media Arts and Studies Gregory J. Shepherd Dean, Scripps College of Communication ABSTRACT HARPER, TODD L., Ph.D., November 2010, Mass Communications The Art of War: Fighting Games, Performativity, and Social Game Play (244 pp.) Director of Dissertation: Mia L. Consalvo This dissertation draws on feminist theory – specifically, performance and performativity – to explore how digital game players construct the game experience and social play. Scholarship in game studies has established the formal aspects of a game as being a combination of its rules and the fiction or narrative that contextualizes those rules. The question remains, how do the ways people play games influence what makes up a game, and how those players understand themselves as players and as social actors through the gaming experience? Taking a qualitative approach, this study explored players of fighting games: competitive games of one-on-one combat. Specifically, it combined observations at the Evolution fighting game tournament in July 2009 and in-depth interviews with fighting game enthusiasts. In addition, three groups of college students with varying histories and experiences with games were observed playing both competitive and cooperative games together. -

DeepCrawl: Deep Reinforcement Learning for Turn-Based Strategy Games

DeepCrawl: Deep Reinforcement Learning for Turn-based Strategy Games Alessandro Sestini,¹ Alexander Kuhnle,² Andrew D. Bagdanov¹ ¹Dipartimento di Ingegneria dell’Informazione, Università degli Studi di Firenze, Florence, Italy ²Department of Computer Science and Technology, University of Cambridge, United Kingdom {alessandro.sestini, andrew.bagdanov}@unifi.it

Abstract: In this paper we introduce DeepCrawl, a fully-playable Roguelike prototype for iOS and Android in which all agents are controlled by policy networks trained using Deep Reinforcement Learning (DRL). Our aim is to understand whether recent advances in DRL can be used to develop convincing behavioral models for non-player characters in videogames. We begin with an analysis of requirements that such an AI system should satisfy in order to be practically applicable in video game development, and identify the elements of the DRL model used in the DeepCrawl prototype. The successes and limitations of DeepCrawl are documented through a series of playability tests performed on the final game.

[...] super-human skills able to solve a variety of environments. However, the main objective of DRL of this type is training agents to surpass human players in competitive play in classical games like Go (Silver et al. 2016) and video games like DOTA 2 (OpenAI 2019). The resulting agents, however, clearly run the risk of being too strong, of exhibiting artificial behavior, and in the end not being a fun gameplay element. Video games have become an integral part of our entertainment experience, and our goal in this work is to demonstrate that DRL techniques can be used as an effective game design tool for learning compelling and convincing NPC behaviors -

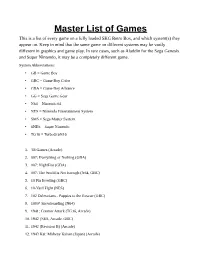

Master List of Games This Is a List of Every Game on a Fully Loaded SKG Retro Box, and Which System(S) They Appear On

Master List of Games

This is a list of every game on a fully loaded SKG Retro Box, and which system(s) they appear on. Keep in mind that the same game on different systems may be vastly different in graphics and game play. In rare cases, such as Aladdin for the Sega Genesis and Super Nintendo, it may be a completely different game.

System Abbreviations:

• GB = Game Boy
• GBC = Game Boy Color
• GBA = Game Boy Advance
• GG = Sega Game Gear
• N64 = Nintendo 64
• NES = Nintendo Entertainment System
• SMS = Sega Master System
• SNES = Super Nintendo
• TG16 = TurboGrafx16

1. '88 Games (Arcade)
2. 007: Everything or Nothing (GBA)
3. 007: NightFire (GBA)
4. 007: The World Is Not Enough (N64, GBC)
5. 10 Pin Bowling (GBC)
6. 10-Yard Fight (NES)
7. 102 Dalmatians - Puppies to the Rescue (GBC)
8. 1080° Snowboarding (N64)
9. 1941: Counter Attack (TG16, Arcade)
10. 1942 (NES, Arcade, GBC)
11. 1942 (Revision B) (Arcade)
12. 1943 Kai: Midway Kaisen (Japan) (Arcade)
13. 1943: Kai (TG16)
14. 1943: The Battle of Midway (NES, Arcade)
15. 1944: The Loop Master (Arcade)
16. 1999: Hore, Mitakotoka! Seikimatsu (NES)
17. 19XX: The War Against Destiny (Arcade)
18. 2 on 2 Open Ice Challenge (Arcade)
19. 2010: The Graphic Action Game (Colecovision)
20. 2020 Super Baseball (SNES, Arcade)
21. 21-Emon (TG16)
22. 3 Choume no Tama: Tama and Friends: 3 Choume Obake Panic!! (GB)
23. 3 Count Bout (Arcade)
24. 3 Ninjas Kick Back (SNES, Genesis, Sega CD)
25. 3-D Tic-Tac-Toe (Atari 2600)
26. 3-D Ultra Pinball: Thrillride (GBC)
27. -

BAM5 Wrap Up: Five Years of Salt, Sweat and the Return of the Bomb

BAM5 Wrap Up: Five years of salt, sweat and the return of the Bomb. 1 year ago, by Chee Seng Siow. “CAN YOU DO THE OVERHEAD?” roars the crowd at Daichi Akahoshi as he completes his disgusting Vergil comeback over his training partner ToXy. It is the Sunday of the BAM5 (Battle Arena Melbourne) weekend, it is finals day and it is MAHVEL, baby. Sitting in the crowd one can feel overwhelmed by the level of sound in the room; you have gasps, cheers, jeers, tribal chants and even pleading. It is loud and it is hype. Daichi is notorious in Melbourne for looking away from the screen in-match to jive with the audience. He once famously dropped a combo when indulging in said showmanship, only to spin back and win the match with an oh-so-dirty Vergil swords instant overhead. -

Inside the Video Game Industry

Inside the Video Game Industry: Game Developers Talk About the Business of Play Judd Ethan Ruggill, Ken S. McAllister, Randy Nichols, and Ryan Kaufman First published by Routledge Third Avenue, New York, NY and by Routledge Park Square, Milton Park, Abingdon, Oxon OX RN Routledge is an imprint of the Taylor & Francis Group, an Informa business © Taylor & Francis The right of Judd Ethan Ruggill, Ken S. McAllister, Randy Nichols, and Ryan Kaufman to be identified as authors of this work has been asserted by them in accordance with sections and of the Copyright, Designs and Patents Act . All rights reserved. No part of this book may be reprinted or reproduced or utilised in any form or by any electronic, mechanical, or other means, now known or hereafter invented, including photocopying and recording, or in any information storage or retrieval system, without permission in writing from the publishers. Trademark notice: Product or corporate names may be trademarks or registered trademarks, and are used only for identification and explanation without intent to infringe. Library of Congress Cataloging in Publication Data Names: Ruggill, Judd Ethan, editor. | McAllister, Ken S., – editor. | Nichols, Randall K., editor. | Kaufman, Ryan, editor. Title: Inside the video game industry : game developers talk about the business of play / edited by Judd Ethan Ruggill, Ken S. McAllister, Randy Nichols, and Ryan Kaufman. Description: New York : Routledge is an imprint of the Taylor & Francis Group, an Informa Business, [] | Includes index. Identifiers: LCCN | ISBN (hardback) | ISBN (pbk.) | ISBN (ebk) Subjects: LCSH: Video games industry. -

Defend the City

COMPUTER GAMES LABORATORY DOCUMENTATION Defend the City By ToBeUmbenannt Prepared by: Josef Stumpfegger, Sergey Mitchenko, Winfried Baumann. Last updated: 11/01/2020.

TABLE OF CONTENTS: GAME IDEA PROPOSAL; GAME DESCRIPTION; TECHNICAL ACHIEVEMENT; "BIG IDEA" BULLSEYE; SCHEDULE & TASKS; LAYERED TASK BREAKDOWN; TIMELINE; ASSESSMENT; PROTOTYPE REPORT; PROTOTYPING GOALS; MODELED GAME; GENERAL RULES; ACTORS; ENEMY BEHAVIOR; BUILDINGS AND THEIR PURPOSE; TRAPS; LEVEL DESIGN; WHAT WE’VE LEARNED; INFLUENCES ON THE GAME; INTERIM REPORT; PROGRESS REPORT; CHARACTER; TRAPS; AI; DESTRUCTIBLE ENVIRONMENT; LEVEL DESIGN; GENERAL GAMEPLAY; CHALLENGES AND PROBLEMS; TODOS FOR NEXT MILESTONE; ALPHA RELEASE REPORT; PROGRESS REPORT; TRAPS; UI; AI; DESTRUCTIBLE ENVIRONMENT; CHALLENGES AND PROBLEMS; TODOS FOR NEXT MILESTONE; PLAYTESTING; CHANGES BEFORE PLAYTESTING; PLAYTESTING REPORT; SETTING; QUESTIONS; OBSERVATIONS; RESULTS AND CHANGES; ADDITIONAL FEEDBACK; CONCLUSION; THE FINAL PRODUCT; EXPERIENCE; COURSE PERSONAL IMPRESSION

Game Idea Proposal

Game Description: Our game takes place in a medieval setting combined with some fantasy aspects. You control, in standard third-person fashion, a hero whose task is to protect the kingdom from a series of attacks by bloodthirsty creatures on different cities. The attack is continuous, but at times it eases off enough that the soldiers of the city can defend on their own while you prepare additional defenses to assist you when the attacks intensify again. -

Episode 289 – Talking Sammo Hung | whistlekickMartialArtsRadio.com

Episode 289 – Talking Sammo Hung | whistlekickMartialArtsRadio.com Jeremy Lesniak: Hey there, thanks for tuning in. Welcome, this is whistlekick martial arts radio and today we're going to talk all about the man that I believe is the most underrated martial arts actor of today, possibly of all time, Sammo Hung. If you're new to the show you may not know my voice. I'm Jeremy Lesniak, I'm the founder of whistlekick. We make apparel and sparring gear and training aids and we produce things like this show. I wanna thank you for stopping by. If you want to check out the show notes for this or any of the other episodes we've done, you can find those over at whistlekickmartialartsradio.com. You'll find our products at whistlekick.com or on Amazon or maybe, if you're one of the lucky ones, at your martial arts school, because we do offer wholesale accounts. Thank you to everyone who has supported us through purchases, and even if you aren’t wearing a whistlekick shirt or something like that right now, thank you for taking the time out of your day to listen to this episode. As I said, here on today's episode, we're talking about one of the most respected martial arts actors still working today, a man who has been active in the film industry for almost 60 years. None other than Sammo Hung. Also known as Hung Kam-bo. I'll admit my pronunciation is not always the best; I'm doing what I can. Hung originates from Hong Kong, where he is known not only as an actor but also as an action choreographer, producer, and director. -

Newsletter 15/07 DIGITAL EDITION Nr

ISSN 1610-2606 newsletter 15/07 DIGITAL EDITION No. 212 - September 2007 Michael J. Fox Christopher Lloyd LASER HOTLINE - Owner: Dipl.-Ing. (FH) Wolfram Hannemann, MBKS - Talstr. 3 - 70825 Korntal Phone: 0711-832188 - Fax: 0711-8380518 - E-Mail: [email protected] - Web: www.laserhotline.de

Editorial

Hello Laserdisc and DVD fans, dear film friends! There are days when you wish you had been born with at least five arms and more than just two halves of a brain, because that would surely make the daily work considerably easier. As an enthusiastic film fanatic, one has of course long suspected that somewhere in the world, in a small, completely inconspicuous laboratory in the middle of a desert landscape, experiments are already being carried out on genetically manipulated humans who [...]

[...] and Japanese DVDs, then you can already look forward to issues 213 and 214. We will publish these as soon as possible. Unfortunately, entering new titles into our database takes much more time for German DVDs in particular than for overseas releases. And you can hardly imagine how much has accumulated since the start of our summer break! It is clearly noticeable that we are already moving toward the autumn and winter business.

After the film festivals already completed this year, "Widescreen Weekend" (Bradford), "Bollywood and Beyond" (Stuttgart) and "Fantasy Filmfest" (Stuttgart), the fourth highlight of the festival calendar is coming up on the first weekend of October. For the third time now, the Schauburg in Karlsruhe is inviting visitors to the Baden capital for the "Todd-AO Filmfestival". This year's program has just been officially announced, and we want to [...]