Techniques for Processing TCP/IP Flow Content in Network Switches at Gigabit Line Rates

Total Pages: 16

File Type: pdf, Size: 1020Kb


Recommended publications


A Postgresql Development Environment

A PostgreSQL development environment. Peter Eisentraut, [email protected], @petereisentraut. The plan: tooling, building, testing, developing, documenting, maintaining. Tooling: Git commands. Useful commands: git add (-N, -p), git am, git apply, git bisect, git blame -w, git branch (--contains, -d, -D, --list, -m, -r), git checkout (file, branch, -b), git cherry-pick, git clean (-f, -d, -x, -n), git commit (--amend, --reset, --fixup, -a), git diff, git ls-files, git format-patch, git grep (-i, -w, -W), git log (-S, --grep), git merge, git pull, git push (-n, -f, -u), git rebase, git reset (--hard), git show, git status, git stash, git tag. Tooling: Git configuration: [diff] colorMoved = true colorMovedWS = allow-indentation-change [log] date = local [merge] conflictStyle = diff3 [rebase] autosquash = true [stash] showPatch = true [tag] sort = version:refname [versionsort] suffix = "_BETA" suffix = "_RC". Tooling: Git aliases: [alias] check-whitespace = \ !git diff-tree --check $(git hash-object -t tree /dev/null) HEAD ${1:-$GIT_PREFIX} find = !git ls-files "*/$1" gtags = !git ls-files | gtags -f - st = status tags = tag -l wdiff = diff --color-words=[[:alnum:]]+ wshow = show --color-words. Tooling: Git shell integration: zsh vcs_info. Tooling: Editor: custom settings for PostgreSQL sources exist. Advanced options: whitespace checking, automatic spell checking (flyspell), automatic building (flycheck, flymake), symbol lookup ("tags"). Tooling: Shell settings: https://superuser.com/questions/521657/zsh-automatically-set-environment-variables-for-a-directory zstyle ':chpwd:profiles:/*/*/devel/postgresql(|/|/*)' profile postgresql chpwd_profile_postgresql() { chpwd_profile_default export EMAIL="[email protected]" alias nano='nano -T4' LESS="$LESS -x4" } Tooling: Summary. Your four friends: vcs, editor, shell, terminal. Building: How to run configure: ./configure -C/--config-cache --prefix=$(cd . -

Lecture 7 Network Management and Debugging

SYSTEM ADMINISTRATION MTAT.08.021 LECTURE 7: NETWORK MANAGEMENT AND DEBUGGING. Prepared by: Amnir Hadachi and Artjom Lind, University of Tartu, Institute of Computer Science, [email protected] / [email protected]. LECTURE 7: NETWORK MGT AND DEBUGGING. OUTLINE: 1. Intro 2. Network troubleshooting 3. Ping 4. SmokePing 5. Traceroute 6. Network statistics 7. Inspection of live interface activity 8. Packet sniffers 9. Network management protocols 10. Network mapper. 1. INTRO. Quote: Networks tend to increase the number of interdependencies among machines; therefore, they tend to magnify problems. • Network management tasks: ✴ Fault detection for networks, gateways, and critical servers ✴ Schemes for notifying an administrator of problems ✴ General network monitoring, to balance load and plan expansion ✴ Documentation and visualization of the network ✴ Administration of network devices from a central site. [Figure: illustration of network growth vs. automation of management procedures; axes: network size, management procedures.] • Network: subnets + routers/switches (source: http://www.eventhelix.com/RealtimeMantra/Networking/ip_routing.htm#.VvjkA2MQhIY) • Time to consider automating management tasks: shell scripting, network management station. 2. NETWORK TROUBLESHOOTING • Many tools are available for debugging • Debugging: low-level (e.g. TCP/IP layer), high-level (e.g. DNS, NFS, and HTTP) • This section progresses from general troubleshooting strategy to essential tools: ping, traceroute, netstat, nmap, tcpdump, … • Principles to consider before acting: ✴ Make one change at a time ✴ Document the situation as it was before you got involved. -

Version 7.8-Systemd

Linux From Scratch, Version 7.8-systemd. Created by Gerard Beekmans, edited by Douglas R. Reno. Copyright © 1999-2015, Gerard Beekmans. All rights reserved. This book is licensed under a Creative Commons License. Computer instructions may be extracted from the book under the MIT License. Linux® is a registered trademark of Linus Torvalds. Table of Contents: Preface: i. Foreword; ii. Audience; iii. LFS Target Architectures; iv. LFS and Standards; v. Rationale for Packages in the Book; vi. Prerequisites -

Software Release Notes for Lucent Omc-H Release

OMC-H 7.3 Release Notes. Software Release Notes for Lucent OMC-H Release 7.3 (in support of USDS 6.0 SU3, USDS 6.0). File: 06.cust_srn_omch_7.3.1.5, Page 1 of 17, Issue 1.0, dated 2007-01-12. Alcatel-Lucent Proprietary. Baselined. Table of Contents: 1 Introduction: 1.1 Release Information; 1.2 Release Notes Overview. 2 System Requirements: 2.1 Hardware Requirements; 2.2 Software Requirements; 2.3 Pre-Installation Requirements. 3 Release Description: 3.1 New Features; 3.2 Enhancements -

Oracle Solaris 11 Implementation and Operations Procedure Guide

Oracle Solaris 11 Implementation and Operations Procedure Guide December 2016 Edition 1.0 Fujitsu Limited Let's Use Oracle Solaris 11 (Build and Operations Procedure Guide) About This Document Purpose - This document presents procedures for building and operating Oracle Solaris 11. To use this procedure guide effectively, read it together with the Oracle Solaris 11 Implementation and Operations Guide , a separate volume. Oracle Solaris 11 Implementation and Operations Guide . http://www.fujitsu.com/global/products/computing/servers/unix/sparc/downloads/documents/ Notes - This document presents procedures that use Oracle Solaris 11.3. - There may be differences from the log values written in this procedure guide, depending on the environment. - "Oracle Solaris" may be abbreviated to "Solaris". - Fujitsu M10 is sold as SPARC M10 Systems by Fujitsu in Japan. Fujitsu M10 and SPARC M10 Systems are identical products. Positioning of documents Oracle Solaris 11 Implementation and Operations Guide Oracle Solaris 11 Overview and Design Guide Oracle Solaris 11 Implementation and Operations Procedure Guide Design Install Operate Terms of Use Copyrights, trademarks, and other intellectual property rights - The contents (text, images, audio, etc.) are protected by copyrights, trademarks, and other intellectual property rights. The contents can be printed or downloaded for personal use only. The contents may not be otherwise used (reused on a personal webpage, uploaded to another server, etc.) without the prior permission of Fujitsu or the rights holder. Limitation of warranties - Fujitsu does not warrant the accuracy, merchantability, fitness of use, etc. of the contents. Fujitsu assumes no liability for any damages arising from use of the contents. The contents may be modified or deleted without any prior notice. -

Unix (And Linux)

Contents: AWK, BC, CHGRP, CHMOD, CHOWN, CP, CRON, CSH, CUT, DATE, DF, DIFF -

GNU Coreutils Cheat Sheet (V1.00) Created by Peteris Krumins ([email protected], -- Good Coders Code, Great Coders Reuse)

GNU Coreutils Cheat Sheet (v1.00). Created by Peteris Krumins ([email protected], www.catonmat.net -- good coders code, great coders reuse). Utility: Description. arch: Print machine hardware name; nproc: Print the number of processors; base64: Base64 encode/decode strings or files; od: Dump files in octal and other formats; basename: Strip directory and suffix from file names; paste: Merge lines of files; cat: Concatenate files and print on the standard output; pathchk: Check whether file names are valid or portable; chcon: Change SELinux context of file; pinky: Lightweight finger; chgrp: Change group ownership of files; pr: Convert text files for printing; chmod: Change permission modes of files; printenv: Print all or part of environment; chown: Change user and group ownership of files; printf: Format and print data; chroot: Run command or shell with special root directory; ptx: Permuted index for GNU, with keywords in their context; cksum: Print CRC checksum and byte counts; pwd: Print current directory; comm: Compare two sorted files line by line; readlink: Display value of a symbolic link; cp: Copy files; realpath: Print the resolved file name; csplit: Split a file into context-determined pieces; rm: Delete files; cut: Remove parts of lines of files; rmdir: Remove directories; date: Print or set the system date and time; runcon: Run command with specified security context; dd: Convert a file while copying it; seq: Print sequence of numbers to standard output; df: Summarize free disk space; setuidgid: Run a command with the UID and GID of a specified user; dir: Briefly list directory -

07 07 Unixintropart2 Lucio Week 3

Unix Basics: Command line tools. Daniel Lucio. Introduction to Unix. Overview • Where to use it? • Command syntax • What are commands? • Where to get help? • Standard streams (stdin, stdout, stderr) • Pipelines (power of combining commands) • Redirection • More information. Where to use it? • Log in to a Unix system like 'kraken' or any other NICS/UT/XSEDE resource. • Download and boot from a Linux LiveCD either from a CD/DVD or USB drive. • http://www.puppylinux.com/ • http://www.knopper.net/knoppix/index-en.html • http://www.ubuntu.com/ • Install Cygwin: a collection of tools which provide a Linux look-and-feel environment for Windows. • http://cygwin.com/index.html • https://newton.utk.edu/bin/view/Main/Workshop0InstallingCygwin • Online terminal emulator • http://bellard.org/jslinux/ • http://cb.vu/ • http://simpleshell.com/ Command syntax: $ command [<options>] [<file> | <argument> ...] Example: cp [-R [-H | -L | -P]] [-fi | -n] [-apvX] source_file target_file. What are commands? • An executable program (date) • A command built into the shell itself (cd) • A shell program/function • An alias. Bash commands (Linux): alias, crontab, false, if, mknod, ram, strace, unshar, apropos, csplit, fdformat, ifconfig, more, rcp, su, until, apt-get, cut, fdisk, ifdown, mount, read, sudo, uptime, aptitude, date, fg, ifup, mtools, readarray, sum, useradd, aspell, dc, fgrep, import, mtr, readonly, suspend, userdel, awk, dd, file, install, mv, reboot, symlink, -

ICMP Usage in Scanning – the Complete Know How Version 3.0

ICMP Usage in Scanning – The Complete Know-How, Version 3.0. Ofir Arkin, Founder, The Sys-Security Group, http://www.sys-security.com, ofir@sys-security.com. Version 3.0, June 2001. Copyright © Ofir Arkin 2000-2001. Trust No One. Table of Contents: 1.0 Introduction: 1.1 Introduction to Version 1.0; 1.2 Introduction to Version 2.0; 1.3 Introduction to Version 2.5; 1.4 Introduction to Version 3.0. 2.0 The ICMP Protocol: 2.1 The ICMP Specifications; 2.1.1 Special Conditions with ICMP messages; 2.2 ICMP Messages -

Table of Contents Local Transfers

Table of Contents: Local Transfers: Checking File Integrity; Local File Transfer Commands; Shift Transfer Tool Overview. Local Transfers: Checking File Integrity. It is a good practice to confirm whether your files are complete and accurate before you transfer the files to or from NAS, and again after the transfer is complete. The easiest way to verify the integrity of file transfers is to use the NAS-developed Shift tool for the transfer, with the --verify option enabled. As part of the transfer, Shift will automatically checksum the data at both the source and destination to detect corruption. If corruption is detected, partial file transfers/checksums will be performed until the corruption is rectified. For example: pfe21% shiftc --verify $HOME/filename /nobackuppX/username lou% shiftc --verify /nobackuppX/username/filename $HOME your_localhost% sup shiftc --verify filename pfe: In addition to Shift, there are several algorithms and programs you can use to compute a checksum. If the results of the pre-transfer checksum match the results obtained after the transfer, you can be reasonably certain that the data in the transferred files is not corrupted. If -

Gnu Coreutils Core GNU Utilities for Version 5.93, 2 November 2005

GNU Coreutils: Core GNU utilities for version 5.93, 2 November 2005. David MacKenzie et al. This manual documents version 5.93 of the GNU core utilities, including the standard programs for text and file manipulation. Copyright © 1994, 1995, 1996, 2000, 2001, 2002, 2003, 2004, 2005 Free Software Foundation, Inc. Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.1 or any later version published by the Free Software Foundation; with no Invariant Sections, with no Front-Cover Texts, and with no Back-Cover Texts. A copy of the license is included in the section entitled “GNU Free Documentation License”. 1 Introduction. This manual is a work in progress: many sections make no attempt to explain basic concepts in a way suitable for novices. Thus, if you are interested, please get involved in improving this manual. The entire GNU community will benefit. The GNU utilities documented here are mostly compatible with the POSIX standard. Please report bugs to [email protected]. Remember to include the version number, machine architecture, input files, and any other information needed to reproduce the bug: your input, what you expected, what you got, and why it is wrong. Diffs are welcome, but please include a description of the problem as well, since this is sometimes difficult to infer. See section “Bugs” in Using and Porting GNU CC. This manual was originally derived from the Unix man pages in the distributions, which were written by David MacKenzie and updated by Jim Meyering. -

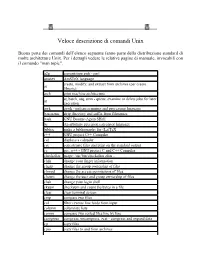

Unix Command

Quick description of Unix commands. Most of the commands in the following list are part of the standard distribution of many Unix architectures. For details, see the corresponding manual pages, which can be invoked with the command "man topic". a2p: awk-to-perl converter; amstex: AmSTeX language; ar: create, modify, and extract from archives (used to create libraries); arch: print machine architecture; at: at, batch, atq, atrm - queue, examine or delete jobs for later execution; awk: gawk - pattern scanning and processing language; basename: strip directory and suffix from filenames; bash: GNU Bourne-Again SHell; bc: an arbitrary precision calculator language; bibtex: make a bibliography for (La)TeX; c++: GNU project C++ Compiler; cal: displays a calendar; cat: concatenate files and print on the standard output; cc: gcc, g++ - GNU project C and C++ Compiler; checkalias: usage: /usr/bin/checkalias alias ..; chfn: change your finger information; chgrp: change the group ownership of files; chmod: change the access permissions of files; chown: change the user and group ownership of files; chsh: change your login shell; cksum: checksum and count the bytes in a file; clear: clear terminal screen; cmp: compare two files; col: filter reverse line feeds from input; column: columnate lists; comm: compare two sorted files line by line; compress: compress, uncompress, zcat - compress and expand data; cp: copy files; cpio: copy files to and from archives; csh: tcsh - C shell with file name completion and command line editing; csplit: split a file into sections determined by context lines; cut: remove sections from each