Classification of Musical Timbre Using Bayesian Networks

Recommended publications

The Rise of the Tenor Voice in the Late Eighteenth Century: Mozart’s Opera and Concert Arias

University of Connecticut OpenCommons@UConn Doctoral Dissertations University of Connecticut Graduate School 10-3-2014 The Rise of the Tenor Voice in the Late Eighteenth Century: Mozart’s Opera and Concert Arias Joshua M. May University of Connecticut - Storrs Follow this and additional works at: https://opencommons.uconn.edu/dissertations Recommended Citation May, Joshua M., "The Rise of the Tenor Voice in the Late Eighteenth Century: Mozart’s Opera and Concert Arias" (2014). Doctoral Dissertations. 580. https://opencommons.uconn.edu/dissertations/580 ABSTRACT The Rise of the Tenor Voice in the Late Eighteenth Century: Mozart’s Opera and Concert Arias Joshua Michael May University of Connecticut, 2014 W. A. Mozart’s opera and concert arias for tenor are among the first music written specifically for this voice type as it is understood today, and they form an essential pillar of the pedagogy and repertoire for the modern tenor voice. Yet while the opera arias have received a great deal of attention from scholars of the vocal literature, the concert arias have been comparatively overlooked; they are neglected also in relation to their counterparts for soprano, about which a great deal has been written. There has been some pedagogical discussion of the tenor concert arias in relation to the correction of vocal faults, but otherwise they have received little scrutiny. This is surprising, not least because in most cases Mozart’s concert arias were composed for singers with whom he also worked in the opera house, and Mozart always paid close attention to the particular capabilities of the musicians for whom he wrote: these arias offer us unusually intimate insights into how a first-rank composer explored and shaped the potential of the newly-emerging voice type of the modern tenor voice. -

Finale Transposition Chart, by MakeMusic User Forum Member Motet (6/5/2016)

Finale Transposition Chart, by MakeMusic user forum member Motet (6/5/2016)

| Trans. | Sounding | Written | Interval | Key Alter | Usage (Some Common Western Instruments) |
|---|---|---|---|---|---|
| C | Up 2 octaves | Down 2 octaves | -14 | 0 | Glockenspiel |
| D♭ | Up min. 9th | Down min. 9th | -8 | 5 | D♭ Piccolo |
| C* | Up octave | Down octave | -7 | 0 | Piccolo, Celesta, Xylophone, Handbells |
| B♭ | Up min. 7th | Down min. 7th | -6 | 2 | B♭ Piccolo Trumpet, Soprillo Sax |
| A | Up maj. 6th | Down maj. 6th | -5 | -3 | A Piccolo Trumpet |
| A♭ | Up min. 6th | Down min. 6th | -5 | 4 | A♭ Clarinet |
| F | Up perf. 4th | Down perf. 4th | -3 | 1 | F Trumpet |
| E | Up maj. 3rd | Down maj. 3rd | -2 | -4 | E Trumpet |
| E♭* | Up min. 3rd | Down min. 3rd | -2 | 3 | E♭ Clarinet, E♭ Flute, E♭ Trumpet, Soprano Cornet, Sopranino Sax |
| D | Up maj. 2nd | Down maj. 2nd | -1 | -2 | D Clarinet, D Trumpet |
| D♭ | Up min. 2nd | Down min. 2nd | -1 | 5 | D♭ Flute |
| C | Unison | Unison | 0 | 0 | Concert pitch, Horn in C alto |
| B | Down min. 2nd | Up min. 2nd | 1 | -5 | Horn in B (natural) alto, B Trumpet |
| B♭* | Down maj. 2nd | Up maj. 2nd | 1 | 2 | B♭ Clarinet, B♭ Trumpet, Soprano Sax, Horn in B♭ alto, Flugelhorn |
| A* | Down min. 3rd | Up min. 3rd | 2 | -3 | A Clarinet, Horn in A, Oboe d’Amore |
| A♭ | Down maj. 3rd | Up maj. 3rd | 2 | 4 | Horn in A♭ |
| G* | Down perf. 4th | Up perf. 4th | 3 | -1 | Horn in G, Alto Flute |
| G♭ | Down aug. 4th | Up aug. 4th | 3 | 6 | Horn in G♭ |
| F# | Down dim. | | | | |

The Identification of Basic Problems Found in the Bassoon Parts of a Selected Group of Band Compositions

Utah State University DigitalCommons@USU All Graduate Theses and Dissertations Graduate Studies 5-1966 The Identification of Basic Problems Found in the Bassoon Parts of a Selected Group of Band Compositions J. Wayne Johnson Utah State University Follow this and additional works at: https://digitalcommons.usu.edu/etd Part of the Music Commons Recommended Citation Johnson, J. Wayne, "The Identification of Basic Problems Found in the Bassoon Parts of a Selected Group of Band Compositions" (1966). All Graduate Theses and Dissertations. 2804. https://digitalcommons.usu.edu/etd/2804 This Thesis is brought to you for free and open access by the Graduate Studies at DigitalCommons@USU. It has been accepted for inclusion in All Graduate Theses and Dissertations by an authorized administrator of DigitalCommons@USU. THE IDENTIFICATION OF BASIC PROBLEMS FOUND IN THE BASSOON PARTS OF A SELECTED GROUP OF BAND COMPOSITIONS by J. Wayne Johnson A thesis submitted in partial fulfillment of the requirements for the degree of MASTER OF SCIENCE in Music Education UTAH STATE UNIVERSITY Logan, Utah 1966 TABLE OF CONTENTS: INTRODUCTION; A BRIEF HISTORY OF THE BASSOON; THE INSTRUMENT (Testing the bassoon, Removing moisture, Oiling, Suspending the bassoon, The reed, Adjusting the reed, Testing the reed, Care of the reed); TONAL PROBLEMS FOUND IN BAND MUSIC (Range and embouchure adjustment, Embouchure, Intonation, Breath control, Tonguing); KEY SIGNATURES AND RELATED FINGERINGS; INTERPRETIVE ASPECTS (Terms and symbols, Rhythm patterns); SUMMARY; LITERATURE CITED. LIST OF FIGURES Figure Page 1. -

A Countertenor's Reference Guide to Operatic Repertoire

A COUNTERTENOR’S REFERENCE GUIDE TO OPERATIC REPERTOIRE Brad Morris A Thesis Submitted to the Graduate College of Bowling Green State University in partial fulfillment of the requirements for the degree of MASTER OF MUSIC May 2019 Committee: Christopher Scholl, Advisor Kevin Bylsma Eftychia Papanikolaou © 2019 Brad Morris All Rights Reserved ABSTRACT Christopher Scholl, Advisor There are few resources available for countertenors to find operatic repertoire. The purpose of the thesis is to provide an operatic repertoire guide for countertenors, and teachers with countertenors as students. Arias were selected based on the premise that the original singer was a castrato, the original singer was a countertenor, or the role is commonly performed by countertenors of today. Information about the composer, information about the opera, and the pedagogical significance of each aria is listed within each section. Study sheets are provided after each aria to list additional resources for countertenors and teachers with countertenors as students. It is the goal that any countertenor or male soprano can find usable repertoire in this guide. I dedicate this thesis to all of the music educators who encouraged me on my countertenor journey and who pushed me to find my own path in this field. PREFACE One of the hardships while working on my Master of Music degree was determining the lack of resources available to countertenors. While there are opera repertoire books for sopranos, mezzo-sopranos, tenors, baritones, and basses, none is readily available for countertenors. Although there are online resources, it requires a great deal of research to verify the validity of those sources. -

Keyboard Playing and the Mechanization of Polyphony in Italian Music, Circa 1600

Keyboard Playing and the Mechanization of Polyphony in Italian Music, Circa 1600 By Leon Chisholm A dissertation submitted in partial satisfaction of the requirements for the degree of Doctor of Philosophy in Music in the Graduate Division of the University of California, Berkeley Committee in charge: Professor Kate van Orden, Co-Chair Professor James Q. Davies, Co-Chair Professor Mary Ann Smart Professor Massimo Mazzotti Summer 2015 Keyboard Playing and the Mechanization of Polyphony in Italian Music, Circa 1600 Copyright 2015 by Leon Chisholm Abstract Keyboard Playing and the Mechanization of Polyphony in Italian Music, Circa 1600 by Leon Chisholm Doctor of Philosophy in Music University of California, Berkeley Professor Kate van Orden, Co-Chair Professor James Q. Davies, Co-Chair Keyboard instruments are ubiquitous in the history of European music. Despite the centrality of keyboards to everyday music making, their influence over the ways in which musicians have conceptualized music and, consequently, the music that they have created has received little attention. This dissertation explores how keyboard playing fits into revolutionary developments in music around 1600 – a period which roughly coincided with the emergence of the keyboard as the multipurpose instrument that has served musicians ever since. During the sixteenth century, keyboard playing became an increasingly common mode of experiencing polyphonic music, challenging the longstanding status of ensemble singing as the paradigmatic vehicle for the art of counterpoint – and ultimately replacing it in the eighteenth century. The competing paradigms differed radically: whereas ensemble singing comprised a group of musicians using their bodies as instruments, keyboard playing involved a lone musician operating a machine with her hands. -

How to Read Choral Music

How to Read Choral Music Compiled by Tim Korthuis Sheet music is a road map to help you create beautiful music. Please note that it is only there as a guide. Follow the director for cues on dynamics (volume) and phrasing (cues and cuts). DO NOT RELY ENTIRELY ON YOUR MUSIC!!! Only glance at it for words and notes. This ‘manual’ is a very condensed version, and is here as a reference. It does not include everything to do with reading music, only the basics to help you on your way. There may be many markings that you wonder about. If you have questions, don’t be afraid to ask. 1. Where is YOUR part? • You need to determine whether you are Soprano or Alto (high or low ladies), or Tenor (high men/low ladies) or Bass (low men) • Soprano is the highest note, followed by Alto, Tenor, (Baritone) & Bass [Figure: score layout with the Soprano, Alto, Tenor and Bass staves labelled, the choir staves joined by a bracket and the piano staves by a brace] NOTE: If there is another staff above the choir bracket, it is usually for a solo or ‘descant’ (high soprano). • The Treble Clef usually indicates Soprano and Alto parts o If there are three notes in the Treble Clef, ask the director which section will be ‘split’ (eg. 1st and 2nd Soprano). o Music written solely for women will usually have two Treble Clefs. • The Bass Clef indicates Tenor, Baritone and Bass parts o If there are three parts in the Bass Clef, the usual configuration is: Top - Tenor, Middle - Baritone, Bottom – Bass, though this too may be ‘split’ (eg. 1st and 2nd Tenor) o Music written solely for men will often have two Bass Clefs, though Treble Clef is used for men as well (written 1 octave higher). -

The String Family

The String Family When you look at a string instrument, the first thing you'll probably notice is that it's made of wood, so why is it called a string instrument? The bodies of the string instruments, which are hollow inside to allow sound to vibrate within them, are made of different kinds of wood, but the part of the instrument that makes the sound is the strings, which are made of nylon, steel or sometimes gut. The strings are played most often by drawing a bow across them. The handle of the bow is made of wood and the strings of the bow are actually horsehair from horses' tails! Sometimes the musicians will use their fingers to pluck the strings, and occasionally they will turn the bow upside down and play the strings with the wooden handle. The strings are the largest family of instruments in the orchestra and they come in four sizes: the violin, which is the smallest, viola, cello, and the biggest, the double bass, sometimes called the contrabass. (Bass is pronounced "base," as in "baseball.") The smaller instruments, the violin and viola, make higher-pitched sounds, while the larger cello and double bass produce low rich sounds. They are all similarly shaped, with curvy wooden bodies and wooden necks. The strings stretch over the body and neck and attach to small decorative heads, where they are tuned with small tuning pegs. The violin is the smallest instrument of the string family, and makes the highest sounds. There are more violins in the orchestra than any other instrument, and they are divided into two groups: first and second. -

New Zealand Works for Contrabassoon

Hayley Elizabeth Roud 300220780 NZSM596 Supervisor: Professor Donald Maurice Master of Musical Arts Exegesis 10 December 2010 New Zealand Works for Contrabassoon Contents: 1 Introduction; 2.1 History of the contrabassoon in the international context (Development of the instrument; Contrabassoonists); 2.2 History of the contrabassoon in the New Zealand context; 3 Selected New Zealand repertoire, Composers: 3.1 Bryony Jagger; 3.2 Michael Norris; 3.3 Chris Adams; 3.4 Tristan Carter; 3.5 Natalie Matias; 4 Summary; Appendix A; Appendix B; Appendix C; Appendix D; Glossary; Bibliography. Introduction The contrabassoon is seldom thought of as a solo instrument. Throughout the long history of contra-register double-reed instruments the assumed role has been to provide a foundation for the wind chord, along the same line as the double bass does for the strings. Due to the scale of these instruments - close to six metres in acoustic length, to reach the subcontra B flat’’, an octave below the bassoon’s lowest note, B flat’ - they have always been difficult and expensive to build, difficult to play, and often unsatisfactory in evenness of scale and dynamic range, and thus instruments and performers are relatively rare. Given this bleak outlook it is unusual to find a number of works written for solo contrabassoon by New Zealand composers. This exegesis considers the development of contra-register double-reed instruments both internationally and within New Zealand, and studies five works by New Zealand composers for solo contrabassoon, illuminating what it was that led them to compose for an instrument that has been described as the 'step-child' or 'Cinderella' of both the wind chord and instrument makers. -

Arnold Schering on “Who Sang the Soprano and Alto Parts in Bach’s Cantatas?”

Arnold Schering on “Who sang the soprano and alto parts in Bach’s cantatas?” Translation by Thomas Braatz © 2009 [The following is a translation of pages 43 to 48 from Arnold Schering’s book, Johann Sebastian Bachs Leipziger Kirchenmusik, published in 1936 in Leipzig and presented in facsimile after the translation. To distinguish between Schering’s original footnotes and mine, his are highlighted in red while mine are left in black.] The paragraph leading into this passage describes Georg Philipp Telemann’s (1681-1767) sacred music activities in the Neukirche (New Church) in Leipzig. These cantata performances were accomplished by Telemann with the help of university students only and without any assistance from the Thomaner choir(s) which were under Johann Kuhnau’s (1660-1722 - Bach’s immediate predecessor) direction. Jealous of the success that Telemann was having with his performances, Kuhnau commented that the young people there [Neukirche] had no real idea about what the proper style of singing in a church was all about and that their goal was “directed toward a so-called cantata-like manner of singing”. By this he evidently meant the elegant, modern way that male falsettists sang their solos [compared to the soprano and alto voices of young boys before their mutation]. What was Bach’s way of treating this matter in his sacred music? A cantor’s constant concern, as we have seen, is the ability to obtain and train good sopranos and basses. Kuhnau’s experience, that among his young scholars strong bass singers were a rarity, was an experience that his cantor predecessors in Leipzig had already had. -

The Performing Pitch of William Byrd’s Latin Liturgical Polyphony: A Guide for Historically Minded Interpreters

The Performing Pitch of William Byrd’s Latin Liturgical Polyphony: A Guide for Historically Minded Interpreters Andrew Johnstone REA: A Journal of Religion, Education and the Arts, Issue 10, 'Sacred Music', 2016 The choosing of a suitable performing pitch is a task that faces all interpreters of sixteenth-century vocal polyphony. As any choral director with the relevant experience will know, decisions about pitch are inseparable from decisions about programming, since some degree of transposition, be it effected on the printed page or by the mental agility of the singers, is almost invariably required to bring the conventions of Renaissance vocal scoring into alignment with the parameters of the more modern SATB ensemble. To be sure, the problem will always admit the purely pragmatic solution of adopting the pitch that best suits the available voices. Such a solution cannot of itself be to the detriment of a compelling, musicianly interpretation, and precedent for it may be cited in historic accounts of choosing a pitch according to the capabilities of the available bass voices (Ganassi 1542, chapter 11) and transposing polyphony so as to align the tenor part with the octave in which chorale melodies were customarily sung (Burmeister 1606, chapter 8). At the same time, transpositions oriented to the comfort zone of present-day choirs will almost certainly result in sonorities differing appreciably from those the composer had in mind. It is therefore to those interested in this aspect of the composer’s intentions, as well as to those curious about the why and the wherefore of Renaissance notation, that the following observations are offered. -

Acoustical Studies on the Flat-Backed and Round-Backed Double Bass

Acoustical Studies on the Flat-backed and Round-backed Double Bass Dissertation submitted for the degree of Doctor of Philosophy at the Universität für Musik und darstellende Kunst Wien by Mag. Andrew William Brown Supervisors: O. Prof. Mag. Gregor Widholm, emer. O. Univ.-Prof. Mag. Dr. Franz Födermayr Vienna, April 2004 “Nearer confidences of the gods did Sisyphus covet; his work was his reward” Table of Contents: List of Figures; List of Tables; Forward; 1 The Back Plate of the Double Bass (1.1 Introduction; 1.2 The Form of the Double Bass; 1.3 The Form of Other Bowed Instruments); 2 Surveys and Literature on the Flat-backed and Round-backed Double Bass (2.1 Surveys of Instrument Makers; 2.2 Surveys Among Musicians; 2.3 Literature on the Acoustics of the Flat-backed Bass and the Round-Backed Double Bass); 3 Experimental Techniques in Bowed Instrument Research (3.1 Frequency Response Curves of Radiated Sound; 3.2 Near-Field Acoustical Holography; 3.3 Input Admittance; 3.4 Modal Analysis; 3.5 Finite Element Analysis; 3.6 Laser Optical Methods; 3.7 Combined Methods; 3.8 Summary); 4 The Double Bass Under Acoustical Study (4.1 The Double Bass as a Static Structure; 4.2 The Double Bass as a Sound Source); 5 Experiments (5.1 Test Instruments; 5.2 Setup of Frequency Response Measurements; 5.3 Setup of Input Admittance Measurements; 5.4 Setup of Laser Vibrometry Measurements; 5.5 Setup of Listening Tests); 6 Results (6.1 Results of Radiated Frequency Response Measurements; 6.2 Results of Input Admittance Measurements; 6.3 Results of Laser Vibrometry Measurements. -

Read Liner Notes

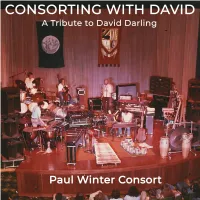

Cover Photo: Paul Winter Consort, 1975 Somewhere in America (Clockwise from left: Ben Carriel, Tigger Benford, David Darling, Paul Winter, Robert Chappell) CONSORTING WITH DAVID A Tribute to David Darling Notes on the Music A Message from Paul: You might consider first listening to this musical journey before you even read the titles of the pieces, or any of these notes. I think it could be interesting to experience how the music alone might convey the essence of David’s artistry. It would be ideal if you could find a quiet hour, and avail yourself of your favorite deep-listening mode. For me, it’s flat on the floor, in total darkness. In any case, your listening itself will be a tribute to David. For living music, With gratitude, Paul 1. Icarus Ralph Towner (Distant Hills Music, ASCAP) Paul Winter / alto sax Paul McCandless / oboe David Darling / cello Ralph Towner / 12-string guitar Glen Moore / bass Collin Walcott / percussion From the album Road Produced by Phil Ramone Recorded live on summer tour, 1970 This was our first recording of “Icarus” 2. Ode to a Fillmore Dressing Room David Darling (Tasker Music, ASCAP) Paul Winter / soprano sax Paul McCandless / English horn, contrabass sarrusophone David Darling / cello Herb Bushler / Fender bass Collin Walcott / sitar From the album Icarus Produced by George Martin Recorded at Seaweed Studio, Marblehead, Massachusetts, August, 1971 In the spring of 1971, the Consort was booked to play at the Fillmore East in New York, opening for Procol Harum. (50 years ago this April.) The dressing rooms in this old theatre were upstairs, and we were warming up our instruments there before the afternoon sound check.