ECRYPT II Yearly Report on Algorithms and Keysizes (2011-2012)

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Security Evaluation of Stream Cipher Enocoro-128v2

Security Evaluation of Stream Cipher Enocoro-128v2 Hell, Martin; Johansson, Thomas 2010 Citation for published version (APA): Hell, M., & Johansson, T. (2010). Security Evaluation of Stream Cipher Enocoro-128v2. CRYPTREC Technical Report. Martin Hell and Thomas Johansson Abstract. This report presents a security evaluation of the Enocoro-128v2 stream cipher. Enocoro-128v2 was proposed in 2010 and is a member of the Enocoro family of stream ciphers. This evaluation examines several different attacks applied to the Enocoro-128v2 design. No attack better than exhaustive key search has been found. -

The First Biclique Cryptanalysis of Serpent-256

The First Biclique Cryptanalysis of Serpent-256 Gabriel C. de Carvalho1, Luis A. B. Kowada1 1Instituto de Computação – Universidade Federal Fluminense (UFF) – Niterói – RJ – Brazil Abstract. The Serpent cipher was one of the finalists of the AES process and as of today there is no method for finding the key with fewer attempts than that of an exhaustive search of all possible keys, even when using known or chosen plaintexts for an attack. This work presents the first two biclique attacks for the full-round Serpent-256. The first uses a dimension 4 biclique while the second uses a dimension 8 biclique. The one with lower dimension covers nearly 4 complete rounds of the cipher, which is the reason for the lower time complexity when compared with the other attack (which covers nearly 3 rounds of the cipher). On the other hand, the second attack needs far fewer pairs of plaintexts. The attacks require $2^{255.21}$ and $2^{255.45}$ full computations of Serpent-256 using $2^{88}$ and $2^{60}$ chosen ciphertexts respectively with negligible memory. 1. Introduction The Serpent cipher is, along with MARS, RC6, Twofish and Rijndael, one of the AES process finalists [Nechvatal et al. 2001] and has not had, since its proposal, its full round versions attacked. It is a Substitution Permutation Network (SPN) with 32 rounds, 128 bit block size and accepts keys of sizes 128, 192 and 256 bits. Serpent has been targeted by several cryptanalyses [Kelsey et al. 2000, Biham et al. 2001b, Biham et al. -

Public Key Cryptography And

Public Key Cryptography and RSA Raj Jain Washington University in Saint Louis Saint Louis, MO 63130 [email protected] Audio/Video recordings of this lecture are available at: http://www.cse.wustl.edu/~jain/cse571-11/ Washington University in St. Louis CSE571S ©2011 Raj Jain

Overview: 1. Public Key Encryption 2. Symmetric vs. Public-Key 3. RSA Public Key Encryption 4. RSA Key Construction 5. Optimizing Private Key Operations 6. RSA Security. These slides are based partly on Lawrie Brown’s slides supplied with William Stallings’s book “Cryptography and Network Security: Principles and Practice,” 5th Ed, 2011.

Public Key Encryption: Invented in 1975 by Diffie and Hellman at Stanford. Encrypted_Message = Encrypt(Key1, Message); Message = Decrypt(Key2, Encrypted_Message). Text → (Key1) → Ciphertext → (Key2) → Text. Keys are interchangeable: Text → (Key2) → Ciphertext → (Key1) → Text. One key is made public while the other is kept private. Sender knows only public key of the receiver. Asymmetric.

Public Key Encryption Example: Rivest, Shamir, and Adleman at MIT. RSA: Encrypted_Message = $m^{3} \bmod 187$, Message = $\text{Encrypted\_Message}^{107} \bmod 187$. Key1 = <3,187>, Key2 = <107,187>. Message = 5, Encrypted Message = $5^{3} = 125$. Message = $125^{107} \bmod 187 = 5 = 125^{(64+32+8+2+1)} \bmod 187 = \{(125^{64} \bmod 187)(125^{32} \bmod 187) \cdots (125^{2} \bmod 187)(125 \bmod 187)\} \bmod 187$ -
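The toy RSA numbers quoted in this excerpt can be checked with a few lines of Python. This is only a sketch: the function name `square_and_multiply` is illustrative and not taken from the slides, and the factorisation 187 = 11 × 17 is supplied here as a known arithmetic fact rather than something the excerpt states.

```python
# Toy RSA parameters from the slide excerpt: Key1 = <3, 187>, Key2 = <107, 187>.
n = 187    # modulus; 187 = 11 * 17 (factorisation added here, not in the excerpt)
e = 3      # public exponent
d = 107    # private exponent


def square_and_multiply(base, exponent, modulus):
    """Binary exponentiation: multiply in base^(2^k) for every set bit k of exponent.

    For exponent 107 = 64 + 32 + 8 + 2 + 1 this uses the same powers
    (125^64, 125^32, 125^8, 125^2, 125^1) that the slide multiplies together.
    """
    result = 1
    base %= modulus
    while exponent > 0:
        if exponent & 1:                      # current bit is set
            result = (result * base) % modulus
        base = (base * base) % modulus        # next power of two
        exponent >>= 1
    return result


message = 5
ciphertext = square_and_multiply(message, e, n)     # 5^3 mod 187
recovered = square_and_multiply(ciphertext, d, n)   # 125^107 mod 187
print(ciphertext, recovered)                        # prints: 125 5
```

Decryption recovers the message because 3 · 107 = 321 ≡ 1 (mod 160), where 160 = (11 − 1)(17 − 1).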

Reconsidering the Security Bound of AES-GCM-SIV

Reconsidering the Security Bound of AES-GCM-SIV Tetsu Iwata1 and Yannick Seurin2 1Nagoya University, Japan 2ANSSI, France March 7, 2018, FSE 2018. Slide outline: Background on AES-GCM-SIV; Fixing the Security Bound; Improving Key Derivation; Final Remarks. Summary of the contribution • we reconsider the security of the AEAD scheme AES-GCM-SIV designed by Gueron, Langley, and Lindell • we identify flaws in the designers’ security analysis and propose a new security proof • our findings lead to significantly reduced security claims, especially for long messages • we propose a simple modification to the scheme (key derivation function) improving security without efficiency loss -

Zero Correlation Linear Cryptanalysis on LEA Family Ciphers

Journal of Communications Vol. 11, No. 7, July 2016 Zero Correlation Linear Cryptanalysis on LEA Family Ciphers Kai Zhang, Jie Guan, and Bin Hu Information Science and Technology Institute, Zhengzhou 450000, China Email: [email protected]; [email protected]; [email protected] Abstract: In the last two years, zero correlation linear cryptanalysis has shown its great potential in cryptanalysis and it has proven to be effective against a large number of ciphers. LEA is a block cipher proposed by Deukjo Hong, who is the designer of an ISO standard block cipher, HIGHT. This paper evaluates the security level of the LEA family of ciphers against zero correlation linear cryptanalysis. Firstly, we identify some 9-round zero correlation linear hulls for LEA. Accordingly, we propose a distinguishing attack on all variants of 9-round LEA family ciphers. Then we propose the first zero correlation linear cryptanalysis on 13-round LEA-192 and 14-round LEA-256. For 13-round LEA-192, we propose a key recovery attack with time complexity of $2^{131.30}$ 13-round LEA encryptions, data [...] Zero correlation linear cryptanalysis was first proposed by Andrey Bogdanov and Vincent Rijmen in 2011 [2], [3]. Generally speaking, this cryptanalytic method can be summarized as “use linear approximation of probability 1/2 to eliminate the wrong key candidates”. However, in this basic model of zero correlation linear cryptanalysis, the data complexity is about half of the full code book. The high data complexity greatly limits the application of this new method. In FSE 2012, multiple zero correlation linear cryptanalysis [4] was proposed, which uses multiple zero correlation linear approximations to reduce the data complexity. -

Authentication in Key-Exchange: Definitions, Relations and Composition

Authentication in Key-Exchange: Definitions, Relations and Composition Cyprien Delpech de Saint Guilhem1,2, Marc Fischlin3, and Bogdan Warinschi2 1 imec-COSIC, KU Leuven, Belgium 2 Dept Computer Science, University of Bristol, United Kingdom 3 Computer Science, Technische Universität Darmstadt, Germany [email protected], [email protected], [email protected] Abstract. We present a systematic approach to define and study authentication notions in authenticated key-exchange protocols. We propose and use a flexible and expressive predicate-based definitional framework. Our definitions capture key and entity authentication, in both implicit and explicit variants, as well as key and entity confirmation, for authenticated key-exchange protocols. In particular, we capture critical notions in the authentication space such as key-compromise impersonation resistance and security against unknown key-share attacks. We first discuss these definitions within the Bellare–Rogaway model and then extend them to Canetti–Krawczyk-style models. We then show two useful applications of our framework. First, we look at the authentication guarantees of three representative protocols to draw several useful lessons for protocol design. The core technical contribution of this paper is then to formally establish that composition of secure implicitly authenticated key-exchange with subsequent confirmation protocols yields explicit authentication guarantees. Without a formal separation of implicit and explicit authentication from secrecy, a proof of this folklore result could not have been established. 1 Introduction The commonly expected level of security for authenticated key-exchange (AKE) protocols comprises two aspects. Authentication provides guarantees on the identities of the parties involved in the protocol execution. -

Analysis of Selected Block Cipher Modes for Authenticated Encryption

Analysis of Selected Block Cipher Modes for Authenticated Encryption by Hassan Musallam Ahmed Qahur Al Mahri Bachelor of Engineering (Computer Systems and Networks) (Sultan Qaboos University) – 2007 Thesis submitted in fulfilment of the requirement for the degree of Doctor of Philosophy School of Electrical Engineering and Computer Science Science and Engineering Faculty Queensland University of Technology 2018 Keywords Authenticated encryption, AE, AEAD, ++AE, AEZ, block cipher, CAESAR, confidentiality, COPA, differential fault analysis, differential power analysis, ElmD, fault attack, forgery attack, integrity assurance, leakage resilience, modes of operation, OCB, OTR, SHELL, side channel attack, statistical fault analysis, symmetric encryption, tweakable block cipher, XE, XEX. Abstract Cryptography assures information security through different functionalities, especially confidentiality and integrity assurance. According to Menezes et al. [1], confidentiality means the process of assuring that no one could interpret information, except authorised parties, while data integrity is an assurance that any unauthorised alterations to a message content will be detected. One possible approach to ensure confidentiality and data integrity is to use two different schemes where one scheme provides confidentiality and the other provides integrity assurance. A more compact approach is to use schemes, called Authenticated Encryption (AE) schemes, that simultaneously provide confidentiality and integrity assurance for a message. AE can be constructed using different mechanisms, and the most common construction is to use block cipher modes, which is our focus in this thesis. AE schemes have been used in a wide range of applications, and defined by standardisation organizations. The National Institute of Standards and Technology (NIST) recommended two AE block cipher modes CCM [2] and GCM [3]. -

On the NIST Lightweight Cryptography Standardization

On the NIST Lightweight Cryptography Standardization Meltem Sönmez Turan NIST Lightweight Cryptography Team ECC 2019: 23rd Workshop on Elliptic Curve Cryptography December 2, 2019 Outline • NIST's Cryptography Standards • Overview - Lightweight Cryptography • NIST Lightweight Cryptography Standardization Process • Announcements NIST's Cryptography Standards National Institute of Standards and Technology • Non-regulatory federal agency within U.S. Department of Commerce. • Founded in 1901, known as the National Bureau of Standards (NBS) prior to 1988. • Headquarters in Gaithersburg, Maryland, and laboratories in Boulder, Colorado. • Employs around 6,000 employees and associates. NIST's Mission to promote U.S. innovation and industrial competitiveness by advancing measurement science, standards, and technology in ways that enhance economic security and improve our quality of life. NIST Organization Chart Laboratory Programs • Center for Nanoscale Science and Technology • Communications Technology Lab. • Engineering Lab. • Information Technology Lab. • Material Measurement Lab. • NIST Center for Neutron Research • Physical Measurement Lab. Information Technology Lab. • Advanced Network Technologies • Applied and Computational Mathematics • Applied Cybersecurity • Computer Security • Information Access • Software and Systems • Statistical [...] Computer Security Division • Cryptographic Technology • Secure Systems and Applications • Security Outreach and Integration • Security Components and Mechanisms • Security Test, Validation and Measurements -

FIPS 140-2 Non-Proprietary Security Policy Oracle Linux 7 NSS

FIPS 140-2 Non-Proprietary Security Policy Oracle Linux 7 NSS Cryptographic Module FIPS 140-2 Level 1 Validation Software Version: R7-4.0.0 Date: January 22nd, 2020 Document Version 2.3 © Oracle Corporation This document may be reproduced whole and intact including the Copyright notice. Title: Oracle Linux 7 NSS Cryptographic Module Security Policy Date: January 22nd, 2020 Author: Oracle Security Evaluations – Global Product Security Contributing Authors: Oracle Linux Engineering Oracle Corporation World Headquarters 500 Oracle Parkway Redwood Shores, CA 94065 U.S.A. Worldwide Inquiries: Phone: +1.650.506.7000 Fax: +1.650.506.7200 oracle.com Copyright © 2020, Oracle and/or its affiliates. All rights reserved. This document is provided for information purposes only and the contents hereof are subject to change without notice. This document is not warranted to be error-free, nor subject to any other warranties or conditions, whether expressed orally or implied in law, including implied warranties and conditions of merchantability or fitness for a particular purpose. Oracle specifically disclaim any liability with respect to this document and no contractual obligations are formed either directly or indirectly by this document. This document may reproduced or distributed whole and intact including this copyright notice. Oracle and Java are registered trademarks of Oracle and/or its affiliates. Other names may be trademarks of their respective owners. Oracle Linux 7 NSS Cryptographic Module Security Policy i TABLE OF CONTENTS Section Title -

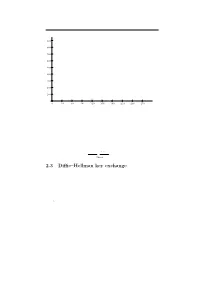

2.3 Diffie–Hellman Key Exchange

2.3. Diffie–Hellman key exchange [Figure 2.2: Powers $627^i \bmod 941$ for $i = 1, 2, 3, \ldots$ (scatter plot; horizontal axis $i$ from 0 to 270, vertical axis from 0 to 900)] ... any group and use the group law instead of multiplication. -
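The points in Figure 2.2 can be regenerated directly from the caption, and the same modulus and base can be used to sketch a Diffie–Hellman exchange. In the sketch below, only `p = 941` and `g = 627` come from the caption; the secret exponents `a` and `b` are illustrative values chosen for this example, and the variable names are assumptions rather than the book's notation.

```python
p = 941   # prime modulus from the figure caption
g = 627   # base from the figure caption

# Points (i, g^i mod p) over the range shown on the plot's horizontal axis.
points = [(i, pow(g, i, p)) for i in range(1, 271)]
print(points[:3])

# Diffie-Hellman exchange with illustrative (hypothetical) secret exponents.
a = 347                      # Alice's secret exponent (assumed for this sketch)
b = 781                      # Bob's secret exponent (assumed for this sketch)
A = pow(g, a, p)             # value Alice sends to Bob
B = pow(g, b, p)             # value Bob sends to Alice

shared_alice = pow(B, a, p)  # Alice computes (g^b)^a mod p
shared_bob = pow(A, b, p)    # Bob computes (g^a)^b mod p
print(shared_alice == shared_bob)
```

Both sides reach the same value because $(g^b)^a = (g^a)^b = g^{ab}$ in the multiplicative group modulo $p$. The argument uses only the group law, which is why the construction carries over to any group.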

A Password-Based Key Derivation Algorithm Using the KBRP Method

American Journal of Applied Sciences 5 (7): 777-782, 2008 ISSN 1546-9239 © 2008 Science Publications A Password-Based Key Derivation Algorithm Using the KBRP Method 1Shakir M. Hussain and 2Hussein Al-Bahadili 1Faculty of CSIT, Applied Science University, P.O. Box 22, Amman 11931, Jordan 2Faculty of Information Systems and Technology, Arab Academy for Banking and Financial Sciences, P.O. Box 13190, Amman 11942, Jordan Abstract: This study presents a new efficient password-based strong key derivation algorithm using the key based random permutation (KBRP) method. The algorithm consists of five steps; the first three steps are similar to those that form the KBRP method. The last two steps are added to derive a key and to ensure that the derived key has all the characteristics of a strong key. In order to demonstrate the efficiency of the algorithm, a number of keys are derived using various passwords of different content and length. The features of the derived keys show a good agreement with all characteristics of strong keys. In addition, they are compared with features of keys generated using the WLAN strong key generator v2.2 by Warewolf Labs. Key words: Key derivation, key generation, strong key, random permutation, Key Based Random Permutation (KBRP), key authentication, password authentication, key exchange INTRODUCTION Key derivation is the process of generating one or more keys for cryptography and authentication, where a key is a sequence of characters that controls the process of a cryptographic or authentication algorithm [1,2]. [...] • The key size must be long enough so that it cannot be easily broken • The key should have the highest possible entropy (close to unity) -
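One way to make "entropy close to unity" concrete is the Shannon entropy of the key's bit distribution, which ranges from 0 to 1 bit per bit. The excerpt does not say which entropy measure the paper uses, so the measure and the function name `bit_entropy` below are assumptions made for illustration only.

```python
import math


def bit_entropy(key: bytes) -> float:
    """Shannon entropy (in bits, per bit) of the 0/1 distribution in `key`.

    Returns 1.0 when zeros and ones are perfectly balanced and 0.0 when
    every bit is the same. This is an assumed, simplified measure; it only
    looks at the proportion of ones, not at their ordering.
    """
    total_bits = len(key) * 8
    if total_bits == 0:
        return 0.0
    ones = sum(bin(byte).count("1") for byte in key)
    p_one = ones / total_bits
    entropy = 0.0
    for prob in (p_one, 1.0 - p_one):
        if prob > 0:                      # skip zero terms: 0 * log2(0) is taken as 0
            entropy -= prob * math.log2(prob)
    return entropy


print(bit_entropy(b"\x0f"))   # balanced bits
print(bit_entropy(b"\x00"))   # all bits equal
```

A balanced bit count is necessary but far from sufficient for a strong key: `b"\x0f"` scores 1.0 here despite being entirely predictable, so a measure like this can only flag obviously biased keys.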

3GPP TR 55.919 V6.1.0 (2002-12) Technical Report

3GPP TR 55.919 V6.1.0 (2002-12) Technical Report 3rd Generation Partnership Project; Technical Specification Group Services and System Aspects; 3G Security; Specification of the A5/3 Encryption Algorithms for GSM and ECSD, and the GEA3 Encryption Algorithm for GPRS; Document 4: Design and evaluation report (Release 6) GLOBAL SYSTEM FOR MOBILE COMMUNICATIONS The present document has been developed within the 3rd Generation Partnership Project (3GPP™) and may be further elaborated for the purposes of 3GPP. The present document has not been subject to any approval process by the 3GPP Organizational Partners and shall not be implemented. This Specification is provided for future development work within 3GPP only. The Organizational Partners accept no liability for any use of this Specification. Specifications and reports for implementation of the 3GPP™ system should be obtained via the 3GPP Organizational Partners' Publications Offices. Keywords GSM, GPRS, security, algorithm 3GPP Postal address 3GPP support office address 650 Route des Lucioles - Sophia Antipolis Valbonne - FRANCE Tel.: +33 4 92 94 42 00 Fax: +33 4 93 65 47 16 Internet http://www.3gpp.org Copyright Notification No part may be reproduced except as authorized by written permission. The copyright and the foregoing restriction extend to reproduction in all media. © 2002, 3GPP Organizational Partners (ARIB, CWTS, ETSI, T1, TTA, TTC). All rights reserved. Contents Foreword