D2.4 – High-Level Music Content Analysis Framework V2

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications


PERFORMED IDENTITIES: HEAVY METAL MUSICIANS BETWEEN 1984 and 1991 Bradley C. Klypchak a Dissertation Submitted to the Graduate

PERFORMED IDENTITIES: HEAVY METAL MUSICIANS BETWEEN 1984 AND 1991 Bradley C. Klypchak A Dissertation Submitted to the Graduate College of Bowling Green State University in partial fulfillment of the requirements for the degree of DOCTOR OF PHILOSOPHY May 2007 Committee: Dr. Jeffrey A. Brown, Advisor Dr. John Makay Graduate Faculty Representative Dr. Ron E. Shields Dr. Don McQuarie © 2007 Bradley C. Klypchak All Rights Reserved ABSTRACT Dr. Jeffrey A. Brown, Advisor Between 1984 and 1991, heavy metal became one of the most publicly popular and commercially successful rock music subgenres. The focus of this dissertation is to explore the following research questions: How did the subculture of heavy metal music between 1984 and 1991 evolve and what meanings can be derived from this ongoing process? How did the contextual circumstances surrounding heavy metal music during this period impact the performative choices exhibited by artists, and from a position of retrospection, what lasting significance does this particular era of heavy metal merit today? A textual analysis of metal-related materials fostered the development of themes relating to the selective choices made and performances enacted by metal artists. These themes were then considered in terms of gender, sexuality, race, and age constructions as well as the ongoing negotiations of the metal artist within multiple performative realms. Occurring at the juncture of art and commerce, heavy metal music is a purposeful construction. Metal musicians made performative choices for serving particular aims, be it fame, wealth, or art. These same individuals worked within a greater system of influence. Metal bands were the contracted employees of record labels whose own corporate aims needed to be recognized.

Space Rock, the Popular Music Inspired by the Stars Above Us

SPACE ROCK, THE POPULAR MUSIC INSPIRED BY THE STARS ABOVE US JARKKO MATIAS MERISALO 79222N ASTRONOMICAL VIEW OF THE WORLD PART B S-92.3299 AALTO UNIVERSITY TABLE OF CONTENTS 1. Introduction 2. What is space rock and how it was born? 3. The Golden era 3.1. Significant artists and songs to remember 3.2. Masks and Glitter – Spacemen and rock characters 4. Modern times 5. Conclusions 6. References 7. Appendices 1. INTRODUCTION When the Soviets managed to launch “Sputnik 1”, the first man-made object to the Earth’s orbit in November 1957,

Introduction 1

Notes Introduction 1. For a more in-depth discussion of patriarchy and nationalism, see Kim’s analysis of the argument of Gates (2005, 16) and Garcia (1997). 2. Omi and Winant (1986) trace the historical development of privilege tied to whiteness but also foreground discussions of race to contest claims that only those in power, that is, those considered white, can be racist. Omi and Winant argue that whenever the construct of race is used to establish in and out groups and hierarchies of power, irrespective of who perpetrates it, racism has occurred. In “Latino Racial Formation,” De Genova and Ramos-Zayas (2003b) further develop the ideas of history and context in terms of US Latinos in their argument that US imperialism and discrimination, more than any other factors, have influenced Latino racialization and thus contributed to the creation of a third pseudoracial group along the black/white continuum that has historically marked US race relations. The research of Omi and Winant and De Genova and Ramos-Zayas coincides with the study of gender, particularly masculinity, in its emphasis on the shifting nature of constructs such as race and gender as well as its recognition of the different experiences of gendered history predicated upon male and female bodies of people of color. 3. In terms of the experience of sexuality through the body, Rodríguez’s memoir (1981) underscores the fact that perceptions of one’s body by the self and others depend heavily on the physical context in which an individual body is found as well as on one’s own perceptions of pride and shame based on notions of race and desire.

Disco Inferno

Section 2 Edition 4 07/06–13/06 Stay in touch: monocle.com monocle.com/radio Looking for a smart podcast to give you the low-down on all cultural happenings worldwide? Tune in to the freshly relaunched weekly Culture Show on Monocle 24. but that had no standing at Studio whatsoever. If you’re talking about something from 40 years ago that’s still compelling today then there’s got to be some underlying social reason. M: What about the door policy, the line – it was infamous. Was that something that you and Steve planned or did it just happen and you decided it worked for you? IS: It was definitely part of the idea. To me we were trying to do in the public domain what everyone else does in their private domain: to get an alchemy and an energy; we were trying to curate the crowd and we wanted it to be a mixture that had nothing to do with wealth or social standing, it had to do with creating this combustible energy every night. When you are doing a door policy that doesn’t have a rational objective criteria other than instinct, then mistakes get made and people get infuriated by it but we didn’t understand that because it was really quite honest. Image: Adam Schull I wasn’t in New York then but I remember the tail-end of that before it was cleaned up; it was very distinctive. It’s a lost analogue world too – if you notice in the film, the telephones on Ian’s desk are rotary dial and that was TELEVISION / AUSTRIA Here, a rundown of the ladies:

Spanglish Code-Switching in Latin Pop Music: Functions of English and Audience Reception

Spanglish code-switching in Latin pop music: functions of English and audience reception A corpus and questionnaire study Magdalena Jade Monteagudo Master’s thesis in English Language - ENG4191 Department of Literature, Area Studies and European Languages UNIVERSITY OF OSLO Spring 2020 © Magdalena Jade Monteagudo 2020 http://www.duo.uio.no/ Print: Reprosentralen, Universitetet i Oslo Abstract The concept of code-switching (the use of two languages in the same unit of discourse) has been studied in the context of music for a variety of language pairings. The majority of these studies have focused on the interaction between a local language and a non-local language. In this project, I propose an analysis of the mixture of two world languages (Spanish and English), which can be categorised as both local and non-local. I do this through the analysis of the enormously successful reggaeton genre, which is characterised by its use of Spanglish. I used two data types to inform my research: a corpus of code-switching instances in top 20 reggaeton songs, and a questionnaire on attitudes towards Spanglish in general and in music. I collected 200 answers to the questionnaire – half from American English-speakers, and the other half from Spanish-speaking Hispanics of various nationalities.

How to Re-Shine Depeche Mode on Album Covers

Sociology Study, December 2015, Vol. 5, No. 12, 920-930 doi: 10.17265/2159-5526/2015.12.003 DAVID PUBLISHING Simple but Dominant: How to Reshine Depeche Mode on Album Covers After 80’s Cinla Sekera Abstract The aim of this paper is to analyze the album covers of English band Depeche Mode after 80’s according to the principles of graphic design. Established in 1980, the musical style of the band was turned from synth-pop to new wave, from new wave to electronic, dance, and alternative-rock in decades, but their message stayed as it was: A non-hypocritical, humanist, and decent manner against what is wrong and in love sincerely. As a graphic design product, album covers are pre-print design solutions of two dimensional surfaces. Graphic design, as a design field, has its own elements and principles. Visual elements and typography are the two components which should unite with the help of the six main principles which are: unity/harmony; balance; hierarchy; scale/proportion; dominance/emphasis; and similarity and contrast. All album covers of Depeche Mode after 80’s were designed in a simple but dominant way in order to form a unique style. On every album cover, there are huge color, size, tone, and location contrasts which concluded in simple domination; domination of a non-hypocritical, humanist, and decent manner against what is wrong and in love sincerely. Keywords Graphic design, album cover, design principles, dominance, Depeche Mode Design is the formal and functional features determination process, made before the production of [...] graphic design are line, shape, color, value, texture, and space.

Metaldata: Heavy Metal Scholarship in the Music Department and The

Dr. Sonia Archer-Capuzzo Dr. Guy Capuzzo . Growing respect in scholarly and music communities . Interdisciplinary . Music theory . Sociology . Music History . Ethnomusicology . Cultural studies . Religious studies . Psychology . “A term used since the early 1970s to designate a subgenre of hard rock music…. During the 1960s, British hard rock bands and the American guitarist Jimi Hendrix developed a more distorted guitar sound and heavier drums and bass that led to a separation of heavy metal from other blues-based rock. Albums by Led Zeppelin, Black Sabbath and Deep Purple in 1970 codified the new genre, which was marked by distorted guitar ‘power chords’, heavy riffs, wailing vocals and virtuosic solos by guitarists and drummers. During the 1970s performers such as AC/DC, Judas Priest, Kiss and Alice Cooper toured incessantly with elaborate stage shows, building a fan base for an internationally-successful style.” (Grove Music Online, “Heavy metal”) . “Heavy metal fans became known as ‘headbangers’ on account of the vigorous nodding motions that sometimes mark their appreciation of the music.” (Grove Music Online, “Heavy metal”) . Multiple sub-genres . What’s the difference between speed metal and death metal, and does anyone really care? (The answer is YES!) . Collection development . Are there standard things every library should have? . Cataloging . The standard difficulties with cataloging popular music . Reference . Do people even know you have this stuff? . And what did this person just ask me? . Library of Congress added first heavy metal recording for preservation March 2016: Master of Puppets . Library of Congress: search for “heavy metal music” April 2017 . 176 print resources - 25 published 1980s, 25 published 1990s, 125+ published 2000s

Challenging Authenticity: Fakes and Forgeries in Rock Music

Challenging authenticity: fakes and forgeries in rock music Chris Atton Professor of Media and Culture School of Arts and Creative Industries Edinburgh Napier University Merchiston Campus Edinburgh EH10 5DT T 0131 455 2223 E [email protected] 8210 words (exc. abstract) Abstract Authenticity is a key concept in the evaluation of rock music by critics and fans. The production of fakes challenges the means by which listeners evaluate the authentic, by questioning central notions of integrity and sincerity. This paper examines the nature and motives of faking in recorded music, such as inventing imaginary groups or passing off studio recordings as live performances. In addition to a survey of types of fakes and the motives of those responsible for them, the paper presents two case studies, one of the ‘fake’ American group the Residents, the other of the Unknown Deutschland series of releases, purporting to be hitherto unknown recordings of German rock groups from the 1970s. By examining the critical reception of these cases and taking into account ethical and aesthetic considerations, the paper argues that the relationship between the authentic (the ‘real’) and the inauthentic (the ‘fake’) is complex. It concludes that, to judge from fans’ responses at least, the fake can be judged as possessing cultural value and may even be considered as authentic. Little consideration has been given to the aesthetic and cultural significance of the inauthentic through the production of fakes in popular music, except for negatively critical assessments of pop music as ‘plastic’ or ‘manufactured’ (McLeod 2001 provides examples from American rock criticism).

Exploring the Chinese Metal Scene in Contemporary Chinese Society (1996-2015)

"THE SCREAMING SUCCESSOR": EXPLORING THE CHINESE METAL SCENE IN CONTEMPORARY CHINESE SOCIETY (1996-2015) Yu Zheng A Thesis Submitted to the Graduate College of Bowling Green State University in partial fulfillment of the requirements for the degree of MASTER OF ARTS December 2016 Committee: Jeremy Wallach, Advisor Esther Clinton Kristen Rudisill © 2016 Yu Zheng All Rights Reserved ABSTRACT Jeremy Wallach, Advisor This research project explores the characteristics and the trajectory of metal development in China and examines how various factors have influenced the localization of this music scene. I examine three significant roles – musicians, audiences, and mediators, and focus on the interaction between the localized Chinese metal scene and metal globalization. This thesis project uses multiple methods, including textual analysis, observation, surveys, and in-depth interviews. In this thesis, I illustrate an image of the Chinese metal scene, present the characteristics and the development of metal musicians, fans, and mediators in China, discuss their contributions to scene’s construction, and analyze various internal and external factors that influence the localization of metal in China. After that, I argue that the development and the localization of the metal scene in China goes through three stages, the emerging stage (1988-1996), the underground stage (1997-2005), the indie stage (2006-present), with Chinese characteristics. And, this localized trajectory is influenced by the accessibility of metal resources, the rapid economic growth, urbanization, and the progress of modernization in China, and the overall development of cultural industry and international cultural communication. For Yisheng and our unborn baby! ACKNOWLEDGMENTS First of all, I would like to show my deepest gratitude to my advisor, Dr.

Sooloos Collections: Advanced Guide

Sooloos Collections: Advanced Guide Contents: Introduction; Organising and Using a Sooloos Collection; Working with Sets; Organising through Naming; Album Detail; Finding Content; Explore; Search; Focus

Nu-Metal As Reflexive Art Niccolo Porcello Vassar College, [email protected]

Vassar College Digital Window @ Vassar Senior Capstone Projects 2016 Affective masculinities and suburban identities: Nu-metal as reflexive art Niccolo Porcello Vassar College, [email protected] Follow this and additional works at: http://digitalwindow.vassar.edu/senior_capstone Recommended Citation Porcello, Niccolo, "Affective masculinities and suburban identities: Nu-metal as reflexive art" (2016). Senior Capstone Projects. Paper 580. This Open Access is brought to you for free and open access by Digital Window @ Vassar. It has been accepted for inclusion in Senior Capstone Projects by an authorized administrator of Digital Window @ Vassar. For more information, please contact [email protected]. AFFECTIVE MASCULINITIES AND SUBURBAN IDENTITIES: NU-METAL AS REFLEXIVE ART Niccolo Dante Porcello April 25, 2016 Senior Thesis Submitted in partial fulfillment of the requirements for the Bachelor of Arts in Urban Studies Adviser, Leonard Nevarez Adviser, Justin Patch This thesis is dedicated to my brother, who gave me everything, and also his CD case when he left for college. Table of Contents Acknowledgements Chapter 1: Click Click Boom

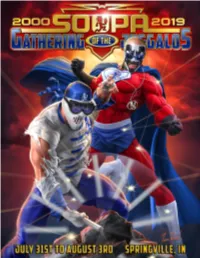

To View the Official Program!

20 years of the gathering of the juggalos... On this momentous occasion, we Gather together not just to celebrate the 20th Annual Gathering of the Juggalos, but to uphold the legacy of our Juggalo Family. For two decades strong, we have converged at the height of the summer season for something so much more than the concerts, the lights, the sounds, the revelry, and the circus. There is no mistaking that the Gathering is the Greatest Show on Earth and has rightfully earned the title as the longest running independent rap festival on this or any other known planet. And while all these accolades are well-deserved and a point of pride, in our hearts we know...There is so much more to this. A greater reason and purpose. A magic that calls us together. That knowing. The spirit of the tribe. The call of the Dark Carnival. The magic mists that float by as we gaze through the trees into starry skies. We are together. And THAT is what we celebrate here, after 20 long, fresh, hilarious, incredible, tremendously karma-filled years. We call this the Soopa Gathering because we are here to celebrate the superpowers of the Juggalo Family. All of us here together and united are capable of heroics and strength beyond measure. We are Soopa. We are mighty. For we have found each other by the magic of the Carnival—standing 20 years strong on the Dirtball as we see into the eternity of Shangri-La. Finding Forever together, may the Dark Carnival empower and ignite the Hero in you ALL..