Managing App Testing Device Clouds: Issues and Opportunities

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Open Yibo Wu Final.Pdf

The Pennsylvania State University The Graduate School RESOURCE-AWARE CROWDSOURCING IN WIRELESS NETWORKS A Dissertation in Computer Science and Engineering by Yibo Wu © 2018 Yibo Wu Submitted in Partial Fulfillment of the Requirements for the Degree of Doctor of Philosophy May 2018 The dissertation of Yibo Wu was reviewed and approved∗ by the following: Guohong Cao Professor of Computer Science and Engineering Dissertation Adviser, Chair of Committee Robert Collins Associate Professor of Computer Science and Engineering Sencun Zhu Associate Professor of Computer Science and Engineering Associate Professor of Information Sciences and Technology Zhenhui Li Associate Professor of Information Sciences and Technology Bhuvan Urgaonkar Associate Professor of Computer Science and Engineering Graduate Program Chair of Computer Science and Engineering ∗Signatures are on file in the Graduate School. Abstract The ubiquity of mobile devices has opened up opportunities for a wide range of applications based on photo/video crowdsourcing, where the server collects a large number of photos/videos from the public to obtain desired information. However, transmitting large numbers of photos/videos in a wireless environment with bandwidth constraints is challenging, and it is hard to run computation-intensive image processing techniques on mobile devices with limited energy and computation power to identify the useful photos/videos and remove redundancy. To address these challenges, we propose a framework to quantify the quality of crowdsourced photos/videos based on the accessible geographical and geometrical information (called metadata) including the orientation, position, and all other related parameters of the built-in camera. From metadata, we can infer where and how the photo/video is taken, and then only transmit the most useful photos/videos.

Terraillon Wellness Coach Supported Devices

Terraillon Wellness Coach Supported Devices Warning This document lists the smartphones compatible with the download of the Wellness Coach app from the App Store (Apple) and Play Store (Android). Some models have been tested by Terraillon to check the compatibility and smooth operation of the Wellness Coach app. However, many models have not been tested. Therefore, Terraillon does not guarantee the proper functioning of the Wellness Coach application on these models. If your smartphone model does not appear in the list, please send an email to [email protected] giving us the model of your smartphone so that we can activate it if the application store allows it. BRAND MODEL NAME MANUFACTURER MODEL NAME OS REQUIRED ACER Liquid Z530 acer_T02 Android 4.3+ ACER Liquid Jade S acer_S56 Android 4.3+ ACER Liquid E700 acer_e39 Android 4.3+ ACER Liquid Z630 acer_t03 Android 4.3+ ACER Liquid Z320 T012 Android 4.3+ ARCHOS 45 Helium 4G a45he Android 4.3+ ARCHOS 50 Helium 4G a50he Android 4.3+ ARCHOS Archos 45b Helium ac45bhe Android 4.3+ ARCHOS ARCHOS 50c Helium ac50che Android 4.3+ APPLE iPhone 4S iOS8+ APPLE iPhone 5 iOS8+ APPLE iPhone 5C iOS8+ APPLE iPhone 5S iOS8+ APPLE iPhone 6 iOS8+ APPLE iPhone 6 Plus iOS8+ APPLE iPhone 6S iOS8+ APPLE iPhone 6S Plus iOS8+ APPLE iPad Mini 1 iOS8+ APPLE iPad Mini 2 iOS8+ APPLE iPad Mini 3 iOS8+ APPLE iPad Mini 4 iOS8+ APPLE iPad 3 iOS8+ APPLE iPad 4 iOS8+ APPLE iPad Air iOS8+

Engage Customers in a New Way. Presenting the Galaxy Tab S5e

Engage customers in Key Features Greater engagement. a new way. Presenting Crisp. Clear. Captivating. A stunningly bright 10.5" state-of-the-art Super AMOLED display gives you room for dynamic, on-the-spot presentations. Samsung DeX means more doing. When the Tab S5e is connected to a the Galaxy Tab S5e. workstation via Samsung DeX, you can use Dual Mode to turn the Tab S5e into a second screen.1 Guide clients through a presentation on the Tab S5e while you push more detailed content to a larger screen. You Put enhanced productivity in the hands of your workforce. can also view multiple windows and screens at once on your Tab S5e. Cinematic surround sound. Bring presentations, videos and more to The Galaxy Tab S5e delivers a more versatile mobile experience life like never before. Dolby Atmos® and quad speakers tuned by AKG that gives you more freedom to work the way you want. surround you in extraordinary sound. Connect, type and share with ease; where, how and when it’s Seamless everywhere. best for you and your customers. And with security from the Samsung DeX gives you a powerful desktop-like experience. Make any place a work space. Attach the optional keyboard cover, and Samsung chip up and easy deployment and management, the Galaxy DeX transforms the Tab S5e into a powerful desktop-like experience.1 Tab S5e is truly enterprise-ready. Powerful, long-lasting battery. When your team has a long-lasting battery of up to 14.5 hours on a single charge, they’re always ready.2 The world’s thinnest and lightest tablet. -

HR Kompatibilitätsübersicht

HR-imotion Kompatibilität/Compatibility 2018 / 11 Gerätetyp Telefon 22410001 23010201 22110001 23010001 23010101 22010401 22010501 22010301 22010201 22110101 22010701 22011101 22010101 22210101 22210001 23510101 23010501 23010601 23010701 23510320 22610001 23510420 Smartphone Acer Liquid Zest Plus Smartphone AEG Voxtel M250 Smartphone Alcatel 1X Smartphone Alcatel 3 Smartphone Alcatel 3C Smartphone Alcatel 3V Smartphone Alcatel 3X Smartphone Alcatel 5 Smartphone Alcatel 5v Smartphone Alcatel 7 Smartphone Alcatel A3 Smartphone Alcatel A3 XL Smartphone Alcatel A5 LED Smartphone Alcatel Idol 4S Smartphone Alcatel U5 Smartphone Allview P8 Pro Smartphone Allview Soul X5 Pro Smartphone Allview V3 Viper Smartphone Allview X3 Soul Smartphone Allview X5 Soul Smartphone Apple iPhone Smartphone Apple iPhone 3G / 3GS Smartphone Apple iPhone 4 / 4S Smartphone Apple iPhone 5 / 5S Smartphone Apple iPhone 5C Smartphone Apple iPhone 6 / 6S Smartphone Apple iPhone 6 Plus / 6S Plus Smartphone Apple iPhone 7 Smartphone Apple iPhone 7 Plus Smartphone Apple iPhone 8 Smartphone Apple iPhone 8 Plus Smartphone Apple iPhone SE Smartphone Apple iPhone X Smartphone Apple iPhone XR Smartphone Apple iPhone Xs Smartphone Apple iPhone Xs Max Smartphone Archos 50 Saphir Smartphone Archos Diamond 2 Plus Smartphone Archos Saphir 50x Smartphone Asus ROG Phone Smartphone Asus ZenFone 3 Smartphone Asus ZenFone 3 Deluxe Smartphone Asus ZenFone 3 Zoom Smartphone Asus Zenfone 5 Lite ZC600KL Smartphone Asus Zenfone 5 ZE620KL Smartphone Asus Zenfone 5z ZS620KL Smartphone Asus -

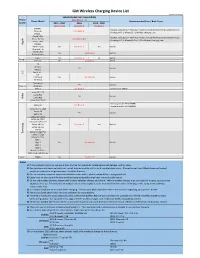

GM Wireless Charging Device List

GM Wireless Charging Device List Revision: Aug 30, 2019 Vehicle Model Year Compatibility Phone ( See Notes 1 - 7) Phone Model Recommended Case / Back Cover Brand 2015 - 2017 2018 2019 - 2020 (See Note 8) (See Note 8) (See Note 9) iPhone 6 ŸBEZALEL Latitude [Qi + PMA] Dual-Mode Universal Wireless Charging Receiver Case iPhone 6s See Note: A ŸAircharge MFi Qi iPhone 6S / 6 Wireless Charging Case iPhone 7 iPhone 6 Plus ŸBEZALEL Latitude [Qi + PMA] Dual-Mode Universal Wireless Charging Receiver Case e iPhone 6s Plus See Note: A & B l ŸAircharge MFi Qi iPhone 6S Plus / 6 Plus Wireless Charging Case p iPhone 7 Plus p A iPhone 8 iPhone X (10) No See Note: C Yes Built-in iPhone Xs / Xr iPhone 8 Plus No See Note: B Built-in iPhone Xs Max Pixel 3 No See Note: C Yes Built-in Google Pixel 3XL No See Note: B Built-in G6 Nexus 4 Yes Built-in Nexus 5 G Spectrum 2 L V30 V40 ThinQ No See Note: B Built-in G7 ThinQ Droid Maxx Yes Built-in Motorola Droid Mini Moto X See Note: A Incipio model: MT231 Lumia 830 / 930 a i Lumia 920 k Yes Built-in o Lumia 928 N Lumia 950 / 950 XL ŸSamsung model: EP-VG900BBU Galaxy S5 See Note: A ŸSamsung model: EP-CG900IBA Galaxy S6 / S6 Edge Galaxy S8 Yes Built-in Galaxy S9 Galaxy S10 / S10e Galaxy S6 Active Galaxy S7 / S7 Active g Galaxy S8 Plus No See Note: C Yes Built-in n u Galaxy S9 Plus s m S10 5G a Galaxy S6 Edge Plus S Galaxy S7 Edge Galaxy S10 Plus Note 5 Note 7 No See Note: B Built-in Note 8 Note 9 Note 10 / 10 Plus Note 10 5G / 10 Plus 5G Xiami MIX2S No See Note: B Built-in Notes: 1) If phone does not charge: remove it from charger for 3 seconds, rotate phone 180 degrees, and try again. -

Case 6:19-Cv-00474-ADA Document 46 Filed 05/05/20 Page 1 of 208

Case 6:19-cv-00474-ADA Document 46 Filed 05/05/20 Page 1 of 208 IN THE UNITED STATES DISTRICT COURT FOR THE WESTERN DISTRICT OF TEXAS WACO DIVISION GPU++, LLC, ) ) Plaintiff, ) ) v. ) ) Civil Action No. W-19-CV-00474-ADA QUALCOMM INCORPORATED; ) QUALCOMM TECHNOLOGIES, INC., ) JURY TRIAL DEMANDED SAMSUNG ELECTRONICS CO., LTD.; ) SAMSUNG ELECTRONICS AMERICA, INC.; ) U.S. Dist. Ct. Judge: Hon. Alan. D. Albright SAMSUNG SEMICONDUCTOR, INC.; ) SAMSUNG AUSTIN SEMICONDUCTOR, ) LLC, ) ) Defendants. ) FIRST AMENDED COMPLAINT Plaintiff GPU++, LLC (“Plaintiff”), by its attorneys, demands a trial by jury on all issues so triable and for its complaint against Qualcomm Incorporated and Qualcomm Technologies, Inc. (collectively, “Qualcomm”) and Samsung Electronics Co., Ltd., Samsung Electronics America, Inc., Samsung Semiconductor, Inc., and Samsung Austin Semiconductor, LLC (collectively, “Samsung”) (Qualcomm and Samsung collectively, “Defendants”), and alleges as follows: NATURE OF THE ACTION 1. This is a civil action for patent infringement under the patent laws of the United States, Title 35, United States Code, Section 271, et seq., involving United States Patent No. 8,988,441 (Exhibit A, “’441 patent”) and seeking damages and injunctive relief as provided in 35 U.S.C. §§ 281 and 283-285. THE PARTIES 2. Plaintiff is a California limited liability company with a principal place of business Case 6:19-cv-00474-ADA Document 46 Filed 05/05/20 Page 2 of 208 at 650-B Fremont Avenue #137, Los Altos, CA 94024. Plaintiff is the owner by assignment of the ʼ441 patent. 3. On information and belief, Qualcomm Incorporated is a corporation organized and existing under the laws of the State of Delaware, having a principal place of business at 5775 Morehouse Dr., San Diego, California, 92121. -

Device VOLTE SUNRISE VOWIFI SUNRISE Apple Iphone 11

Device VOLTE_SUNRISE VOWIFI_SUNRISE Apple iPhone 11 (A2221) Supported Supported Apple iPhone 11 Pro (A2215) Supported Supported Apple iPhone 11 Pro Max (A2218) Supported Supported Apple iPhone 5c (A1532) NotSupported Supported Apple iPhone 5S (A1457) NotSupported Supported Apple iPhone 6 (A1586) Supported Supported Apple iPhone 6 Plus (A1524) Supported Supported Apple iPhone 6S (A1688) Supported Supported Apple iPhone 6S Plus (A1687) Supported Supported Apple iPhone 7 (A1778) Supported Supported Apple iPhone 7 Plus (A1784) Supported Supported Apple iPhone 8 (A1905) Supported Supported Apple iPhone 8 Plus (A1897) Supported Supported Apple iPhone SE (A1723) Supported Supported Apple iPhone SE 2020 (A2296) Supported Supported Apple iPhone X (A1901) Supported Supported Apple iPhone XR (A2105) Supported Supported Apple iPhone XS (A2097) Supported Supported Apple iPhone XS Max (A2101) Supported Supported Apple Watch S3 (38mm) (A1889) Supported Supported Apple Watch S3 (42mm) (A1891) Supported Supported Apple Watch S4 (40mm) (A2007) Supported Supported Apple Watch S4 (44mm) (A2008) Supported Supported Apple Watch S5 (40mm) (A2156) Supported Supported Apple Watch S5 (44mm) (A2157) Supported Supported Caterpillar CAT B35 Supported Supported Huawei Honor 10 Supported Supported Huawei Honor 20 (YAL-L21) Supported Supported Huawei Honor 7x (BND-L21) Supported Supported Huawei Honor V10 Supported Supported Huawei Mate 10 Lite (RNE-L21) Supported Supported Huawei Mate 10 pro (BLA-L29) Supported Supported Huawei Mate 20 lite (SNE-LX1) Supported Supported -

Samsung Galaxy Tab S4 T837R4 User Manual

SAMSUNG Galaxy Tab S4 User manual Table of contents Features 1 S Pen 1 Camera 1 Night mode 1 Expandable storage 1 Security 1 Getting started 2 Front view 3 Back view 4 Assemble your device 5 Accessories 6 Start using your device 6 Use the Setup Wizard 6 Lock or unlock your device 7 Accounts 8 Transfer data from an old device 9 Navigation 10 Navigation bar 15 i USC_T837R4_EN_UM_TN_SCB_051519_FINAL Table of contents Customize your home screen 16 S Pen 22 Bixby 29 Digital wellbeing 30 Flexible security 31 Multi window 34 Enter text 35 Apps 39 Using apps 40 Uninstall or disable apps 40 Search for apps 40 Sort apps 40 Create and use folders 41 Samsung apps 42 Galaxy Essentials 42 Galaxy Store 42 PENUP 42 Samsung Flow 42 Samsung Notes 43 ii Table of contents SmartThings 45 Calculator 46 Calendar 47 Camera 49 Clock 53 Contacts 57 Email 62 Gallery 65 Internet 69 My Files 72 Google apps 74 Chrome 74 Drive 74 Gmail 74 Google 74 Hangouts 74 Maps 75 Photos 75 Play Movies & TV 75 iii Table of contents Play Music 75 Play Store 75 YouTube 75 Additional apps 76 OneDrive 76 Skype 76 Settings 77 Access Settings 78 Search for Settings 78 Connections 78 Wi-Fi 78 Bluetooth 80 Tablet visibility 81 Airplane mode 81 Mobile networks 82 Data usage 82 Mobile hotspot 84 Tethering 86 Nearby device scanning 86 iv Table of contents Connect to a printer 86 Download booster 87 Virtual Private Networks 87 Private DNS 88 Ethernet 88 Sounds and vibration 89 Sound mode 89 Vibrations 90 Notification sounds 90 Volume 90 System sounds and vibration 91 Dolby Atmos 92 Equalizer

List of Smartphones Compatible with Airkey System

List of smartphones compatible with AirKey system Android Unlocking Maintenance tasks Unlocking Maintenance tasks Android smartphone Model number Media updates via NFC version via NFC via NFC via Bluetooth via Bluetooth Asus Nexus 7 (Tablet) Nexus 7 5.1.1 ✔ ✔ ✔ – – Blackberry PRIV STV100-4 6.0.1 ✔ ✔ ✔ ✔ ✔ CAT S61 S61 9 ✔ ✔ ✔ ✔ ✔ Doro 8035 Doro 8035 7.1.2 – – – ✔ ✔ Doro 8040 Doro 8040 7.0 – – – ✔ ✔ Google Nexus 4 Nexus 4 5.1.1 ✔ X ✔ – – Google Nexus 5 Nexus 5 6.0.1 ✔ ✔ ✔ ✔ ✔ Google Pixel 2 Pixel 2 9 X X X ✔ ✔ Google Pixel 4 Pixel 4 10 ✔ ✔ ✔ ✔ ✔ HTC One HTC One 5.0.2 ✔ ✔ X – – HTC One M8 HTC One M8 6.0 ✔ ✔ X ✔ ✔ HTC One M9 HTC One M9 7.0 ✔ ✔ ✔ ✔ ✔ HTC 10 HTC 10 8.0.0 ✔ X X ✔ ✔ HTC U11 HTC U11 8.0.0 ✔ ✔ ✔ ✔ ✔ HTC U12+ HTC U12+ 8.0.0 ✔ ✔ ✔ ✔ ✔ HUAWEI Mate 9 MHA-L09 7.0 ✔ ✔ ✔ ✔ ✔ HUAWEI Nexus 6P Nexus 6P 8.1.0 ✔ ✔ ✔ ✔ ✔ HUAWEI P8 lite ALE-L21 5.0.1 ✔ ✔ ✔ – – HUAWEI P9 EVA-L09 7.0 ✔ ✔ ✔ ✔ ✔ HUAWEI P9 lite VNS-L21 7.0 ✔ ✔ ✔ ✔ ✔ HUAWEI P10 VTR-L09 7.0 ✔ ✔ ✔ ✔ ✔ HUAWEI P10 lite WAS-LX1 7.0 ✔ ✔ ✔ ✔ ✔ HUAWEI P20 EML-L29 8.1.0 ✔ ✔ ✔ ✔ ✔ HUAWEI P20 lite ANE-LX1 8.0.0 ✔ X ✔ ✔ ✔ HUAWEI P20 Pro CLT-L29 8.1.0 ✔ ✔ ✔ ✔ ✔ HUAWEI P30 ELE-L29 10 ✔ ✔ ✔ ✔ ✔ HUAWEI P30 lite MAR-LX1A 10 ✔ ✔ ✔ ✔ ✔ HUAWEI P30 Pro VOG-L29 10 ✔ ✔ ✔ ✔ ✔ LG G2 Mini LG-D620r 5.0.2 ✔ ✔ ✔ – – LG G3 LG-D855 5.0 ✔ X ✔ – – LG G4 LG-H815 6.0 ✔ ✔ ✔ ✔ ✔ LG G6 LG-H870 8.0.0 ✔ X ✔ ✔ ✔ LG G7 ThinQ LM-G710EM 8.0.0 ✔ X ✔ ✔ ✔ LG Nexus 5X Nexus 5X 8.1.0 ✔ ✔ ✔ ✔ X Motorola Moto X Moto X 5.1 ✔ ✔ ✔ – – Motorola Nexus 6 Nexus 6 7.0 ✔ X ✔ ✔ ✔ Nokia 7.1 TA-1095 8.1.0 ✔ ✔ X ✔ ✔ Nokia 7.2 TA-1196 10 ✔ ✔ ✔ -

Nexus-4 User Manual

NeXus-4 User Manual Version 2.0 0344 Table of contents 1 Service and support 3 1.1 About this manual 3 1.2 Warranty information 3 2 Safety information 4 2.1 Explanation of markings 4 2.2 Limitations of use 5 2.3 Safety measures and warnings 5 2.4 Precautionary measures 7 2.5 Disclosure of residual risk 7 2.6 Information for lay operators 7 3 Product overview 8 3.1 Product components 8 3.2 Intended use 8 3.3 NeXus-4 views 9 3.4 User interface 9 3.5 Patient connections 10 3.6 Device label 10 4 Instructions for use 11 4.1 Software 11 4.2 Powering the NeXus-4 12 4.3 Transfer data to PC 12 4.4 Perform measurement 13 4.5 Mobility 13 5 Operational principles 14 5.1 Bipolar input channels 14 5.2 Auxiliary input channel 14 5.3 Filtering 14 6 Maintenance 15 7 Electromagnetic guidance 16 8 Technical specifications 19 2 1 Service and support 1.1 About this manual This manual is intended for the user of the NeXus-4 system – referred to as ‘product’ throughout this manual. It contains general operating instructions, precautionary measures, maintenance instructions and information for use of the product. Read this manual carefully and familiarize yourself with the various controls and accessories before starting to use the product. This product is exclusively manufactured by Twente Medical Systems International B.V. for Mind Media B.V. Distribution and service is exclusively performed by or through Mind Media B.V. -

Vívosmart® Google Google Nexus 4 Google Nexus 5X (H791) Google Nexus 6P (H1512) Google Nexus 5 Google Nexus 6 Google Nexus

vívosmart® Google Google Nexus 4 Google Nexus 5X (H791) Google Nexus 6P (H1512) Google Nexus 5 Google Nexus 6 Google Nexus 7 HTC HTC One (M7) HTC One (M9) HTC Butterfly S HTC One (M8) HTC One (M10) LG LG Flex LG V20 LG G4 H815 LG G3 LG E988 Gpro LG G5 H860 LG V10 H962 LG G3 Titan Motorola Motorola RAZR M Motorola DROID Turbo Motorola Moto G (2st Gen) Motorola Droid MAXX Motorola Moto G (1st Gen) Motorola Moto X (1st Gen) Samsung Samsung Galaxy S6 edge + Samsung Galaxy Note 2 Samsung Galaxy S4 Active (SM-G9287) Samsung Galaxy S7 edge (SM- Samsung Galaxy Note 3 Samsung Galaxy S4 Mini G935FD) Samsung Galaxy Note 4 Samsung Galaxy S5 Samsung GALAXY J Samsung Galaxy Note 5 (SM- N9208) Samsung Galaxy S5 Active Samsung Galaxy A5 Duos Samsung Galaxy A9 (SM- Samsung Galaxy S3 Samsung Galaxy S5 Mini A9000) Samsung Galaxy S4 Samsung Galaxy S6 Sony Sony Ericsson Xperia Z Sony Xperia Z2 Sony Xperia X Sony Ericsson Xperia Z Ultra Sony Xperia Z3 Sony XPERIA Z5 Sony Ericsson Xperia Z1 Sony Xperia Z3 Compact Asus ASUS Zenfone 2 ASUS Zenfone 5 ASUS Zenfone 6 Huawei HUAWEI P8 HUAWEI CRR_L09 HUAWEI P9 Oppo OPPO X9076 OPPO X9009 Xiaomi XIAOMI 2S XIAOMI 3 XIAOMI 5 XIAOMI Note One Plus OnePlus 3 (A3000) vívosmart® 3 Google Google Nexus 5X (H791) Google Nexus 6P (H1512) Google Pixel HTC HTC One (M9) HTC One (M10) HTC U11 HTC One (A9) HTC U Ultra LG LG V10 H962 LG G4 H815 LG G6 H870 LG V20 LG G5 H860 Motorola Motorola Moto Z Samsung Samsung Galaxy Note 3 Samsung Galaxy S6 Samsung Galaxy J5 Samsung Galaxy S6 edge + Samsung Galaxy Note 4 (SM-G9287) Samsung Galaxy -

Phone's Model, the Type of Wall Charger

Before you start: For Anker 5W Wireless Chargers This document will help you identify problems and get the most out of your experience with our wireless chargers. It is important to note that although wireless chargers are a transformatively convenient way to charge, they are not yet capable of the charging speeds provided by traditional wired chargers. Attachments (Must-Read!) If you're experiencing interrupted or slow charging, check the following: The back of your phone should not have any metal, pop sockets, or credit cards. Put the phone in the center of the charging surface (this lets the charging coil in your phone line up with the one inside the wireless charger). The wireless charging coil on Sony phones is located slightly below center. Phone cases should be no thicker than 5 mm for effective charging. Choose the correct adapter for your wireless charger: 5V/2A or above adapter for Standard Charge Mode. Not compatible with 5V/1A adapters. Phone Brands & Power Input For Anker 5W Wireless Chargers Apple iPhone X iPhone XS iPhone XS Max iPhone 8 / 8 Plus iPhone XR This wireless charger provides a standard 5W charge (Standard Charge Mode) to all wirelessly-charged devices. Use with wall chargers, desktop chargers, car chargers, and power strips with an output of 5V/2A or above. Not compatible with the iPhone stock charger (5V/1A). Phone Brands & Power Input For Anker 5W Wireless Chargers Samsung Galaxy S9/S9+ Galaxy S10/S10+/S10e Galaxy S8+ / S8+ Galaxy Note 9 Galaxy S7 / S7 edge / S7 Active Galaxy S6 edge This wireless charger provides a standard 5W charge (Standard Charge Mode) to all wirelessly-charged devices.