Cryptanalysis of the Sidelnikov Cryptosystem

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications

-

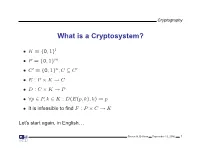

What Is a Cryptosystem?

Cryptography: What is a Cryptosystem? (Steven M. Bellovin, September 13, 2006)

What is a Cryptosystem?
• $K = \{0, 1\}^l$
• $P = \{0, 1\}^m$
• $C' = \{0, 1\}^n$, $C \subseteq C'$
• $E : P \times K \to C$
• $D : C \times K \to P$
• $\forall p \in P, k \in K : D(E(p, k), k) = p$
• It is infeasible to find $F : P \times C \to K$
Let’s start again, in English.

What is a Cryptosystem?
A cryptosystem is a pair of algorithms that take a key and convert plaintext to ciphertext and back. Plaintext is what you want to protect; ciphertext should appear to be random gibberish. The design and analysis of today’s cryptographic algorithms is highly mathematical. Do not try to design your own algorithms.

A Tiny Bit of History
• Encryption goes back thousands of years.
• Classical ciphers encrypted letters (and perhaps digits), and yielded all sorts of bizarre outputs.
• The advent of military telegraphy led to ciphers that produced only letters.

Codes vs. Ciphers
• Ciphers operate syntactically, on letters or groups of letters: A → D, B → E, etc.
• Codes operate semantically, on words, phrases, or sentences, per this 1910 codebook.

A 1910 Commercial Codebook

Commercial Telegraph Codes
• Most were aimed at economy.
• Secrecy from casual snoopers was a useful side-effect, but not the primary motivation.
• That said, a few such codes were intended for secrecy; I have some in my collection, including one intended for union use.

A Covert Encryption Method for Applications in Electronic Data Interchange

Technological University Dublin, ARROW@TU Dublin. Articles, School of Electrical and Electronic Engineering, 2009-01-01.

A Covert Encryption Method for Applications in Electronic Data Interchange
Jonathan Blackledge, Technological University Dublin; Dmitry Dubovitskiy, Oxford Recognition Limited

Follow this and additional works at: https://arrow.tudublin.ie/engscheleart2
Part of the Digital Communications and Networking Commons, Numerical Analysis and Computation Commons, and the Software Engineering Commons.

Recommended Citation: Blackledge, J., Dubovitskiy, D.: A Covert Encryption Method for Applications in Electronic Data Interchange. ISAST Journal on Electronics and Signal Processing, vol. 4, issue 1, pages 107-128, 2009. doi:10.21427/D7RS67

This Article is brought to you for free and open access by the School of Electrical and Electronic Engineering at ARROW@TU Dublin. It has been accepted for inclusion in Articles by an authorized administrator of ARROW@TU Dublin. This work is licensed under a Creative Commons Attribution-Noncommercial-Share Alike 4.0 License.

A Covert Encryption Method for Applications in Electronic Data Interchange
Jonathan M Blackledge, Fellow, IET, Fellow, BCS and Dmitry A Dubovitskiy, Member IET

Abstract: A principal weakness of all encryption systems is that the output data can be ‘seen’ to be encrypted. In other words, encrypted data provides a ‘flag’ on the potential value of the information that has been encrypted.

[Fragment from the adjacent column:] to make sure that the ciphertext is relatively strong (but not too strong!) and that the information extracted is of good quality in terms of providing the attacker with ‘intelligence’

A New Cryptosystem for Ciphers Using Transposition Techniques

Published by: International Journal of Engineering Research & Technology (IJERT), http://www.ijert.org, ISSN: 2278-0181, Vol. 8 Issue 04, April-2019.

A New Cryptosystem for Ciphers using Transposition Techniques
U. Thirupalu, Research Scholar, Dept. of Computer Science, S V U CM&CS, Tirupati, India 517502
Dr. E. Kesavulu Reddy, FCSRC (USA), Assistant Professor, Dept. of Computer Science, S V U CM&CS, Tirupati, India 517502

Abstract: Data encryption techniques are used to prevent unauthorized access to the original content of data communicated between two parties; the data can be recovered only by using a key, in what is known as the decryption process. The objective of encryption is to secure data against unauthorized inspection or alteration. Encryption can be implemented by using some substitution technique, shifting technique, or mathematical operations. By applying these techniques we can generate a different form of the data which is difficult for any person to understand. The original data is referred to as the plaintext and the encrypted data as the cipher text. Several symmetric key based algorithms have been developed in the past year.

[Fragment from the adjacent column:] performs the decryption. Cryptographic algorithms are broadly classified as symmetric key cryptography and asymmetric key cryptography.

A. Symmetric Cryptography
In symmetric key encryption, the same key is used for both the encryption and decryption process. Symmetric algorithms are advantageous because they consume little computing power and encrypt at high speed. Symmetric key encryption takes place in two modes, either as block ciphers or as stream ciphers. In the block cipher mode, the whole data is divided into

Caesar's Cipher Is a Monoalphabetic

Mathematical summer in Paris - Cryptography

Exercise 1 (Caesar’s cipher ⋆)
Caesar’s cipher is a monoalphabetic encryption method where all letters in the alphabet are shifted by a certain constant d. For example, with d = 3, we obtain A → D, B → E, ..., Y → B, Z → C.
1. Translate the encryption function f in algebraic terms. What is the decryption function g?
2. What is the key in Caesar’s cipher? How many keys are possible?
3. Decrypt the ciphertext LIPPSASVPH.

Exercise 2 (Affine encryption ⋆)
We now want to study a more general kind of encryption method, called affine encryption. We represent each letter in the Latin alphabet by its position in the alphabet, starting with 0, i.e. A → 0, B → 1, C → 2, ..., Z → 25, and we consider the map $f : x \mapsto ax + b \bmod 26$, where a and b are fixed integers between 0 and 25. We want to use the map f to encrypt each letter in the plaintext.
1. What happens if we choose a = 1?
We now choose a = 10 and b = 3.
2. Let $0 \le y \le 25$ such that $y \overset{\text{def}}{=} f(x) = 10x + 3 \bmod 26$. Prove that there exists $k \in \mathbb{Z}$ such that $y = 10x + 26k + 3$.
3. Prove that y is always odd.
4. What can we say about this encryption method?
We now choose a = 9 and b = 4.
5. Find an integer $u \in \mathbb{Z}$ such that $9u = 1 \bmod 26$.
6. Explain why the equation $y = 9x + 4 \bmod 26$, with $0 \le y \le 25$, always has a solution.
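The affine map in Exercise 2 can be explored with a minimal Python sketch. The function names `affine_encrypt` and `affine_decrypt` are hypothetical, chosen for illustration, and the three-argument `pow` with exponent -1 assumes Python 3.8 or later. The sketch shows why decryption requires gcd(a, 26) = 1: without it, a has no inverse modulo 26.

```python
from math import gcd

M = 26  # size of the Latin alphabet

def affine_encrypt(text, a, b):
    """Encrypt letters with f(x) = a*x + b mod 26, where A -> 0, ..., Z -> 25."""
    out = []
    for ch in text.upper():
        if "A" <= ch <= "Z":
            x = ord(ch) - ord("A")
            out.append(chr((a * x + b) % M + ord("A")))
    return "".join(out)

def affine_decrypt(text, a, b):
    """Invert f; this only works when a is invertible modulo 26."""
    if gcd(a, M) != 1:
        raise ValueError("a must be coprime to 26 for decryption to be possible")
    a_inv = pow(a, -1, M)  # modular inverse (Python 3.8+)
    out = []
    for ch in text.upper():
        if "A" <= ch <= "Z":
            y = ord(ch) - ord("A")
            out.append(chr((a_inv * (y - b)) % M + ord("A")))
    return "".join(out)
```

With a = 1 the map reduces to the Caesar shift by b. With a = 10 the call to `affine_decrypt` raises `ValueError`, which is the computational counterpart of questions 2 to 4.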

The Mathematics of Secrets.pdf

THE MATHEMATICS OF SECRETS: CRYPTOGRAPHY FROM CAESAR CIPHERS TO DIGITAL ENCRYPTION
JOSHUA HOLDEN
PRINCETON UNIVERSITY PRESS, PRINCETON AND OXFORD

Copyright © 2017 by Princeton University Press. Published by Princeton University Press, 41 William Street, Princeton, New Jersey 08540. In the United Kingdom: Princeton University Press, 6 Oxford Street, Woodstock, Oxfordshire OX20 1TR. press.princeton.edu
Jacket image courtesy of Shutterstock; design by Lorraine Betz Doneker. All Rights Reserved.

Library of Congress Cataloging-in-Publication Data
Names: Holden, Joshua, 1970– author.
Title: The mathematics of secrets : cryptography from Caesar ciphers to digital encryption / Joshua Holden.
Description: Princeton : Princeton University Press, [2017] | Includes bibliographical references and index.
Identifiers: LCCN 2016014840 | ISBN 9780691141756 (hardcover : alk. paper)
Subjects: LCSH: Cryptography--Mathematics. | Ciphers. | Computer security.
Classification: LCC Z103 .H664 2017 | DDC 005.8/2--dc23
LC record available at https://lccn.loc.gov/2016014840
British Library Cataloging-in-Publication Data is available.

This book has been composed in Linux Libertine. Printed on acid-free paper. Printed in the United States of America. 1 3 5 7 9 10 8 6 4 2

To Lana and Richard for their love and support

CONTENTS
Preface
Acknowledgments
Introduction to Ciphers and Substitution
1.1 Alice and Bob and Carl and Julius: Terminology and Caesar Cipher
1.2 The Key to the Matter: Generalizing the Caesar Cipher
1.3 Multiplicative Ciphers

A Secure Variant of the Hill Cipher

A Secure Variant of the Hill Cipher
Mohsen Toorani, Abolfazl Falahati

Abstract
The Hill cipher is a classical symmetric encryption algorithm that succumbs to the known-plaintext attack. Although its vulnerability to cryptanalysis has rendered it unusable in practice, it still serves an important pedagogical role in cryptology and linear algebra. In this paper, a variant of the Hill cipher is introduced that makes the Hill cipher secure while it retains the efficiency. The proposed scheme includes a ciphering core for which a cryptographic protocol is introduced.

1. Introduction
The Hill cipher was invented by L.S. Hill in 1929 [1]. It is a famous polygram and a classical symmetric cipher based on matrix transformation but it succumbs to the known-plaintext attack [2]. Although its vulnerability to cryptanalysis has rendered it unusable in practice, it still serves an important pedagogical role in both cryptology

[Fragment from the adjacent column:] the corresponding key of each block but it has several security problems [7]. In this paper, a secure cryptosystem is introduced that overcomes all the security drawbacks of the Hill cipher. The proposed scheme includes an encryption algorithm that is a variant of the Affine Hill cipher for which a secure cryptographic protocol is introduced. The encryption core of the proposed scheme has the same structure as the Affine Hill cipher but its internal manipulations are different from the previously proposed cryptosystems. The rest of this paper is organized as follows. Section 2 briefly introduces the Hill cipher. Our proposed scheme is introduced and its computational costs are evaluated in Section 3, and Section 4 concludes the paper.

2. The Hill Cipher
In the Hill cipher, the ciphertext is obtained from the plaintext by means of a linear transformation.

A Hybrid Cryptosystem Based on Vigenère Cipher and Columnar Transposition Cipher

International Journal of Advanced Technology & Engineering Research (IJATER), www.ijater.com

A HYBRID CRYPTOSYSTEM BASED ON VIGENÈRE CIPHER AND COLUMNAR TRANSPOSITION CIPHER
Quist-Aphetsi Kester, MIEEE, Lecturer, Faculty of Informatics, Ghana Technology University College, PMB 100 Accra North, Ghana. Phone Contact +233 209822141

Abstract
Privacy is one of the key issues addressed by information security. Through cryptographic encryption methods, one can prevent a third party from understanding raw data transmitted over an unsecured channel during signal transmission. The cryptographic methods for enhancing the security of digital contents have gained high significance in the current era. Breach of security and misuse of confidential information that has been intercepted by unauthorized parties are key problems that information security tries to solve. This paper sets out to contribute to the general body of knowledge in the area of classical cryptography by developing a new hybrid way of encryption of plaintext.

[Fragment from the adjacent column:] graphy that use the same cryptographic keys for both encryption of plaintext and decryption of cipher text. The keys may be identical or there may be a simple transformation to go between the two keys. The keys, in practice, represent a shared secret between two or more parties that can be used to maintain a private information link [5]. This requirement that both parties have access to the secret key is one of the main drawbacks of symmetric key encryption, in comparison to public-key encryption. Typical examples of symmetric algorithms are Advanced Encryption Standard (AES), Blowfish, Triple Data Encryption Standard (3DES) and Serpent [6]. Asymmetric or public key encryption, on the other hand, is an encryption method where a message encrypted with a reci-

Cryptography Using Steganography: New Algorithms and Applications

Technological University Dublin, ARROW@TU Dublin. Articles, School of Electrical and Electronic Engineering, 2011.

Cryptography Using Steganography: New Algorithms and Applications
Jonathan Blackledge, Technological University Dublin

Follow this and additional works at: https://arrow.tudublin.ie/engscheleart2
Part of the Dynamic Systems Commons, and the Theory and Algorithms Commons.

Recommended Citation: Blackledge, J. (2011) Cryptography and Steganography: New Algorithms and Applications. Lecture Notes, Centre for Advanced Studies, Warsaw University of Technology, Warsaw, 2011. doi:10.21427/D7ZS63

This Article is brought to you for free and open access by the School of Electrical and Electronic Engineering at ARROW@TU Dublin. It has been accepted for inclusion in Articles by an authorized administrator of ARROW@TU Dublin. This work is licensed under a Creative Commons Attribution-Noncommercial-Share Alike 3.0 License.

Cryptography and Steganography: New Algorithms and Applications
Prof. Dr. Jonathan Blackledge
Science Foundation Ireland Stokes Professor, School of Electrical Engineering Systems, Dublin Institute of Technology
Distinguished Professor, Centre for Advanced Studies, Warsaw University of Technology
http://eleceng.dit.ie/blackledge
http://jmblackledge.web.officelive.com

Editor: Stanislaw Janeczko
Technical editors: Malgorzata Zielinska, Anna Zubrowska
General layout and cover design: Emilia Bojanczyk
© Centre for Advanced Studies, Warsaw University of Technology, Warsaw 2011
For additional information on this series, visit the CAS Publications Website at http://www.csz.pw.edu.pl/index.php/en/publications
ISBN: 978-83-61993-01-83
Printed in Poland

Preface
Developing methods for ensuring the secure exchange of information is one of the oldest occupations in history.

Shift Cipher Substitution Cipher Vigenère Cipher Hill Cipher

Lecture 2: Classical Cryptosystems
Shift cipher, Substitution cipher, Vigenère cipher, Hill cipher

Shift Cipher
• A substitution cipher
• The key space: [0 … 25]
• Encryption given a key K: each letter in the plaintext P is replaced with the K’th letter following the corresponding number (shift right)
• Decryption given K: shift left
• History: K = 3, Caesar’s cipher

Shift Cipher
• Formally: let $P = C = K = \mathbb{Z}_{26}$. For $0 \le K \le 25$, $e_K(x) = x + K \bmod 26$ and $d_K(y) = y - K \bmod 26$ $(x, y \in \mathbb{Z}_{26})$.

Shift Cipher: An Example
A B C D E F G H I J K L M N O P Q R S T U V W X Y Z
0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25
• P = CRYPTOGRAPHYISFUN (note that punctuation is often eliminated)
• K = 11
• C = NCJAEZRCLASJTDQFY
• C → 2; 2 + 11 mod 26 = 13 → N
• R → 17; 17 + 11 mod 26 = 2 → C
• …
• N → 13; 13 + 11 mod 26 = 24 → Y

Shift Cipher: Cryptanalysis
• Can an attacker find K? YES: by exhaustive search, since the key space is small (at most 26 possible keys).
• Once K is found, it is very easy to decrypt.
Exercise 1: decrypt the following ciphertext: hphtwwxppelextoytrse
Exercise 2: decrypt the following ciphertext: jbcrclqrwcrvnbjenbwrwn
VERY useful MATLAB functions can be found here: http://www2.math.umd.edu/~lcw/MatlabCode/

General Mono-alphabetical Substitution Cipher
• The key space: all possible permutations of Σ = {A, B, C, …, Z}
• Encryption, given a key (permutation) π: each letter X in the plaintext P is replaced with π(X)
• Decryption, given a key π: each letter Y in the ciphertext C is replaced with $\pi^{-1}(Y)$
• Example:
  A B C D E F G H I J K L M N O P Q R S T U V W X Y Z
  π: B A D C Z H W Y G O Q X S V T R N M S K J I P E F U
• BECAUSE → AZDBJSZ

Strength of the General Substitution Cipher
• Exhaustive search is now infeasible: the key space size is $26! \approx 4 \times 10^{26}$
• Dominates the art of secret writing throughout the first millennium A.D.
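The shift cipher and its exhaustive-search attack can be sketched in a few lines of Python. This is an illustrative sketch, not the MATLAB code referenced in the slides; the names `shift_encrypt`, `shift_decrypt` and `brute_force` are hypothetical.

```python
A = ord("A")

def shift_encrypt(text, k):
    """e_K(x) = x + K mod 26, applied letter by letter (non-letters are dropped)."""
    return "".join(
        chr((ord(ch) - A + k) % 26 + A)
        for ch in text.upper()
        if "A" <= ch <= "Z"
    )

def shift_decrypt(text, k):
    """d_K(y) = y - K mod 26, which is the same as encrypting with -K."""
    return shift_encrypt(text, -k)

def brute_force(ciphertext):
    """Return all 26 candidate plaintexts, indexed by the key K that was tried."""
    return [shift_decrypt(ciphertext, k) for k in range(26)]
```

Because the key space has only 26 elements, an attacker can scan the output of `brute_force` by eye (or score candidates with letter frequencies) and pick the one that reads as English.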

The Mathematics of the RSA Public-Key Cryptosystem Burt Kaliski RSA Laboratories

The Mathematics of the RSA Public-Key Cryptosystem
Burt Kaliski, RSA Laboratories

ABOUT THE AUTHOR: Dr Burt Kaliski is a computer scientist whose involvement with the security industry has been through the company that Ronald Rivest, Adi Shamir and Leonard Adleman started in 1982 to commercialize the RSA encryption algorithm that they had invented. At the time, Kaliski had just started his undergraduate degree at MIT. Professor Rivest was the advisor of his bachelor’s, master’s and doctoral theses, all of which were about cryptography. When Kaliski finished his graduate work, Rivest asked him to consider joining the company, then called RSA Data Security. In 1989, he became employed as the company’s first full-time scientist. He is currently chief scientist at RSA Laboratories and vice president of research for RSA Security.

Introduction
Number theory may be one of the “purest” branches of mathematics, but it has turned out to be one of the most useful when it comes to computer security. For instance, number theory helps to protect sensitive data such as credit card numbers when you shop online. This is the result of some remarkable mathematics research from the 1970s that is now being applied worldwide.

Sensitive data exchanged between a user and a Web site needs to be encrypted to prevent it from being disclosed to or modified by unauthorized parties. The encryption must be done in such a way that decryption is only possible with knowledge of a secret decryption key. The decryption key should only be known by authorized parties. In traditional cryptography, such as was available prior to the 1970s, the encryption and decryption operations are performed with the same key.

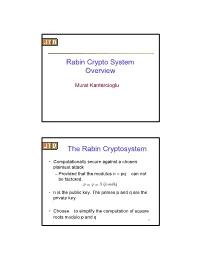

Rabin Crypto System Overview the Rabin Cryptosystem

Rabin Crypto System Overview
Murat Kantarcioglu

The Rabin Cryptosystem
• Computationally secure against a chosen plaintext attack, provided that the modulus $n = pq$ cannot be factored.
• $n$ is the public key. The primes $p$ and $q$ are the private key.
• Choose $p \equiv q \equiv 3 \pmod 4$ to simplify the computation of square roots modulo $p$ and $q$.

The Rabin Cryptosystem
• B encrypts a message m and sends the ciphertext c to A.
• Encryption:
  – Obtain A’s public key n.
  – Represent the message as an integer m in the range {0, 1, ..., n-1}.
  – Compute $c = m^2 \bmod n$.
  – Send the ciphertext c to A.

The Rabin Cryptosystem
• A decrypts the ciphertext c as follows.
• Decryption:
  – Compute $\sqrt{c} \bmod n$.
  – There are four square roots $m_1, m_2, m_3, m_4$ of c modulo n.
  – The message m is equal to one of these four messages.

The Rabin Cryptosystem
• When $p \equiv 3 \pmod 4$ there is a simple formula to compute the square root of c in mod p:
  $(\pm c^{(p+1)/4})^2 \equiv c^{(p+1)/2} \equiv c^{(p-1)/2} \cdot c \equiv c \pmod p$
• Here we have made use of Euler’s criterion to claim that $c^{(p-1)/2} \equiv 1 \pmod p$.

The Rabin Cryptosystem
• Hence the two square roots of c mod p are $\pm c^{(p+1)/4} \bmod p$.
• In a similar fashion, the two square roots of c mod q are $\pm c^{(q+1)/4} \bmod q$.
• Then we can obtain the four square roots of c mod n using the Chinese Remainder Theorem.

The Rabin Cryptosystem
• Example:
  – Suppose $n = 77 = 7 \cdot 11$.
  – Then for message m the ciphertext c is computed as $c = m^2 \bmod 77$.
  – And for decryption we need to compute $\sqrt{c} \bmod 77$.
  – Suppose Alice wants to send message m = 10. Then $c = 10^2 \bmod 77 = 23$.

The Rabin Cryptosystem
• To find the square roots of 23 in mod 7 and in mod 11 we can use the formula since 7 and 11 are congruent to 3 mod 4.
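The decryption steps can be sketched in Python for the toy parameters p = 7, q = 11 used in the example. This is a minimal illustration under stated assumptions, not code from the slides: the function names `rabin_encrypt` and `rabin_roots` are hypothetical, `pow(x, -1, m)` assumes Python 3.8 or later, and the parameters are far too small for real use.

```python
def rabin_encrypt(m, n):
    """c = m^2 mod n."""
    return (m * m) % n

def rabin_roots(c, p, q):
    """Return the square roots of c modulo n = p*q, for primes p, q = 3 (mod 4)."""
    if p % 4 != 3 or q % 4 != 3:
        raise ValueError("this shortcut needs p and q congruent to 3 mod 4")
    n = p * q
    # One square root modulo each prime: c^((p+1)/4) mod p and c^((q+1)/4) mod q.
    rp = pow(c, (p + 1) // 4, p)
    rq = pow(c, (q + 1) // 4, q)
    # Chinese Remainder Theorem coefficients.
    q_inv_mod_p = pow(q, -1, p)
    p_inv_mod_q = pow(p, -1, q)
    roots = set()
    for sp in (rp, p - rp):          # +/- root modulo p
        for sq in (rq, q - rq):      # +/- root modulo q
            x = (sp * q * q_inv_mod_p + sq * p * p_inv_mod_q) % n
            roots.add(x)
    return sorted(roots)

c = rabin_encrypt(10, 77)
print(c)                      # 23
print(rabin_roots(c, 7, 11))  # [10, 32, 45, 67]
```

The original message 10 appears among the four roots; in practice the sender adds redundancy to the message so that the receiver can tell which root is the intended one.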

EE 418 Network Security and Cryptography Lecture #5 Outline: 1

EE 418 Network Security and Cryptography, Lecture #5, October 13, 2016
Polyalphabetic Classical Cryptosystems. Introduction to Cryptanalysis.
Lecture notes prepared by Professor Radha Poovendran. Tamara Bonaci, Department of Electrical Engineering, University of Washington, Seattle

Outline:
1. Review: The Vigenère Cipher
2. The Hill Cipher
3. The Permutation Cipher
4. Introduction to Cryptanalysis
5. Cryptanalysis of the Shift Cipher
6. Remarks on Letter Distribution of the English Language

1 Review: The Vigenère Cipher
Last time, we talked about the Vigenère cipher, and we said that it is the first polyalphabetic cryptosystem that we are encountering in this course. A polyalphabetic cryptosystem is a cryptosystem where multiple alphabetic characters are encrypted at a time. In other words, each plaintext element is equivalent to m alphabetic characters. The idea behind this cryptosystem is to use a vector of m keys, i.e., $K = (K_1, K_2, \ldots, K_m)$. We have $P = C = K = (\mathbb{Z}_{26})^m$, where $(\mathbb{Z}_{26})^m$ is the set of m-tuples over $\mathbb{Z}_{26}$:

$y = e_K(x_1, x_2, \ldots, x_m) = (x_1 + K_1, x_2 + K_2, \ldots, x_m + K_m) \bmod 26$ (1)
$d_K(y_1, y_2, \ldots, y_m) = (y_1 - K_1, y_2 - K_2, \ldots, y_m - K_m) \bmod 26$ (2)

Note: The difference between the Vigenère cipher and the shift, substitution, and affine ciphers is that in the Vigenère cipher each alphabetic character is not uniquely mapped to another alphabetic character.

Example: Let the plaintext be "vector", and let m = 4, K = (2, 4, 6, 7). From the correspondence table we have x = (21, 4, 2, 19, 14, 17), and the cipher is shown in Table 1.

Table 1:
PLAINTEXT: 21 4 2 19 14 17
KEY: 2 4 6 7 2 4
CIPHER: 23 8 8 0 16 21 (X I I A Q V)

To decrypt, we use the same keyword, but modulo subtraction is performed instead of modulo addition.
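Equations (1) and (2) translate directly into a short Python sketch. The function names `vigenere_encrypt` and `vigenere_decrypt` are hypothetical and the key is passed as a tuple of integers, as in the lecture's example; the key is repeated cyclically when the plaintext is longer than m.

```python
A = ord("A")

def vigenere_encrypt(text, key):
    """Add key[i mod m] to the i-th letter, modulo 26 (equation (1))."""
    letters = [ch for ch in text.upper() if "A" <= ch <= "Z"]
    return "".join(
        chr((ord(ch) - A + key[i % len(key)]) % 26 + A)
        for i, ch in enumerate(letters)
    )

def vigenere_decrypt(text, key):
    """Subtract the same key, modulo 26 (equation (2))."""
    return vigenere_encrypt(text, tuple(-k for k in key))

print(vigenere_encrypt("vector", (2, 4, 6, 7)))  # XIIAQV
print(vigenere_decrypt("XIIAQV", (2, 4, 6, 7)))  # VECTOR
```

The two I's in the ciphertext come from different plaintext letters (E and C), which illustrates the note that a letter is not uniquely mapped to another letter.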