A SHORT HISTORY of CIRCUITS and SYSTEMS Franco Maloberti and Anthony C

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Bob Pease, Analog Guru, Died 5 Years Ago

Bob Pease, analog guru, died 5 years ago. Bob Pease was a true standout, the gentleman genius. Notorious analog engineer Bob Pease died five years ago, on June 18, 2011. His passing was all the more tragic since he died driving home from a remembrance for fellow analog great Jim Williams. Although it was a Saturday, Bob had come to the service from his office at National Semiconductor, now Texas Instruments. My buddy has a saying, "Everyone wants to be somebody, no one wants to become somebody." Bob's being the most famous analog designer was the result of his hard work becoming a brilliant engineer, with a passion for helping others. Fran Hoffart, retired Linear Tech apps engineer and former colleague recalls, "Citing a need for educating fellow engineers in the design of bandgap references, Bob anointed himself 'The Czar of Bandgaps,' complete with a quasi-military suit with a sword and a necklace made from metal TO-3 packages." He would help any engineer with a problem, even [...] After coming to National Semi, Bob learned analog IC design, back in the days of hand-cut Rubylith masks. There was no Spice simulator back then, and Pease had deep ridicule for engineers that relied on computer simulations, instead of thinking through the problem and making some quick back-of-envelope calculations. He accepted that Spice was useful especially for inexperienced engineers, but was concerned that engineers were substituting computer smarts for real smarts.

Scientists on Currencies

“For making the world a wealthier place”

TOP 10 SCIENTISTS ON BANKNOTES
1. Isaac Newton
2. Charles Darwin
3. Michael Faraday
4. Copernicus
5. Galileo
6. Marie Curie
7. Leonardo da Vinci
8. Albert Einstein
9. Hideyo Noguchi
10. Nikola Tesla

Will Philip Emeagwali be on the Nigerian Money? “Put Philip Emeagwali on Nigeria’s Currency,” Central Bank of Nigeria official pleads.

Physicist Albert Einstein is honored on the Israeli five-pound note. Galileo Galilei is honored on the Italian 2000 lire note. Isaac Newton is honored on the British pound. Bacteriologist Hideyo Noguchi is honored on the Japanese banknote. The image of Carl Friedrich Gauss on Germany's 10-mark banknote inspired young Germans to become mathematicians. Leonardo da Vinci (1452 – 1519), the Italian polymath, is honored on the Italian banknote. Maria Sklodowska–Curie is honoured on the Polish banknote. Carl Linnaeus is honored on the Swedish banknote (100 Swedish krona). Nikola Tesla is honored on Serbia’s banknote. Note the actual equation on the banknote (Serbia’s 100-dinar note).

Honorable Mentions

Developing Story: Banker Wanted Emeagwali on Nigerian Currency

In the early 2000s, the Central Bank of Nigeria announced that new banknotes would be commissioned. An economist of the Central Bank of Nigeria commented: “In Europe, heroes of science are portrayed on banknotes. In Africa, former heads of state are portrayed on banknotes. Why is that so?” His logic was that the Central Bank of Nigeria should end its era of putting only Nigerian political leaders on the money. In Africa, only politicians were permitted by politicians to be on the money. Perhaps you’ve heard of the one-of-a-kind debate to put Philip Emeagwali on the Nigerian money.

Units in Electromagnetism (PDF)

Units in electromagnetism

Almost all textbooks on electricity and magnetism (including Griffiths’s book) use the same set of units: the so-called rationalized or Giorgi units. These have the advantage of common use. On the other hand there are all sorts of "$\epsilon_0$"s and "$\mu_0$"s to memorize. Could anyone think of a system that doesn't have all this junk to memorize? Yes, Carl Friedrich Gauss could. This problem describes the Gaussian system of units. [In working this problem, keep in mind the distinction between "dimensions" (like length, time, and charge) and "units" (like meters, seconds, and coulombs).]

a. In the Gaussian system, the measure of charge is $\tilde{q} = q/\sqrt{4\pi\epsilon_0}$. Write down Coulomb's law in the Gaussian system. Show that in this system, the dimensions of $\tilde{q}$ are $[\text{length}]^{3/2}[\text{mass}]^{1/2}[\text{time}]^{-1}$. There is no need, in this system, for a unit of charge like the coulomb, which is independent of the units of mass, length, and time.

b. The electric field in the Gaussian system is given by $\tilde{\vec{E}} = \vec{F}/\tilde{q}$. How is this measure of electric field ($\tilde{\vec{E}}$) related to the standard (Giorgi) field ($\vec{E}$)? What are the dimensions of $\tilde{\vec{E}}$?

c. The magnetic field in the Gaussian system is given by $\tilde{\vec{B}} = \sqrt{4\pi/\mu_0}\,\vec{B}$. What are the dimensions of $\tilde{\vec{B}}$ and how do they compare to the dimensions of $\tilde{\vec{E}}$?

d. In the Giorgi system, the Lorentz force law is $\vec{F} = q(\vec{E} + \vec{v} \times \vec{B})$. What is the Lorentz force law expressed in the Gaussian system? Recall that $c = 1/\sqrt{\epsilon_0 \mu_0}$.
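The charge rescaling described in this excerpt can be checked numerically. The sketch below is only an illustration, not part of the original problem set: the constants are CODATA 2018 SI values typed in by hand, and the function names (`gaussian_charge`, `to_statcoulomb`, and the two force helpers) are hypothetical. It rescales a charge by the square root of 4π times the vacuum permittivity, re-expresses the result in gram-centimetre-second units, and confirms that the inverse-square force computed from the rescaled charges matches the SI value.

```python
import math

# CODATA 2018 values in SI units (entered by hand; an assumption of this sketch).
EPSILON_0 = 8.8541878128e-12   # vacuum permittivity, F/m
MU_0 = 1.25663706212e-6        # vacuum permeability, N/A^2
C_LIGHT = 299_792_458.0        # speed of light, m/s (exact by definition)
E_CHARGE = 1.602176634e-19     # elementary charge, C (exact by definition)


def gaussian_charge(q_si: float) -> float:
    """Rescaled charge q / sqrt(4*pi*eps0), in kg^(1/2) m^(3/2) s^-1."""
    return q_si / math.sqrt(4.0 * math.pi * EPSILON_0)


def to_statcoulomb(q_tilde: float) -> float:
    """Re-express kg^(1/2) m^(3/2) s^-1 as g^(1/2) cm^(3/2) s^-1 (statcoulombs)."""
    return q_tilde * math.sqrt(1000.0) * 100.0 ** 1.5


def coulomb_force_si(q1: float, q2: float, r: float) -> float:
    """Coulomb force in newtons from SI charges (coulombs) and distance (metres)."""
    return q1 * q2 / (4.0 * math.pi * EPSILON_0 * r ** 2)


def coulomb_force_rescaled(q1_tilde: float, q2_tilde: float, r: float) -> float:
    """Same force from rescaled charges: no explicit constant is needed."""
    return q1_tilde * q2_tilde / r ** 2


if __name__ == "__main__":
    # The two vacuum constants together fix the speed of light.
    print("1/sqrt(eps0*mu0) =", 1.0 / math.sqrt(EPSILON_0 * MU_0), "m/s")

    e_tilde = gaussian_charge(E_CHARGE)
    print("elementary charge in statcoulombs:", to_statcoulomb(e_tilde))

    r = 1.0e-10  # one angstrom, in metres
    print("force (SI form):      ", coulomb_force_si(E_CHARGE, E_CHARGE, r))
    print("force (rescaled form):", coulomb_force_rescaled(e_tilde, e_tilde, r))
```

Because the rescaled charge has dimensions built only from mass, length, and time, the two force functions agree with no conversion factor as long as the distance is kept in metres.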

Analog Father.Indd

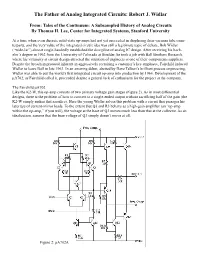

The Father of Analog Integrated Circuits: Robert J. Widlar. From: Tales of the Continuum: A Subsampled History of Analog Circuits. By Thomas H. Lee, Center for Integrated Systems, Stanford University. At a time when even discrete solid-state op-amps had not yet succeeded in displacing their vacuum tube counterparts, and the very value of the integrated circuit idea was still a legitimate topic of debate, Bob Widlar (“wide-lar”) almost single-handedly established the discipline of analog IC design. After receiving his bachelor’s degree in 1962 from the University of Colorado at Boulder, he took a job with Ball Brothers Research, where his virtuosity at circuit design attracted the attention of engineers at one of their components suppliers. Despite the breach in protocol inherent in aggressively recruiting a customer’s key employee, Fairchild induced Widlar to leave Ball in late 1963. In an amazing debut, abetted by Dave Talbert’s brilliant process engineering, Widlar was able to put the world’s first integrated circuit op-amp into production by 1964. Development of the μA702, as Fairchild called it, proceeded despite a general lack of enthusiasm for the project at the company. The Fairchild μA702: Like the K2-W, this op-amp consists of two primary voltage gain stages (Figure 2). As in most differential designs, there is the problem of how to convert to a single-ended output without sacrificing half of the gain (the K2-W simply makes that sacrifice). Here the young Widlar solves this problem with a circuit that presages his later use of current-mirror loads.

Alessandro Volta and the Discovery of the Battery

Primary Source 12.2 VOLTA AND THE DISCOVERY OF THE BATTERY. Alessandro Volta (1745–1827) was born in the Duchy of Milan in a town called Como. He was raised as a Catholic and remained so throughout his life. Volta became a professor of physics in Como, and soon took a significant interest in electricity. First, he began to work with the chemistry of gases, during which he discovered methane gas. He then studied electrical capacitance and derived new ways of studying both electrical potential and charge. Most famously, Volta invented what he termed a Voltaic pile, which was the first electrical battery that could continuously provide electrical current to a circuit. Needless to say, Volta’s discovery had a major impact on science and technology. In light of his contribution to the study of electrical capacitance and discovery of the battery, the electrical potential difference, voltage, and the unit of electric potential, the volt, were named in honor of him. The following passage is excerpted from an essay, written in French, “On the Electricity Excited by the Mere Contact of Conducting Substances of Different Kinds,” which Volta sent in 1800 to the President of the Royal Society in London, Joseph Banks, in hope of its publication. The essay described how to construct a battery, a source of steady electrical current, which paved the way toward the “electric age.” At this time, Volta was working as a professor at the University of Pavia. The chief of these results, and which comprehends nearly all the others, is the construction of an apparatus which resembles in its effects viz.

High Frequency Integrated MOS Filters C

N94-71118. 2nd NASA SERC Symposium on VLSI Design 1990, 5.4.1. High Frequency Integrated MOS Filters. C. Peterson, International Microelectronic Products, San Jose, CA 95134.

Abstract: Several techniques exist for implementing integrated MOS filters. These techniques fit into the general categories of sampled and tuned continuous-time filters. Advantages and limitations of each approach are discussed. This paper focuses primarily on the high frequency capabilities of MOS integrated filters.

1 Introduction

The use of MOS in the design of analog integrated circuits continues to grow. Competitive pressures drive system designers towards system-level integration. System-level integration typically requires interface to the analog world. Often, this type of integration consists predominantly of digital circuitry, thus the efficiency of the digital in cost and power is key. MOS remains the most efficient integrated circuit technology for mixed-signal integration. The scaling of MOS process feature sizes combined with innovation in circuit design techniques have also opened new high bandwidth opportunities for MOS mixed-signal circuits. There is a trend to replace complex analog signal processing with digital signal processing (DSP). The use of over-sampled converters minimizes the requirements for pre- and post filtering. In spite of this trend, there are many applications where DSP is impractical and where signal conditioning in the analog domain is necessary, particularly at high frequencies. Applications for high frequency filters are in data communications and the read channel of tape, disk and optical storage devices where filters bandlimit the data signal and perform amplitude and phase equalization. Other examples include filtering of radio and video signals.

Business Unit Newsflash

No. 2, 2017. PDC Center for High Performance Computing Business Unit Newsflash

Welcome to the PDC Business Newsflash! The newsflashes are issued in the PDC newsletters or via the PDC business email list in accordance with the frequency of PDC business events. Here you will find short articles about industrial collaborations with PDC and about business events relevant for high performance computing (HPC), along with overviews of important developments and trends in relation to HPC for small to medium-sized enterprises (SMEs) and large industries all around the world.

HPC for Industry R&D

PRACE Opens its Doors Further for Industry

In early 2017 PRACE opened up a new opportunity for business and industrial partners to apply for both HPC resources and PRACE expert-help through what are known as Type-D PRACE Preparatory Access (PA Type-D) applications. The objective of this was to allow PRACE users to optimise, scale and test codes on PRACE systems. Type-D offers users the chance to start optimization work on a PRACE Tier-1 system (that is, a national system) to eventually [...]

[...] from world-leading research conducted over the last decade by a team of researchers at the KTH Royal Institute of Technology in Stockholm. Their project, called “Automatic generation and optimization of meshes for industrial CFD”, started on the 1st of September 2017 and will continue for one year. Meanwhile other Swedish SMEs continue to be active within the PRACE SHAPE programme. For example, the Swedish SME Svenska Flygtekniska Institutet AB was successful with an application called “AdaptiveRotor”. The project is expected to start soon and will last six months.

Booster Rookie Book Typical Kicker Layout

BOOSTER
Table of Contents
Foreword
Introduction
Booster History and Design
Injection
Gradient Magnets and Power Supplies
Magnets
GMPS
RF
High Level
Low Level/Feedback
Notching and Cogging

Named Units of Measurement

Dr. John Andraos, http://www.careerchem.com/NAMED/Named-Units.pdf

NAMED UNITS OF MEASUREMENT
© Dr. John Andraos, 2000 - 2013
Department of Chemistry, York University, 4700 Keele Street, Toronto, ONTARIO M3J 1P3, CANADA
For suggestions, corrections, additional information, and comments please send e-mails to [email protected]
http://www.chem.yorku.ca/NAMED/

Atomic mass unit (u, Da)
John Dalton, 6 September 1766 - 27 July 1844. British, b. Eaglesfield, near Cockermouth, Cumberland, England.
Dalton (1/12th mass of C12 atom)
Dalton's atomic theory
Dalton, J., A New System of Chemical Philosophy, R. Bickerstaff: London, 1808 - 1827.
Biographical References:
Daintith, J.; Mitchell, S.; Tootill, E.; Gjersten, D., Biographical Encyclopedia of Scientists, Institute of Physics Publishing: Bristol, UK, 1994
Farber, Eduard (ed.), Great Chemists, Interscience Publishers: New York, 1961
Maurer, James F. (ed.), Concise Dictionary of Scientific Biography, Charles Scribner's Sons: New York, 1981
Abbott, David (ed.), The Biographical Dictionary of Scientists: Chemists, Peter Bedrick Books: New York, 1983
Partington, J.R., A History of Chemistry, Vol. III, Macmillan and Co., Ltd.: London, 1962, p. 755
Greenaway, F. Endeavour 1966, 25, 73
Proc. Roy. Soc. London 1844, 60, 528-530
Thackray, A. in Gillispie, Charles Coulston (ed.), Dictionary of Scientific Biography, Charles Scribner & Sons: New York, 1973, Vol. 3, 573
Clarification on symbols used: personal communication on April 26, 2013 from Prof. O. David Sparkman, Pacific Mass Spectrometry Facility, University of the Pacific, Stockton, CA.

Capacitance (Farads, F)
Michael Faraday, 22 September 1791 - 25 August 1867. British, b.

Microprocessors in the 1970's

Part II 1970's -- The Altair/Apple Era.

Figure 3.1: A graphical history of personal computers in the 1970’s, the MITS Altair and Apple Computer era.
Figure 3.2: Andrew S. Grove, Robert N. Noyce and Gordon E. Moore.
Figure 3.3: Marcian E. “Ted” Hoff.
Figure 3.4: The Intel MCS-4 (Micro Computer System 4) basic system.
Figure 3.5: A photomicrograph of the Intel 4004 microprocessor.
Photographs are courtesy of Intel Corporation.

Chapter 3 Microprocessors in the 1970's

The creation of the transistor in 1947 and the development of the integrated circuit in 1958/59 formed the technological basis for the microprocessor. Initially the technology only enabled a restricted number of components on a single chip. However, this changed significantly in the following years. The technology evolved from Small Scale Integration (SSI) in the early 1960's to Medium Scale Integration (MSI) with a few hundred components in the mid 1960's. By the late 1960's, Large Scale Integration (LSI) chips with thousands of components had appeared. This rapid increase in the number of components in an integrated circuit led to what became known as Moore’s Law. The concept of this law was described by Gordon Moore in an article entitled “Cramming More Components Onto Integrated Circuits” in the April 1965 issue of Electronics magazine [338].

The Equivalence of Digital and Analog Signal Processing *

Reprinted from INFORMATION AND CONTROL, Volume 8, No. 5, October 1965. Copyright © by Academic Press Inc. Printed in U.S.A. INFORMATION AND CONTROL 8, 455-467 (1965)

The Equivalence of Digital and Analog Signal Processing *
K. STEIGLITZ, Department of Electrical Engineering, Princeton University, Princeton, New Jersey

A specific isomorphism is constructed via the transform domains between the analog signal space $L^2(-\infty, \infty)$ and the digital signal space $l_2$. It is then shown that the class of linear time-invariant realizable filters is invariant under this isomorphism, thus demonstrating that the theories of processing signals with such filters are identical in the digital and analog cases. This means that optimization problems involving linear time-invariant realizable filters and quadratic cost functions are equivalent in the discrete-time and the continuous-time cases, for both deterministic and random signals. Finally, applications to the approximation problem for digital filters are discussed.

LIST OF SYMBOLS
$f(t)$, $g(t)$: continuous-time signals
$F(j\omega)$, $G(j\omega)$: Fourier transforms of continuous-time signals
$A$: continuous-time filters, bounded linear transformations of $L^2(-\infty, \infty)$
$\{f_n\}$, $\{g_n\}$: discrete-time signals
$F(z)$, $G(z)$: z-transforms of discrete-time signals
$A$: discrete-time filters, bounded linear transformations of $l_2$
$\mu$: isomorphic mapping from $L^2(-\infty, \infty)$ to $l_2$
$\mathfrak{L}^2(-\infty, \infty)$: space of Fourier transforms of functions in $L^2(-\infty, \infty)$
$\mathfrak{l}_2$: space of z-transforms of sequences in $l_2$
$\lambda_n(t)$: nth Laguerre function

* This work is part of a thesis submitted in partial fulfillment of requirements for the degree of Doctor of Engineering Science at New York University, and was supported partly by the National Science Foundation and partly by the Air Force Office of Scientific Research under Contract No.

Chapter 5 Capacitance and Dielectrics

Chapter 5 Capacitance and Dielectrics
5.1 Introduction
5.2 Calculation of Capacitance
    Example 5.1: Parallel-Plate Capacitor
    Interactive Simulation 5.1: Parallel-Plate Capacitor
    Example 5.2: Cylindrical Capacitor
    Example 5.3: Spherical Capacitor
5.3 Capacitors in Electric Circuits
    5.3.1 Parallel Connection
    5.3.2 Series Connection
    Example 5.4: Equivalent Capacitance
5.4 Storing Energy in a Capacitor
    5.4.1 Energy Density of the Electric Field
    Interactive Simulation 5.2: Charge Placed between Capacitor Plates
    Example 5.5: Electric Energy Density of Dry Air